Vivien Rolland

3D Gaussian Representations with Motion Trajectory Field for Dynamic Scene Reconstruction

Aug 10, 2025

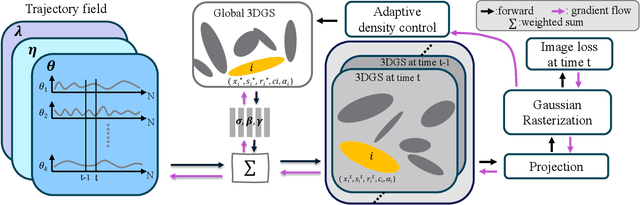

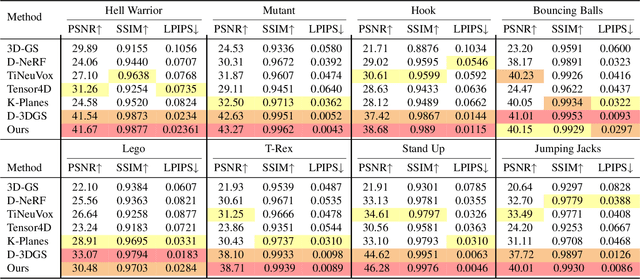

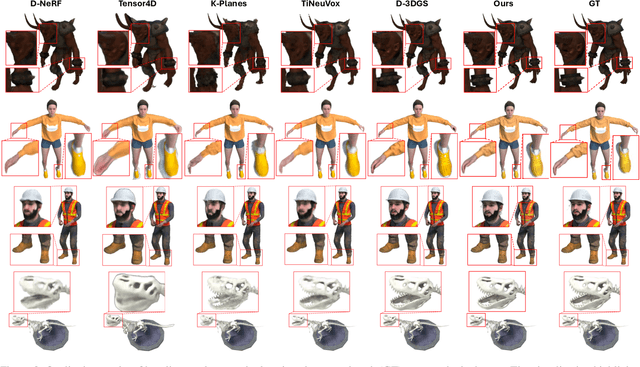

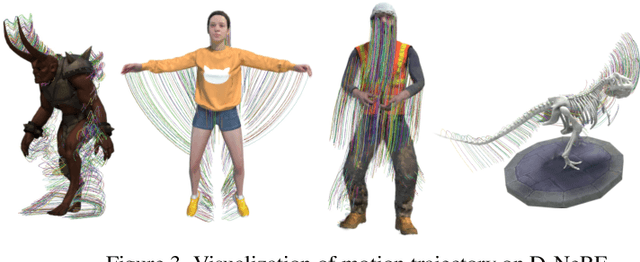

Abstract: This paper addresses the challenge of novel-view synthesis and motion reconstruction of dynamic scenes from monocular video, which is critical for many robotic applications. Although Neural Radiance Fields (NeRF) and 3D Gaussian Splatting (3DGS) have demonstrated remarkable success in rendering static scenes, extending them to reconstruct dynamic scenes remains challenging. In this work, we introduce a novel approach that combines 3DGS with a motion trajectory field, enabling precise handling of complex object motions and achieving physically plausible motion trajectories. By decoupling dynamic objects from static background, our method compactly optimizes the motion trajectory field. The approach incorporates time-invariant motion coefficients and shared motion trajectory bases to capture intricate motion patterns while minimizing optimization complexity. Extensive experiments demonstrate that our approach achieves state-of-the-art results in both novel-view synthesis and motion trajectory recovery from monocular video, advancing the capabilities of dynamic scene reconstruction.
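To make the idea of time-invariant coefficients and shared trajectory bases concrete, here is a minimal NumPy sketch. It assumes a simple linear combination of bases added to a canonical position; the abstract does not specify the exact parameterization, so the function name `trajectory_positions`, the array shapes, and the random values are all hypothetical and chosen only for illustration.

```python
import numpy as np

def trajectory_positions(base_xyz, coeffs, bases):
    """Move each Gaussian centre along a trajectory built from shared bases.

    Assumed (hypothetical) shapes:
      base_xyz: (N, 3)    canonical centre of each Gaussian
      coeffs:   (N, K)    time-invariant coefficients, one row per Gaussian
      bases:    (T, K, 3) K trajectory bases shared by all Gaussians, sampled at T times
    Returns an array of shape (T, N, 3) with the centre of every Gaussian at every time.
    """
    # Offset of Gaussian n at time t is sum_k coeffs[n, k] * bases[t, k, :].
    offsets = np.einsum("nk,tkd->tnd", coeffs, bases)
    return base_xyz[None, :, :] + offsets

# Toy data: 4 Gaussians, 2 shared bases, 5 time steps.
rng = np.random.default_rng(0)
N, K, T = 4, 2, 5
base_xyz = rng.normal(size=(N, 3))
coeffs = rng.normal(size=(N, K))
bases = rng.normal(size=(T, K, 3))

positions = trajectory_positions(base_xyz, coeffs, bases)
print(positions.shape)
```

Under this assumption only the `N * K` coefficients are specific to individual Gaussians, while the `T * K * 3` basis values are shared, which is one way the number of optimized motion parameters can stay small.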

DGNS: Deformable Gaussian Splatting and Dynamic Neural Surface for Monocular Dynamic 3D Reconstruction

Dec 05, 2024

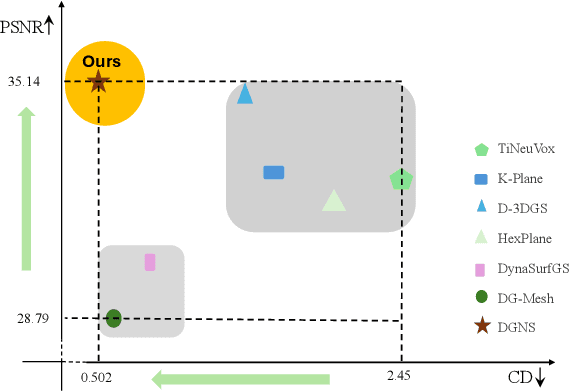

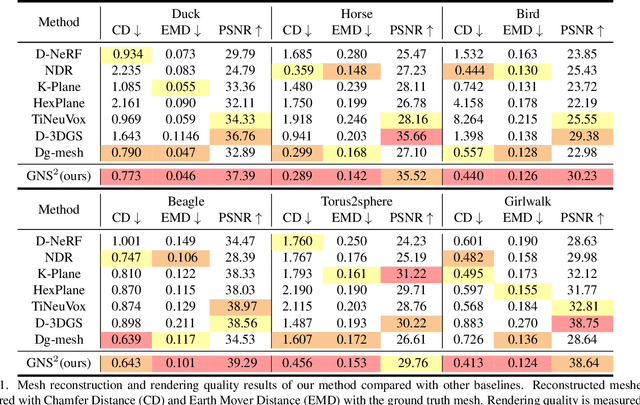

Abstract: Dynamic scene reconstruction from monocular video is critical for real-world applications. This paper tackles the dual challenges of dynamic novel-view synthesis and 3D geometry reconstruction by introducing a hybrid framework: Deformable Gaussian Splatting and Dynamic Neural Surfaces (DGNS), in which both modules can leverage each other for both tasks. During training, depth maps generated by the deformable Gaussian splatting module guide the ray sampling for faster processing and provide depth supervision within the dynamic neural surface module to improve geometry reconstruction. Simultaneously, the dynamic neural surface directs the distribution of Gaussian primitives around the surface, enhancing rendering quality. To further refine depth supervision, we introduce a depth-filtering process on depth maps derived from Gaussian rasterization. Extensive experiments on public datasets demonstrate that DGNS achieves state-of-the-art performance in both novel-view synthesis and 3D reconstruction.

NeFF-BioNet: Crop Biomass Prediction from Point Cloud to Drone Imagery

Oct 30, 2024

Abstract: Crop biomass offers crucial insights into plant health and yield, making it essential for crop science, farming systems, and agricultural research. However, current measurement methods, which are labor-intensive, destructive, and imprecise, hinder large-scale quantification of this trait. To address this limitation, we present a biomass prediction network (BioNet), designed for adaptation across different data modalities, including point clouds and drone imagery. Our BioNet, utilizing a sparse 3D convolutional neural network (CNN) and a transformer-based prediction module, processes point clouds and other 3D data representations to predict biomass. To further extend BioNet for drone imagery, we integrate a neural feature field (NeFF) module, enabling 3D structure reconstruction and the transformation of 2D semantic features from vision foundation models into the corresponding 3D surfaces. For the point cloud modality, BioNet demonstrates superior performance on two public datasets, with an approximate 6.1% relative improvement (RI) over the state-of-the-art. In the RGB image modality, the combination of BioNet and NeFF achieves a 7.9% RI. Additionally, the NeFF-based approach utilizes inexpensive, portable drone-mounted cameras, providing a scalable solution for large field applications.

MMCBE: Multi-modality Dataset for Crop Biomass Estimation and Beyond

Apr 17, 2024

Abstract: Crop biomass, a critical indicator of plant growth, health, and productivity, is invaluable for crop breeding programs and agronomic research. However, the accurate and scalable quantification of crop biomass remains inaccessible due to limitations in existing measurement methods. One of the obstacles impeding the advancement of current crop biomass prediction methodologies is the scarcity of publicly available datasets. Addressing this gap, we introduce a new dataset in this domain, i.e. Multi-modality dataset for crop biomass estimation (MMCBE). Comprising 216 sets of multi-view drone images, coupled with LiDAR point clouds, and hand-labelled ground truth, MMCBE represents the first multi-modality dataset in the field. This dataset aims to establish benchmark methods for crop biomass quantification and foster the development of vision-based approaches. We have rigorously evaluated state-of-the-art crop biomass estimation methods using MMCBE and ventured into additional potential applications, such as 3D crop reconstruction from drone imagery and novel-view rendering. With this publication, we are making our comprehensive dataset available to the broader community.

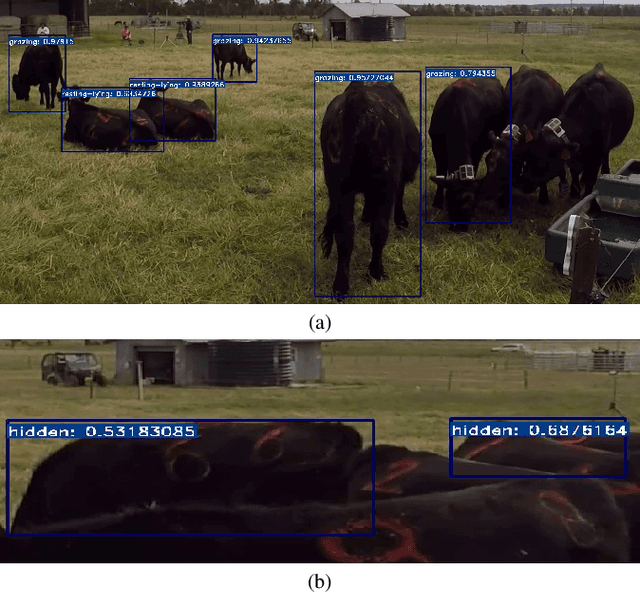

CVB: A Video Dataset of Cattle Visual Behaviors

May 26, 2023

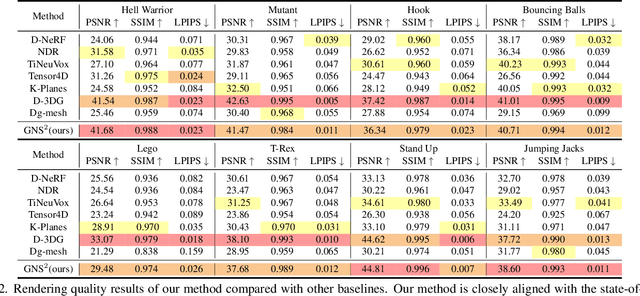

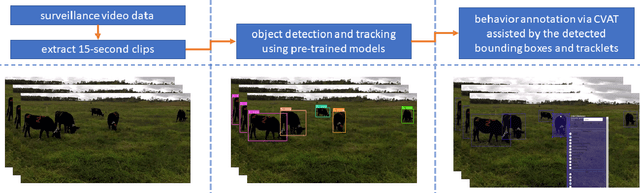

Abstract: Existing image/video datasets for cattle behavior recognition are mostly small, lack well-defined labels, or are collected in unrealistic controlled environments. This limits the utility of machine learning (ML) models learned from them. Therefore, we introduce a new dataset, called Cattle Visual Behaviors (CVB), that consists of 502 video clips, each fifteen seconds long, captured in natural lighting conditions, and annotated with eleven visually perceptible behaviors of grazing cattle. We use the Computer Vision Annotation Tool (CVAT) to collect our annotations. To make the procedure more efficient, we perform an initial detection and tracking of cattle in the videos using appropriate pre-trained models. The results are corrected by domain experts along with cattle behavior labeling in CVAT. The pre-hoc detection and tracking step significantly reduces the manual annotation time and effort. Moreover, we convert CVB to the atomic visual action (AVA) format and train and evaluate the popular SlowFast action recognition model on it. The associated preliminary results confirm that we can localize the cattle and recognize their frequently occurring behaviors with confidence. By creating and sharing CVB, our aim is to develop improved models capable of recognizing all important behaviors accurately and to assist other researchers and practitioners in developing and evaluating new ML models for cattle behavior classification using video data.

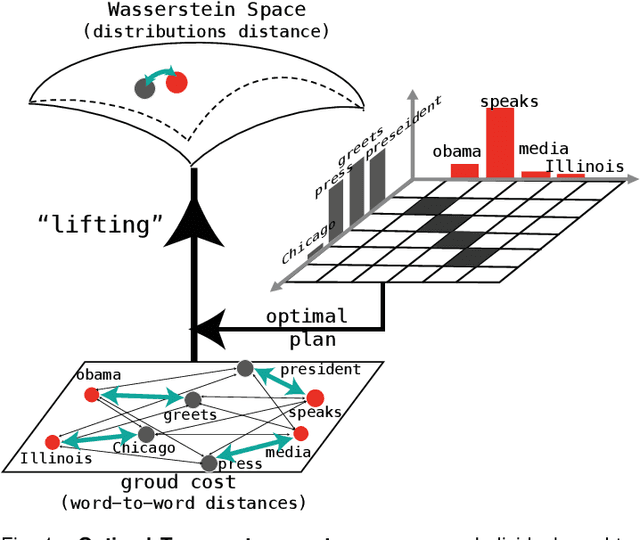

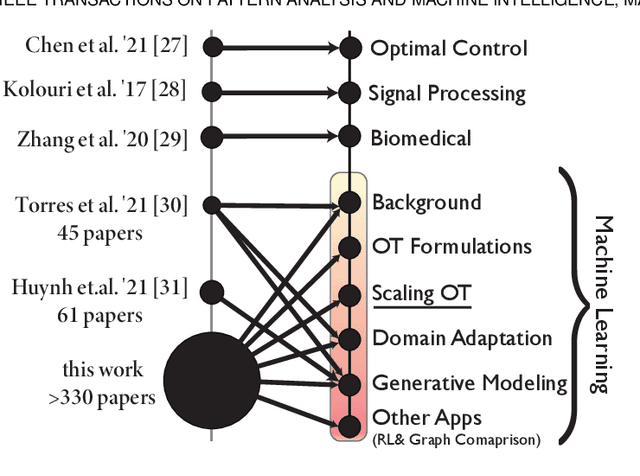

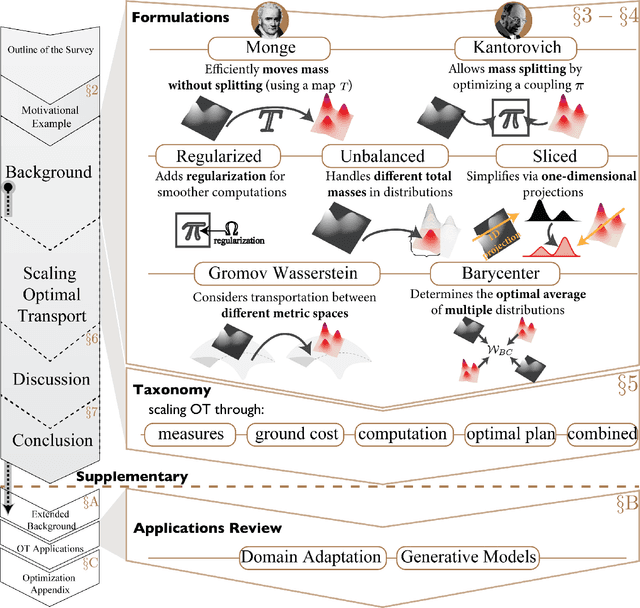

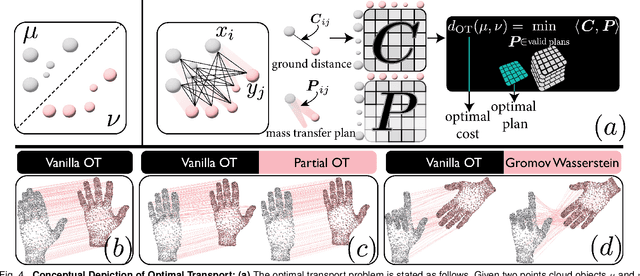

Earth Movers in The Big Data Era: A Review of Optimal Transport in Machine Learning

May 08, 2023

Abstract: Optimal Transport (OT) is a mathematical framework that first emerged in the eighteenth century and has led to a plethora of methods for answering many theoretical and applied questions. The last decade has witnessed remarkable contributions of this classical optimization problem to machine learning. This paper is about where and how optimal transport is used in machine learning, with a focus on the question of scalable optimal transport. We provide a comprehensive survey of optimal transport while ensuring an accessible presentation as permitted by the nature of the topic and the context. First, we explain optimal transport background and introduce different flavors (i.e. mathematical formulations), properties, and notable applications. We then address the fundamental question of how to scale optimal transport to cope with the current demands of big and high dimensional data. We conduct a systematic analysis of the methods used in the literature for scaling OT and present the findings in a unified taxonomy. We conclude by presenting some open challenges and discussing potential future research directions. A live repository of related OT research papers is maintained at https://github.com/abdelwahed/OT_for_big_data.git.
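As a concrete illustration of what scaling OT can look like, the sketch below uses entropic regularization solved with Sinkhorn iterations, one widely used approach. The abstract does not name specific methods, so treating Sinkhorn as representative is an assumption, and the function name `sinkhorn`, the `reg` and `n_iters` parameters, and the toy histograms are hypothetical.

```python
import numpy as np

def sinkhorn(a, b, cost, reg=0.5, n_iters=200):
    """Approximate an entropic-regularized transport plan between histograms a and b.

    a: (n,) source weights summing to 1
    b: (m,) target weights summing to 1
    cost: (n, m) pairwise transport cost
    reg: strength of the entropic regularization (illustrative default)
    """
    kernel = np.exp(-cost / reg)        # Gibbs kernel derived from the cost matrix
    u = np.ones_like(a)
    for _ in range(n_iters):
        v = b / (kernel.T @ u)          # rescale so column sums match b
        u = a / (kernel @ v)            # rescale so row sums match a
    return u[:, None] * kernel * v[None, :]

# Toy problem: move mass between two bins with unit cost for switching bins.
a = np.array([0.5, 0.5])
b = np.array([0.25, 0.75])
cost = np.array([[0.0, 1.0],
                 [1.0, 0.0]])

plan = sinkhorn(a, b, cost)
print(plan.sum(axis=1), plan.sum(axis=0))
```

Each iteration costs only two matrix-vector products, which is the usual reason this family of methods is attractive for large problems compared with solving the exact linear program.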

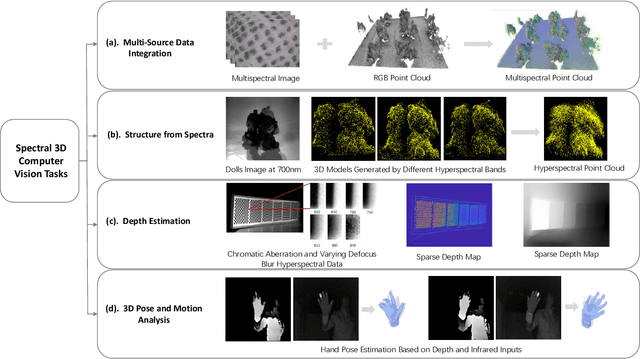

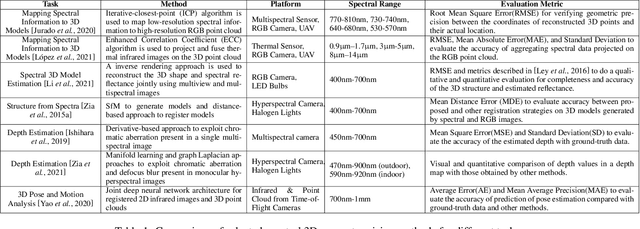

Spectral 3D Computer Vision -- A Review

Feb 16, 2023

Abstract: Spectral 3D computer vision examines both the geometric and spectral properties of objects. It provides a deeper understanding of an object's physical properties by providing information from narrow bands in various regions of the electromagnetic spectrum. Mapping the spectral information onto the 3D model reveals changes in the spectra-structure space or enhances 3D representations with properties such as reflectance, chromatic aberration, and varying defocus blur. This emerging paradigm advances traditional computer vision and opens new avenues of research in 3D structure, depth estimation, motion analysis, and more. It has found applications in areas such as smart agriculture, environment monitoring, building inspection, geological exploration, and digital cultural heritage records. This survey offers a comprehensive overview of spectral 3D computer vision, including a unified taxonomy of methods, key application areas, and future challenges and prospects.

Topological Deep Learning: A Review of an Emerging Paradigm

Feb 08, 2023

Abstract: Topological data analysis (TDA) provides insight into data shape. The summaries obtained by these methods are principled global descriptions of multi-dimensional data whilst exhibiting stable properties such as robustness to deformation and noise. Such properties are desirable in deep learning pipelines but they are typically obtained using non-TDA strategies. This is partly caused by the difficulty of combining TDA constructs (e.g. barcode and persistence diagrams) with current deep learning algorithms. Fortunately, we are now witnessing a growth of deep learning applications embracing topologically-guided components. In this survey, we review the nascent field of topological deep learning by first revisiting the core concepts of TDA. We then explore how the use of TDA techniques has evolved over time to support deep learning frameworks, and how they can be integrated into different aspects of deep learning. Furthermore, we touch on TDA usage for analyzing existing deep models; deep topological analytics. Finally, we discuss the challenges and future prospects of topological deep learning.
