Venkatnarayanan Lakshminarasimhan

TUMTraffic-VideoQA: A Benchmark for Unified Spatio-Temporal Video Understanding in Traffic Scenes

Feb 04, 2025

Abstract: We present TUMTraffic-VideoQA, a novel dataset and benchmark designed for spatio-temporal video understanding in complex roadside traffic scenarios. The dataset comprises 1,000 videos, featuring 85,000 multiple-choice QA pairs, 2,300 object captioning annotations, and 5,700 object grounding annotations, encompassing diverse real-world conditions such as adverse weather and traffic anomalies. By incorporating tuple-based spatio-temporal object expressions, TUMTraffic-VideoQA unifies three essential tasks (multiple-choice video question answering, referred object captioning, and spatio-temporal object grounding) within a cohesive evaluation framework. We further introduce the TUMTraffic-Qwen baseline model, enhanced with visual token sampling strategies, providing valuable insights into the challenges of fine-grained spatio-temporal reasoning. Extensive experiments demonstrate the dataset's complexity, highlight the limitations of existing models, and position TUMTraffic-VideoQA as a robust foundation for advancing research in intelligent transportation systems. The dataset and benchmark are publicly available to facilitate further exploration.

WARM-3D: A Weakly-Supervised Sim2Real Domain Adaptation Framework for Roadside Monocular 3D Object Detection

Jul 30, 2024

Abstract: Existing roadside perception systems are limited by the absence of publicly available, large-scale, high-quality 3D datasets. Cost-effective, extensive synthetic datasets offer a viable way to tackle this challenge and enhance the performance of roadside monocular 3D detection. In this study, we introduce the TUMTraf Synthetic Dataset, a diverse and substantial collection of high-quality 3D data to augment scarce real-world datasets. In addition, we present WARM-3D, a concise yet effective framework to aid Sim2Real domain transfer for roadside monocular 3D detection. Our method leverages cheap synthetic datasets and 2D labels from an off-the-shelf 2D detector for weak supervision. We show that WARM-3D significantly enhances performance, achieving a +12.40% increase in mAP 3D over the baseline with only pseudo-2D supervision. With 2D GT as weak labels, WARM-3D even reaches performance close to the Oracle baseline. Moreover, WARM-3D improves the ability of 3D detectors to recognize unseen samples across various real-world environments, highlighting its potential for practical applications.

TUMTraf Event: Calibration and Fusion Resulting in a Dataset for Roadside Event-Based and RGB Cameras

Jan 16, 2024

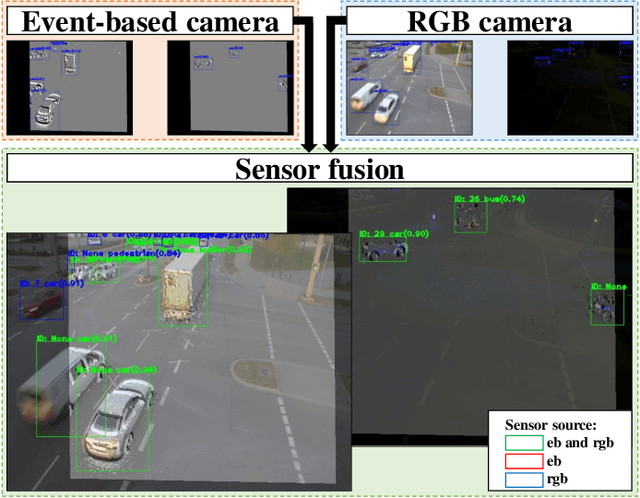

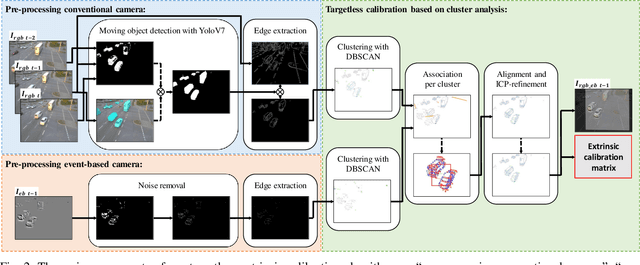

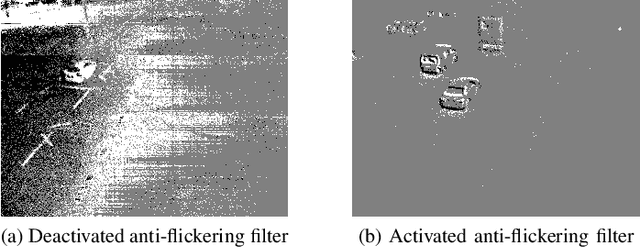

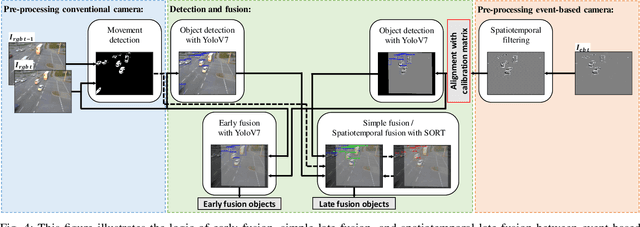

Abstract: Event-based cameras are well suited to Intelligent Transportation Systems (ITS). They provide very high temporal resolution and dynamic range, which can eliminate motion blur and make objects easier to recognize at night. However, event-based images lack color and texture compared to images from a conventional RGB camera. Data fusion between event-based and conventional cameras can therefore combine the strengths of both modalities. For this purpose, extrinsic calibration is necessary. To the best of our knowledge, there is no targetless calibration between event-based and RGB cameras that can handle multiple moving objects, no data fusion optimized for the domain of roadside ITS, and no known synchronized event-based and RGB camera dataset in the field of ITS. To fill these research gaps, based on our previous work, we extend our targetless calibration approach with clustering methods to handle multiple moving objects. Furthermore, we develop an early fusion, a simple late fusion, and a novel spatiotemporal late fusion method. Lastly, we publish the TUMTraf Event Dataset, which contains more than 4k synchronized event-based and RGB images with 21.9k labeled 2D boxes. In our extensive experiments, we verified the effectiveness of our calibration method with multiple moving objects. Furthermore, compared to a single RGB camera, our event-based sensor fusion methods increased detection performance by up to +16% mAP in the day and up to +12% mAP in the challenging night. The TUMTraf Event Dataset is available at https://innovation-mobility.com/tumtraf-dataset.

A9-Dataset: Multi-Sensor Infrastructure-Based Dataset for Mobility Research

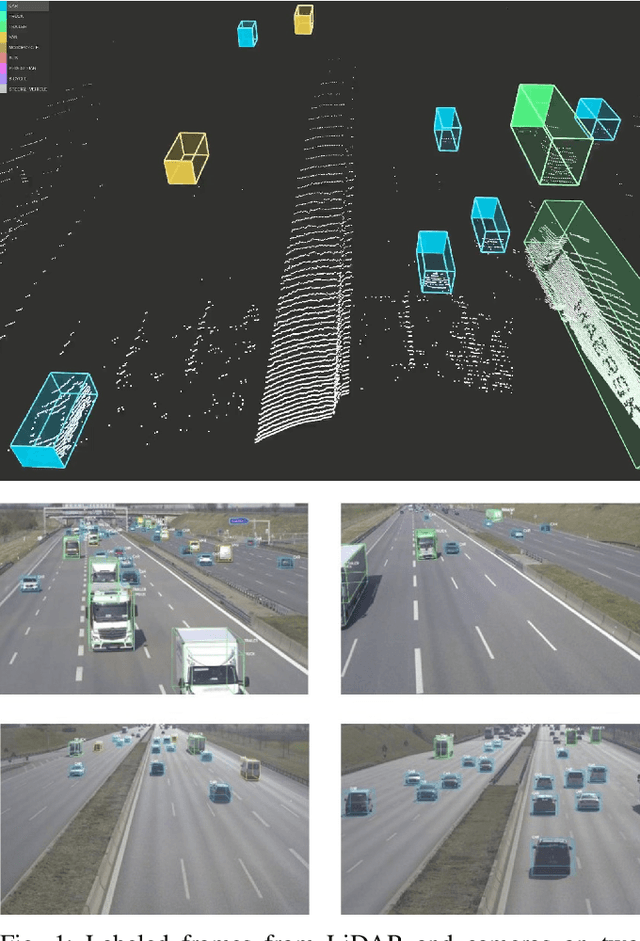

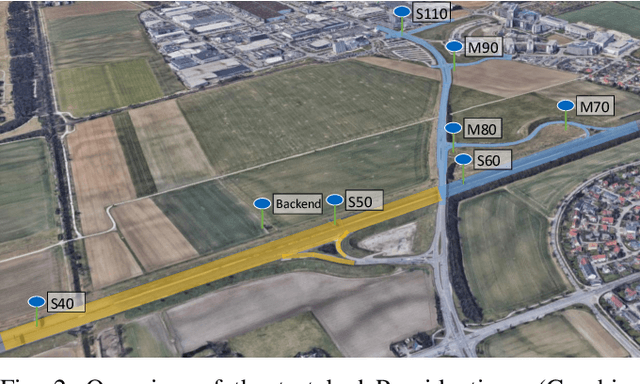

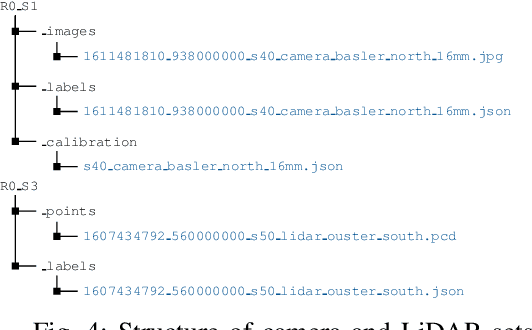

Apr 13, 2022

Abstract: Data-intensive machine-learning-based techniques increasingly play a prominent role in the development of future mobility solutions, from driver assistance and automation functions in vehicles to real-time traffic management systems realized through dedicated infrastructure. The availability of high-quality real-world data is often an important prerequisite for the development and reliable deployment of such systems at large scale. To this end, we present the A9-Dataset based on roadside sensor infrastructure from the 3 km long Providentia++ test field near Munich in Germany. The dataset includes anonymized and precision-timestamped multi-modal sensor and object data in high resolution, covering a variety of traffic situations. As part of the first set of data, which we describe in this paper, we provide camera and LiDAR frames from two overhead gantry bridges on the A9 autobahn with the corresponding objects labeled with 3D bounding boxes. The first set includes in total more than 1,000 sensor frames and 14,000 traffic objects. The dataset is available for download at https://a9-dataset.com.

Federated Learning Framework Coping with Hierarchical Heterogeneity in Cooperative ITS

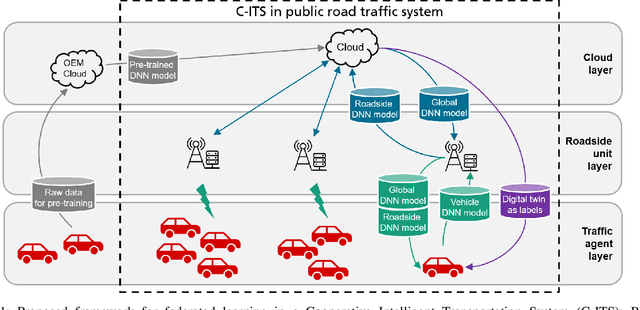

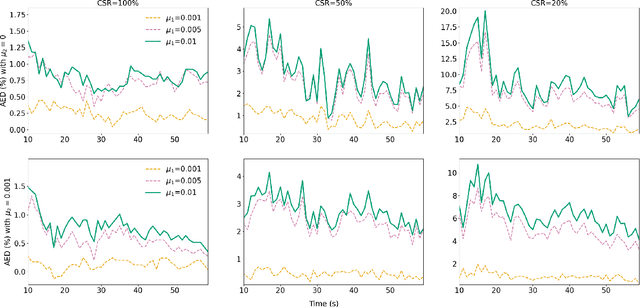

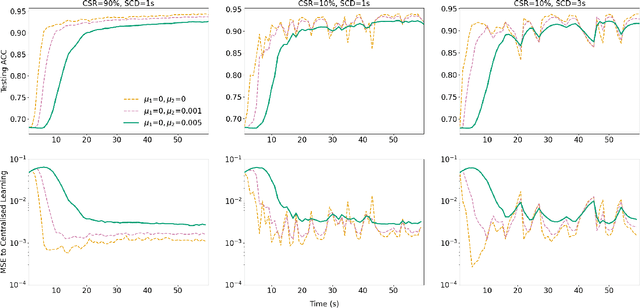

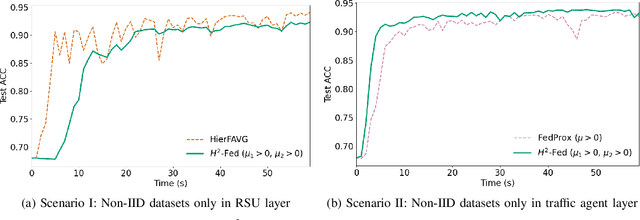

Apr 11, 2022

Abstract: In this paper, we introduce a federated learning framework coping with Hierarchical Heterogeneity (H2-Fed), which can notably enhance a conventional pre-trained deep learning model. The framework exploits data from connected public traffic agents in vehicular networks without affecting user data privacy. By coordinating existing traffic infrastructure, including roadside units and road traffic clouds, the model parameters are efficiently disseminated by vehicular communications and hierarchically aggregated. Considering the individual heterogeneity of data distribution and of computational and communication capabilities across traffic agents and roadside units, we employ a novel method that addresses the heterogeneity of the different aggregation layers of the framework architecture, i.e., aggregation at the roadside-unit layer and at the cloud layer. The experimental results indicate that our method can balance learning accuracy and stability well, according to the knowledge of heterogeneity in current communication networks. Compared to other baseline approaches, the evaluation on a Non-IID MNIST dataset shows that our framework is more general and capable, especially in application scenarios with low communication quality. Even when 90% of the agents are timely disconnected, the pre-trained deep learning model can still be forced to converge stably, and its accuracy can be enhanced from 68% to over 90% after convergence.
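
The two-layer aggregation described in this abstract (traffic agents to roadside units, then roadside units to cloud) can be illustrated with a minimal sketch. It uses plain sample-count-weighted averaging in the style of FedAvg, not the heterogeneity-aware method of H2-Fed, whose details are not given here. The names `weighted_average` and `hierarchical_aggregate`, the flat-list parameter representation, and the RSU identifiers are all hypothetical.

```python
from typing import Dict, List, Tuple

# Hypothetical representation: a model is a flat list of parameters.
Weights = List[float]
# An update pairs a parameter vector with the number of samples behind it.
Update = Tuple[Weights, int]


def weighted_average(updates: List[Update]) -> Update:
    """Average parameter vectors weighted by sample count.

    Returns the averaged vector together with the total sample count,
    so the result can itself be fed into a higher aggregation layer.
    """
    total = sum(n for _, n in updates)
    if total == 0:
        raise ValueError("no samples to aggregate")
    dim = len(updates[0][0])
    avg = [sum(w[i] * n for w, n in updates) / total for i in range(dim)]
    return avg, total


def hierarchical_aggregate(rsu_groups: Dict[str, List[Update]]) -> Weights:
    """Two-layer aggregation: agents -> roadside unit, then RSUs -> cloud.

    Roadside units with no connected agents in this round are skipped.
    """
    rsu_models = [
        weighted_average(agent_updates)
        for agent_updates in rsu_groups.values()
        if agent_updates
    ]
    cloud_model, _ = weighted_average(rsu_models)
    return cloud_model


if __name__ == "__main__":
    groups = {
        "rsu_a": [([1.0, 2.0], 1), ([3.0, 4.0], 3)],
        "rsu_b": [([5.0, 6.0], 4)],
        "rsu_c": [],  # all agents disconnected this round
    }
    print(hierarchical_aggregate(groups))
```

Because each layer passes its total sample count upward, the cloud result in this sketch equals a flat weighted average over all connected agents; a heterogeneity-aware scheme would instead adjust how each layer's contribution is weighted.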
