Christian Creß

ActiveAnno3D -- An Active Learning Framework for Multi-Modal 3D Object Detection

Feb 05, 2024

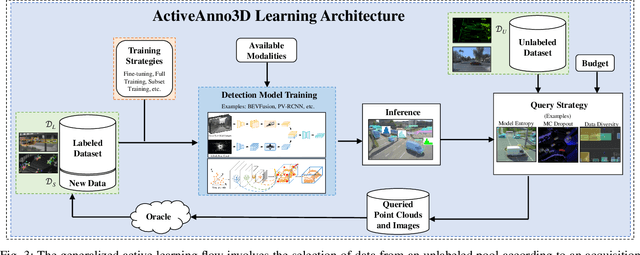

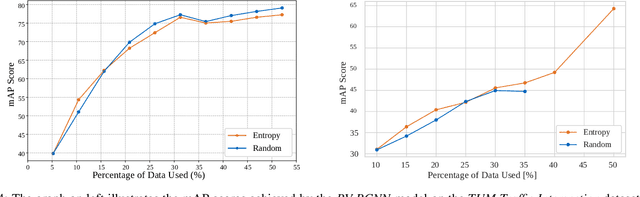

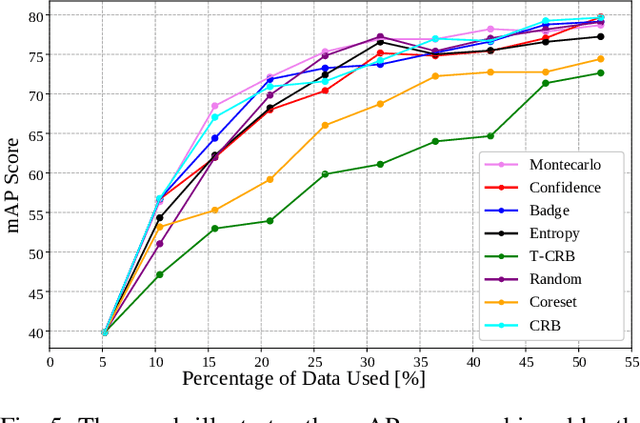

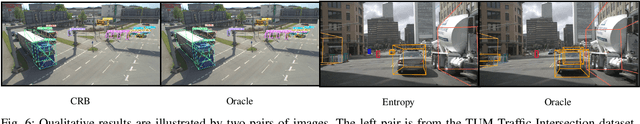

Abstract: The curation of large-scale datasets is still costly and requires much time and resources. Data is often manually labeled, and the challenge of creating high-quality datasets remains. In this work, we fill the research gap using active learning for multi-modal 3D object detection. We propose ActiveAnno3D, an active learning framework to select data samples for labeling that are of maximum informativeness for training. We explore various continuous training methods and integrate the most efficient method regarding computational demand and detection performance. Furthermore, we perform extensive experiments and ablation studies with BEVFusion and PV-RCNN on the nuScenes and TUM Traffic Intersection datasets. We show that we can achieve almost the same performance with PV-RCNN and the entropy-based query strategy when using only half of the training data (77.25 mAP compared to 83.50 mAP) of the TUM Traffic Intersection dataset. BEVFusion achieved an mAP of 64.31 when using half of the training data and 75.0 mAP when using the complete nuScenes dataset. We integrate our active learning framework into the proAnno labeling tool to enable AI-assisted data selection and labeling and minimize the labeling costs. Finally, we provide code, weights, and visualization results on our website: https://active3d-framework.github.io/active3d-framework.
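As a rough illustration of the entropy-based query strategy mentioned in the abstract above, the following minimal sketch ranks unlabeled frames by the Shannon entropy of their predicted class probabilities and picks the most uncertain ones for labeling. The names `entropy`, `frame_score`, and `select_for_labeling`, the mean aggregation over detections, and the toy probabilities are all assumptions made for illustration; they are not taken from the ActiveAnno3D code.

```python
import math


def entropy(probs):
    """Shannon entropy (in nats) of one class-probability vector."""
    return -sum(p * math.log(p) for p in probs if p > 0.0)


def frame_score(detections):
    """Mean entropy over a frame's detections (assumed aggregation); 0.0 if empty."""
    if not detections:
        return 0.0
    return sum(entropy(d) for d in detections) / len(detections)


def select_for_labeling(pool, budget):
    """Return the ids of the `budget` frames with the highest uncertainty score."""
    ranked = sorted(pool, key=lambda fid: frame_score(pool[fid]), reverse=True)
    return ranked[:budget]


# Hypothetical unlabeled pool: frame id -> per-detection class probabilities.
pool = {
    "frame_a": [[0.98, 0.01, 0.01]],                      # confident prediction
    "frame_b": [[0.40, 0.35, 0.25], [0.50, 0.30, 0.20]],  # uncertain predictions
    "frame_c": [[0.70, 0.20, 0.10]],
}

print(select_for_labeling(pool, budget=2))
```

Frames whose predictions are spread across several classes score higher than frames with one dominant class, so they are queried first.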

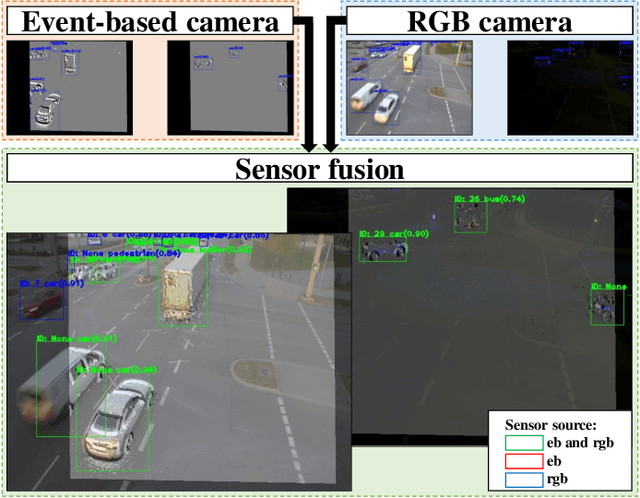

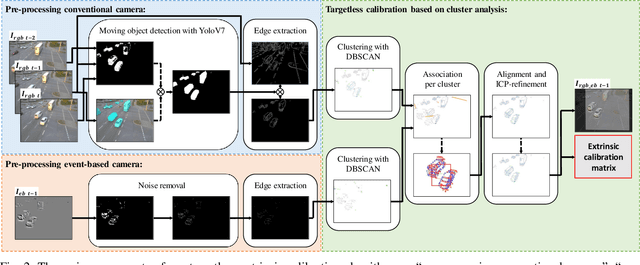

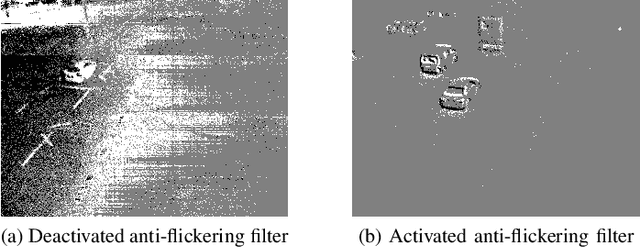

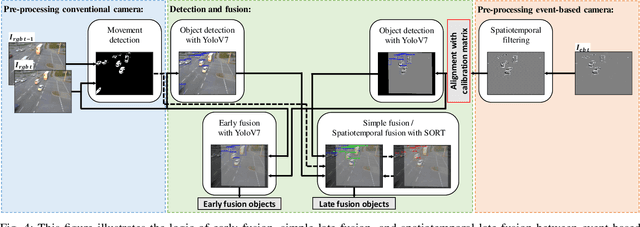

TUMTraf Event: Calibration and Fusion Resulting in a Dataset for Roadside Event-Based and RGB Cameras

Jan 16, 2024

Abstract: Event-based cameras are predestined for Intelligent Transportation Systems (ITS). They provide very high temporal resolution and dynamic range, which can eliminate motion blur and make objects easier to recognize at night. However, event-based images lack color and texture compared to images from a conventional RGB camera. Considering that, data fusion between event-based and conventional cameras can combine the strengths of both modalities. For this purpose, extrinsic calibration is necessary. To the best of our knowledge, no targetless calibration between event-based and RGB cameras can handle multiple moving objects, no data fusion optimized for the domain of roadside ITS exists, and no synchronized event-based and RGB camera datasets in the field of ITS are known. To fill these research gaps, based on our previous work, we extend our targetless calibration approach with clustering methods to handle multiple moving objects. Furthermore, we develop an early fusion, a simple late fusion, and a novel spatiotemporal late fusion method. Lastly, we publish the TUMTraf Event Dataset, which contains more than 4k synchronized event-based and RGB images with 21.9k labeled 2D boxes. During our extensive experiments, we verified the effectiveness of our calibration method with multiple moving objects. Furthermore, compared to a single RGB camera, we increased the detection performance by up to +16% mAP in the day and up to +12% mAP in the challenging night with our presented event-based sensor fusion methods. The TUMTraf Event Dataset is available at https://innovation-mobility.com/tumtraf-dataset.

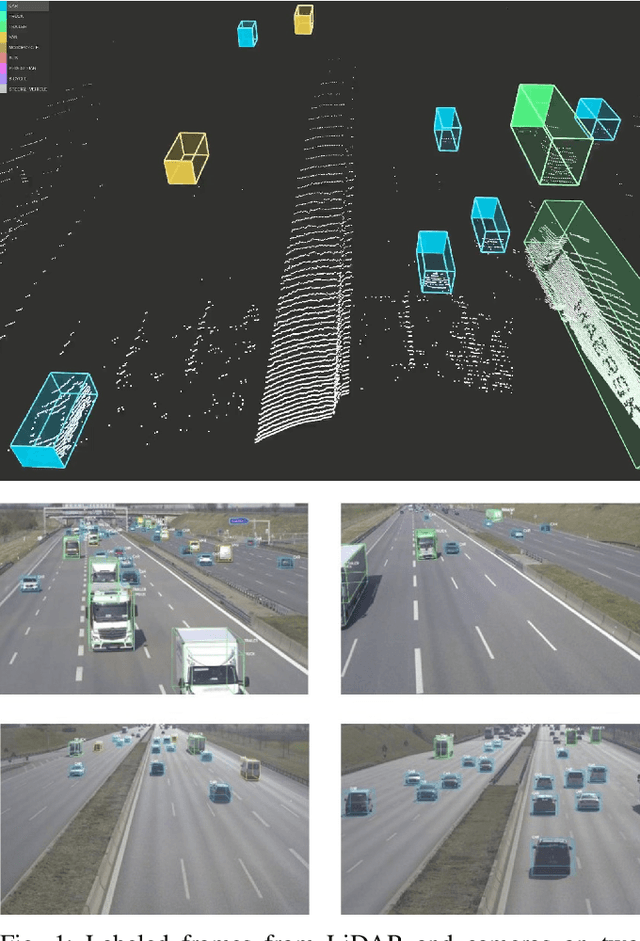

A9 Intersection Dataset: All You Need for Urban 3D Camera-LiDAR Roadside Perception

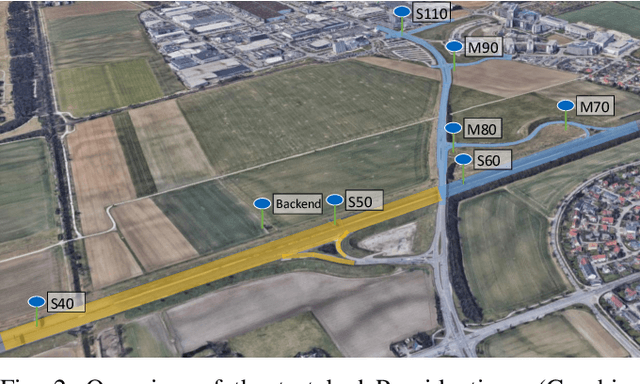

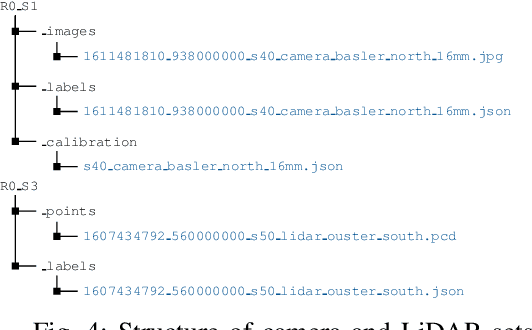

Jun 15, 2023

Abstract: Intelligent Transportation Systems (ITS) allow a drastic expansion of the visibility range and decrease occlusions for autonomous driving. To obtain accurate detections, detailed labeled sensor data for training is required. Unfortunately, high-quality 3D labels of LiDAR point clouds from the infrastructure perspective of an intersection are still rare. Therefore, we provide the A9 Intersection Dataset, which consists of labeled LiDAR point clouds and synchronized camera images. Here, we recorded the sensor output from two roadside cameras and LiDARs mounted on intersection gantry bridges. The point clouds were labeled in 3D by experienced annotators. Furthermore, we provide calibration data between all sensors, which allows the projection of the 3D labels into the camera images and an accurate data fusion. Our dataset consists of 4.8k images and point clouds with more than 57.4k manually labeled 3D boxes. With ten object classes, it has a high diversity of road users in complex driving maneuvers, such as left and right turns, overtaking, and U-turns. In experiments, we provided multiple baselines for the perception tasks. Overall, our dataset is a valuable contribution to the scientific community to perform complex 3D camera-LiDAR roadside perception tasks. Find data, code, and more information at https://a9-dataset.com.
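The projection of 3D labels into camera images that the abstract above attributes to the calibration data can be sketched with a standard pinhole model. This is a minimal example under stated assumptions: the function name `project_point` and the 3x4 matrix `P` with its focal length and principal point are hypothetical values chosen for illustration, not the dataset's actual calibration or tooling.

```python
def project_point(P, point_xyz):
    """Project a 3D point with a 3x4 projection matrix P.

    Returns pixel coordinates (u, v), or None if the point lies
    behind the camera (non-positive depth).
    """
    x, y, z = point_xyz
    hom = (x, y, z, 1.0)  # homogeneous coordinates
    u, v, w = (sum(row[i] * hom[i] for i in range(4)) for row in P)
    if w <= 0:
        return None
    return (u / w, v / w)


# Hypothetical projection matrix: focal length 1000 px, principal point
# (960, 600), and identity extrinsics (point already in the camera frame).
P = [
    [1000.0, 0.0, 960.0, 0.0],
    [0.0, 1000.0, 600.0, 0.0],
    [0.0, 0.0, 1.0, 0.0],
]

# One corner of a 3D box, 10 m in front of the camera.
print(project_point(P, (1.0, 0.5, 10.0)))
```

Projecting all eight corners of a 3D box this way and connecting them yields the box outline in the image; corners that return `None` need clipping before drawing.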

A9-Dataset: Multi-Sensor Infrastructure-Based Dataset for Mobility Research

Apr 13, 2022

Abstract: Data-intensive machine-learning-based techniques increasingly play a prominent role in the development of future mobility solutions, from driver assistance and automation functions in vehicles to real-time traffic management systems realized through dedicated infrastructure. The availability of high-quality real-world data is often an important prerequisite for the development and reliable deployment of such systems at large scale. Towards this endeavour, we present the A9-Dataset based on roadside sensor infrastructure from the 3 km long Providentia++ test field near Munich in Germany. The dataset includes anonymized and precision-timestamped multi-modal sensor and object data in high resolution, covering a variety of traffic situations. As part of the first set of data, which we describe in this paper, we provide camera and LiDAR frames from two overhead gantry bridges on the A9 autobahn with the corresponding objects labeled with 3D bounding boxes. The first set includes in total more than 1000 sensor frames and 14000 traffic objects. The dataset is available for download at https://a9-dataset.com.

Intelligent Transportation Systems With The Use of External Infrastructure: A Literature Survey

Dec 15, 2021

Abstract: Growing problems in the transportation sector are accidents, poor traffic flow, and pollution. Intelligent Transportation Systems that use external infrastructure (ITS) can tackle these problems. To the best of our knowledge, there exists no current systematic review of the existing solutions. To fill this knowledge gap, this paper provides an overview of existing ITS that use external infrastructure. Furthermore, this paper identifies research questions that are currently not adequately answered. For this reason, we performed a literature review of documents that describe existing ITS solutions from 2009 until today. We categorized the results according to their technology level and analyzed their properties. Thereby, we made the various ITS comparable and highlighted the past development as well as the current trends. Following this method, we analyzed more than 346 papers, which include 40 test bed projects. In summary, current ITS can deliver highly accurate information about individuals in traffic situations in real time. However, further research in ITS should focus on more reliable perception of the traffic with the use of modern sensors, plug-and-play mechanisms, and secure real-time distribution of large amounts of data in a decentralized manner. By addressing these topics, the development of Intelligent Transportation Systems moves in the right direction for a comprehensive roll-out.
