Bach Ngoc Doan

TUMTraf Event: Calibration and Fusion Resulting in a Dataset for Roadside Event-Based and RGB Cameras

Jan 16, 2024

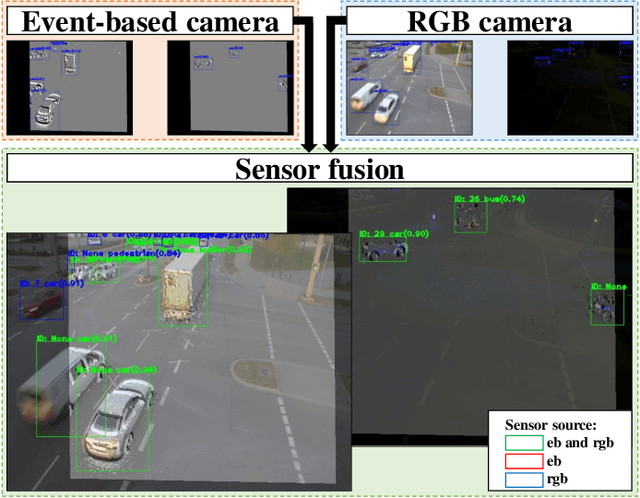

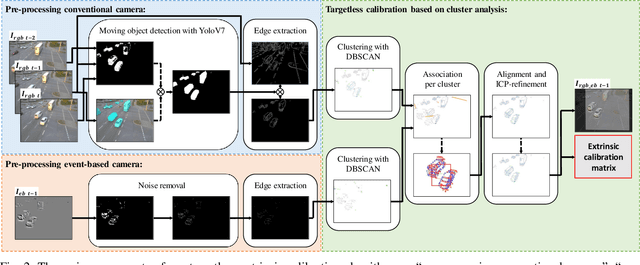

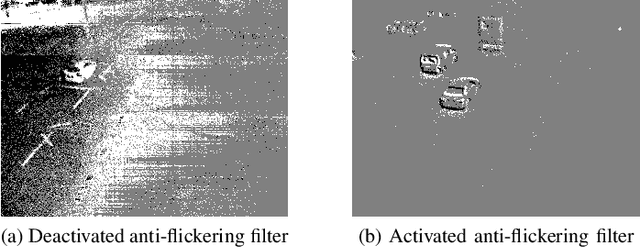

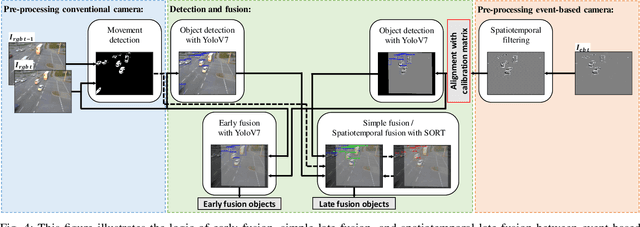

Abstract: Event-based cameras are well suited to Intelligent Transportation Systems (ITS). They provide very high temporal resolution and dynamic range, which can eliminate motion blur and make objects easier to recognize at night. However, event-based images lack color and texture compared to images from a conventional RGB camera. Data fusion between event-based and conventional cameras can therefore combine the strengths of both modalities, and this requires extrinsic calibration. To the best of our knowledge, no targetless calibration between event-based and RGB cameras can handle multiple moving objects, no data fusion optimized for the domain of roadside ITS exists, and no synchronized event-based and RGB camera datasets are known in the field of ITS. To fill these research gaps, we build on our previous work and extend our targetless calibration approach with clustering methods to handle multiple moving objects. Furthermore, we develop an early fusion, a simple late fusion, and a novel spatiotemporal late fusion method. Lastly, we publish the TUMTraf Event Dataset, which contains more than 4k synchronized event-based and RGB images with 21.9k labeled 2D boxes. In extensive experiments, we verified the effectiveness of our calibration method with multiple moving objects. Furthermore, compared to a single RGB camera, our event-based sensor fusion methods increased detection performance by up to +16% mAP during the day and up to +12% mAP in the challenging night setting. The TUMTraf Event Dataset is available at https://innovation-mobility.com/tumtraf-dataset.
