Tommi Jauhiainen

Findings of the VarDial Evaluation Campaign 2023

May 31, 2023

Abstract:This report presents the results of the shared tasks organized as part of the VarDial Evaluation Campaign 2023. The campaign is part of the tenth workshop on Natural Language Processing (NLP) for Similar Languages, Varieties and Dialects (VarDial), co-located with EACL 2023. Three separate shared tasks were included this year: Slot and intent detection for low-resource language varieties (SID4LR), Discriminating Between Similar Languages -- True Labels (DSL-TL), and Discriminating Between Similar Languages -- Speech (DSL-S). All three tasks were organized for the first time this year.

Language Variety Identification with True Labels

Mar 02, 2023

Abstract:Language identification is an important first step in many IR and NLP applications. Most publicly available language identification datasets, however, are compiled under the assumption that the gold label of each instance is determined by where texts are retrieved from. Research has shown that this is a problematic assumption, particularly in the case of very similar languages (e.g., Croatian and Serbian) and national language varieties (e.g., Brazilian and European Portuguese), where texts may contain no distinctive marker of the particular language or variety. To overcome this important limitation, this paper presents DSL True Labels (DSL-TL), the first human-annotated multilingual dataset for language variety identification. DSL-TL contains a total of 12,900 instances in Portuguese, split between European Portuguese and Brazilian Portuguese; Spanish, split between Argentine Spanish and Castilian Spanish; and English, split between American English and British English. We trained multiple models to discriminate between these language varieties, and we present the results in detail. The data and models presented in this paper provide a reliable benchmark toward the development of robust and fairer language variety identification systems. We make DSL-TL freely available to the research community.

Comparing Approaches to Dravidian Language Identification

Mar 09, 2021

Abstract:This paper describes the submissions by team HWR to the Dravidian Language Identification (DLI) shared task organized at the VarDial 2021 workshop. The DLI training set includes 16,674 YouTube comments written in Roman script containing code-mixed text with English and one of the three South Dravidian languages: Kannada, Malayalam, and Tamil. We submitted results generated using two models: a Naive Bayes classifier with adaptive language models, which has been shown to obtain competitive performance in many language and dialect identification tasks, and a transformer-based model, which is widely regarded as the state of the art in a number of NLP tasks. Our first submission was sent in the closed submission track using only the training set provided by the shared task organisers, whereas the second submission is considered to be open as it used a pretrained model trained with external data. Our team attained shared second position in the shared task with the submission based on Naive Bayes. Our results reinforce the idea that deep learning methods are not as competitive in language identification related tasks as they are in many other text classification tasks.

FinnSentiment -- A Finnish Social Media Corpus for Sentiment Polarity Annotation

Dec 04, 2020

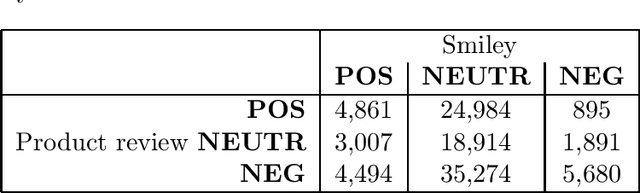

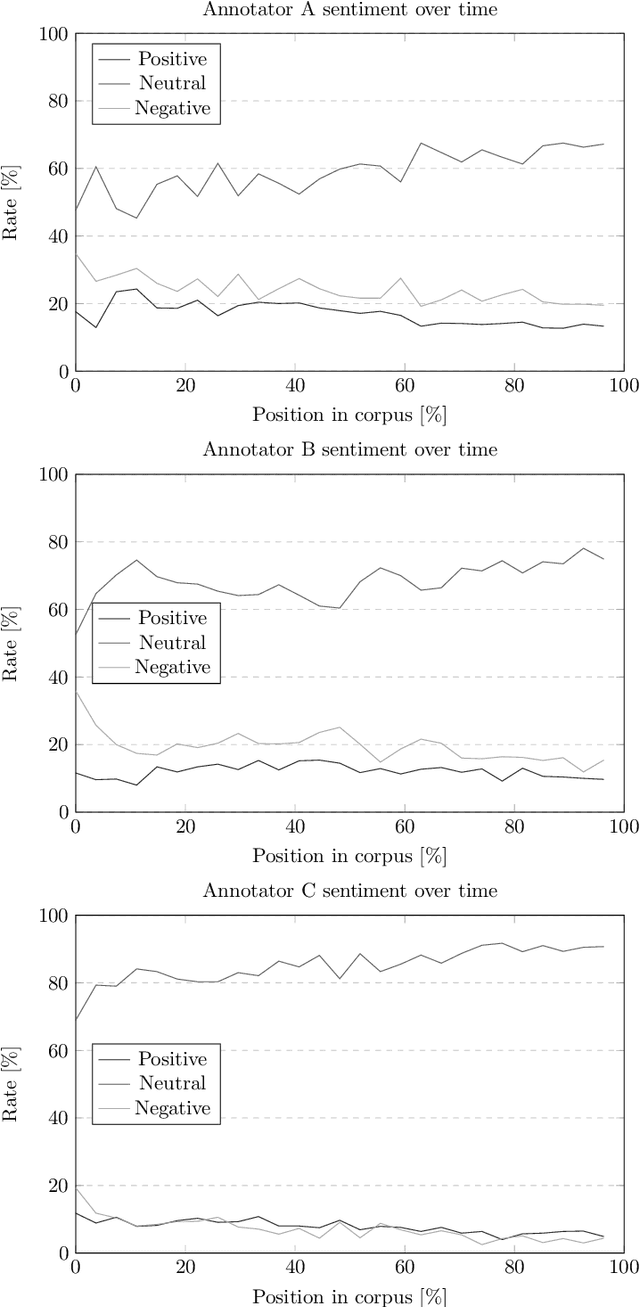

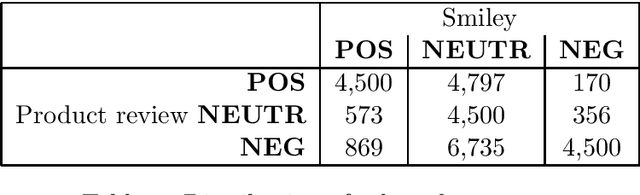

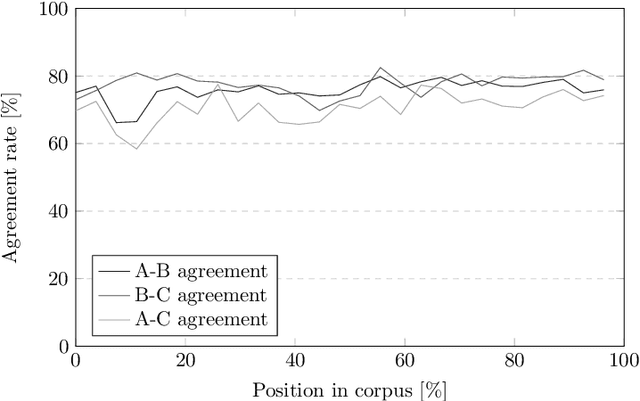

Abstract:Sentiment analysis and opinion mining is an important task with obvious application areas in social media, e.g. when indicating hate speech and fake news. In our survey of previous work, we note that there is no large-scale social media data set with sentiment polarity annotations for Finnish. This publication aims to remedy this shortcoming by introducing a 27,000-sentence data set annotated independently with sentiment polarity by three native annotators. We had the same three annotators for the whole data set, which provides a unique opportunity for further studies of annotator behaviour over time. We analyse their inter-annotator agreement and provide two baselines to validate the usefulness of the data set.

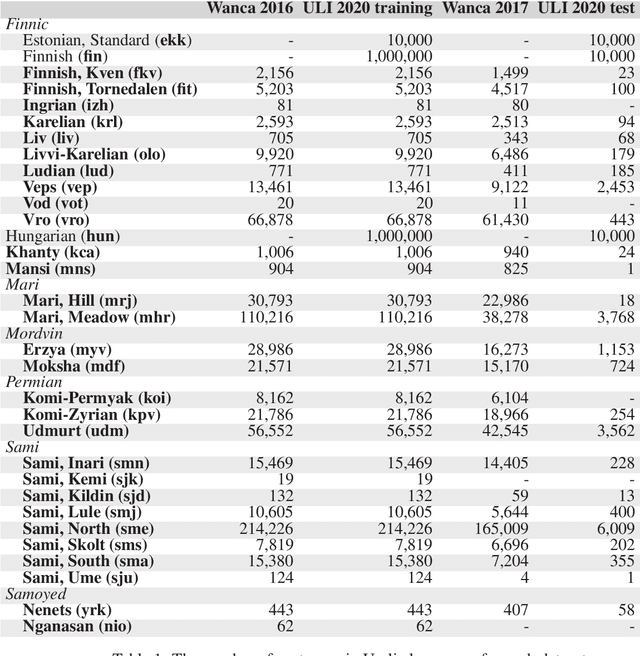

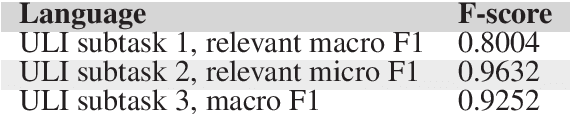

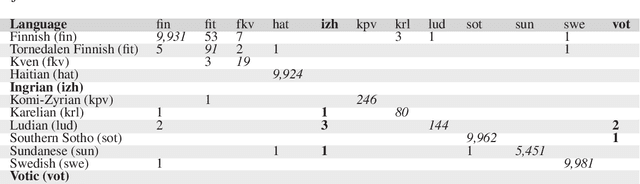

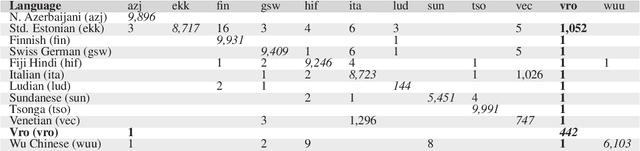

Uralic Language Identification (ULI) 2020 shared task dataset and the Wanca 2017 corpus

Aug 27, 2020

Abstract:This article introduces the Wanca 2017 corpus of texts crawled from the internet from which the sentences in rare Uralic languages for the use of the Uralic Language Identification (ULI) 2020 shared task were collected. We describe the ULI dataset and how it was constructed using the Wanca 2017 corpus and texts in different languages from the Leipzig corpora collection. We also provide baseline language identification experiments conducted using the ULI 2020 dataset.

Language Model Adaptation for Language and Dialect Identification of Text

Mar 26, 2019

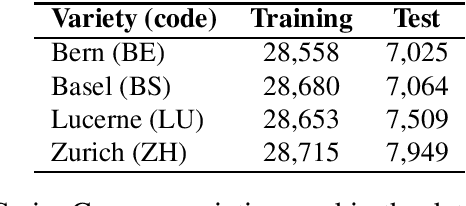

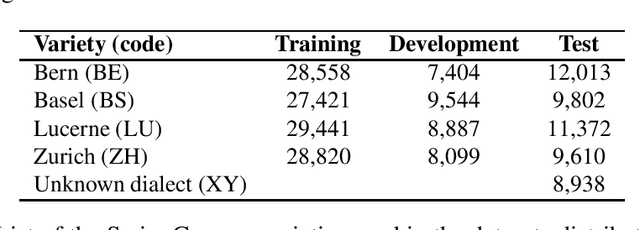

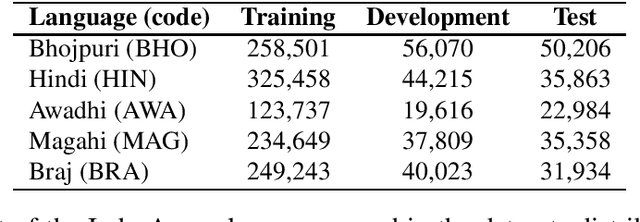

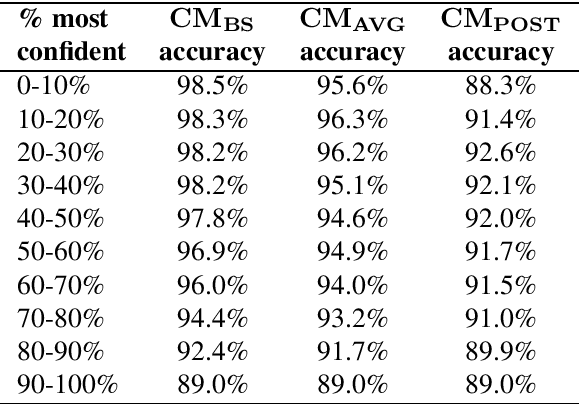

Abstract:This article describes an unsupervised language model adaptation approach that can be used to enhance the performance of language identification methods. The approach is applied to a current version of the HeLI language identification method, which is now called HeLI 2.0. We describe the HeLI 2.0 method in detail. The resulting system is evaluated using the datasets from the German dialect identification and Indo-Aryan language identification shared tasks of the VarDial workshops 2017 and 2018. The new approach with language identification provides considerably higher F1-scores than the previous HeLI method or the other systems which participated in the shared tasks. The results indicate that unsupervised language model adaptation should be considered as an option in all language identification tasks, especially in those where encountering out-of-domain data is likely.

Language and Dialect Identification of Cuneiform Texts

Mar 13, 2019

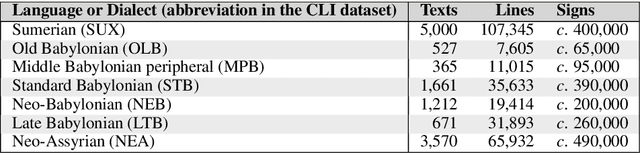

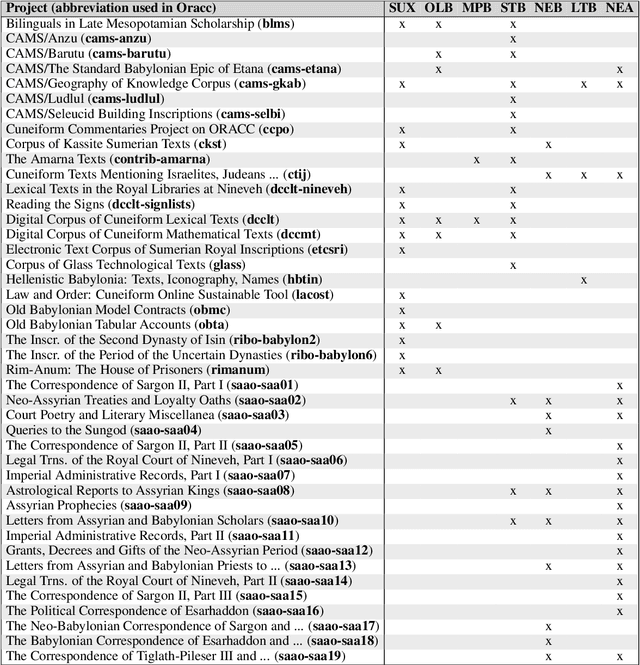

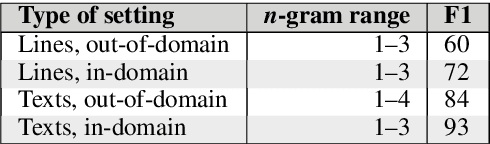

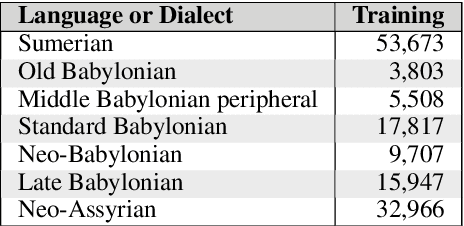

Abstract:This article introduces a corpus of cuneiform texts from which the dataset for the use of the Cuneiform Language Identification (CLI) 2019 shared task was derived as well as some preliminary language identification experiments conducted using that corpus. We also describe the CLI dataset and how it was derived from the corpus. In addition, we provide some baseline language identification results using the CLI dataset. To the best of our knowledge, the experiments detailed here are the first time automatic language identification methods have been used on cuneiform data.

Automatic Language Identification in Texts: A Survey

Apr 22, 2018

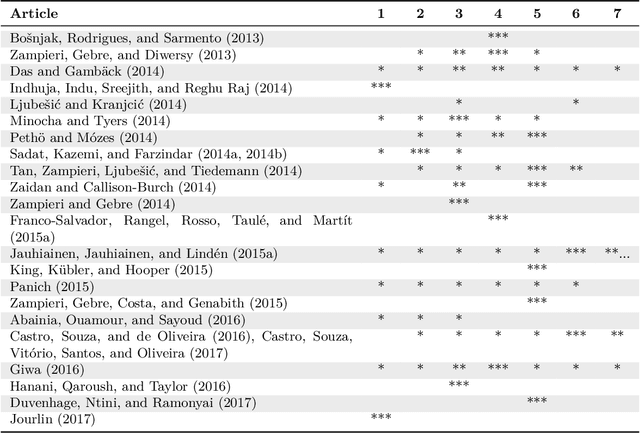

Abstract:Language identification (LI) is the problem of determining the natural language that a document or part thereof is written in. Automatic LI has been extensively researched for over fifty years. Today, LI is a key part of many text processing pipelines, as text processing techniques generally assume that the language of the input text is known. Research in this area has recently been especially active. This article provides a brief history of LI research, and an extensive survey of the features and methods used so far in the LI literature. For describing the features and methods we introduce a unified notation. We discuss evaluation methods, applications of LI, as well as off-the-shelf LI systems that do not require training by the end user. Finally, we identify open issues, survey the work to date on each issue, and propose future directions for research in LI.
