Timm Haucke

Deep in the Jungle: Towards Automating Chimpanzee Population Estimation

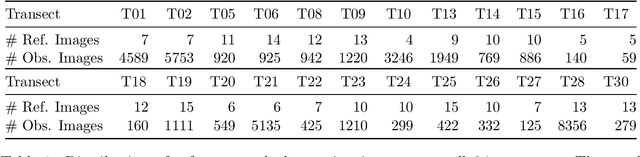

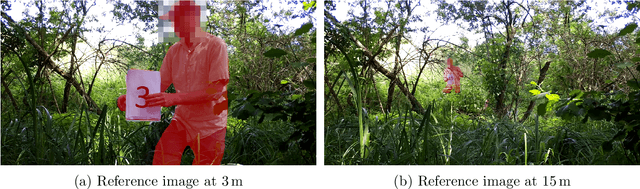

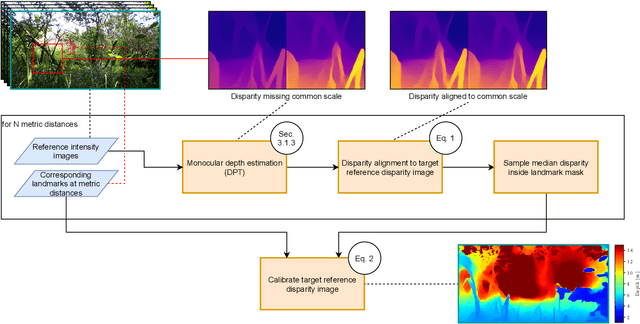

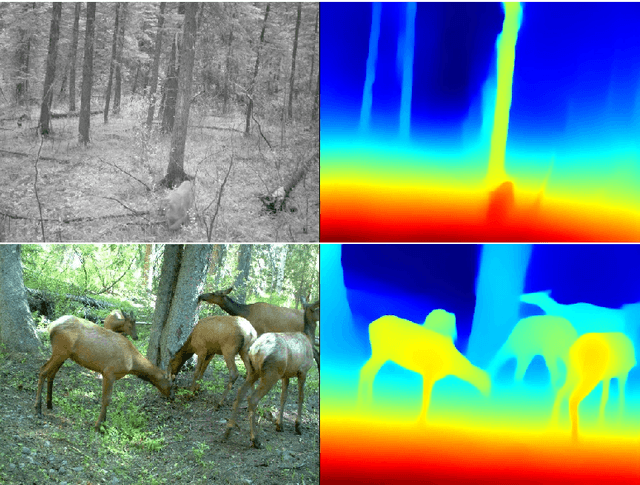

Jan 30, 2026

Abstract:The estimation of abundance and density in unmarked populations of great apes relies on statistical frameworks that require animal-to-camera distance measurements. In practice, acquiring these distances depends on labour-intensive manual interpretation of animal observations across large camera trap video corpora. This study introduces and evaluates an only sparsely explored alternative: the integration of computer vision-based monocular depth estimation (MDE) pipelines directly into ecological camera trap workflows for great ape conservation. Using a real-world dataset of 220 camera trap videos documenting a wild chimpanzee population, we combine two MDE models, Dense Prediction Transformers and Depth Anything, with multiple distance sampling strategies. These components are used to generate detection distance estimates, from which population density and abundance are inferred. Comparative analysis against manually derived ground-truth distances shows that calibrated DPT consistently outperforms Depth Anything. This advantage is observed in both distance estimation accuracy and downstream density and abundance inference. Nevertheless, both models exhibit systematic biases. We show that, given complex forest environments, they tend to overestimate detection distances and consequently underestimate density and abundance relative to conventional manual approaches. We further find that failures in animal detection across distance ranges are a primary factor limiting estimation accuracy. Overall, this work provides a case study that shows MDE-driven camera trap distance sampling is a viable and practical alternative to manual distance estimation. The proposed approach yields population estimates within 22% of those obtained using traditional methods.
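Calibrating a relative depth model against known distances is often done with a least-squares scale-and-shift fit. The sketch below illustrates that general idea only. The abstract does not describe the study's calibration, and `fit_scale_shift`, `to_metric`, and the sample values are hypothetical.

```python
def fit_scale_shift(relative, metric):
    """Least-squares fit of metric ~ scale * relative + shift (simple linear regression)."""
    n = len(relative)
    if n < 2 or n != len(metric):
        raise ValueError("need at least two paired samples")
    mean_r = sum(relative) / n
    mean_m = sum(metric) / n
    var_r = sum((r - mean_r) ** 2 for r in relative)
    if var_r == 0:
        raise ValueError("relative depths must not all be equal")
    cov = sum((r - mean_r) * (m - mean_m) for r, m in zip(relative, metric))
    scale = cov / var_r
    shift = mean_m - scale * mean_r
    return scale, shift


def to_metric(relative, scale, shift):
    """Apply the fitted affine map to new relative depth values."""
    return [scale * r + shift for r in relative]


# Hypothetical reference points: relative depth values paired with
# measured camera-to-object distances in metres.
rel_ref = [0.1, 0.4, 0.7, 1.0]
dist_ref = [2.5, 4.0, 5.5, 7.0]

scale, shift = fit_scale_shift(rel_ref, dist_ref)
calibrated = to_metric([0.5], scale, shift)
```

Some relative depth models predict inverse depth rather than depth, in which case the same fit would be made against `1 / distance` instead.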

DataS^3: Dataset Subset Selection for Specialization

Apr 22, 2025

Abstract:In many real-world machine learning (ML) applications (e.g. detecting broken bones in X-ray images, detecting species in camera traps), in practice models need to perform well on specific deployments (e.g. a specific hospital, a specific national park) rather than the domain broadly. However, deployments often have imbalanced, unique data distributions. Discrepancy between the training distribution and the deployment distribution can lead to suboptimal performance, highlighting the need to select deployment-specialized subsets from the available training data. We formalize dataset subset selection for specialization (DS3): given a training set drawn from a general distribution and a (potentially unlabeled) query set drawn from the desired deployment-specific distribution, the goal is to select a subset of the training data that optimizes deployment performance. We introduce DataS^3, the first dataset and benchmark designed specifically for the DS3 problem. DataS^3 encompasses diverse real-world application domains, each with a set of distinct deployments to specialize in. We conduct a comprehensive study evaluating algorithms from various families--including coresets, data filtering, and data curation--on DataS^3, and find that general-distribution methods consistently fail on deployment-specific tasks. Additionally, we demonstrate the existence of manually curated (deployment-specific) expert subsets that outperform training on all available data with accuracy gains of up to 51.3 percent. Our benchmark highlights the critical role of tailored dataset curation in enhancing performance and training efficiency on deployment-specific distributions, which we posit will only become more important as global, public datasets become available across domains and ML models are deployed in the real world.
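To make the DS3 problem statement concrete, here is a minimal sketch of one naive selection rule: keep the `k` training examples whose embeddings lie closest to any example in the unlabeled query set. This is an illustrative baseline, not a method from the benchmark. The function name `select_subset` and the toy embeddings are assumptions.

```python
def select_subset(train_emb, query_emb, k):
    """Return indices of the k training points nearest to the query set."""
    def sq_dist(a, b):
        return sum((x - y) ** 2 for x, y in zip(a, b))

    # Score each training point by its squared distance to the closest query point.
    scored = [
        (min(sq_dist(t, q) for q in query_emb), i)
        for i, t in enumerate(train_emb)
    ]
    scored.sort()  # ties are broken by the lower index
    return [i for _, i in scored[:k]]


# Toy 2-D embeddings: two training points near the query set, two far away.
train = [[0.0, 0.0], [10.0, 10.0], [1.0, 1.0], [9.0, 9.0]]
query = [[0.5, 0.5], [0.8, 0.2]]

chosen = select_subset(train, query, k=2)
```

In practice the embeddings would come from a feature extractor, and the selected subset would be judged by how well a model trained on it performs on the deployment.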

Pairwise Matching of Intermediate Representations for Fine-grained Explainability

Mar 28, 2025

Abstract:The differences between images belonging to fine-grained categories are often subtle and highly localized, and existing explainability techniques for deep learning models are often too diffuse to provide useful and interpretable explanations. We propose a new explainability method (PAIR-X) that leverages both intermediate model activations and backpropagated relevance scores to generate fine-grained, highly-localized pairwise visual explanations. We use animal and building re-identification (re-ID) as a primary case study of our method, and we demonstrate qualitatively improved results over a diverse set of explainability baselines on 35 public re-ID datasets. In interviews, animal re-ID experts were in unanimous agreement that PAIR-X was an improvement over existing baselines for deep model explainability, and suggested that its visualizations would be directly applicable to their work. We also propose a novel quantitative evaluation metric for our method, and demonstrate that PAIR-X visualizations appear more plausible for correct image matches than incorrect ones even when the model similarity score for the pairs is the same. By improving interpretability, PAIR-X enables humans to better distinguish correct and incorrect matches. Our code is available at: https://github.com/pairx-explains/pairx

Counting Fish with Temporal Representations of Sonar Video

Feb 07, 2025

Abstract:Accurate estimates of salmon escapement - the number of fish migrating upstream to spawn - are key data for conservation and fishery management. Existing methods for salmon counting using high-resolution imaging sonar hardware are non-invasive and compatible with computer vision processing. Prior work in this area has utilized object detection and tracking based methods for automated salmon counting. However, these techniques remain inaccessible to many sonar deployment sites due to limited compute and connectivity in the field. We propose an alternative lightweight computer vision method for fish counting based on analyzing echograms - temporal representations that compress several hundred frames of imaging sonar video into a single image. We predict upstream and downstream counts within 200-frame time windows directly from echograms using a ResNet-18 model, and propose a set of domain-specific image augmentations and a weakly-supervised training protocol to further improve results. We achieve a count error of 23% on representative data from the Kenai River in Alaska, demonstrating the feasibility of our approach.
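The echogram idea can be sketched as collapsing each sonar frame to a single column and stacking the columns over time. The abstract does not say how the frames are reduced, so taking the maximum over the beam axis is an assumption here, as are the function name `echogram` and the `frames[time][range][beam]` layout.

```python
def echogram(frames):
    """Collapse frames[t][r][b] into image[r][t] by taking the max over beams b."""
    if not frames:
        raise ValueError("need at least one frame")
    n_range = len(frames[0])
    # One column per frame: the strongest return at each range bin.
    columns = [[max(row) for row in frame] for frame in frames]  # indexed [t][r]
    # Transpose so that rows are range bins and columns are time steps.
    return [[columns[t][r] for t in range(len(frames))] for r in range(n_range)]


# Toy clip: 2 frames, 3 range bins, 2 beams.
clip = [
    [[0.1, 0.2], [0.0, 0.0], [0.3, 0.1]],
    [[0.0, 0.0], [0.9, 0.4], [0.2, 0.2]],
]

image = echogram(clip)
```

A 200-frame window would produce an image 200 columns wide, which could then be passed to an image model such as the ResNet-18 mentioned in the abstract.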

Align and Distill: Unifying and Improving Domain Adaptive Object Detection

Mar 18, 2024

Abstract:Object detectors often perform poorly on data that differs from their training set. Domain adaptive object detection (DAOD) methods have recently demonstrated strong results on addressing this challenge. Unfortunately, we identify systemic benchmarking pitfalls that call past results into question and hamper further progress: (a) Overestimation of performance due to underpowered baselines, (b) Inconsistent implementation practices preventing transparent comparisons of methods, and (c) Lack of generality due to outdated backbones and lack of diversity in benchmarks. We address these problems by introducing: (1) A unified benchmarking and implementation framework, Align and Distill (ALDI), enabling comparison of DAOD methods and supporting future development, (2) A fair and modern training and evaluation protocol for DAOD that addresses benchmarking pitfalls, (3) A new DAOD benchmark dataset, CFC-DAOD, enabling evaluation on diverse real-world data, and (4) A new method, ALDI++, that achieves state-of-the-art results by a large margin. ALDI++ outperforms the previous state-of-the-art by +3.5 AP50 on Cityscapes to Foggy Cityscapes, +5.7 AP50 on Sim10k to Cityscapes (where ours is the only method to outperform a fair baseline), and +2.0 AP50 on CFC Kenai to Channel. Our framework, dataset, and state-of-the-art method offer a critical reset for DAOD and provide a strong foundation for future research. Code and data are available: https://github.com/justinkay/aldi and https://github.com/visipedia/caltech-fish-counting.

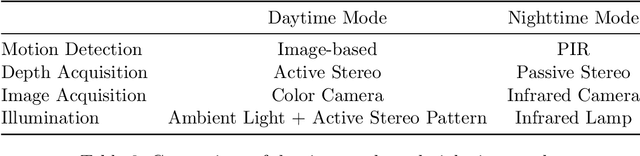

SOCRATES: A Stereo Camera Trap for Monitoring of Biodiversity

Sep 19, 2022

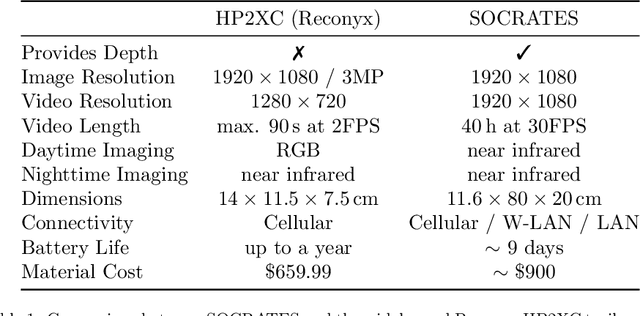

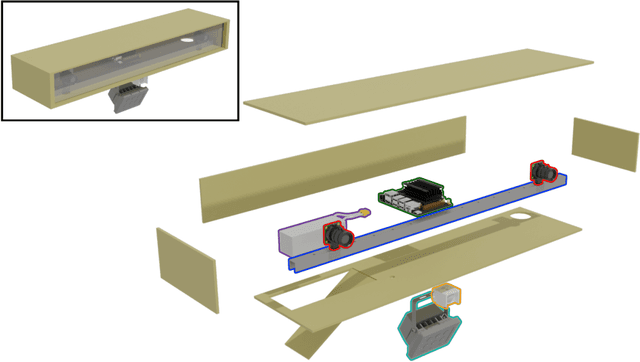

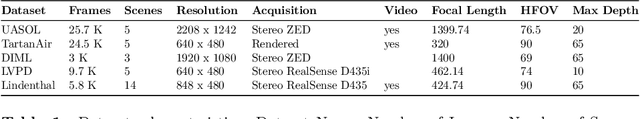

Abstract:The development and application of modern technology is an essential basis for the efficient monitoring of species in natural habitats and landscapes to trace the development of ecosystems, species communities, and populations, and to analyze reasons for changes. For estimating animal abundance using methods such as camera trap distance sampling, spatial information of natural habitats in terms of 3D (three-dimensional) measurements is crucial. Additionally, 3D information improves the accuracy of animal detection using camera trapping. This study presents a novel approach to 3D camera trapping featuring highly optimized hardware and software. This approach employs stereo vision to infer 3D information of natural habitats and is designated as StereO CameRA Trap for monitoring of biodivErSity (SOCRATES). A comprehensive evaluation of SOCRATES shows not only a $3.23\%$ improvement in animal detection (bounding box $\text{mAP}_{75}$) but also its superior applicability for estimating animal abundance using camera trap distance sampling. The software and documentation of SOCRATES are provided at https://github.com/timmh/socrates

Distance Estimation and Animal Tracking for Wildlife Camera Trapping

Feb 09, 2022

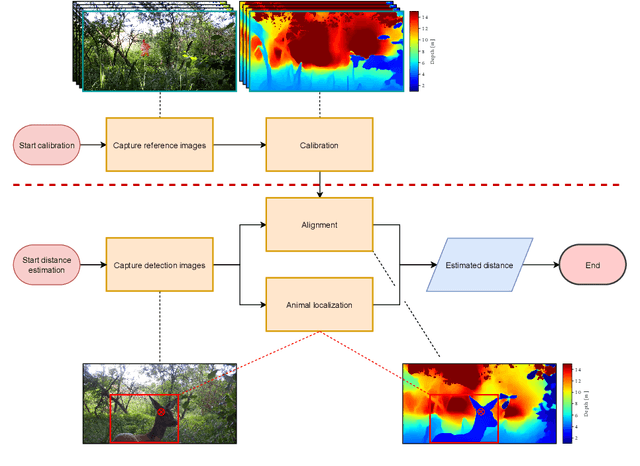

Abstract:The ongoing biodiversity crisis calls for accurate estimation of animal density and abundance to identify, for example, sources of biodiversity decline and effectiveness of conservation interventions. Camera traps together with abundance estimation methods are often employed for this purpose. The necessary distances between camera and observed animal are traditionally derived in a laborious, fully manual or semi-automatic process. Both approaches require reference image material, which is both difficult to acquire and not available for existing datasets. In this study, we propose a fully automatic approach to estimate camera-to-animal distances, based on monocular depth estimation (MDE), and without the need for reference image material. We leverage state-of-the-art relative MDE and a novel alignment procedure to estimate metric distances. We evaluate the approach on a zoo scenario dataset unseen during training. We achieve a mean absolute distance estimation error of only 0.9864 meters at a precision of 90.3% and recall of 63.8%, while completely eliminating the previously required manual effort for biodiversity researchers. The code will be made available.

Overcoming the Distance Estimation Bottleneck in Camera Trap Distance Sampling

May 10, 2021

Abstract:The biodiversity crisis is still accelerating. Estimating animal abundance is of critical importance to assess, for example, the consequences of land-use change and invasive species on species composition, or the effectiveness of conservation interventions. Camera trap distance sampling (CTDS) is a recently developed monitoring method providing reliable estimates of wildlife population density and abundance. However, in current applications of CTDS, the required camera-to-animal distance measurements are derived by laborious, manual and subjective estimation methods. To overcome this distance estimation bottleneck in CTDS, this study proposes a completely automated workflow utilizing state-of-the-art methods of image processing and pattern recognition.

Exploiting Depth Information for Wildlife Monitoring

Feb 10, 2021

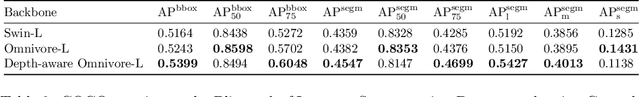

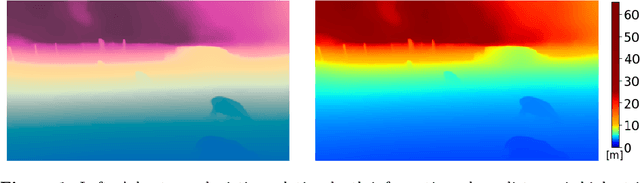

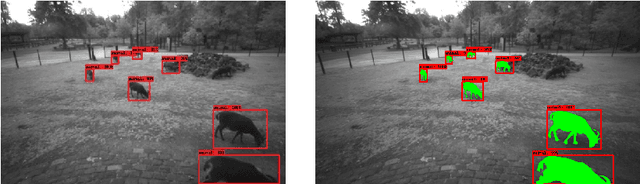

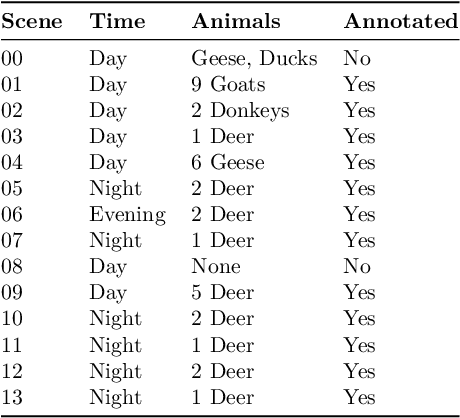

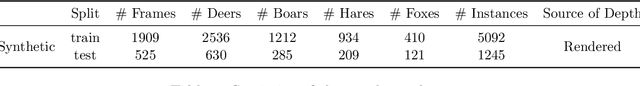

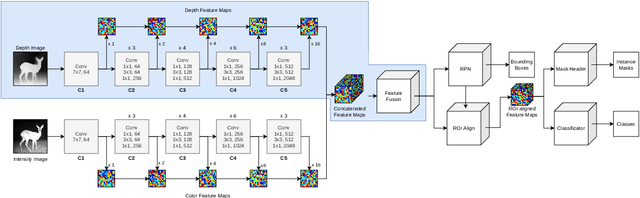

Abstract:Camera traps are a proven tool in biology and specifically biodiversity research. However, camera traps including depth estimation are not widely deployed, despite providing valuable context about the scene and facilitating the automation of previously laborious manual ecological methods. In this study, we propose an automated camera trap-based approach to detect and identify animals using depth estimation. To detect and identify individual animals, we propose a novel method, D-Mask R-CNN, for instance segmentation, a deep learning-based technique that detects and delineates each distinct object of interest appearing in an image or a video clip. An experimental evaluation shows the benefit of the additional depth estimation in terms of improved average precision scores of the animal detection compared to the standard approach that relies just on the image information. This novel approach was also evaluated as a proof of concept in a zoo scenario using an RGB-D camera trap.
