Levi Cai

A Roadmap for Climate-Relevant Robotics Research

Jul 15, 2025

Abstract: Climate change is one of the defining challenges of the 21st century, and many in the robotics community are looking for ways to contribute. This paper presents a roadmap for climate-relevant robotics research, identifying high-impact opportunities for collaboration between roboticists and experts across climate domains such as energy, the built environment, transportation, industry, land use, and Earth sciences. These applications include problems such as energy systems optimization, construction, precision agriculture, building envelope retrofits, autonomous trucking, and large-scale environmental monitoring. Critically, we include opportunities to apply not only physical robots but also the broader robotics toolkit (including planning, perception, control, and estimation algorithms) to climate-relevant problems. A central goal of this roadmap is to inspire new research directions and collaboration by highlighting specific, actionable problems at the intersection of robotics and climate. This work represents a collaboration between robotics researchers and domain experts in various climate disciplines, and it serves as an invitation to the robotics community to bring their expertise to bear on urgent climate priorities.

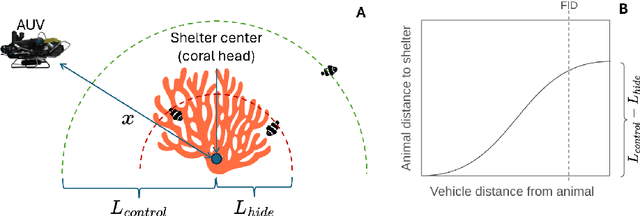

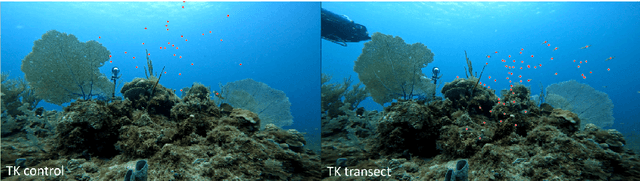

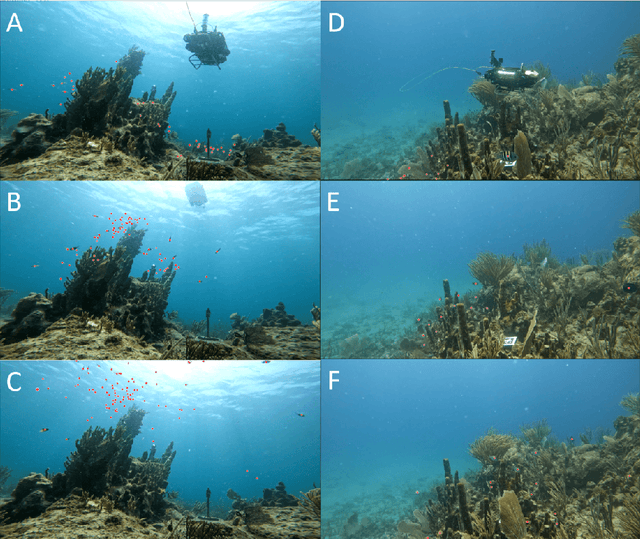

Measuring and Minimizing Disturbance of Marine Animals to Underwater Vehicles

Jun 12, 2025

Abstract: Do fish respond to the presence of underwater vehicles, potentially biasing our estimates about them? If so, are there strategies to measure and mitigate this response? This work provides a theoretical and practical framework towards bias-free estimation of animal behavior from underwater vehicle observations. We also provide preliminary results from the field in coral reef environments to address these questions.

DataS^3: Dataset Subset Selection for Specialization

Apr 22, 2025

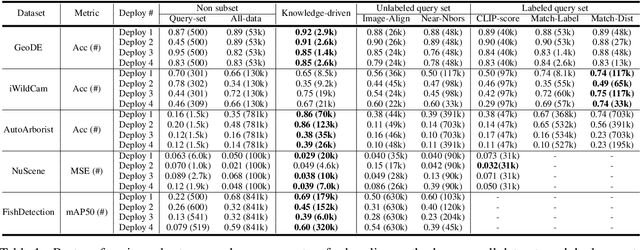

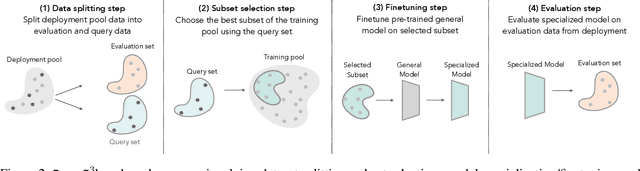

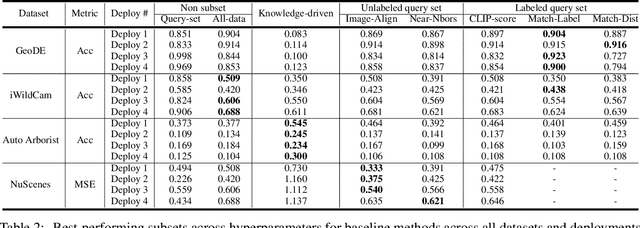

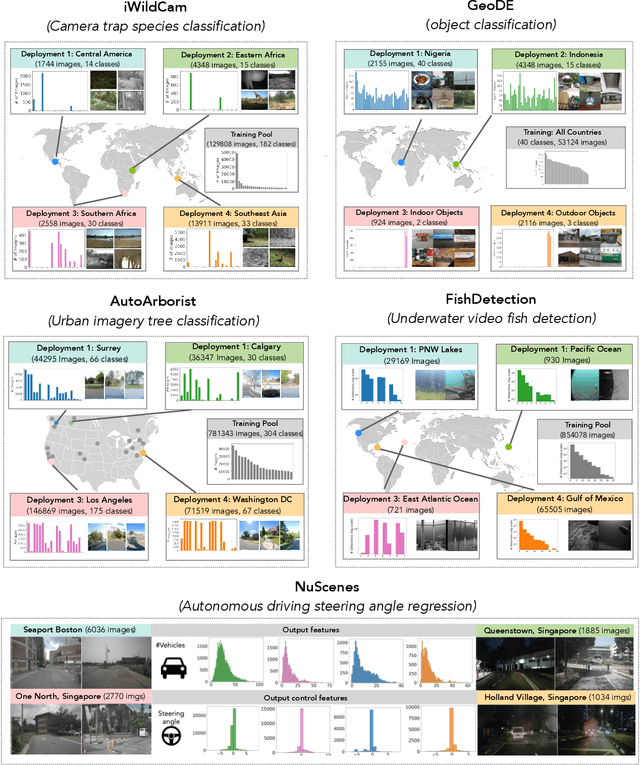

Abstract: In many real-world machine learning (ML) applications (e.g., detecting broken bones in x-ray images, detecting species in camera traps), models need to perform well on specific deployments (e.g., a specific hospital, a specific national park) rather than on the domain broadly. However, deployments often have imbalanced, unique data distributions. Discrepancy between the training distribution and the deployment distribution can lead to suboptimal performance, highlighting the need to select deployment-specialized subsets from the available training data. We formalize dataset subset selection for specialization (DS3): given a training set drawn from a general distribution and a (potentially unlabeled) query set drawn from the desired deployment-specific distribution, the goal is to select a subset of the training data that optimizes deployment performance. We introduce DataS^3, the first dataset and benchmark designed specifically for the DS3 problem. DataS^3 encompasses diverse real-world application domains, each with a set of distinct deployments to specialize in. We conduct a comprehensive study evaluating algorithms from various families (including coresets, data filtering, and data curation) on DataS^3, and find that general-distribution methods consistently fail on deployment-specific tasks. Additionally, we demonstrate the existence of manually curated (deployment-specific) expert subsets that outperform training on all available data with accuracy gains up to 51.3 percent. Our benchmark highlights the critical role of tailored dataset curation in enhancing performance and training efficiency on deployment-specific distributions, which we posit will only become more important as global, public datasets become available across domains and ML models are deployed in the real world.
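To make the DS3 problem statement concrete, the following is a minimal sketch of one naive selection strategy: keep every training example that is among the k nearest neighbours of some query example in an embedding space. This is not a method from the paper or its benchmark. The function name `select_subset`, the parameter `k`, and the toy 2-D embeddings are all assumptions made for illustration.

```python
import numpy as np


def select_subset(train_emb, query_emb, k=2):
    """Hypothetical baseline: return the sorted indices of training points
    that are among the k nearest (Euclidean) neighbours of any query point."""
    # Pairwise squared distances, shape (n_query, n_train).
    diff = query_emb[:, None, :] - train_emb[None, :, :]
    dist = np.sum(diff ** 2, axis=-1)
    # Never ask for more neighbours than there are training points.
    k = min(k, train_emb.shape[0])
    # Indices of the k closest training points for each query point.
    nearest = np.argsort(dist, axis=1)[:, :k]
    # Union over all query points, de-duplicated and sorted.
    return np.unique(nearest)


# Toy "general" training pool with three clusters (assumed data).
train_emb = np.array(
    [[0.0, 0.0], [0.1, 0.0], [5.0, 5.0], [5.1, 5.0], [10.0, 10.0]]
)
# Unlabeled query set drawn near the middle cluster (assumed data).
query_emb = np.array([[5.0, 5.1], [5.2, 5.0]])

subset = select_subset(train_emb, query_emb, k=2)
print(subset.tolist())
```

In this toy case only the two training points near the query cluster are kept. A real pipeline would then train on the selected subset and measure accuracy on held-out deployment data, which is the quantity the DS3 formulation asks to optimize.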

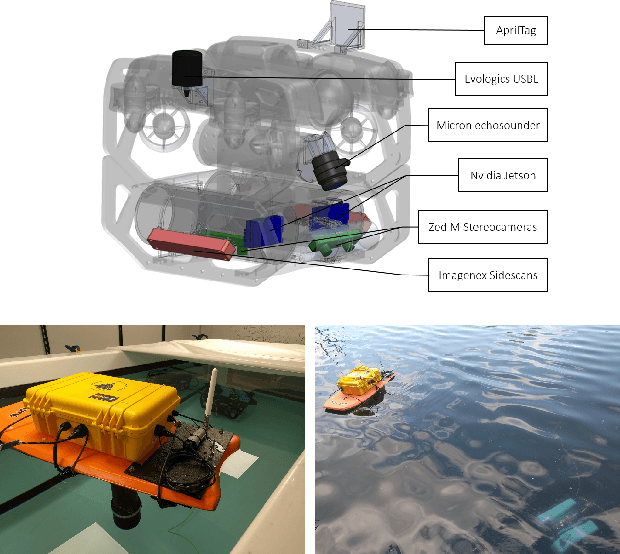

ReefGlider: A highly maneuverable vectored buoyancy engine based underwater robot

May 09, 2024

Abstract: There exists a capability gap in the design of currently available autonomous underwater vehicles (AUVs). Most AUVs use a set of thrusters, and optionally control surfaces, to control their depth and pose. AUVs utilizing thrusters can be highly maneuverable, making them well-suited to operate in complex environments such as in close proximity to coral reefs. However, they are inherently power-inefficient and produce significant noise and disturbance. Underwater gliders, on the other hand, use changes in buoyancy and center of mass, in combination with a control surface, to move around. They are extremely power efficient but not very maneuverable. Gliders are designed for long-range missions that do not require precision maneuvering. Furthermore, since gliders only activate the buoyancy engine for small time intervals, they do not disturb the environment and can also be used for passive acoustic observations. In this paper we present ReefGlider, a novel AUV that uses only buoyancy for control but is still highly maneuverable thanks to additional buoyancy control devices. ReefGlider bridges the gap between the capabilities of thruster-driven AUVs and gliders. These combined characteristics make ReefGlider ideal for tasks such as long-term visual and acoustic monitoring of coral reefs. We present the overall design and implementation of the system, as well as provide analysis of some of its capabilities.

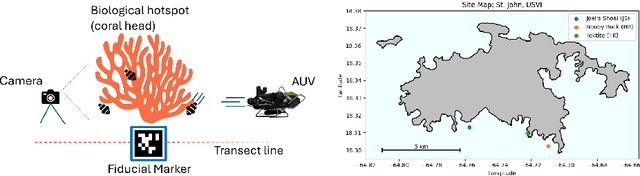

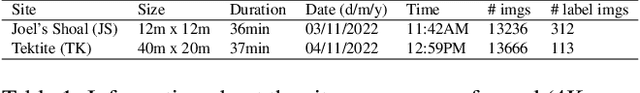

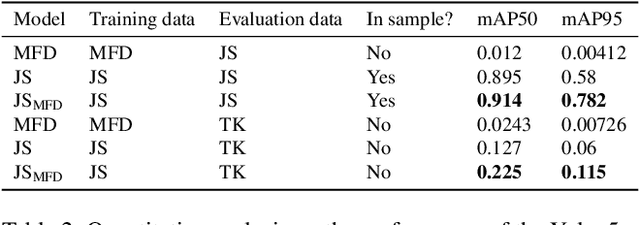

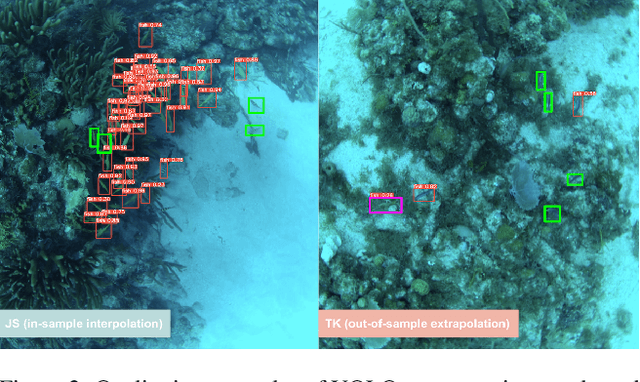

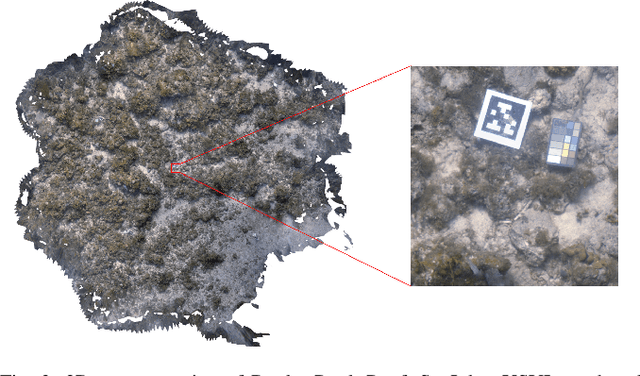

Biological Hotspot Mapping in Coral Reefs with Robotic Visual Surveys

May 03, 2023

Abstract: Coral reefs are fast-changing and complex ecosystems that are crucial to monitor and study. Biological hotspot detection can help coral reef managers prioritize limited resources for monitoring and intervention tasks. Here, we explore the use of autonomous underwater vehicles (AUVs) with cameras, coupled with visual detectors and photogrammetry, to map and identify these hotspots. This approach can provide high spatial resolution information in fast feedback cycles. To the best of our knowledge, we present one of the first attempts at using an AUV to gather visually-observed, fine-grain biological hotspot maps in concert with the topography of a coral reef. Our hotspot maps correlate with rugosity, an established proxy metric for coral reef biodiversity and abundance, as well as with our visual inspections of the 3D reconstruction. We also investigate issues of scaling this approach when applied to new reefs by using these visual detectors pre-trained on large public datasets.

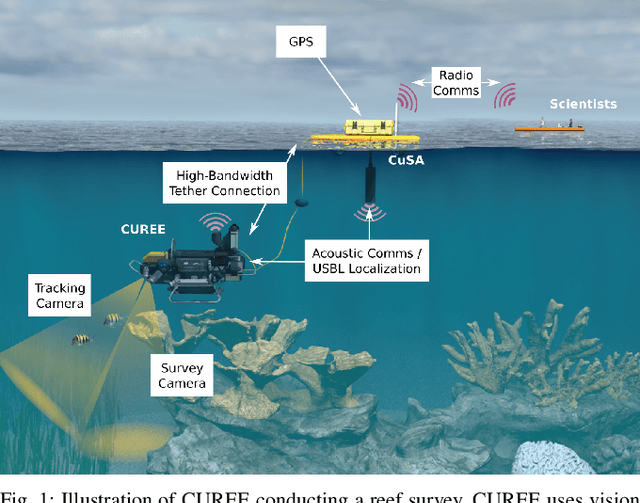

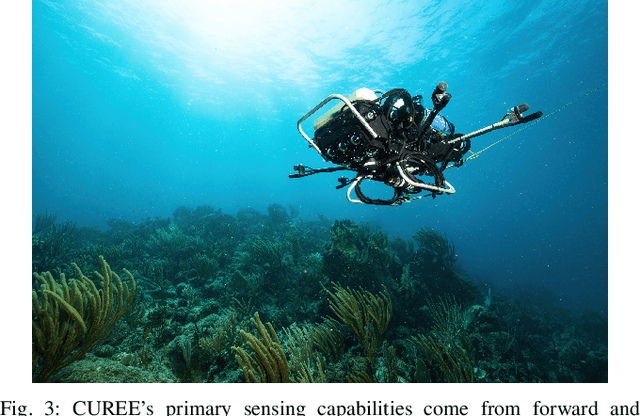

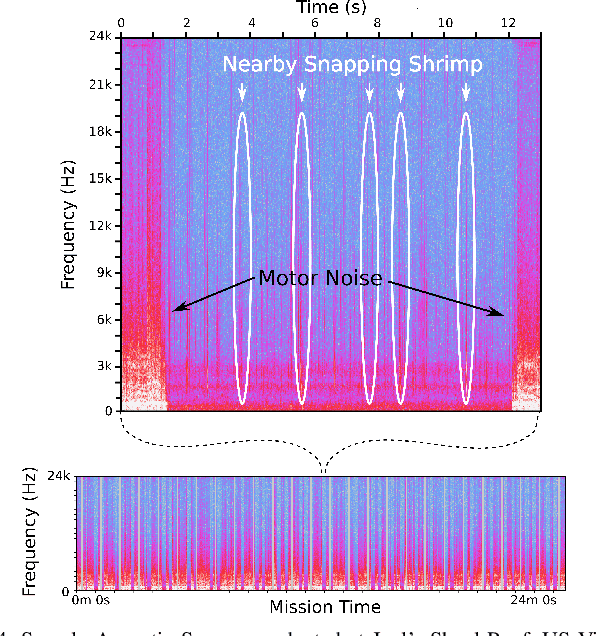

CUREE: A Curious Underwater Robot for Ecosystem Exploration

Mar 07, 2023

Abstract: The current approach to exploring and monitoring complex underwater ecosystems, such as coral reefs, is to conduct surveys using diver-held or static cameras, or to deploy sensor buoys. These approaches often fail to capture the full variation and complexity of interactions between different reef organisms and their habitat. The CUREE platform presented in this paper provides a unique set of capabilities in the form of robot behaviors and perception algorithms to enable scientists to explore different aspects of an ecosystem. Examples of these capabilities include low-altitude visual surveys, soundscape surveys, habitat characterization, and animal following. We demonstrate these capabilities by describing two field deployments on coral reefs in the US Virgin Islands. In the first deployment, we show that CUREE can identify the preferred habitat type of snapping shrimp in a reef through a combination of a visual survey, habitat characterization, and a soundscape survey. In the second deployment, we demonstrate CUREE's ability to follow arbitrary animals by separately following a barracuda and stingray for several minutes each in midwater and benthic environments, respectively.

* 7 pages

Semi-Supervised Visual Tracking of Marine Animals using Autonomous Underwater Vehicles

Feb 14, 2023

Abstract: In-situ visual observations of marine organisms are crucial to developing an understanding of their behaviour and their relationship to the surrounding ecosystem. Typically, these observations are collected via divers, tags, and remotely-operated or human-piloted vehicles. Recently, however, autonomous underwater vehicles equipped with cameras and embedded computers with GPU capabilities are being developed for a variety of applications, and in particular, can be used to supplement these existing data collection mechanisms where human operation or tags are more difficult. Existing approaches have focused on using fully-supervised tracking methods, but labelled data for many underwater species are severely lacking. Semi-supervised trackers may offer alternative tracking solutions because they require less data than fully-supervised counterparts. However, because there are no existing realistic underwater tracking datasets, the performance of semi-supervised tracking algorithms in the marine domain is not well understood. To better evaluate their performance and utility, in this paper we provide (1) a novel dataset specific to marine animals located at http://warp.whoi.edu/vmat/, (2) an evaluation of state-of-the-art semi-supervised algorithms in the context of underwater animal tracking, and (3) an evaluation of real-world performance through demonstrations using a semi-supervised algorithm on-board an autonomous underwater vehicle to track marine animals in the wild.

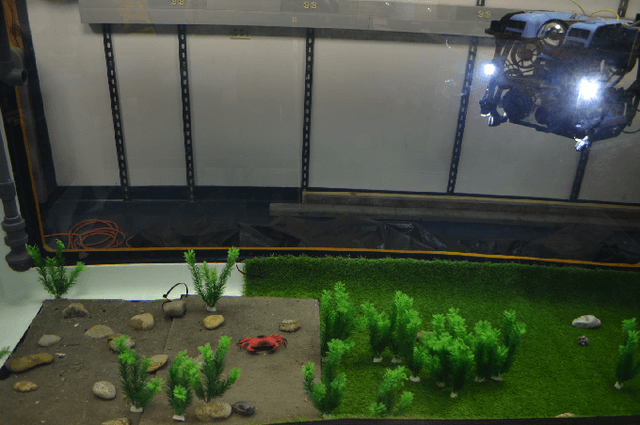

Streaming Scene Maps for Co-Robotic Exploration in Bandwidth Limited Environments

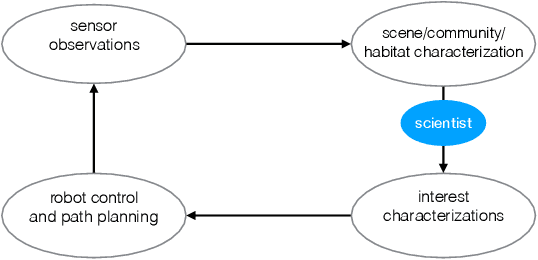

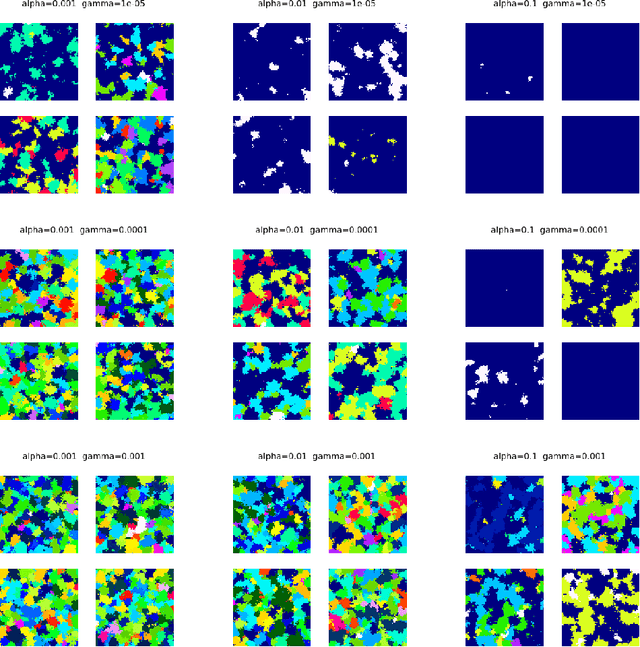

Mar 07, 2019

Abstract: This paper proposes a bandwidth-tunable technique for real-time probabilistic scene modeling and mapping to enable co-robotic exploration in communication-constrained environments such as the deep sea. The parameters of the system enable the user to characterize the scene complexity represented by the map, which in turn determines the bandwidth requirements. The approach is demonstrated using an underwater robot that learns an unsupervised scene model of the environment and then uses this scene model to communicate the spatial distribution of various high-level semantic scene constructs to a human operator. Preliminary experiments in an artificially constructed tank environment as well as simulated missions over a 10m$\times$10m coral reef using real data show the tunability of the maps to different bandwidth constraints and science interests. To our knowledge, this is the first paper to quantify how the free parameters of the unsupervised scene model impact both the scientific utility of the resulting scene model and the bandwidth required to communicate it.
