Tieru Wu

ViRC: Enhancing Visual Interleaved Mathematical CoT with Reason Chunking

Dec 17, 2025

Abstract:CoT has significantly enhanced the reasoning ability of LLMs, but it faces challenges when extended to multimodal domains, particularly in mathematical tasks. Existing MLLMs typically perform textual reasoning solely from a single static mathematical image, overlooking dynamic visual acquisition during reasoning. In contrast, humans repeatedly examine visual images and employ step-by-step reasoning to prove intermediate propositions. This strategy of decomposing the problem-solving process into key logical nodes adheres to Miller's Law in cognitive science. Inspired by this insight, we propose a ViRC framework for multimodal mathematical tasks, introducing a Reason Chunking mechanism that structures multimodal mathematical CoT into consecutive Critical Reasoning Units (CRUs) to simulate human expert problem-solving patterns. CRUs ensure intra-unit textual coherence for intermediate proposition verification while integrating visual information across units to generate subsequent propositions and support structured reasoning. To this end, we present the CRUX dataset, which uses three visual tools and four reasoning patterns to provide explicitly annotated CRUs across multiple reasoning paths for each mathematical problem. Leveraging the CRUX dataset, we propose a progressive training strategy inspired by human cognitive learning, which includes Instructional SFT, Practice SFT, and Strategic RL, aimed at further strengthening the Reason Chunking ability of the model. The resulting ViRC-7B model achieves an 18.8% average improvement over baselines across multiple mathematical benchmarks. Code is available at https://github.com/Leon-LihongWang/ViRC.

Generally-Occurring Model Change for Robust Counterfactual Explanations

Jul 16, 2024

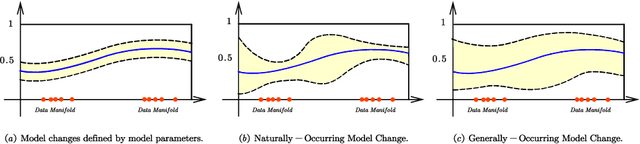

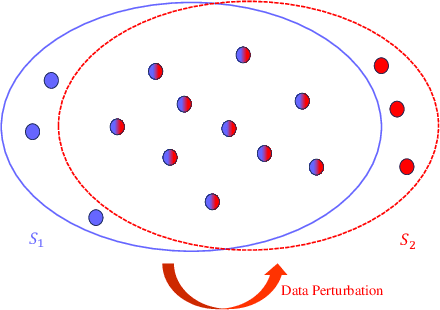

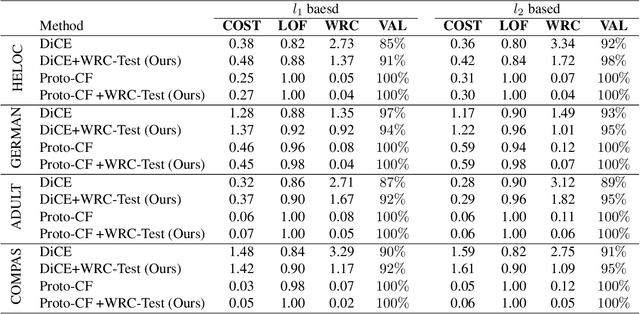

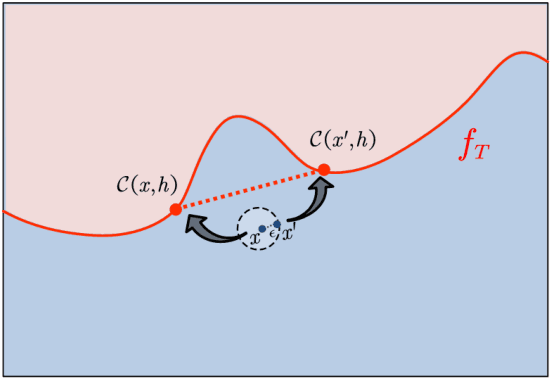

Abstract:With the increasing impact of algorithmic decision-making on human lives, the interpretability of models has become a critical issue in machine learning. Counterfactual explanation is an important method in the field of interpretable machine learning, which can not only help users understand why machine learning models make specific decisions, but also help users understand how to change these decisions. Naturally, it is an important task to study the robustness of counterfactual explanation generation algorithms to model changes. Previous literature has proposed the concept of Naturally-Occurring Model Change, which has given us a deeper understanding of robustness to model change. In this paper, we first further generalize the concept of Naturally-Occurring Model Change, proposing a more general concept of model parameter changes, Generally-Occurring Model Change, which has a wider range of applicability. We also prove the corresponding probabilistic guarantees. In addition, we consider a more specific problem, data set perturbation, and give relevant theoretical results by combining optimization theory.

Enhancing Counterfactual Image Generation Using Mahalanobis Distance with Distribution Preferences in Feature Space

May 31, 2024

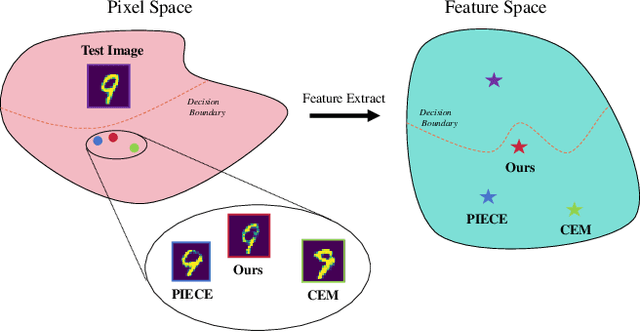

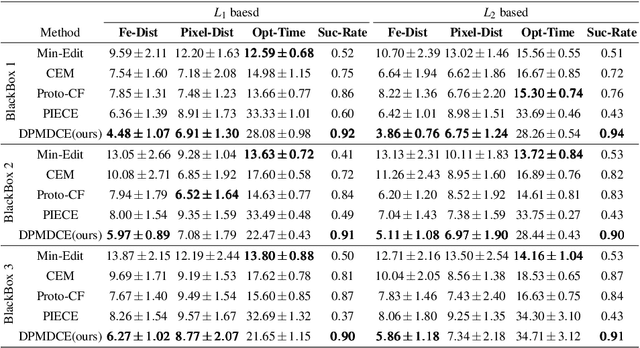

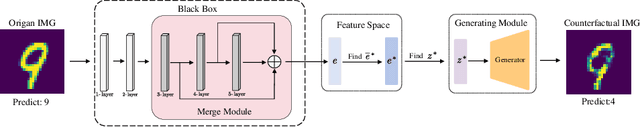

Abstract:In the realm of Artificial Intelligence (AI), the importance of Explainable Artificial Intelligence (XAI) is increasingly recognized, particularly as AI models become more integral to our lives. One notable single-instance XAI approach is counterfactual explanation, which aids users in comprehending a model's decisions and offers guidance on altering these decisions. Specifically in the context of image classification models, effective image counterfactual explanations can significantly enhance user understanding. This paper introduces a novel method for computing feature importance within the feature space of a black-box model. By employing information fusion techniques, our method maximizes the use of data to address feature counterfactual explanations in the feature space. Subsequently, we utilize an image generation model to transform these feature counterfactual explanations into image counterfactual explanations. Our experiments demonstrate that the counterfactual explanations generated by our method closely resemble the original images in both pixel and feature spaces. Additionally, our method outperforms established baselines, achieving impressive experimental results.

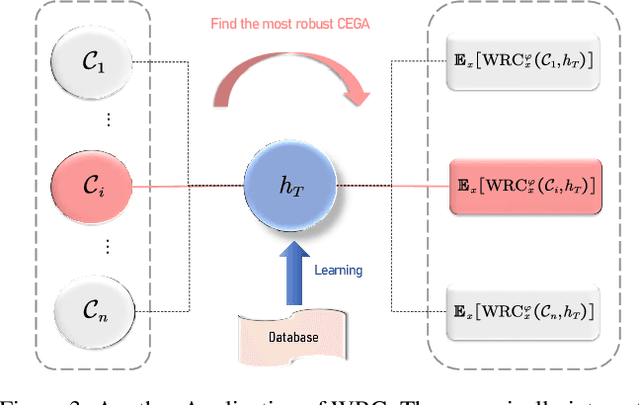

Weak Robust Compatibility Between Learning Algorithms and Counterfactual Explanation Generation Algorithms

May 31, 2024

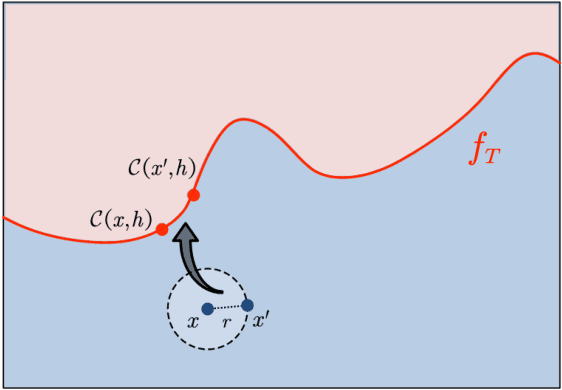

Abstract:Counterfactual explanation generation is a powerful method for Explainable Artificial Intelligence. It can help users understand why machine learning models make specific decisions, and how to change those decisions. Evaluating the robustness of counterfactual explanation algorithms is therefore crucial. Previous literature has widely studied the robustness based on the perturbation of input instances. However, the robustness defined from the perspective of perturbed instances is sometimes biased, because this definition ignores the impact of learning algorithms on robustness. In this paper, we propose a more reasonable definition, Weak Robust Compatibility, based on the perspective of explanation strength. In practice, we propose WRC-Test to help us generate more robust counterfactuals. Meanwhile, we designed experiments to verify the effectiveness of WRC-Test. Theoretically, we introduce the concepts of PAC learning theory and define the concept of PAC WRC-Approximability. Based on reasonable assumptions, we establish oracle inequalities about weak robustness, which gives a sufficient condition for PAC WRC-Approximability.

Diff3DS: Generating View-Consistent 3D Sketch via Differentiable Curve Rendering

May 24, 2024

Abstract:3D sketches are widely used for visually representing the 3D shape and structure of objects or scenes. However, creating 3D sketches often requires users to possess professional artistic skills. Existing research efforts primarily focus on enhancing the ability of interactive sketch generation in 3D virtual systems. In this work, we propose Diff3DS, a novel differentiable rendering framework for generating view-consistent 3D sketches by optimizing 3D parametric curves under various supervisions. Specifically, we perform perspective projection to render the 3D rational Bézier curves into 2D curves, which are subsequently converted to a 2D raster image via our customized differentiable rasterizer. Our framework bridges the domains of 3D sketch and raster image, achieving end-to-end optimization of 3D sketches through gradients computed in the 2D image domain. Diff3DS enables a series of novel 3D sketch generation tasks, including text-to-3D sketch and image-to-3D sketch, supported by popular distillation-based supervision such as Score Distillation Sampling (SDS). Extensive experiments have yielded promising results and demonstrated the potential of our framework.

WEITS: A Wavelet-enhanced residual framework for interpretable time series forecasting

May 17, 2024

Abstract:Time series (TS) forecasting has been an unprecedentedly popular problem in recent years, with ubiquitous applications in both scientific and business fields. Various approaches have been introduced to time series analysis, including both statistical approaches and deep neural networks. Although neural network approaches have shown stronger representation ability than statistical methods, they struggle to provide sufficient interpretability and can be too complicated to optimize. In this paper, we present WEITS, a frequency-aware deep learning framework that is highly interpretable and computationally efficient. Through multi-level wavelet decomposition, WEITS infuses frequency analysis into a deep learning framework. Combined with a forward-backward residual architecture, it enjoys both high representation capability and statistical interpretability. Extensive experiments on real-world datasets have demonstrated competitive performance of our model, along with its additional advantage of high computational efficiency. Furthermore, WEITS provides a general framework that can seamlessly integrate with state-of-the-art approaches for time series forecasting.

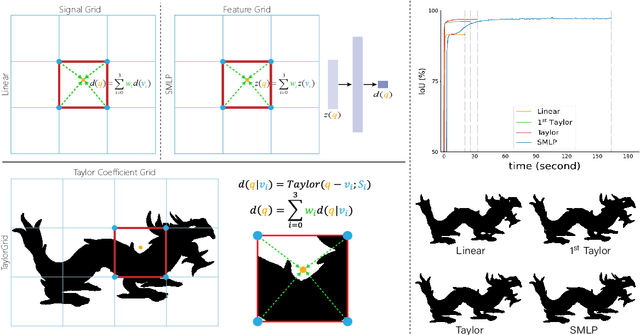

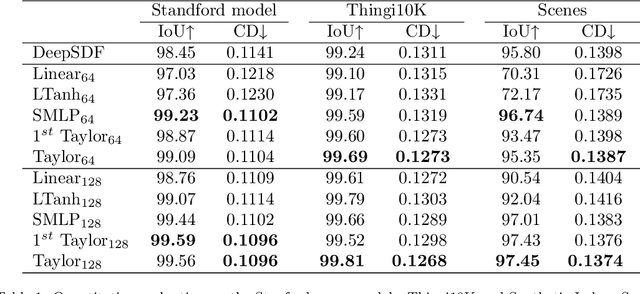

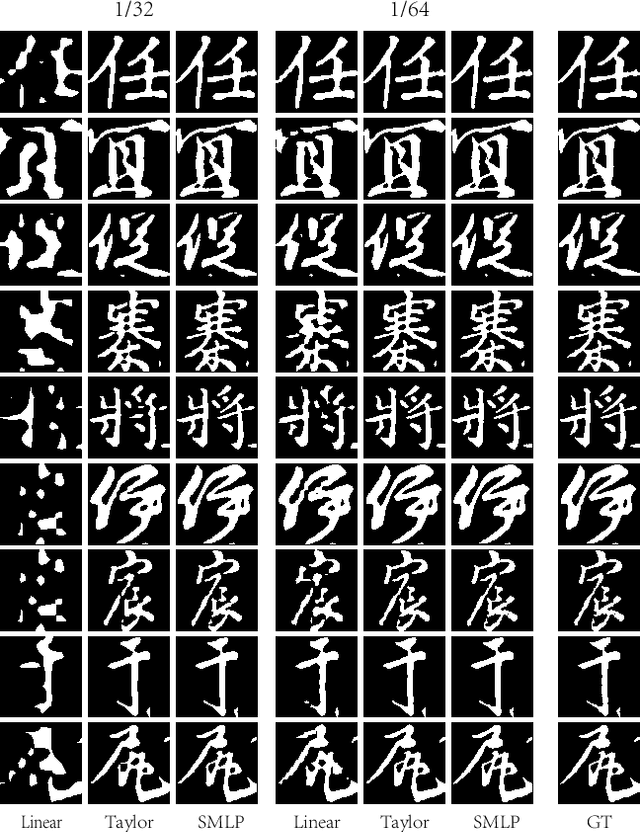

TaylorGrid: Towards Fast and High-Quality Implicit Field Learning via Direct Taylor-based Grid Optimization

Feb 22, 2024

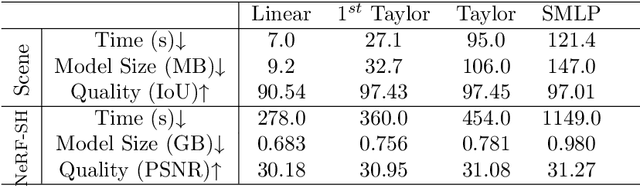

Abstract:Coordinate-based neural implicit representation or implicit fields have been widely studied for 3D geometry representation or novel view synthesis. Recently, a series of efforts have been devoted to accelerating the speed and improving the quality of the coordinate-based implicit field learning. Instead of learning heavy MLPs to predict the neural implicit values for the query coordinates, neural voxels or grids combined with shallow MLPs have been proposed to achieve high-quality implicit field learning with reduced optimization time. On the other hand, lightweight field representations such as linear grid have been proposed to further improve the learning speed. In this paper, we aim for both fast and high-quality implicit field learning, and propose TaylorGrid, a novel implicit field representation which can be efficiently computed via direct Taylor expansion optimization on 2D or 3D grids. As a general representation, TaylorGrid can be adapted to different implicit fields learning tasks such as SDF learning or NeRF. From extensive quantitative and qualitative comparisons, TaylorGrid achieves a balance between the linear grid and neural voxels, showing its superiority in fast and high-quality implicit field learning.

Mix-GENEO: A flexible filtration for multiparameter persistent homology detects digital images

Jan 09, 2024

Abstract:Two important problems in the field of Topological Data Analysis (TDA) are defining practical multifiltrations on objects and showing the ability of TDA to detect geometry. Motivated by these problems, we construct three multifiltrations named multi-GENEO, multi-DGENEO and mix-GENEO, and prove the stability of both the interleaving distance and the multiparameter persistence landscape of multi-GENEO with respect to the pseudometric of the subspace of bounded functions. We also give upper-bound estimates for multi-DGENEO and mix-GENEO. Finally, we provide experimental results on the MNIST dataset to demonstrate that our bifiltrations are able to detect geometric and topological differences of digital images.

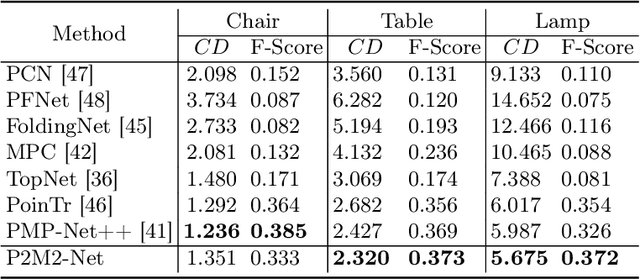

P2M2-Net: Part-Aware Prompt-Guided Multimodal Point Cloud Completion

Dec 29, 2023

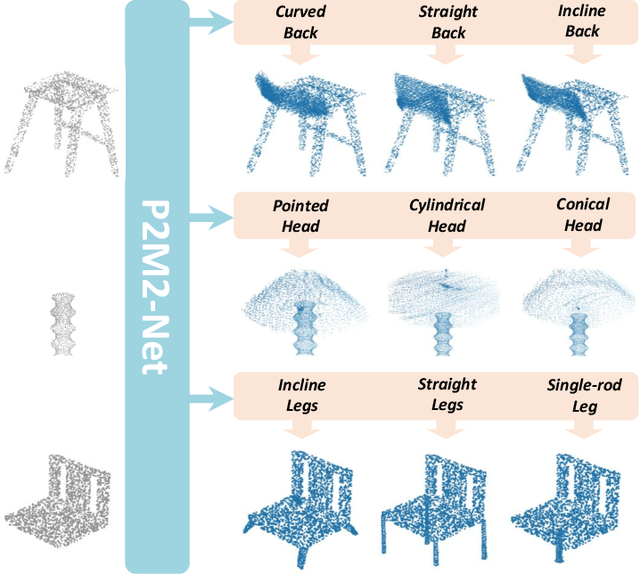

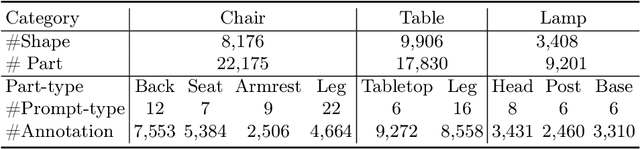

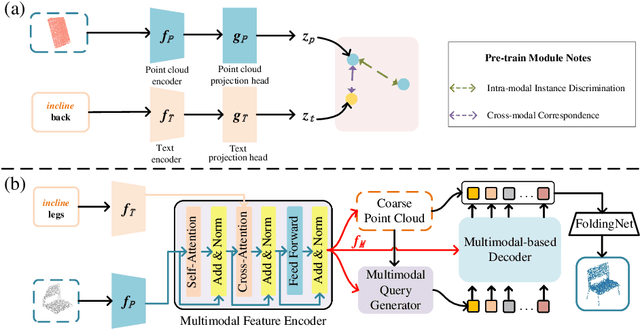

Abstract:Inferring missing regions from severely occluded point clouds is highly challenging. Especially for 3D shapes with rich geometric and structural details, the unknown parts are inherently ambiguous. Existing approaches either learn a one-to-one mapping in a supervised manner or train a generative model to synthesize the missing points for the completion of 3D point cloud shapes. These methods, however, lack controllability over the completion process, and their results are either deterministic or exhibit uncontrolled diversity. Inspired by prompt-driven data generation and editing, we propose a novel prompt-guided point cloud completion framework, coined P2M2-Net, to enable more controllable and more diverse shape completion. Given an input partial point cloud and a text prompt describing part-aware information such as the semantics and structure of the missing region, our Transformer-based completion network can efficiently fuse the multimodal features and generate diverse results following the prompt guidance. We train P2M2-Net on a new large-scale PartNet-Prompt dataset and conduct extensive experiments on two challenging shape completion benchmarks. Quantitative and qualitative results show the efficacy of incorporating prompts for more controllable part-aware point cloud completion and generation. Code and data are available at https://github.com/JLU-ICL/P2M2-Net.

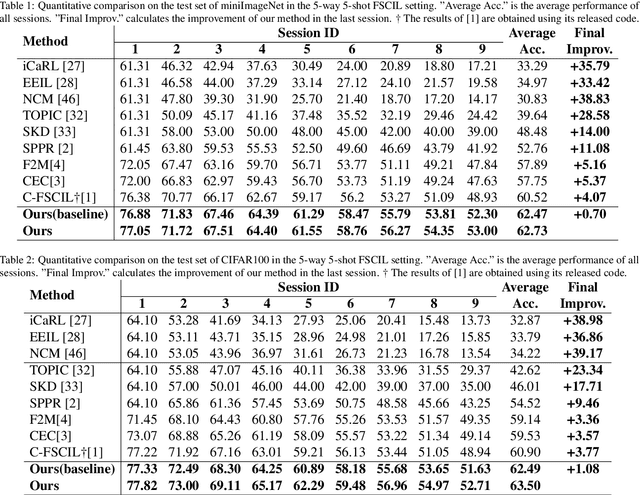

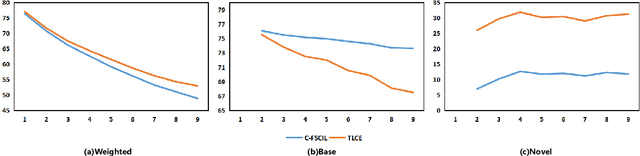

TLCE: Transfer-Learning Based Classifier Ensembles for Few-Shot Class-Incremental Learning

Dec 07, 2023

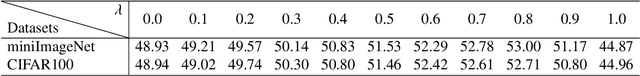

Abstract:Few-shot class-incremental learning (FSCIL) struggles to incrementally recognize novel classes from few examples without catastrophic forgetting of old classes or overfitting to new classes. We propose TLCE, which ensembles multiple pre-trained models to improve separation of novel and old classes. TLCE minimizes interference between old and new classes by mapping old class images to quasi-orthogonal prototypes using episodic training. It then ensembles diverse pre-trained models to better adapt to novel classes despite data imbalance. Extensive experiments on various datasets demonstrate that our transfer learning ensemble approach outperforms state-of-the-art FSCIL methods.
