Enhancing Counterfactual Image Generation Using Mahalanobis Distance with Distribution Preferences in Feature Space

Paper and Code

May 31, 2024

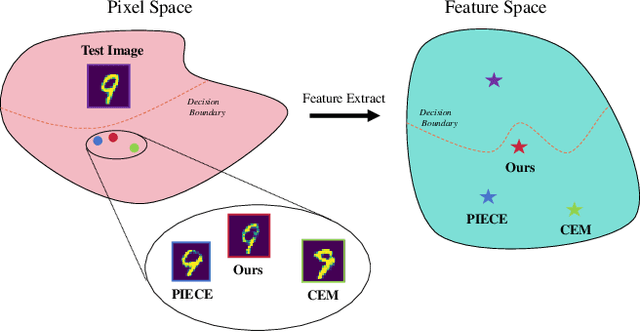

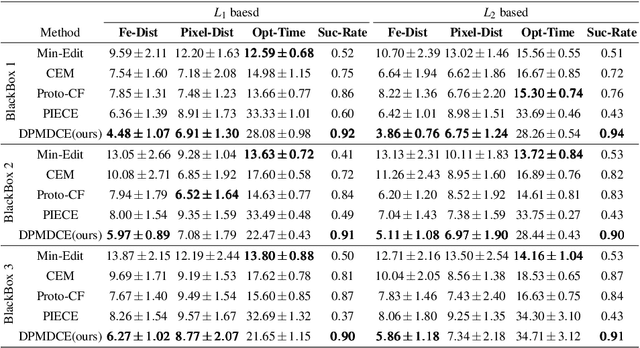

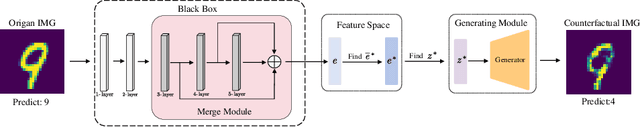

As AI models become more integral to daily life, Explainable Artificial Intelligence (XAI) is increasingly recognized as important. One notable single-instance XAI approach is counterfactual explanation, which helps users understand a model's decision and shows how the input would need to change to alter it. For image classification models in particular, effective image counterfactual explanations can significantly improve user understanding. This paper introduces a novel method for computing feature importance within the feature space of a black-box model. Using information fusion techniques, the method makes full use of the available data to compute counterfactual explanations in the feature space. An image generation model then transforms these feature counterfactual explanations into image counterfactual explanations. Our experiments demonstrate that the counterfactual explanations generated by our method closely resemble the original images in both pixel and feature spaces. The method also outperforms established baselines in these experiments.
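The title names Mahalanobis distance in feature space as the core measure, but the abstract does not spell out how it is used. The following is only a minimal sketch of the standard Mahalanobis distance, not the authors' implementation: the function name `mahalanobis_distance`, the regularization term `eps`, and the synthetic `target_feats` array are all assumptions made for illustration. It shows why the measure is distribution-aware: two points at the same Euclidean distance from the class mean can sit at very different Mahalanobis distances.

```python
import numpy as np

def mahalanobis_distance(x, samples, eps=1e-6):
    """Mahalanobis distance from feature vector x to the distribution of samples (n, d)."""
    mu = samples.mean(axis=0)
    # eps * I is an assumed regularizer that keeps the covariance invertible.
    cov = np.cov(samples, rowvar=False) + eps * np.eye(samples.shape[1])
    diff = x - mu
    # Solve cov @ z = diff instead of forming the inverse explicitly.
    z = np.linalg.solve(cov, diff)
    return float(np.sqrt(diff @ z))

rng = np.random.default_rng(0)
# Hypothetical stand-in for target-class features:
# high variance along axis 0, low variance along axis 1.
target_feats = rng.normal(size=(500, 2)) * np.array([5.0, 0.5])

a = np.array([3.0, 0.0])  # displaced along the high-variance axis
b = np.array([0.0, 3.0])  # displaced along the low-variance axis

d_a = mahalanobis_distance(a, target_feats)
d_b = mahalanobis_distance(b, target_feats)
print(f"d_a = {d_a:.3f}, d_b = {d_b:.3f}")
```

Both points have Euclidean norm 3, yet `b` lies much farther from the distribution in the Mahalanobis sense because it moves along a direction in which the class features barely vary.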
