Tianlin Xu

Neural Image Compression with a Diffusion-Based Decoder

Jan 23, 2023

Abstract: Diffusion probabilistic models have recently achieved remarkable success in generating high-quality image and video data. In this work, we build on this class of generative models and introduce a method for lossy compression of high-resolution images. The resulting codec, which we call DIffusion-based Residual Augmentation Codec (DIRAC), is the first neural codec to allow smooth traversal of the rate-distortion-perception tradeoff at test time, while obtaining competitive performance with GAN-based methods in perceptual quality. Furthermore, while sampling from diffusion probabilistic models is notoriously expensive, we show that in the compression setting the number of steps can be drastically reduced.

SPATE-GAN: Improved Generative Modeling of Dynamic Spatio-Temporal Patterns with an Autoregressive Embedding Loss

Sep 30, 2021

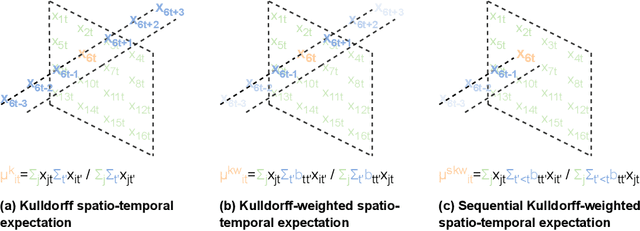

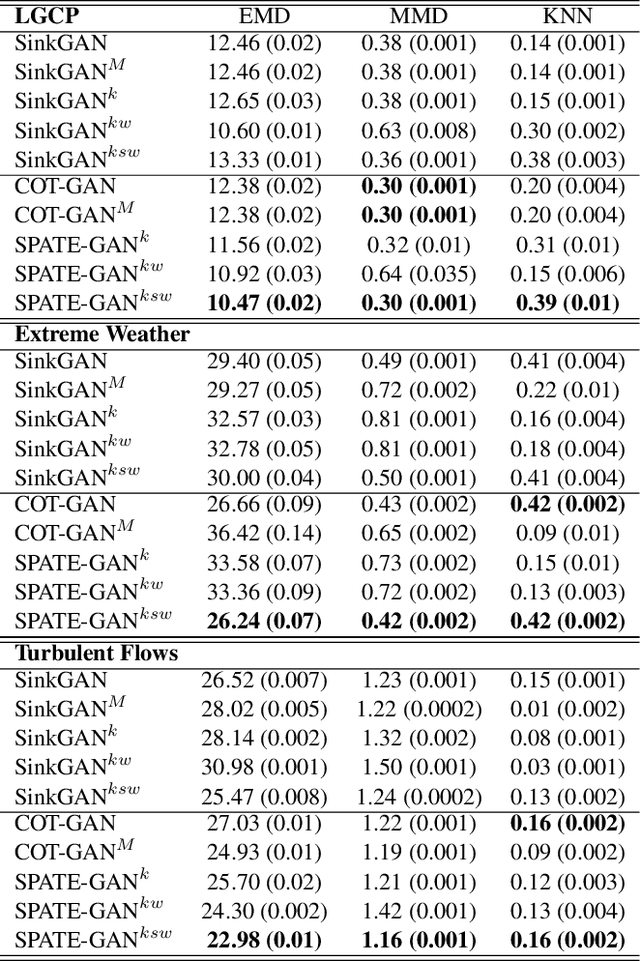

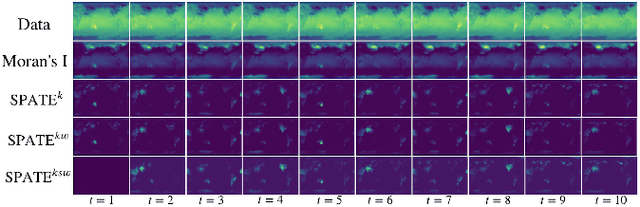

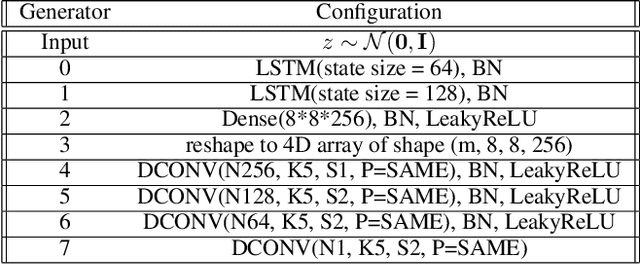

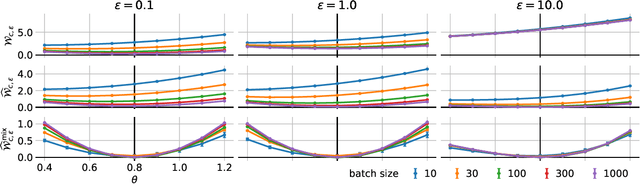

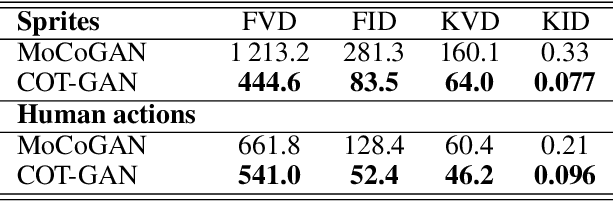

Abstract: From ecology to atmospheric sciences, many academic disciplines deal with data characterized by intricate spatio-temporal complexities, the modeling of which often requires specialized approaches. Generative models of these data are of particular interest, as they enable a range of impactful downstream applications like simulation or creating synthetic training data. Recent work has highlighted the potential of generative adversarial nets (GANs) for generating spatio-temporal data. A new GAN algorithm, COT-GAN, inspired by the theory of causal optimal transport (COT), was proposed in an attempt to better tackle this challenge. However, the task of learning more complex spatio-temporal patterns requires additional knowledge of their specific data structures. In this study, we propose a novel loss objective combined with COT-GAN based on an autoregressive embedding to reinforce the learning of spatio-temporal dynamics. We devise SPATE (spatio-temporal association), a new metric measuring spatio-temporal autocorrelation by using the deviance of observations from their expected values. We compute SPATE for real and synthetic data samples and use it to compute an embedding loss that considers space-time interactions, nudging the GAN to learn outputs that are faithful to the observed dynamics. We test this new objective on a diverse set of complex spatio-temporal patterns: turbulent flows, log-Gaussian Cox processes and global weather data. We show that our novel embedding loss improves performance without any changes to the architecture of the COT-GAN backbone, highlighting our model's increased capacity for capturing autoregressive structures. We also contextualize our work with respect to recent advances in physics-informed deep learning and interdisciplinary work connecting neural networks with geographic and geophysical sciences.

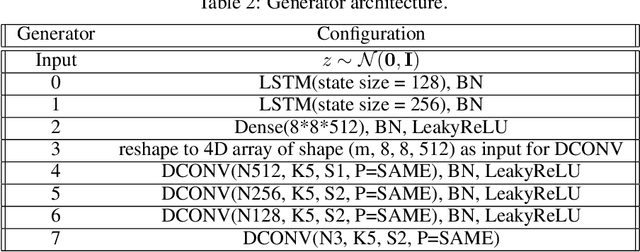

Quantized Conditional COT-GAN for Video Prediction

Jun 10, 2021

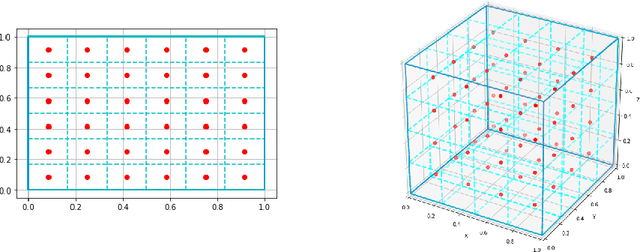

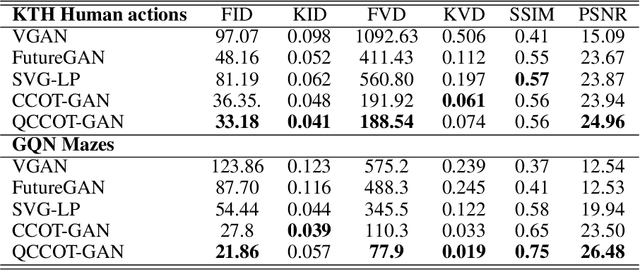

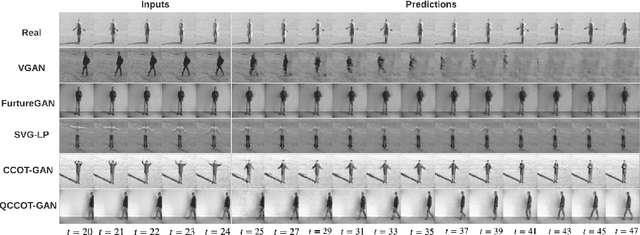

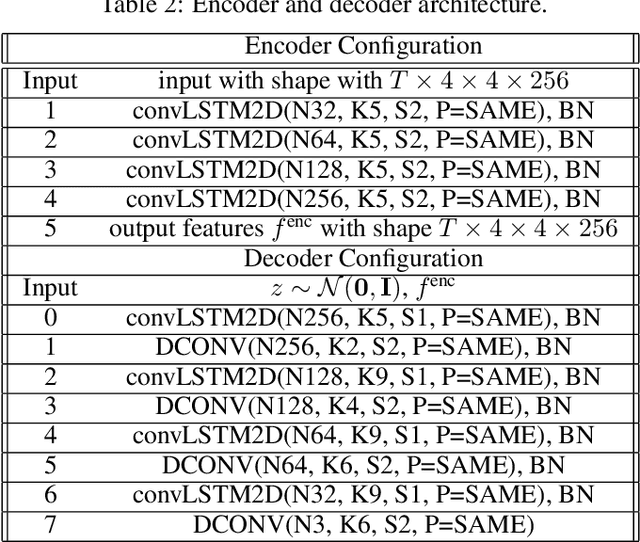

Abstract: Causal Optimal Transport (COT) results from imposing a temporal causality constraint on classic optimal transport problems, which naturally generates a new concept of distances between distributions on path spaces. The first application of the COT theory for sequential learning was given in Xu et al. (2020), where COT-GAN was introduced as an adversarial algorithm to train implicit generative models optimized for producing sequential data. Relying on Xu et al. (2020), the contribution of the present paper is twofold. First, we develop a conditional version of COT-GAN suitable for sequence prediction. This means that the dataset is now used in order to learn how a sequence will evolve given the observation of its past evolution. Second, we improve on the convergence results by working with modifications of the empirical measures via a specific type of quantization due to Backhoff et al. (2020). The resulting quantized conditional COT-GAN algorithm is illustrated with an application for video prediction.

Generative modeling of spatio-temporal weather patterns with extreme event conditioning

Apr 26, 2021

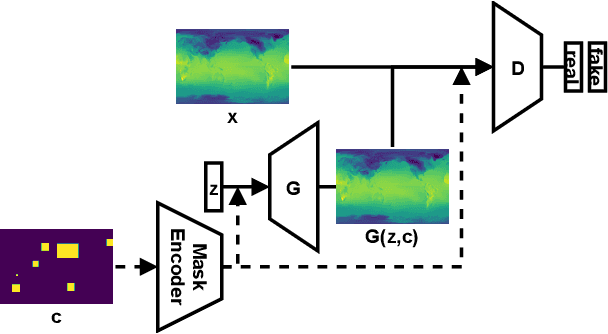

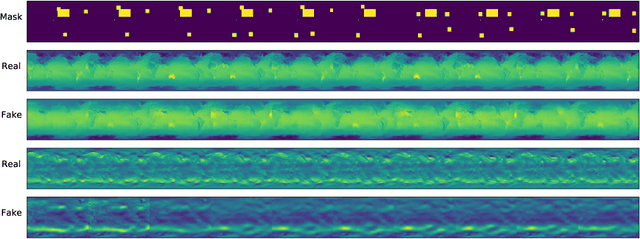

Abstract: Deep generative models are increasingly used to gain insights in the geospatial data domain, e.g., for climate data. However, most existing approaches work with temporal snapshots or assume 1D time-series; few are able to capture spatio-temporal processes simultaneously. Beyond this, Earth-systems data often exhibit highly irregular and complex patterns, for example caused by extreme weather events. Because of climate change, these phenomena are only increasing in frequency. Here, we propose a novel GAN-based approach for generating spatio-temporal weather patterns conditioned on detected extreme events. Our approach augments the GAN generator and discriminator with an encoded extreme weather event segmentation mask. These segmentation masks can be created from raw input using existing event detection frameworks. As such, our approach is highly modular and can be combined with custom GAN architectures. We highlight the applicability of our proposed approach in experiments with real-world surface radiation and zonal wind data.

COT-GAN: Generating Sequential Data via Causal Optimal Transport

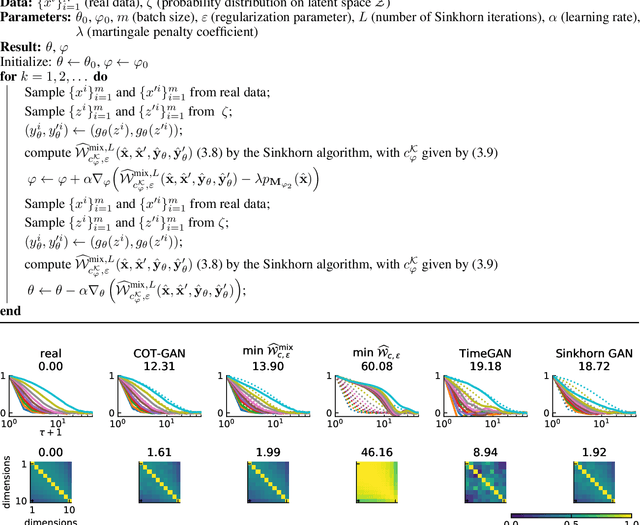

Jun 15, 2020

Abstract: We introduce COT-GAN, an adversarial algorithm to train implicit generative models optimized for producing sequential data. The loss function of this algorithm is formulated using ideas from Causal Optimal Transport (COT), which combines classic optimal transport methods with an additional temporal causality constraint. Remarkably, we find that this causality condition provides a natural framework to parameterize the cost function that is learned by the discriminator as a robust (worst-case) distance, and an ideal mechanism for learning time-dependent data distributions. Following Genevay et al. (2018), we also include an entropic penalization term which allows for the use of the Sinkhorn algorithm when computing the optimal transport cost. Our experiments show effectiveness and stability of COT-GAN when generating both low- and high-dimensional time series data. The success of the algorithm also relies on a new, improved version of the Sinkhorn divergence which demonstrates less bias in learning.
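To illustrate the role of the entropic penalization mentioned above, the following is a minimal sketch of the plain Sinkhorn iteration for two small 1-D point clouds with uniform weights. It is not the COT-GAN loss: it omits the causality constraint, the learned cost, and the improved Sinkhorn divergence. The function name `sinkhorn_cost`, the squared-distance cost, and the values of `eps` and `n_iters` are assumptions chosen for illustration.

```python
import math


def sinkhorn_cost(x, y, eps=0.1, n_iters=200):
    """Entropy-regularized transport between 1-D point clouds x and y.

    Illustrative only: uniform weights and a squared-distance ground cost
    are assumptions, not choices taken from the paper.
    """
    n, m = len(x), len(y)
    a = [1.0 / n] * n  # source weights
    b = [1.0 / m] * m  # target weights
    # Ground cost matrix and its Gibbs kernel K = exp(-C / eps).
    C = [[(xi - yj) ** 2 for yj in y] for xi in x]
    K = [[math.exp(-c / eps) for c in row] for row in C]
    u = [1.0] * n
    v = [1.0] * m
    for _ in range(n_iters):
        # Alternately rescale rows and columns to match the marginals.
        u = [a[i] / sum(K[i][j] * v[j] for j in range(m)) for i in range(n)]
        v = [b[j] / sum(K[i][j] * u[i] for i in range(n)) for j in range(m)]
    # Transport plan P_ij = u_i * K_ij * v_j and its transport cost.
    P = [[u[i] * K[i][j] * v[j] for j in range(m)] for i in range(n)]
    cost = sum(P[i][j] * C[i][j] for i in range(n) for j in range(m))
    return cost, P


cost, plan = sinkhorn_cost([0.0, 1.0, 2.0], [1.0, 2.0, 3.0])
print(cost)
```

A smaller `eps` brings the plan closer to the unregularized optimal transport plan, but the kernel entries can then underflow; practical implementations usually run the same updates in the log domain.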

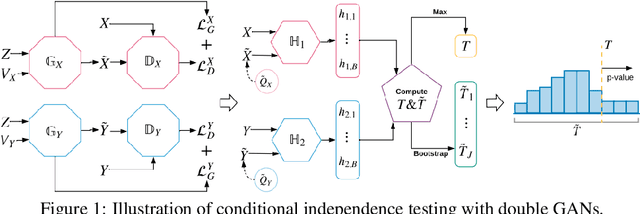

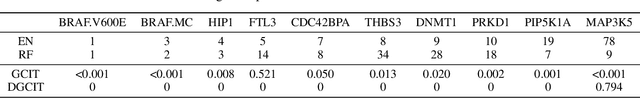

Double Generative Adversarial Networks for Conditional Independence Testing

Jun 03, 2020

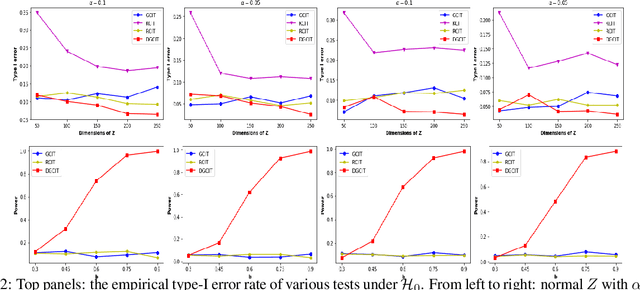

Abstract: In this article, we consider the problem of high-dimensional conditional independence testing, which is a key building block in statistics and machine learning. We propose a double generative adversarial networks (GANs)-based inference procedure. We first introduce a double GANs framework to learn two generators, and integrate the two generators to construct a doubly-robust test statistic. We next consider multiple generalized covariance measures, and take their maximum as our test statistic. Finally, we obtain the empirical distribution of our test statistic through multiplier bootstrap. We show that our test controls type-I error, while the power approaches one asymptotically. More importantly, these theoretical guarantees are obtained under much weaker and practically more feasible conditions compared to existing tests. We demonstrate the efficacy of our test through both synthetic and real datasets.
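The last two steps described above (taking a maximum over several covariance-type measures, then calibrating it with a multiplier bootstrap) can be sketched generically as follows. This is a hedged illustration of a Gaussian multiplier bootstrap for a max-type statistic, not the paper's procedure: the GAN-based construction of the residual products is omitted, and the function name `max_stat_pvalue` and the input layout `products[i][k]` are hypothetical.

```python
import math
import random


def max_stat_pvalue(products, n_boot=500, seed=0):
    """Max-type test statistic with a Gaussian multiplier bootstrap p-value.

    products[i][k] is assumed to hold the k-th residual product for sample i;
    under the null each column has mean zero. Columns must not be constant,
    otherwise the standard deviation below is zero.
    """
    rng = random.Random(seed)
    n, K = len(products), len(products[0])
    means = [sum(row[k] for row in products) / n for k in range(K)]
    sds = [
        math.sqrt(sum((row[k] - means[k]) ** 2 for row in products) / n)
        for k in range(K)
    ]
    # Observed statistic: the largest standardized covariance measure.
    observed = max(abs(math.sqrt(n) * means[k] / sds[k]) for k in range(K))
    exceed = 0
    for _ in range(n_boot):
        # One standard normal multiplier per sample, shared across all k.
        e = [rng.gauss(0.0, 1.0) for _ in range(n)]
        boot = max(
            abs(
                sum(e[i] * (products[i][k] - means[k]) for i in range(n))
                / (math.sqrt(n) * sds[k])
            )
            for k in range(K)
        )
        if boot >= observed:
            exceed += 1
    return observed, exceed / n_boot
```

Sharing the multipliers across all `K` measures preserves their dependence, which is what lets the bootstrap approximate the distribution of the maximum rather than of each measure separately.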

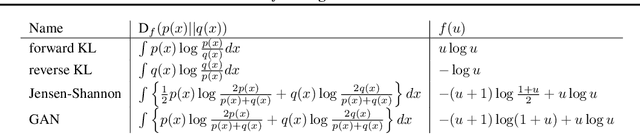

Variational f-divergence Minimization

Jul 27, 2019

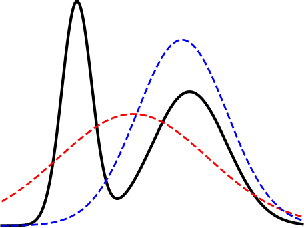

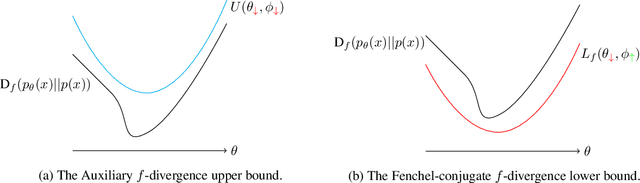

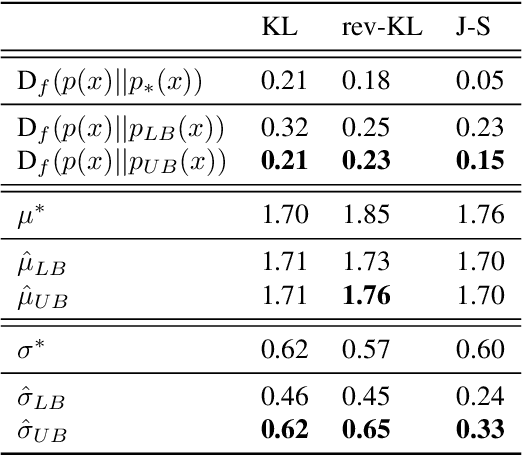

Abstract: Probabilistic models are often trained by maximum likelihood, which corresponds to minimizing a specific f-divergence between the model and data distribution. In light of recent successes in training Generative Adversarial Networks, alternative non-likelihood training criteria have been proposed. Whilst not necessarily statistically efficient, these alternatives may better match user requirements such as sharp image generation. A general variational method for training probabilistic latent variable models using maximum likelihood is well established; however, how to train latent variable models using other f-divergences is comparatively unknown. We discuss a variational approach that, when combined with the recently introduced Spread Divergence, can be applied to train a large class of latent variable models using any f-divergence.
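For readers unfamiliar with the correspondence stated in the first sentence, it can be written out as follows. The notation is an assumption for illustration: $p$ denotes the data distribution, $q_\theta$ the model, and $f$ a convex function with $f(1) = 0$; the abstract itself does not fix these symbols.

```latex
% f-divergence between the data distribution p and the model q_\theta
D_f(p \,\|\, q_\theta) = \int q_\theta(x)\, f\!\left(\frac{p(x)}{q_\theta(x)}\right) dx

% The choice f(t) = t \log t recovers the Kullback-Leibler divergence
D_f(p \,\|\, q_\theta) = \int p(x) \log \frac{p(x)}{q_\theta(x)}\, dx
                       = \mathrm{KL}(p \,\|\, q_\theta)

% The entropy term of p does not depend on \theta, so
\arg\min_\theta \mathrm{KL}(p \,\|\, q_\theta)
  = \arg\max_\theta \mathbb{E}_{p(x)}\!\left[\log q_\theta(x)\right]
```

Other choices of $f$ give other training criteria; for example, $f(t) = -\log t$ yields the reverse divergence $\mathrm{KL}(q_\theta \,\|\, p)$.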
