Suman Datta

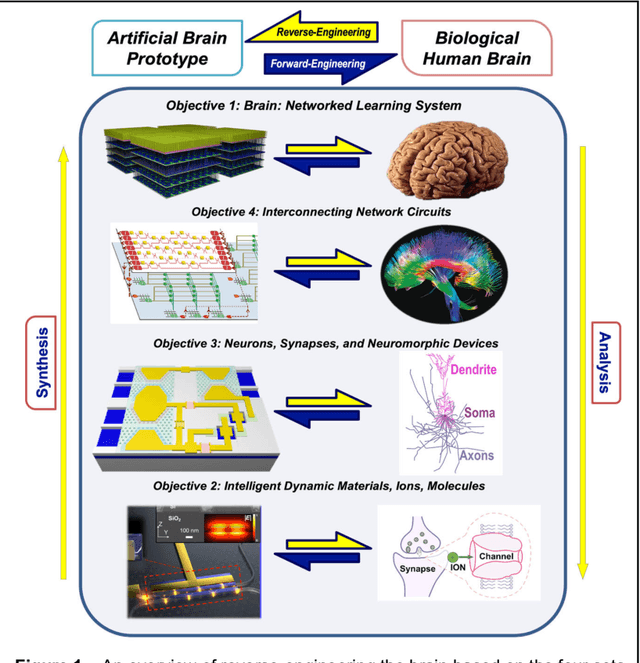

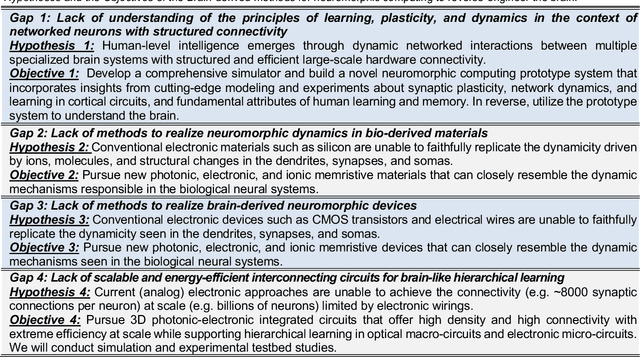

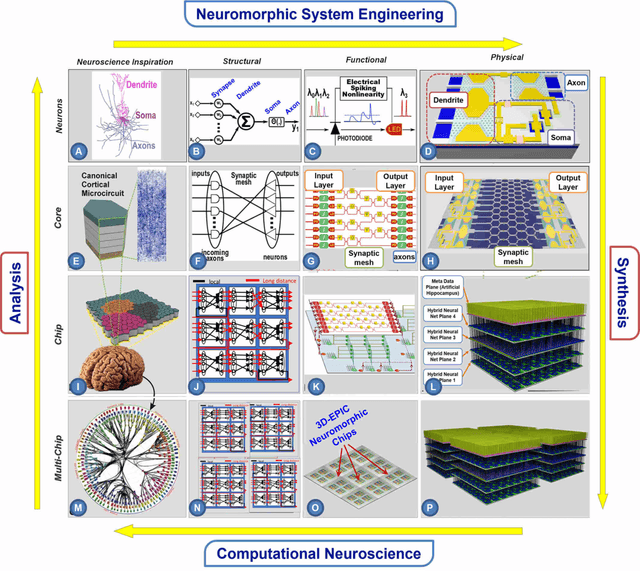

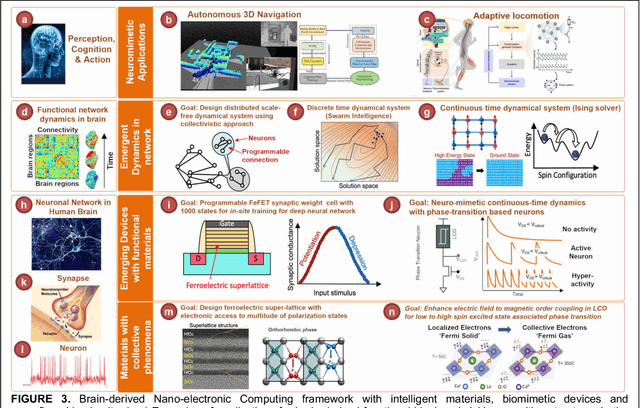

Towards Reverse-Engineering the Brain: Brain-Derived Neuromorphic Computing Approach with Photonic, Electronic, and Ionic Dynamicity in 3D integrated circuits

Mar 28, 2024

Abstract: The human brain has immense learning capabilities at extreme energy efficiencies and scale that no artificial system has been able to match. For decades, reverse engineering the brain has been one of the top priorities of science and technology research. Despite numerous efforts, conventional electronics-based methods have failed to match the scalability, energy efficiency, and self-supervised learning capabilities of the human brain. On the other hand, very recent progress in the development of new generations of photonic and electronic memristive materials, device technologies, and 3D electronic-photonic integrated circuits (3D EPIC) promise to realize new brain-derived neuromorphic systems with comparable connectivity, density, energy-efficiency, and scalability. When combined with bio-realistic learning algorithms and architectures, it may be possible to realize an 'artificial brain' prototype with general self-learning capabilities. This paper argues the possibility of reverse-engineering the brain through architecting a prototype of a brain-derived neuromorphic computing system consisting of artificial electronic, ionic, photonic materials, devices, and circuits with dynamicity resembling the bio-plausible molecular, neuro/synaptic, neuro-circuit, and multi-structural hierarchical macro-circuits of the brain based on well-tested computational models. We further argue the importance of bio-plausible local learning algorithms applicable to the neuromorphic computing system that capture the flexible and adaptive unsupervised and self-supervised learning mechanisms central to human intelligence. Most importantly, we emphasize that the unique capabilities in brain-derived neuromorphic computing prototype systems will enable us to understand links between specific neuronal and network-level properties with system-level functioning and behavior.

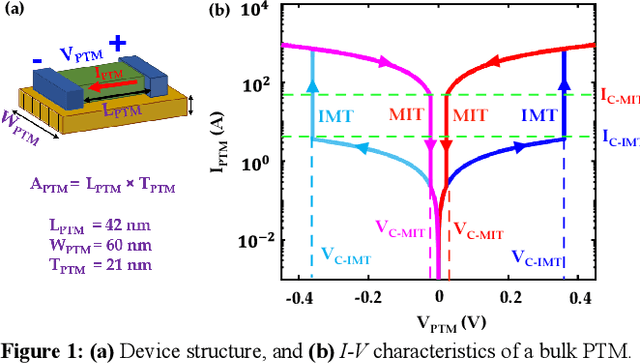

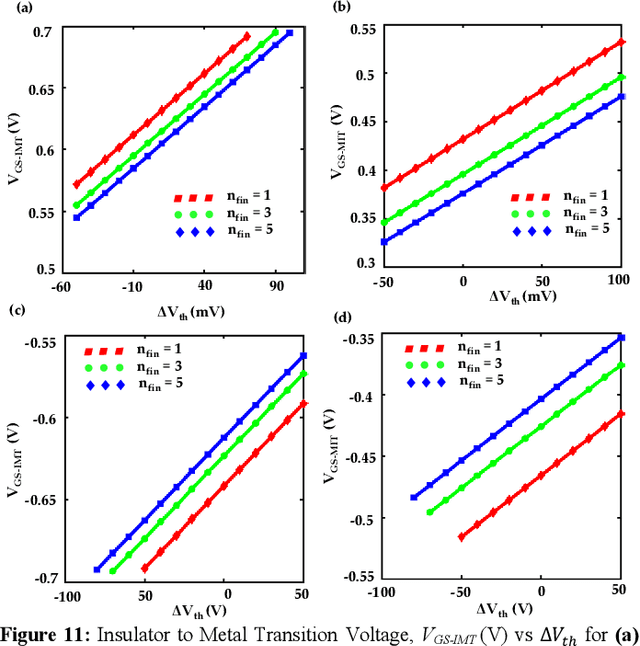

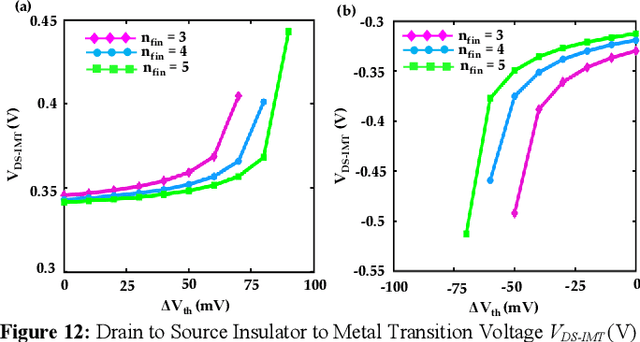

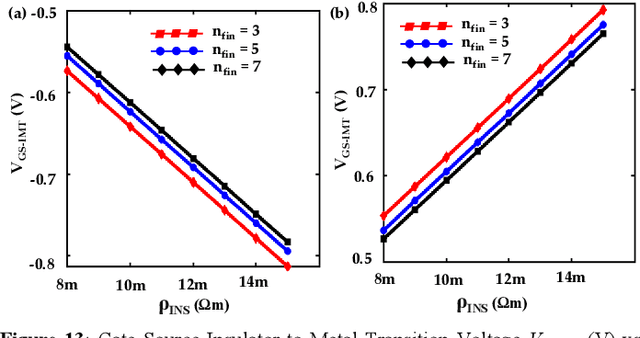

Reimagining Sense Amplifiers: Harnessing Phase Transition Materials for Current and Voltage Sensing

Aug 30, 2023

Abstract: Energy-efficient sense amplifier (SA) circuits are essential for reliable detection of stored memory states in emerging memory systems. In this work, we present four novel SA topologies based on phase transition material (PTM) tailored for non-volatile memory applications. We utilize the abrupt switching and volatile hysteretic characteristics of PTMs, which enable efficient and fast sensing operation in our proposed SA topologies. We provide comprehensive details of their functionality and assess how process variations impact their performance metrics. Our proposed sense amplifier topologies manifest notable performance enhancement. We achieve a ~67% reduction in sensing delay and a ~80% decrease in sensing power for current sensing. For voltage sensing, we achieve a ~75% reduction in sensing delay and a ~33% decrease in sensing power. Moreover, the proposed SA topologies exhibit improved variation robustness compared to conventional SAs. We also scrutinize the dependence of transistor mirroring window and PTM transition voltages on several device parameters to determine the optimum operating conditions and stance of tunability for each of the proposed SA topologies.

Neural Sampling Machine with Stochastic Synapse allows Brain-like Learning and Inference

Feb 20, 2021

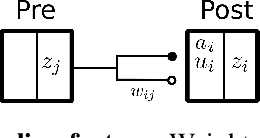

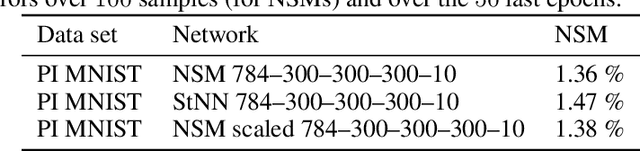

Abstract: Many real-world mission-critical applications require continual online learning from noisy data and real-time decision making with a defined confidence level. Probabilistic models and stochastic neural networks can explicitly handle uncertainty in data and allow adaptive learning-on-the-fly, but their implementation in a low-power substrate remains a challenge. Here, we introduce a novel hardware fabric that implements a new class of stochastic NN called Neural-Sampling-Machine that exploits stochasticity in synaptic connections for approximate Bayesian inference. Harnessing the inherent non-linearities and stochasticity occurring at the atomic level in emerging materials and devices allows us to capture the synaptic stochasticity occurring at the molecular level in biological synapses. We experimentally demonstrate an in-silico hybrid stochastic synapse by pairing a ferroelectric field-effect transistor-based analog weight cell with a two-terminal stochastic selector element. Such a stochastic synapse can be integrated within the well-established crossbar array architecture for compute-in-memory. We experimentally show that the inherent stochastic switching of the selector element between the insulator and metallic state introduces a multiplicative stochastic noise within the synapses of NSM that samples the conductance states of the FeFET, both during learning and inference. We perform network-level simulations to highlight the salient automatic weight normalization feature introduced by the stochastic synapses of the NSM that paves the way for continual online learning without any offline Batch Normalization. We also showcase the Bayesian inferencing capability introduced by the stochastic synapse during inference mode, thus accounting for uncertainty in data. We report 98.25% accuracy on a standard image classification task as well as estimation of data uncertainty in rotated samples.

Inherent Weight Normalization in Stochastic Neural Networks

Oct 27, 2019

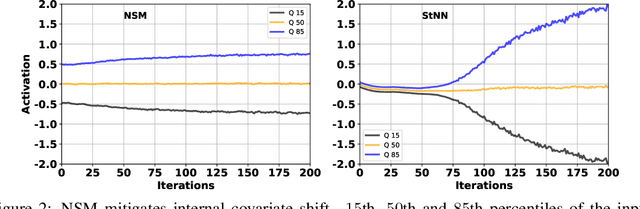

Abstract: Multiplicative stochasticity such as Dropout improves the robustness and generalizability of deep neural networks. Here, we further demonstrate that always-on multiplicative stochasticity combined with simple threshold neurons are sufficient operations for deep neural networks. We call such models Neural Sampling Machines (NSM). We find that the probability of activation of the NSM exhibits a self-normalizing property that mirrors Weight Normalization, a previously studied mechanism that fulfills many of the features of Batch Normalization in an online fashion. The normalization of activities during training speeds up convergence by preventing internal covariate shift caused by changes in the input distribution. The always-on stochasticity of the NSM confers the following advantages: the network is identical in the inference and learning phases, making the NSM suitable for online learning, it can exploit stochasticity inherent to a physical substrate such as analog non-volatile memories for in-memory computing, and it is suitable for Monte Carlo sampling, while requiring almost exclusively addition and comparison operations. We demonstrate NSMs on standard classification benchmarks (MNIST and CIFAR) and event-based classification benchmarks (N-MNIST and DVS Gestures). Our results show that NSMs perform comparably or better than conventional artificial neural networks with the same architecture.
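The self-normalizing property mentioned in this abstract can be illustrated with a small sketch. It is not the authors' implementation: it assumes Bernoulli (blank-out) noise on each synapse, a threshold at zero, and a central-limit (Gaussian) approximation of the pre-activation. The function names and the default `p=0.5` are hypothetical. Under these assumptions the firing probability depends only on the ratio of the mean to the standard deviation of the pre-activation, so rescaling all weights by a positive constant leaves it unchanged.

```python
import math
import random


def fire_probability_gaussian(weights, inputs, p=0.5):
    """Gaussian approximation of P(u > 0), where u = sum_j xi_j * w_j * x_j
    and each xi_j is an independent Bernoulli(p) mask (assumed noise model)."""
    contrib = [w * x for w, x in zip(weights, inputs)]
    mean = p * sum(contrib)
    var = p * (1.0 - p) * sum(c * c for c in contrib)
    if var == 0.0:
        return 1.0 if mean > 0 else 0.0
    z = mean / math.sqrt(var)  # the weight scale cancels in this ratio
    return 0.5 * (1.0 + math.erf(z / math.sqrt(2.0)))


def fire_probability_sampled(weights, inputs, p=0.5, trials=5000, seed=0):
    """Monte Carlo estimate of the same probability, using only
    additions and a comparison per trial."""
    rng = random.Random(seed)
    fired = 0
    for _ in range(trials):
        u = sum(w * x for w, x in zip(weights, inputs) if rng.random() < p)
        fired += u > 0
    return fired / trials


if __name__ == "__main__":
    rng = random.Random(1)
    w = [rng.uniform(-1.0, 1.0) for _ in range(50)]
    x = [rng.uniform(0.0, 1.0) for _ in range(50)]
    w_scaled = [10.0 * wi for wi in w]
    print(fire_probability_gaussian(w, x))
    print(fire_probability_gaussian(w_scaled, x))  # same value up to rounding
    print(fire_probability_sampled(w, x))
```

The sampled estimate is included only to show that each stochastic forward pass needs nothing beyond masked additions and one comparison; how closely it tracks the Gaussian approximation depends on the number of inputs.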

Design space exploration of Ferroelectric FET based Processing-in-Memory DNN Accelerator

Aug 12, 2019

Abstract: In this letter, we quantify the impact of device limitations on the classification accuracy of an artificial neural network, where the synaptic weights are implemented in a Ferroelectric FET (FeFET) based in-memory processing architecture. We explore a design-space consisting of the resolution of the analog-to-digital converter, number of bits per FeFET cell, and the neural network depth. We show how the system architecture, training models and overparametrization can address some of the device limitations.

AI based Safety System for Employees of Manufacturing Industries in Developing Countries

Nov 28, 2018

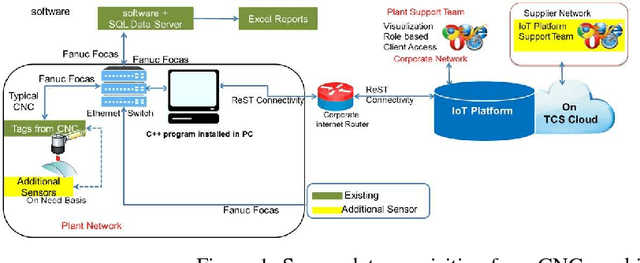

Abstract: In this paper, the authors present a Markov Decision Process (MDP) based algorithm for the Industrial Internet of Things (IIoT) that serves as a safety compliance layer for human-in-the-loop systems. Although some industries are moving towards Industry 4.0 and attempting to automate their systems as much as possible using robots, human-in-the-loop systems are still very common in developing countries like India. Whenever human-machine interaction is needed, there is scope for health hazards. In this work, we have developed a system for one such industry using MDP. The proposed algorithm learns the state transition probabilities from experience, and the system adapts to new changes by incorporating the concept of transfer learning. The system was evaluated on a data set obtained from 39 sensors connected to a computer numerically controlled (CNC) machine, pushing data every second in a 24x7 scenario. The state changes are typically instructed by a human, which can lead to intentional or unintentional mistakes and errors. The proposed system raises an alarm to warn the operator, who may or may not overlook it depending on his own perception of the present condition of the system. When the operator repeatedly ignores the warning for a particular state transition, the system retrains the model. We observed that 95.61% of the alarms raised by the system are taken care of by the operator, 3.2% of the alarms come from changes in the system, which are in turn used to retrain the model, and 1.19% are false alarms. We could not compute the error arising from mistakes made by the human operator, as no ground truth is available for that.
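The idea of learning state transition probabilities from experience and warning on unusual transitions can be sketched as follows. This is only a minimal illustration of the transition-estimation step, not the paper's algorithm: the class name, the state labels, and the `alarm_threshold` value are all hypothetical, and retraining and transfer learning are not modelled.

```python
from collections import Counter, defaultdict


class TransitionMonitor:
    """Estimates P(next_state | state) from observed transitions and
    flags transitions whose estimated probability is below a threshold."""

    def __init__(self, alarm_threshold=0.05):
        # counts[state][next_state] = number of times the transition was seen
        self.counts = defaultdict(Counter)
        self.alarm_threshold = alarm_threshold  # hypothetical cut-off

    def observe(self, state, next_state):
        """Record one observed transition."""
        self.counts[state][next_state] += 1

    def probability(self, state, next_state):
        """Empirical transition probability; 0.0 for an unseen source state."""
        total = sum(self.counts[state].values())
        if total == 0:
            return 0.0
        return self.counts[state][next_state] / total

    def is_alarm(self, state, next_state):
        """True when the transition is rarer than the alarm threshold."""
        return self.probability(state, next_state) < self.alarm_threshold


if __name__ == "__main__":
    monitor = TransitionMonitor()
    for _ in range(99):
        monitor.observe("idle", "cutting")       # hypothetical state labels
    monitor.observe("idle", "door_open")
    print(monitor.is_alarm("idle", "cutting"))    # common transition
    print(monitor.is_alarm("idle", "door_open"))  # rare transition
```

In a deployment along the lines the abstract describes, repeatedly ignored alarms for one transition would be the signal to update these counts, but that policy is left out here.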

Stochastic IMT (insulator-metal-transition) neurons: An interplay of thermal and threshold noise at bifurcation

Mar 28, 2018

Abstract: Artificial neural networks can harness stochasticity in multiple ways to enable a vast class of computationally powerful models. Electronic implementation of such stochastic networks is currently limited to addition of algorithmic noise to digital machines which is inherently inefficient; albeit recent efforts to harness physical noise in devices for stochasticity have shown promise. To succeed in fabricating electronic neuromorphic networks we need experimental evidence of devices with measurable and controllable stochasticity which is complemented with the development of reliable statistical models of such observed stochasticity. Current research literature has sparse evidence of the former and a complete lack of the latter. This motivates the current article where we demonstrate a stochastic neuron using an insulator-metal-transition (IMT) device, based on electrically induced phase-transition, in series with a tunable resistance. We show that an IMT neuron has dynamics similar to a piecewise linear FitzHugh-Nagumo (FHN) neuron and incorporates all characteristics of a spiking neuron in the device phenomena. We experimentally demonstrate spontaneous stochastic spiking along with electrically controllable firing probabilities using Vanadium Dioxide (VO$_2$) based IMT neurons which show a sigmoid-like transfer function. The stochastic spiking is explained by two noise sources - thermal noise and threshold fluctuations, which act as precursors of bifurcation. As such, the IMT neuron is modeled as an Ornstein-Uhlenbeck (OU) process with a fluctuating boundary resulting in transfer curves that closely match experiments. As one of the first comprehensive studies of a stochastic neuron hardware and its statistical properties, this article would enable efficient implementation of a large class of neuro-mimetic networks and algorithms.
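The modelling idea in this abstract, an Ornstein-Uhlenbeck process that spikes when it reaches a fluctuating boundary, can be sketched with a simple Euler-Maruyama simulation. This is an illustrative toy, not the paper's fitted model: every parameter value below (`theta`, `sigma`, `thr_mean`, `thr_std`, the time step and window) is an assumption, and the threshold is redrawn once per trial from a Gaussian as a stand-in for threshold fluctuations.

```python
import math
import random


def firing_probability(drive, trials=200, steps=500, dt=0.01,
                       theta=1.0, sigma=0.3,
                       thr_mean=1.0, thr_std=0.1, seed=0):
    """Fraction of trials in which an OU process
    dx = theta * (drive - x) dt + sigma dW, started at x = 0,
    reaches a randomly drawn threshold within steps * dt time units."""
    rng = random.Random(seed)
    spikes = 0
    for _ in range(trials):
        threshold = rng.gauss(thr_mean, thr_std)  # fluctuating boundary
        x = 0.0
        for _ in range(steps):
            noise = sigma * math.sqrt(dt) * rng.gauss(0.0, 1.0)  # thermal noise
            x += theta * (drive - x) * dt + noise
            if x >= threshold:
                spikes += 1
                break
    return spikes / trials


if __name__ == "__main__":
    # Sweeping the drive traces out a transfer curve of firing probability.
    for drive in (0.0, 0.5, 1.0, 1.5, 2.0):
        print(drive, firing_probability(drive))
```

With these assumed parameters the firing probability rises from near zero at low drive to near one at high drive, which is the qualitative shape of a sigmoid-like transfer function; the exact curve depends entirely on the chosen values.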
