Garrett S. Rose

Spike-based Neuromorphic Computing for Next-Generation Computer Vision

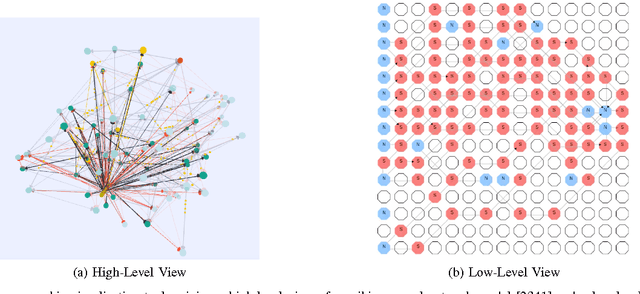

Oct 15, 2023

Abstract: Neuromorphic computing promises orders-of-magnitude improvements in energy efficiency compared with the traditional von Neumann computing paradigm. The goal is to develop an adaptive, fault-tolerant, low-footprint, fast, low-energy intelligent system by learning and emulating brain functionality, which can be realized through innovation at different abstraction layers, including material, device, circuit, architecture, and algorithm. As the energy consumption of complex vision tasks keeps increasing exponentially due to larger datasets, and as resource-constrained edge devices become increasingly ubiquitous, spike-based neuromorphic computing approaches can be a viable alternative to the deep convolutional neural networks that dominate the vision field today. In this book chapter, we introduce neuromorphic computing, outline a few representative examples from different layers of the design stack (devices, circuits, and algorithms), and conclude with a few exciting applications and future research directions that seem promising for computer vision in the near future.

Reimagining Sense Amplifiers: Harnessing Phase Transition Materials for Current and Voltage Sensing

Aug 30, 2023

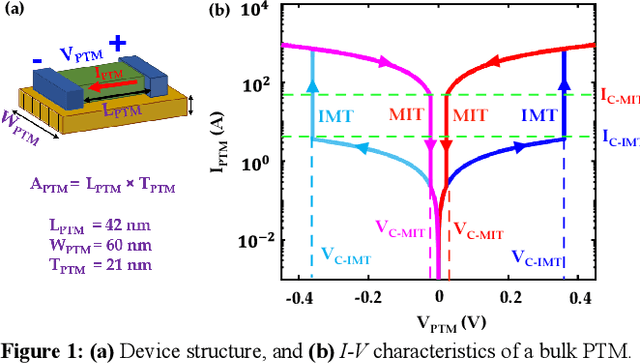

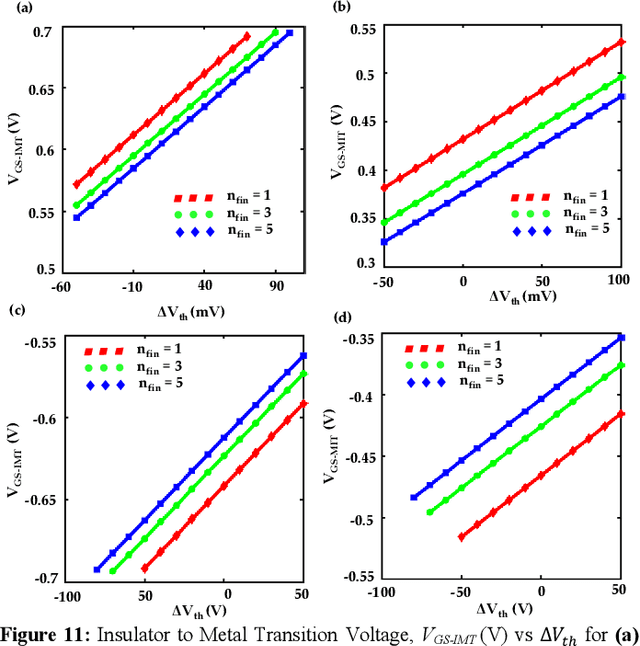

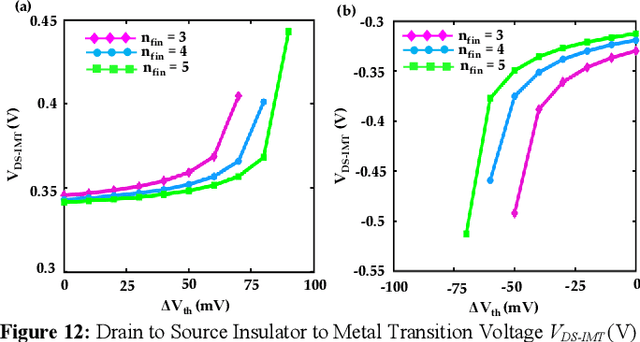

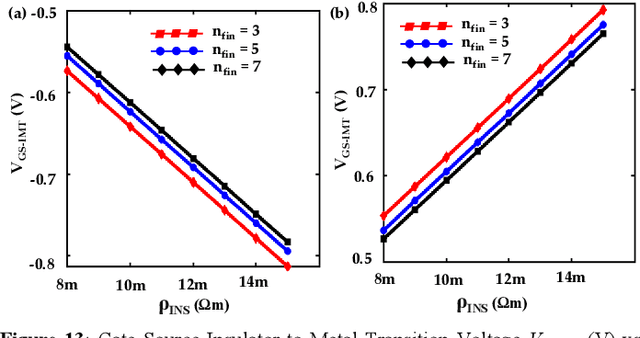

Abstract: Energy-efficient sense amplifier (SA) circuits are essential for reliable detection of stored memory states in emerging memory systems. In this work, we present four novel SA topologies based on phase transition material (PTM), tailored for non-volatile memory applications. We utilize the abrupt switching and volatile hysteretic characteristics of PTMs, which enable efficient and fast sensing operation in our proposed SA topologies. We provide comprehensive details of their functionality and assess how process variations impact their performance metrics. Our proposed SA topologies show notable performance enhancements: for current sensing, we achieve a ~67% reduction in sensing delay and a ~80% decrease in sensing power; for voltage sensing, we achieve a ~75% reduction in sensing delay and a ~33% decrease in sensing power. Moreover, the proposed SA topologies exhibit improved variation robustness compared to conventional SAs. We also scrutinize the dependence of the transistor mirroring window and the PTM transition voltages on several device parameters to determine the optimum operating conditions and the scope of tunability for each of the proposed SA topologies.
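As a rough illustration of what the reported percentages could imply for energy, assume (our assumption, not something stated in the abstract) that the reported sensing power is the average power drawn over the sensing window, so that the energy per sensing operation scales as $E = P \cdot t_{\mathrm{sense}}$. The relative energy then follows from multiplying the two reported ratios:

$$
\frac{E_{\mathrm{PTM}}}{E_{\mathrm{conv}}}
= \frac{P_{\mathrm{PTM}}}{P_{\mathrm{conv}}}\cdot\frac{t_{\mathrm{PTM}}}{t_{\mathrm{conv}}}
\approx
\begin{cases}
(1-0.80)\,(1-0.67) \approx 0.066 & \text{current sensing}\\[2pt]
(1-0.33)\,(1-0.75) \approx 0.17 & \text{voltage sensing}
\end{cases}
$$

Under that assumption, the current-sensing topologies would use roughly 7% of the conventional energy per read and the voltage-sensing topologies roughly 17%. These are back-of-the-envelope estimates, not results reported by the authors.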

Functional Specification of the RAVENS Neuroprocessor

Jul 27, 2023

Abstract: RAVENS is a neuroprocessor that has been developed by the TENNLab research group at the University of Tennessee. Its main focus has been as a vehicle for chip design with memristive elements; however, it has also been the vehicle for all-digital CMOS development, and it has implementations on FPGAs, on microcontrollers, and in software simulation. The software simulation is supported by the TENNLab neuromorphic software framework, so that researchers may develop RAVENS solutions for a variety of neuromorphic computing applications. This document provides a functional specification of RAVENS that should apply to all implementations of the RAVENS neuroprocessor.

An Efficient and Accurate Memristive Memory for Array-based Spiking Neural Networks

Jun 11, 2023

Abstract: Memristors provide a tempting solution for weighted synapse connections in neuromorphic computing due to their small size and non-volatile nature. However, memristors are unreliable under the commonly used voltage-pulse-based programming approaches and require precisely shaped pulses to avoid programming failure. In this paper, we demonstrate a current-limiting-based solution that provides more predictable analog memory behavior when reading and writing memristive synapses. With our proposed design, the READ current can be optimized by about 19x compared to the 1T1R design. Moreover, our proposed design reduces energy by about 9x compared to the 1T1R design. Our 3T1R design also shows promising write operation that is less affected by process variation in MOSFETs and by the inherent stochastic behavior of memristors. The memristors used for testing are hafnium-oxide-based and were fabricated in a 65nm hybrid CMOS-memristor process. The proposed design also shows a linear relationship between the applied voltage and the resulting resistance during the write operation. The simulated and measured data show similar patterns for voltage-pulse-based programming and current-compliance-based programming. We further examine the impact of this behavior on neuromorphic-specific applications such as a spiking neural network.
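In array-based spiking hardware, a memristor's conductance commonly serves as the synaptic weight: read voltages applied to the rows of a crossbar produce column currents that are weighted sums, by Ohm's law at each device and Kirchhoff's current law at each column. The sketch below illustrates only that idealized read; it ignores the 3T1R current-limiting circuitry, wire resistance, and device variation described in the paper. The function name, conductances, and voltages are hypothetical values chosen for illustration.

```python
import math
from typing import List, Sequence


def crossbar_read(conductance_s: Sequence[Sequence[float]],
                  row_voltages_v: Sequence[float]) -> List[float]:
    """Ideal crossbar read: column current I_j = sum_i G[i][j] * V[i], in amperes.

    conductance_s: rows x cols memristor conductances in siemens (hypothetical values).
    row_voltages_v: one read voltage per row, in volts.
    """
    if len(conductance_s) != len(row_voltages_v):
        raise ValueError("one read voltage is needed per crossbar row")
    n_cols = len(conductance_s[0]) if conductance_s else 0
    currents = [0.0] * n_cols
    for g_row, v in zip(conductance_s, row_voltages_v):
        if len(g_row) != n_cols:
            raise ValueError("all crossbar rows must have the same number of columns")
        for j, g in enumerate(g_row):
            # Ohm's law per device; accumulating down a column is Kirchhoff's current law.
            currents[j] += g * v
    return currents


# Hypothetical 3x2 array with device resistances between 10 kOhm and 100 kOhm.
G = [[1 / 10e3, 1 / 100e3],
     [1 / 50e3, 1 / 20e3],
     [1 / 100e3, 1 / 25e3]]
# Input spikes on rows 0 and 2 are encoded as a 0.1 V read pulse; row 1 is idle.
V = [0.1, 0.0, 0.1]
column_currents = crossbar_read(G, V)
print(column_currents)
```

Each column current is then the total input a downstream spiking neuron would integrate; in this example column 0 receives about 11 µA and column 1 about 5 µA.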

Disclosure of a Neuromorphic Starter Kit

Nov 08, 2022

Abstract: This paper presents a Neuromorphic Starter Kit, which has been designed to help a variety of research groups perform research, exploration, and real-world demonstrations of brain-based neuromorphic processors and hardware environments. A prototype kit has been built and tested. We explain the motivation behind the kit, its design and composition, and a prototype physical demonstration.

The Case for RISP: A Reduced Instruction Spiking Processor

Jun 28, 2022

Abstract: In this paper, we introduce RISP, a reduced instruction spiking processor. While most spiking neuroprocessors are based on the brain, or on notions from the brain, we present the case for a spiking processor that simplifies rather than complicates. As such, it features discrete integration cycles, configurable leak, and little else. We present the computing model of RISP and highlight the benefits of its simplicity. We demonstrate how it aids in developing hand-built neural networks for simple computational tasks, detail how it may be employed to simplify neural networks built with more complicated machine learning techniques, and demonstrate that it performs similarly to other spiking neuroprocessors.
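The abstract describes RISP's model only at a high level: discrete integration cycles and configurable leak. The following Python sketch illustrates a neuron of that general style under several assumptions of ours that are not taken from the RISP specification: every synapse has a fixed one-cycle delay, a neuron fires when its charge reaches or exceeds its threshold and then resets to zero, and leak is modeled as a hypothetical on/off flag that clears any unspent charge at the end of each cycle. The class and method names are hypothetical and do not correspond to the TENNLab framework's API.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Set, Tuple


@dataclass
class Neuron:
    threshold: float
    leak: bool = False  # assumption: True clears unspent charge after every cycle
    charge: float = 0.0


@dataclass
class Network:
    neurons: Dict[int, Neuron]
    # (pre, post, weight); every synapse has a fixed one-cycle delay in this sketch
    synapses: List[Tuple[int, int, float]] = field(default_factory=list)

    def run(self, inputs: List[Tuple[int, int, float]], cycles: int) -> Dict[int, List[int]]:
        """Simulate discrete integration cycles.

        inputs: (cycle, neuron_id, value) external charges to apply.
        Returns, for each neuron, the cycles at which it fired.
        """
        fired_at: Dict[int, List[int]] = {nid: [] for nid in self.neurons}
        last_fired: Set[int] = set()
        for t in range(cycles):
            # 1. Integrate external input scheduled for this cycle.
            for cycle, nid, value in inputs:
                if cycle == t:
                    self.neurons[nid].charge += value
            # 2. Integrate spikes emitted during the previous cycle.
            for pre, post, weight in self.synapses:
                if pre in last_fired:
                    self.neurons[post].charge += weight
            # 3. One fire-or-leak decision per neuron per cycle.
            last_fired = set()
            for nid, neuron in self.neurons.items():
                if neuron.charge >= neuron.threshold:  # assumption: ">=" comparison
                    last_fired.add(nid)
                    fired_at[nid].append(t)
                    neuron.charge = 0.0
                elif neuron.leak:
                    neuron.charge = 0.0
        return fired_at


def and_gate(leak: bool) -> Network:
    """Hypothetical hand-built network: neurons 0 and 1 feed output neuron 2."""
    neurons = {0: Neuron(1), 1: Neuron(1), 2: Neuron(2, leak=leak)}
    return Network(neurons, [(0, 2, 1.0), (1, 2, 1.0)])


stimuli = [(0, 0, 1.0), (0, 1, 1.0), (3, 0, 1.0), (5, 1, 1.0)]
print(and_gate(leak=True).run(stimuli, cycles=8)[2])   # [1]
print(and_gate(leak=False).run(stimuli, cycles=8)[2])  # [1, 6]
```

The two runs differ only in the leak setting. With leak enabled, the output neuron acts as a coincidence (AND) detector and fires only for the simultaneous inputs at cycle 0. Without leak, it accumulates the separated inputs that arrive at cycles 4 and 6 and fires again at cycle 6.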

A Survey of Neuromorphic Computing and Neural Networks in Hardware

May 19, 2017

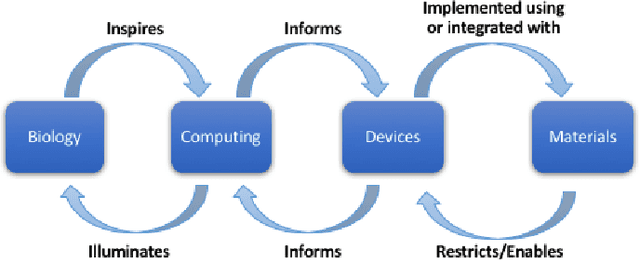

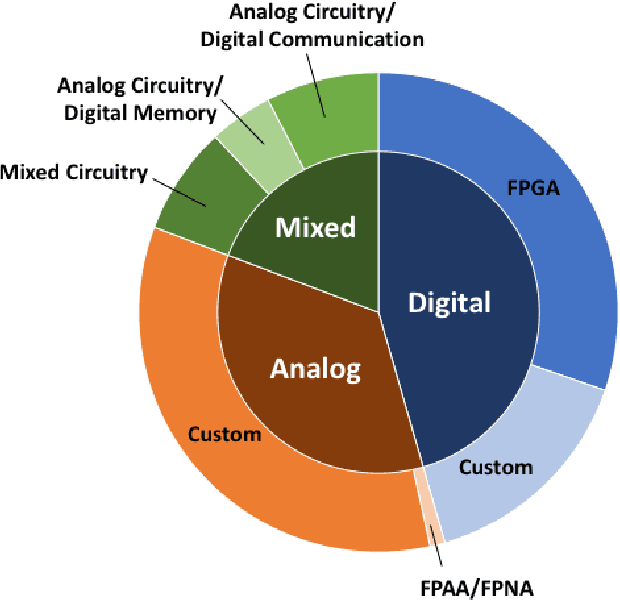

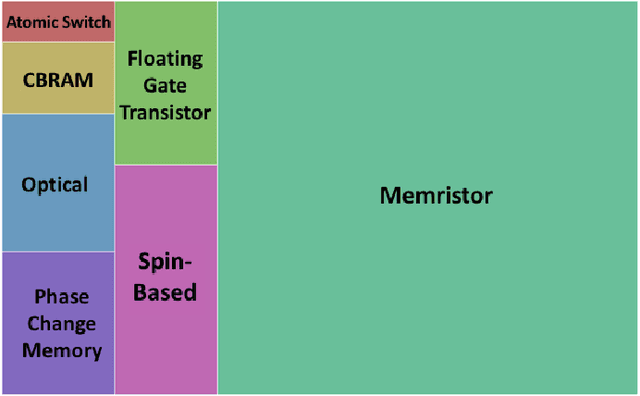

Abstract: Neuromorphic computing has come to refer to a variety of brain-inspired computers, devices, and models that contrast with the pervasive von Neumann computer architecture. This biologically inspired approach has created highly connected synthetic neurons and synapses that can be used to model neuroscience theories as well as to solve challenging machine learning problems. The promise of the technology is to create a brain-like ability to learn and adapt, but the technical challenges are significant, ranging from developing an accurate neuroscience model of how the brain works, to finding materials and engineering breakthroughs to build devices that support these models, to creating a programming framework so the systems can learn, to creating applications with brain-like capabilities. In this work, we provide a comprehensive survey of the research and motivations for neuromorphic computing over its history. We begin with a 35-year review of the motivations and drivers of neuromorphic computing, then look at the major research areas of the field, which we define as neuro-inspired models, algorithms and learning approaches, hardware and devices, supporting systems, and finally applications. We conclude with a broad discussion of the major research topics that need to be addressed in the coming years to see the promise of neuromorphic computing fulfilled. The goals of this work are to provide an exhaustive review of the research conducted in neuromorphic computing since the inception of the term, and to motivate further work by illuminating gaps in the field where new research is needed.