James S. Plank

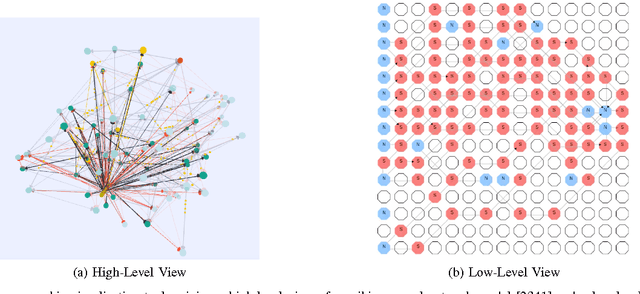

A Neuromorphic Implementation of the DBSCAN Algorithm

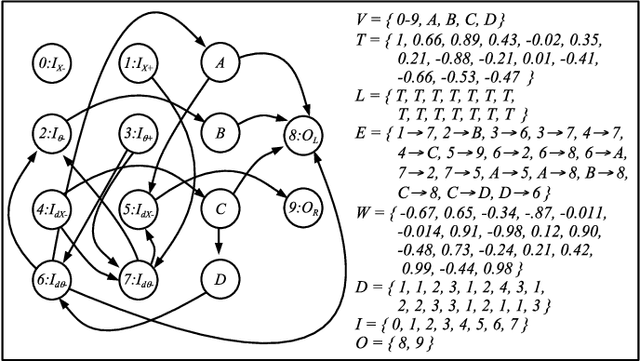

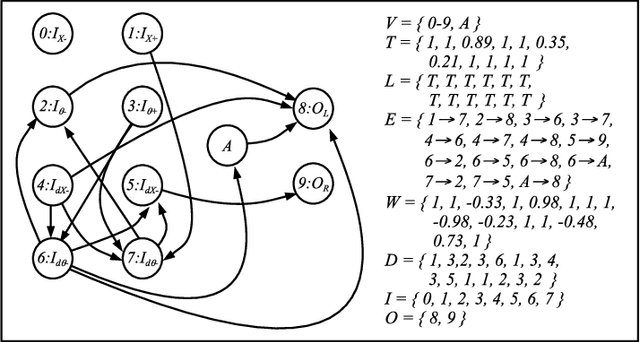

Sep 22, 2024

Abstract: DBSCAN is an algorithm that performs clustering in the presence of noise. In this paper, we provide two constructions that allow DBSCAN to be implemented neuromorphically, using spiking neural networks. The first construction is termed "flat," resulting in large spiking neural networks that compute the algorithm quickly, in five timesteps. Moreover, the networks allow pipelining, so that a new DBSCAN calculation may be performed every timestep. The second construction is termed "systolic", and generates much smaller networks, but requires the inputs to be spiked in over several timesteps, column by column. We provide precise specifications of the constructions and analyze them in practical neuromorphic computing settings. We also provide an open-source implementation.
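For readers unfamiliar with the algorithm named in this abstract, the following is a minimal sketch of conventional (non-neuromorphic) DBSCAN in Python. It is not the paper's "flat" or "systolic" construction; the function name `dbscan`, the parameters `eps` and `min_pts`, and the `NOISE` label are illustrative assumptions, and neighborhoods are computed by brute force for clarity.

```python
from math import dist

NOISE = -1  # illustrative label for points that belong to no cluster


def dbscan(points, eps, min_pts):
    """Return one label per point: a cluster id (0, 1, ...) or NOISE."""
    # Brute-force neighborhoods; each point counts as its own neighbor.
    neighbors = [
        [j for j, q in enumerate(points) if dist(p, q) <= eps]
        for p in points
    ]
    labels = [None] * len(points)
    cluster = 0
    for i in range(len(points)):
        if labels[i] is not None:
            continue
        if len(neighbors[i]) < min_pts:
            labels[i] = NOISE  # may later be relabeled as a border point
            continue
        # Point i is a core point: start a new cluster and grow it.
        labels[i] = cluster
        frontier = list(neighbors[i])
        while frontier:
            j = frontier.pop()
            if labels[j] == NOISE:
                labels[j] = cluster  # border point reached from a core point
            if labels[j] is not None:
                continue
            labels[j] = cluster
            if len(neighbors[j]) >= min_pts:
                frontier.extend(neighbors[j])  # j is also a core point
        cluster += 1
    return labels


sample = [(0, 0), (0, 1), (1, 0), (10, 10), (10, 11), (11, 10), (50, 50)]
sample_labels = dbscan(sample, eps=1.5, min_pts=3)
```

With the sample points above, the two tight groups form two clusters and the isolated point at (50, 50) is labeled as noise.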

Speed-based Filtration and DBSCAN of Event-based Camera Data with Neuromorphic Computing

Jan 26, 2024

Abstract: Spiking neural networks are powerful computational elements that pair well with event-based cameras (EBCs). In this work, we present two spiking neural network architectures that process events from EBCs: one that isolates and filters out events based on their speeds, and another that clusters events based on the DBSCAN algorithm.

Functional Specification of the RAVENS Neuroprocessor

Jul 27, 2023

Abstract: RAVENS is a neuroprocessor that has been developed by the TENNLab research group at the University of Tennessee. Its main focus has been as a vehicle for chip design with memristive elements; however, it has also been the vehicle for all-digital CMOS development, and it has implementations on FPGAs, microcontrollers, and in software simulation. The software simulation is supported by the TENNLab neuromorphic software framework so that researchers may develop RAVENS solutions for a variety of neuromorphic computing applications. This document provides a functional specification of RAVENS that should apply to all implementations of the RAVENS neuroprocessor.

Disclosure of a Neuromorphic Starter Kit

Nov 08, 2022

Abstract: This paper presents a Neuromorphic Starter Kit, which has been designed to help a variety of research groups perform research, exploration and real-world demonstrations of brain-based, neuromorphic processors and hardware environments. A prototype kit has been built and tested. We explain the motivation behind the kit, its design and composition, and a prototype physical demonstration.

The Case for RISP: A Reduced Instruction Spiking Processor

Jun 28, 2022

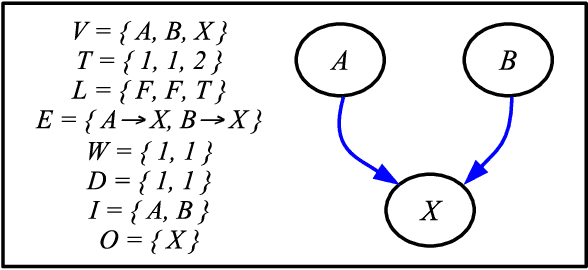

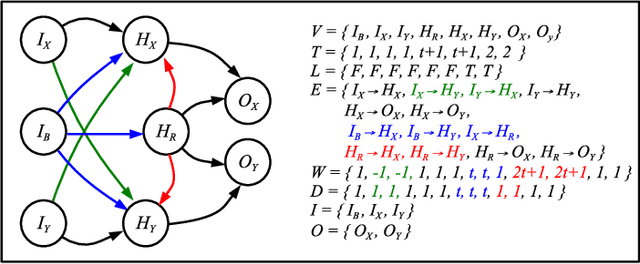

Abstract: In this paper, we introduce RISP, a reduced instruction spiking processor. While most spiking neuroprocessors are based on the brain, or notions from the brain, we present the case for a spiking processor that simplifies rather than complicates. As such, it features discrete integration cycles, configurable leak, and little else. We present the computing model of RISP and highlight the benefits of its simplicity. We demonstrate how it aids in developing hand-built neural networks for simple computational tasks, detail how it may be employed to simplify neural networks built with more complicated machine learning techniques, and demonstrate how it performs similarly to other spiking neuroprocessors.
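To make "discrete integration cycles" and "configurable leak" concrete, here is a minimal sketch of a generic integrate-and-fire neuron stepped one timestep at a time. It is not taken from the RISP specification: the class name `IFNeuron`, the fire-when-potential-reaches-threshold rule, the reset to zero, and the all-or-nothing `leak` flag are assumptions made for illustration.

```python
class IFNeuron:
    """Hypothetical integrate-and-fire neuron with discrete timesteps."""

    def __init__(self, threshold, leak=False):
        self.threshold = threshold
        self.leak = leak      # assumption: leak discards all unused charge
        self.potential = 0

    def step(self, incoming):
        """Integrate one timestep of input charge; return True on a spike."""
        self.potential += incoming
        if self.potential >= self.threshold:
            self.potential = 0  # assumption: reset to zero after firing
            return True
        if self.leak:
            self.potential = 0  # sub-threshold charge leaks away entirely
        return False


def run(neuron, inputs):
    """Feed one input value per timestep and collect the spike train."""
    return [neuron.step(x) for x in inputs]


charges = [1, 1, 1, 0, 2, 2]
no_leak_spikes = run(IFNeuron(threshold=3), charges)
leaky_spikes = run(IFNeuron(threshold=3, leak=True), charges)
```

Without leak, the neuron accumulates charge across timesteps and fires at the third and sixth steps; with leak enabled, the same inputs never reach the threshold within a single timestep, so it never fires.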

An Oracle and Observations for the OpenAI Gym / ALE Freeway Environment

Sep 02, 2021

Abstract: The OpenAI Gym project contains hundreds of control problems whose goal is to provide a testbed for reinforcement learning algorithms. One such problem is Freeway-ram-v0, where the observations presented to the agent are 128 bytes of RAM. While the goals of the project are for non-expert AI agents to solve the control problems with general training, in this work, we seek to learn more about the problem, so that we can better evaluate solutions. In particular, we develop an oracle to play the game, so that we may have baselines for success. We present details of the oracle, plus optimal game-playing situations that can be used for training and testing AI agents.

Stochasticity and Robustness in Spiking Neural Networks

Jun 06, 2019

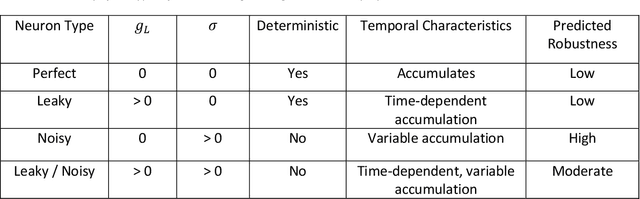

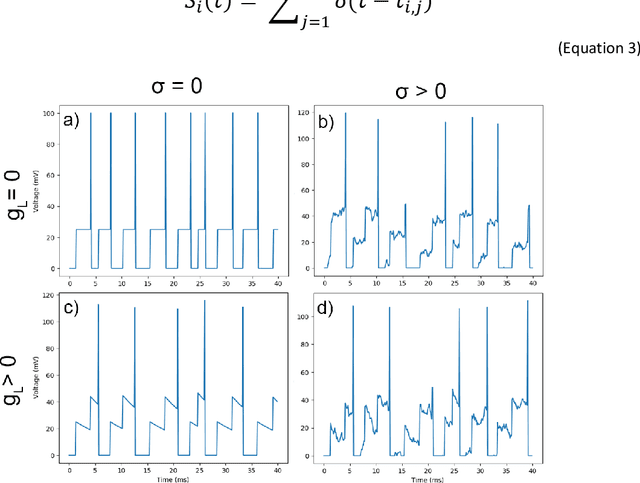

Abstract: Artificial neural networks normally require precise weights to operate, despite their origins in biological systems, which can be highly variable and noisy. When implementing artificial networks which utilize analog 'synaptic' devices to encode weights, however, inherent limits are placed on the accuracy and precision with which these values can be encoded. In this work, we investigate the effects that inaccurate synapses have on spiking neurons and spiking neural networks. Starting with a mathematical analysis of integrate-and-fire (IF) neurons, including different non-idealities (such as leakage and channel noise), we demonstrate that noise can be used to make the behavior of IF neurons more robust to synaptic inaccuracy. We then train spiking networks which utilize IF neurons with and without noise and leakage, and experimentally confirm that the noisy networks are more robust. Lastly, we show that a noisy network can tolerate the inaccuracy expected when hafnium-oxide based resistive random-access memory is used to encode synaptic weights.

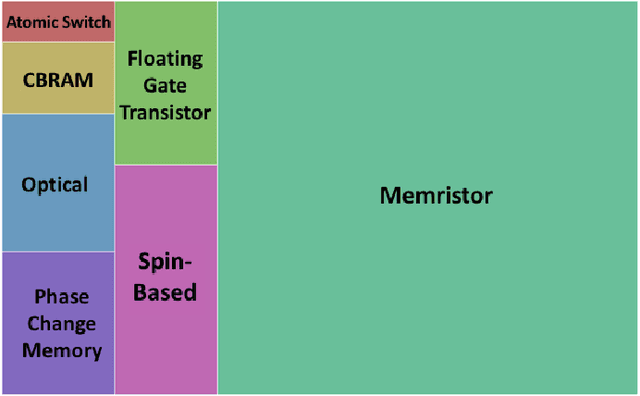

A Survey of Neuromorphic Computing and Neural Networks in Hardware

May 19, 2017

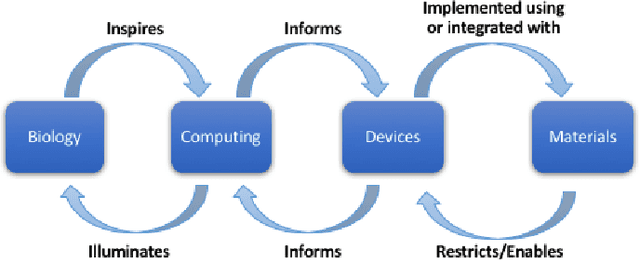

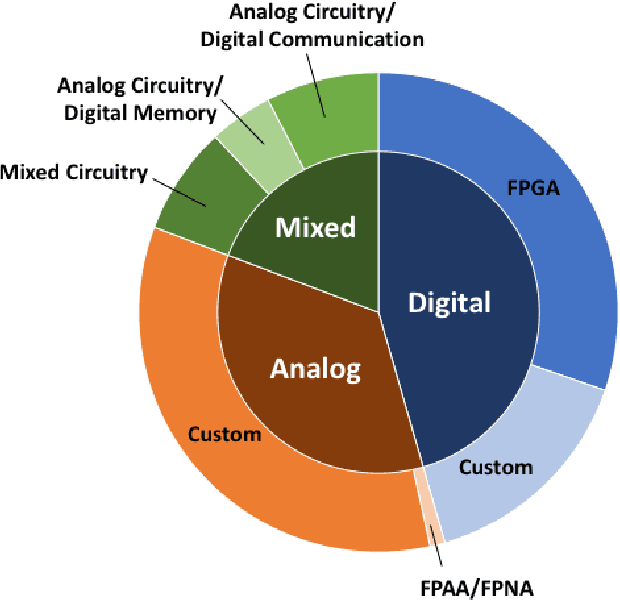

Abstract: Neuromorphic computing has come to refer to a variety of brain-inspired computers, devices, and models that contrast with the pervasive von Neumann computer architecture. This biologically inspired approach has created highly connected synthetic neurons and synapses that can be used to model neuroscience theories as well as solve challenging machine learning problems. The promise of the technology is to create a brain-like ability to learn and adapt, but the technical challenges are significant, starting with an accurate neuroscience model of how the brain works, to finding materials and engineering breakthroughs to build devices to support these models, to creating a programming framework so the systems can learn, to creating applications with brain-like capabilities. In this work, we provide a comprehensive survey of the research and motivations for neuromorphic computing over its history. We begin with a 35-year review of the motivations and drivers of neuromorphic computing, then look at the major research areas of the field, which we define as neuro-inspired models, algorithms and learning approaches, hardware and devices, supporting systems, and finally applications. We conclude with a broad discussion on the major research topics that need to be addressed in the coming years to see the promise of neuromorphic computing fulfilled. The goals of this work are to provide an exhaustive review of the research conducted in neuromorphic computing since the inception of the term, and to motivate further work by illuminating gaps in the field where new research is needed.
