Steven A. Niederer

Multi-Class Segmentation of Aortic Branches and Zones in Computed Tomography Angiography: The AortaSeg24 Challenge

Feb 07, 2025

Abstract: Multi-class segmentation of the aorta in computed tomography angiography (CTA) scans is essential for diagnosing and planning complex endovascular treatments for patients with aortic dissections. However, existing methods reduce aortic segmentation to a binary problem, limiting their ability to measure diameters across different branches and zones. Furthermore, no open-source dataset is currently available to support the development of multi-class aortic segmentation methods. To address this gap, we organized the AortaSeg24 MICCAI Challenge, introducing the first dataset of 100 CTA volumes annotated for 23 clinically relevant aortic branches and zones. This dataset was designed to facilitate both model development and validation. The challenge attracted 121 teams worldwide, with participants leveraging state-of-the-art frameworks such as nnU-Net and exploring novel techniques, including cascaded models, data augmentation strategies, and custom loss functions. We evaluated the submitted algorithms using the Dice Similarity Coefficient (DSC) and Normalized Surface Distance (NSD), highlighting the approaches adopted by the top five performing teams. This paper presents the challenge design, dataset details, evaluation metrics, and an in-depth analysis of the top-performing algorithms. The annotated dataset, evaluation code, and implementations of the leading methods are publicly available to support further research. All resources can be accessed at https://aortaseg24.grand-challenge.org.
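
For readers unfamiliar with the DSC mentioned in the abstract: for one label it is 2|P ∩ T| / (|P| + |T|), where P and T are the predicted and reference voxel sets. The following is a minimal per-class sketch, not the challenge's evaluation code. It assumes integer label arrays with 0 as background; the function name `dice_per_class` and the choice to return NaN for a label absent from both arrays are illustrative assumptions.

```python
import numpy as np

def dice_per_class(pred, truth, num_classes):
    """Return {label: DSC} for labels 1..num_classes (0 is treated as background).

    Hypothetical helper for illustration only; not the AortaSeg24 evaluation code.
    """
    scores = {}
    for label in range(1, num_classes + 1):
        p = pred == label          # voxels predicted as this label
        t = truth == label         # voxels annotated as this label
        denom = p.sum() + t.sum()
        if denom == 0:
            # Label absent from both arrays: DSC is undefined (assumed convention).
            scores[label] = float("nan")
        else:
            scores[label] = 2.0 * np.logical_and(p, t).sum() / denom
    return scores
```

With 23 annotated branches and zones, `dice_per_class(pred, truth, 23)` would give one score per class, which can then be averaged while ignoring NaN entries.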

Whole Heart Anatomical Refinement from CCTA using Extrapolation and Parcellation

Nov 18, 2021

Abstract: Coronary computed tomography angiography (CCTA) provides detailed anatomical information on all chambers of the heart. Existing segmentation tools can label the gross anatomy, but adding application-specific labels can require detailed and often manual refinement. We developed a U-Net-based framework to i) extrapolate a new label from existing labels, and ii) parcellate one label into multiple labels, both using label-to-label mapping, to create a desired segmentation that could then be learnt directly from the image (image-to-label mapping). This approach only required manual correction in a small subset of cases (80 for extrapolation, 50 for parcellation, compared with 260 for initial labels). An initial 6-label segmentation (left ventricle, left ventricular myocardium, right ventricle, left atrium, right atrium and aorta) was refined to a 10-label segmentation that added a label for the pulmonary artery and divided the left atrium label into body, left and right veins and appendage components. The final method was tested using 30 cases, 10 each from Philips, Siemens and Toshiba scanners. In addition to the new labels, the median Dice scores were improved for all the initial 6 labels to be above 95% in the 10-label segmentation, e.g. from 91% to 97% for the left atrium body and from 92% to 96% for the right ventricle. This method provides a simple framework for flexible refinement of anatomical labels. The code and executables are available at cemrg.com.

Optimal Thinning of MCMC Output

May 08, 2020

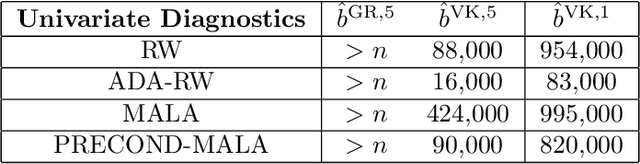

Abstract: The use of heuristics to assess the convergence and compress the output of Markov chain Monte Carlo can be sub-optimal in terms of the empirical approximations that are produced. Typically a number of the initial states are attributed to "burn in" and removed, whilst the chain can be "thinned" if compression is also required. In this paper we consider the problem of selecting a subset of states, of fixed cardinality, such that the approximation provided by their empirical distribution is close to optimal. A novel method is proposed, based on greedy minimisation of a kernel Stein discrepancy, that is suitable for problems where heavy compression is required. Theoretical results guarantee consistency of the method and its effectiveness is demonstrated in the challenging context of parameter inference for ordinary differential equations. Software is available in the "Stein Thinning" package in both Python and MATLAB, and example code is included.
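
To illustrate what greedy minimisation of a kernel Stein discrepancy can look like, here is a rough sketch. It is not the "Stein Thinning" package and does not reproduce its API. It assumes a Langevin Stein kernel built from an inverse multiquadric base kernel k(x, y) = (1 + ||x - y||^2)^(-1/2), which is a choice made here for illustration, and it assumes the score values (gradients of the log target density) are already available at each MCMC state. The names `stein_kernel_matrix` and `greedy_thin` are hypothetical.

```python
import numpy as np

def stein_kernel_matrix(x, s):
    """Stein kernel matrix for samples x (n, d) and scores s (n, d).

    Assumes the base kernel (1 + ||x - y||^2)^(-1/2); illustrative choice.
    """
    n, d = x.shape
    r = x[:, None, :] - x[None, :, :]          # pairwise differences x_i - x_j
    sq = np.sum(r ** 2, axis=-1)               # squared distances
    q = 1.0 + sq
    rs_x = np.einsum("ijk,ik->ij", r, s)       # (x_i - x_j) . s(x_i)
    rs_y = np.einsum("ijk,jk->ij", r, s)       # (x_i - x_j) . s(x_j)
    ss = s @ s.T                               # s(x_i) . s(x_j)
    return (d * q ** -1.5 - 3.0 * sq * q ** -2.5
            + (rs_x - rs_y) * q ** -1.5 + ss * q ** -0.5)

def greedy_thin(x, s, m):
    """Greedily pick m indices, each step minimising the KSD of the selected set."""
    K = stein_kernel_matrix(x, s)
    # Cost of adding candidate i: K[i, i] / 2 + sum of K[i, j] over selected j.
    objective = 0.5 * np.diag(K).copy()
    selected = []
    for _ in range(m):
        j = int(np.argmin(objective))
        selected.append(j)                     # this sketch allows repeated indices
        objective += K[:, j]
    return selected
```

This version builds the full n-by-n kernel matrix, so it suits only modest chain lengths; for a standard normal target, the scores would simply be `s = -x`.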
