Shivang Patel

Improving Accuracy and Generalization for Efficient Visual Tracking

Nov 28, 2024

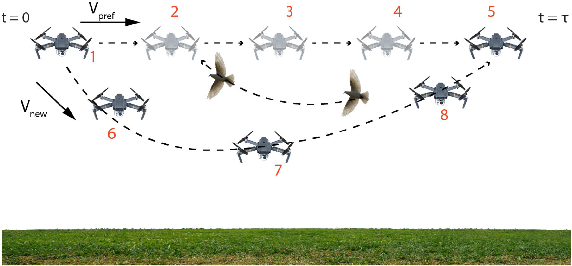

Abstract: Efficient visual trackers overfit to their training distributions and lack generalization abilities, resulting in them performing well on their respective in-distribution (ID) test sets and not as well on out-of-distribution (OOD) sequences, imposing limitations to their deployment in-the-wild under constrained resources. We introduce SiamABC, a highly efficient Siamese tracker that significantly improves tracking performance, even on OOD sequences. SiamABC takes advantage of new architectural designs in the way it bridges the dynamic variability of the target, and of new losses for training. Also, it directly addresses OOD tracking generalization by including a fast backward-free dynamic test-time adaptation method that continuously adapts the model according to the dynamic visual changes of the target. Our extensive experiments suggest that SiamABC shows remarkable performance gains in OOD sets while maintaining accurate performance on the ID benchmarks. SiamABC outperforms MixFormerV2-S by 7.6% on the OOD AVisT benchmark while being 3x faster (100 FPS) on a CPU.

A Robust Likelihood Model for Novelty Detection

Jun 06, 2023

Abstract: Current approaches to novelty or anomaly detection are based on deep neural networks. Despite their effectiveness, neural networks are also vulnerable to imperceptible deformations of the input data. This is a serious issue in critical applications, or when data alterations are generated by an adversarial attack. While this is a known problem that has been studied in recent years for the case of supervised learning, the case of novelty detection has received very limited attention. Indeed, in this latter setting the learning is typically unsupervised because outlier data is not available during training, and new approaches for this case need to be investigated. We propose a new prior that aims at learning a robust likelihood for the novelty test, as a defense against attacks. We also integrate the same prior with a state-of-the-art novelty detection approach. Because of the geometric properties of that approach, the resulting robust training is computationally very efficient. An initial evaluation of the method indicates that it is effective at improving performance with respect to the standard models in the absence and presence of attacks.

Self-supervised Interest Point Detection and Description for Fisheye and Perspective Images

Jun 02, 2023

Abstract: Keypoint detection and matching is a fundamental task in many computer vision problems, from shape reconstruction, to structure from motion, to AR/VR applications and robotics. It is a well-studied problem with remarkable successes such as SIFT, and more recent deep learning approaches. While great robustness is exhibited by these techniques with respect to noise, illumination variation, and rigid motion transformations, less attention has been placed on image distortion sensitivity. In this work, we focus on the case when this is caused by the geometry of the cameras used for image acquisition, and consider the keypoint detection and matching problem between the hybrid scenario of a fisheye and a projective image. We build on a state-of-the-art approach and derive a self-supervised procedure that enables training an interest point detector and descriptor network. We also collected two new datasets for additional training and testing in this unexplored scenario, and we demonstrate that current approaches are suboptimal because they are designed to work in traditional projective conditions, while the proposed approach turns out to be the most effective.

Learning Representations for Masked Facial Recovery

Dec 28, 2022

Abstract: The pandemic of these very recent years has led to a dramatic increase in people wearing protective masks in public venues. This poses obvious challenges to the pervasive use of face recognition technology that now is suffering a decline in performance. One way to address the problem is to revert to face recovery methods as a preprocessing step. Current approaches to face reconstruction and manipulation leverage the ability to model the face manifold, but tend to be generic. We introduce a method that is specific for the recovery of the face image from an image of the same individual wearing a mask. We do so by designing a specialized GAN inversion method, based on an appropriate set of losses for learning an unmasking encoder. With extensive experiments, we show that the approach is effective at unmasking face images. In addition, we also show that the identity information is preserved sufficiently well to improve face verification performance based on several face recognition benchmark datasets.

Multi-Agent Coverage in Urban Environments

Aug 17, 2020

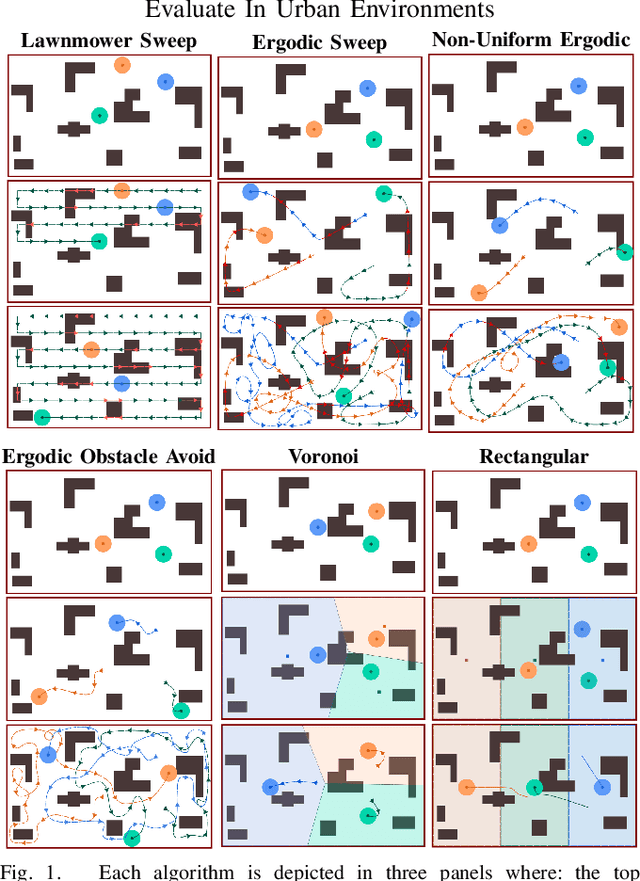

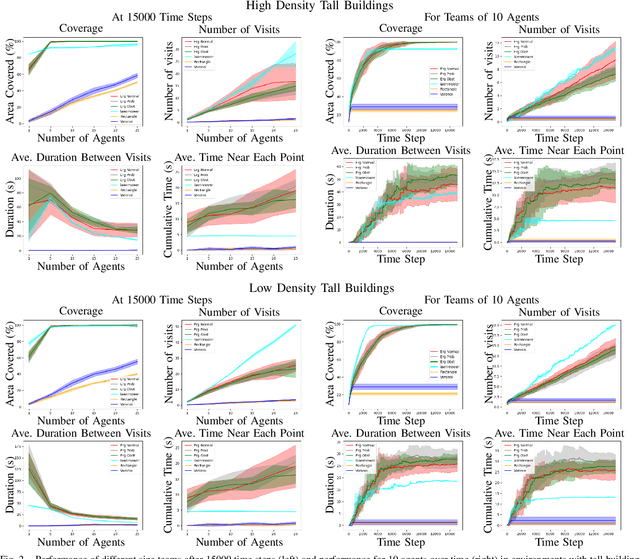

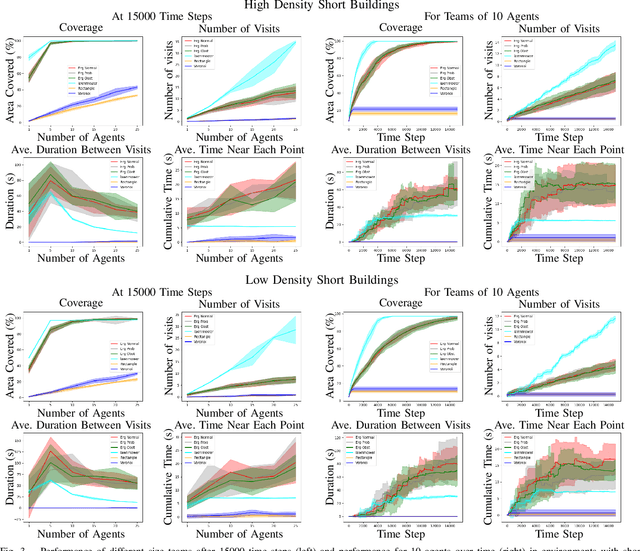

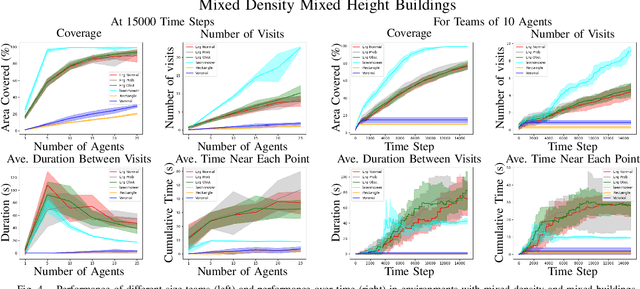

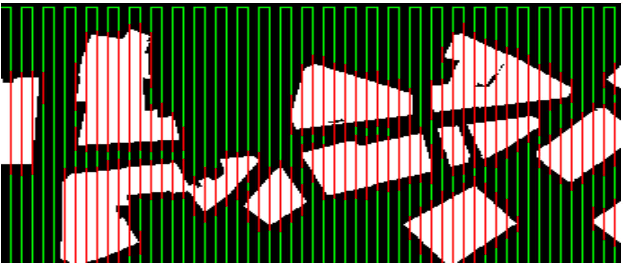

Abstract: We study multi-agent coverage algorithms for autonomous monitoring and patrol in urban environments. We consider scenarios in which a team of flying agents uses downward-facing cameras (or similar sensors) to observe the environment outside of buildings at street level. Buildings are considered obstacles that impede movement, and cameras are assumed to be ineffective above a maximum altitude. We study multi-agent urban coverage problems related to this scenario, including: (1) static multi-agent urban coverage, in which agents are expected to observe the environment from static locations, and (2) dynamic multi-agent urban coverage, where agents move continuously through the environment. We experimentally evaluate six different multi-agent coverage methods, including: three types of ergodic coverage (that avoid buildings in different ways), lawn-mower sweep, Voronoi-region-based control, and a naive grid method. We evaluate all algorithms with respect to four performance metrics (percent coverage, revisit count, revisit time, and the integral of area viewed over time), across four types of urban environments [low density, high density] x [short buildings, tall buildings], and for team sizes ranging from 2 to 25 agents. We believe this is the first extensive comparison of these methods in an urban setting. Our results highlight how the relative performance of static and dynamic methods changes based on the ratio of team size to search area, as well as the relative effects that different characteristics of urban environments (tall, short, dense, sparse, mixed) have on each algorithm.

LSwarm: Efficient Collision Avoidance for Large Swarms with Coverage Constraints in Complex Urban Scenes

Mar 06, 2019

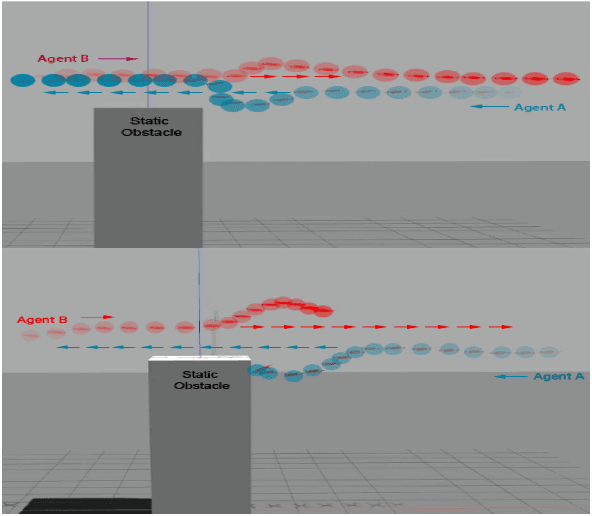

Abstract: In this paper, we address the problem of collision avoidance for a swarm of UAVs used for continuous surveillance of an urban environment. Our method, LSwarm, efficiently avoids collisions with static obstacles, dynamic obstacles, and other agents in 3-D urban environments while considering coverage constraints. LSwarm computes collision-avoiding velocities that (i) maximize the conformity of an agent to an optimal path given by a global coverage strategy and (ii) ensure sufficient resolution of the coverage data collected by each agent. Our algorithm is formulated based on ORCA (Optimal Reciprocal Collision Avoidance) and is scalable with respect to the size of the swarm. We evaluate the coverage performance of LSwarm in realistic simulations of a swarm of quadrotors in complex urban models. In practice, our approach can compute collision-avoiding velocities for a swarm composed of tens to hundreds of agents in a few milliseconds on dense urban scenes consisting of tens of buildings.
