Senthil Hariharan

DC-MRTA: Decentralized Multi-Robot Task Allocation and Navigation in Complex Environments

Sep 07, 2022

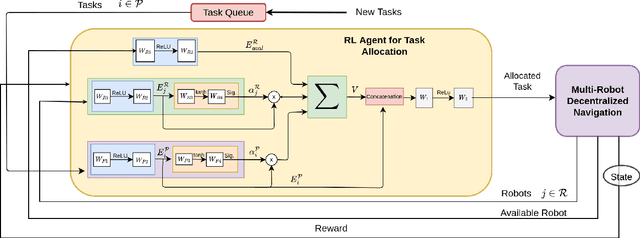

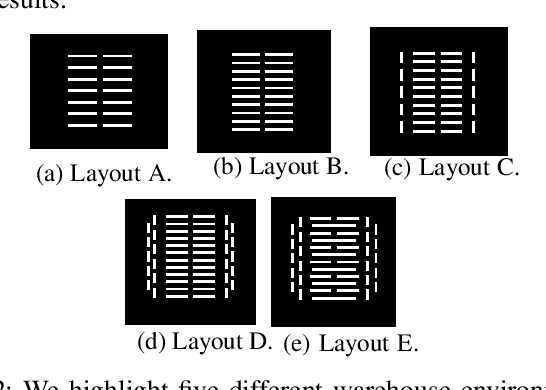

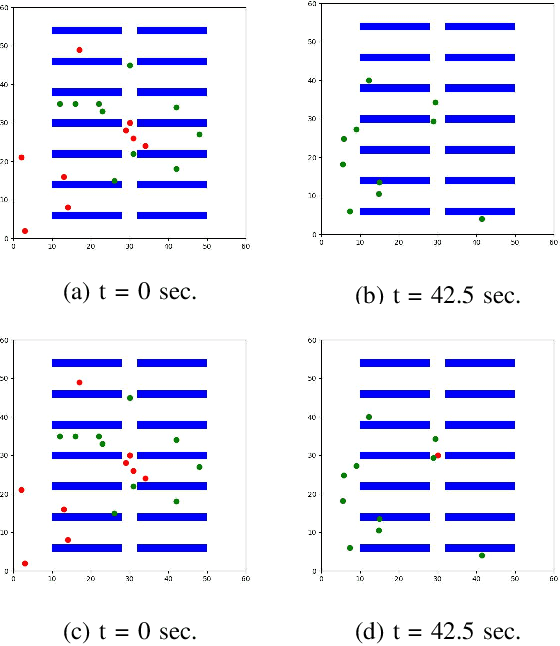

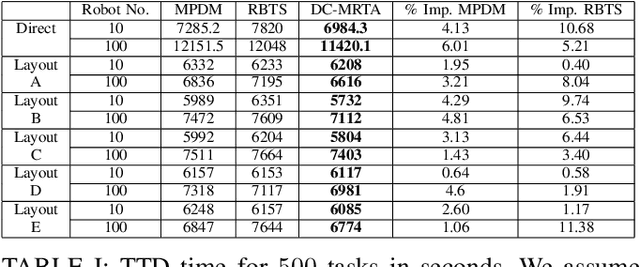

Abstract: We present a novel reinforcement learning (RL) based task allocation and decentralized navigation algorithm for mobile robots in warehouse environments. Our approach is designed for scenarios in which multiple robots perform various pickup and delivery tasks. We consider the problem of joint decentralized task allocation and navigation and present a two-level approach to solve it. At the higher level, we solve the task allocation problem by formulating it as a Markov Decision Process and choosing appropriate rewards to minimize the Total Travel Delay (TTD). At the lower level, we use a decentralized navigation scheme based on ORCA that enables each robot to perform these tasks independently and to avoid collisions with other robots and dynamic obstacles. We combine the two levels by defining the rewards for the higher level as feedback from the lower-level navigation algorithm. We perform extensive evaluation in complex warehouse layouts with a large number of agents and highlight the benefits over state-of-the-art algorithms based on myopic pickup distance minimization and regret-based task selection. We observe an improvement of up to 14% in task completion time and of up to 40% in computing collision-free trajectories for the robots.
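For orientation, the myopic pickup distance minimization baseline named in the abstract can be sketched roughly as below. This is not the paper's RL method, and it is not taken from the paper's code: the function name `myopic_allocate`, the dictionary-based input format, and the use of straight-line (Euclidean) distance are all assumptions made for illustration. A warehouse planner would more plausibly use path length around shelves.

```python
import math


def myopic_allocate(robots, pickups):
    """Greedily give each robot the closest still-unassigned pickup point.

    robots  : dict robot_id -> (x, y) position      (hypothetical format)
    pickups : dict task_id  -> (x, y) pickup point  (hypothetical format)
    Returns a dict robot_id -> task_id.
    """
    remaining = dict(pickups)  # copy so the caller's dict is not mutated
    assignment = {}
    for robot_id, position in robots.items():
        if not remaining:
            break  # more robots than tasks: the rest stay idle
        # Assumption: Euclidean distance stands in for real travel cost.
        nearest = min(remaining, key=lambda t: math.dist(position, remaining[t]))
        assignment[robot_id] = nearest
        del remaining[nearest]
    return assignment


robots = {"r1": (0.0, 0.0), "r2": (10.0, 0.0)}
pickups = {"a": (9.0, 0.0), "b": (1.0, 0.0)}
result = myopic_allocate(robots, pickups)
```

Such a rule looks only at the immediate pickup distance and ignores congestion and downstream delay, which is the kind of short-sightedness the abstract contrasts with its reward design based on Total Travel Delay.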

Multi-Agent Coverage in Urban Environments

Aug 17, 2020

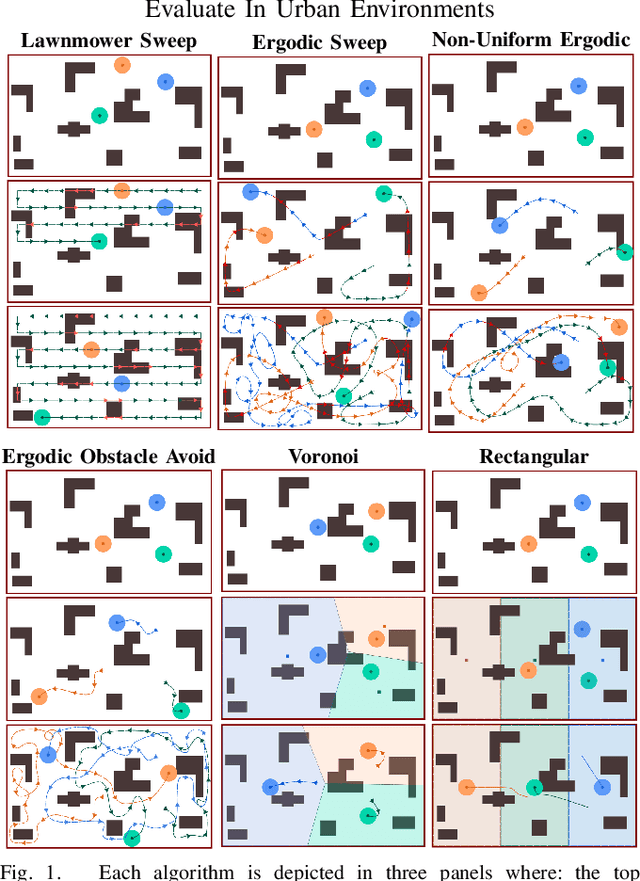

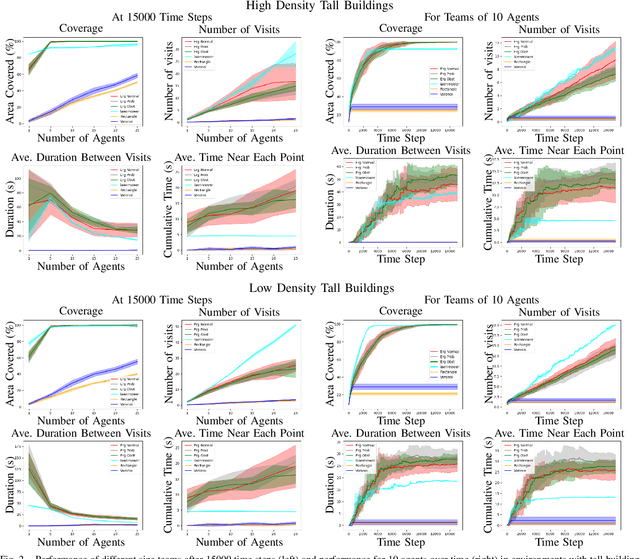

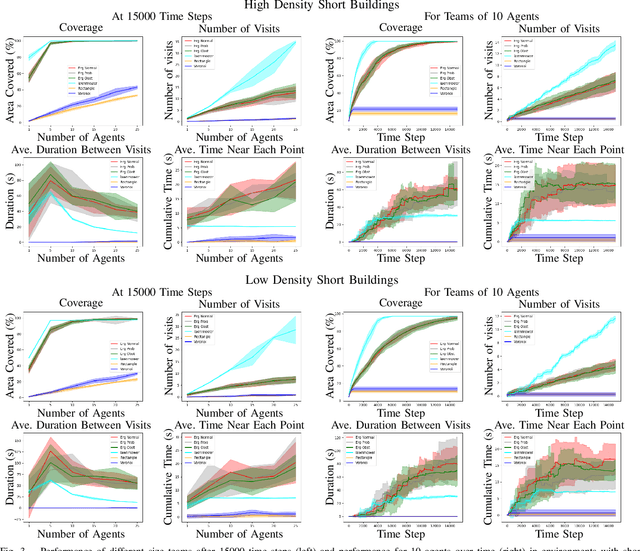

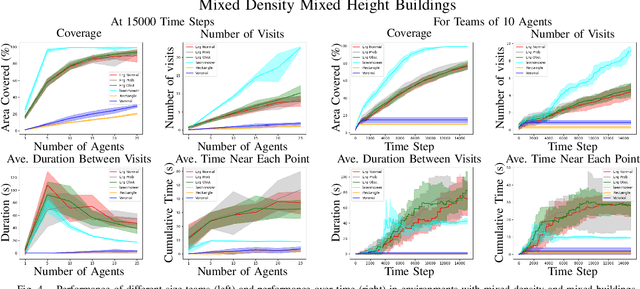

Abstract: We study multi-agent coverage algorithms for autonomous monitoring and patrol in urban environments. We consider scenarios in which a team of flying agents uses downward-facing cameras (or similar sensors) to observe the environment outside of buildings at street level. Buildings are considered obstacles that impede movement, and cameras are assumed to be ineffective above a maximum altitude. We study multi-agent urban coverage problems related to this scenario, including: (1) static multi-agent urban coverage, in which agents are expected to observe the environment from static locations, and (2) dynamic multi-agent urban coverage, in which agents move continuously through the environment. We experimentally evaluate six different multi-agent coverage methods: three types of ergodic coverage (that avoid buildings in different ways), lawn-mower sweep, Voronoi-region-based control, and a naive grid method. We evaluate all algorithms with respect to four performance metrics (percent coverage, revisit count, revisit time, and the integral of area viewed over time), across four types of urban environments [low density, high density] x [short buildings, tall buildings], and for team sizes ranging from 2 to 25 agents. We believe this is the first extensive comparison of these methods in an urban setting. Our results highlight how the relative performance of static and dynamic methods changes with the ratio of team size to search area, as well as the relative effects that different characteristics of urban environments (tall, short, dense, sparse, mixed) have on each algorithm.
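Two of the metrics listed in the abstract, percent coverage and revisit count, could be computed on a discretized map roughly as follows. The abstract does not give the paper's exact definitions, so everything here is an assumption: the grid-cell representation, the function name `coverage_metrics`, and the choice to count every observation of a cell after its first as a revisit (which also counts consecutive time steps over the same cell).

```python
from collections import Counter


def coverage_metrics(observations, free_cells):
    """Compute percent coverage and total revisit count on a grid.

    observations : list of sets; each set holds the (row, col) cells
                   seen by the whole team at one time step (hypothetical format)
    free_cells   : set of (row, col) street-level cells outside buildings
    Returns (percent_coverage, revisit_count).
    """
    if not free_cells:
        raise ValueError("free_cells must not be empty")
    times_seen = Counter()
    for seen in observations:
        for cell in seen:
            if cell in free_cells:  # ignore cells inside buildings or off the map
                times_seen[cell] += 1
    percent_coverage = 100.0 * len(times_seen) / len(free_cells)
    # Assumption: every observation after the first counts as one revisit.
    revisit_count = sum(count - 1 for count in times_seen.values())
    return percent_coverage, revisit_count


free = {(0, 0), (0, 1), (1, 0), (1, 1)}
obs = [{(0, 0), (0, 1)}, {(0, 1), (5, 5)}, {(0, 1)}]
percent, revisits = coverage_metrics(obs, free)
```

In this toy run two of the four free cells are ever seen, and cell `(0, 1)` is seen three times, so the sketch reports 50% coverage and two revisits.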
