DC-MRTA: Decentralized Multi-Robot Task Allocation and Navigation in Complex Environments

Paper and Code

Sep 07, 2022

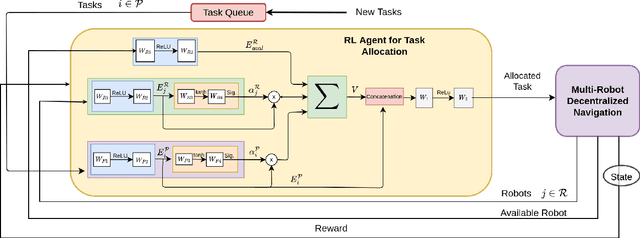

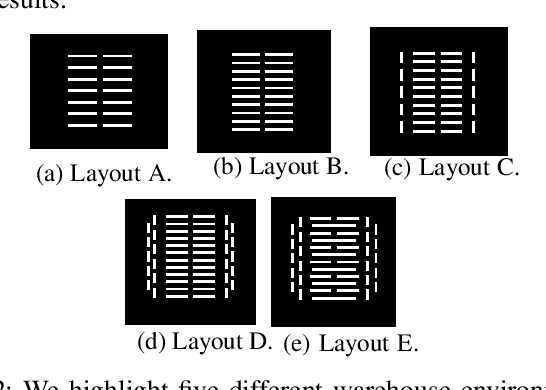

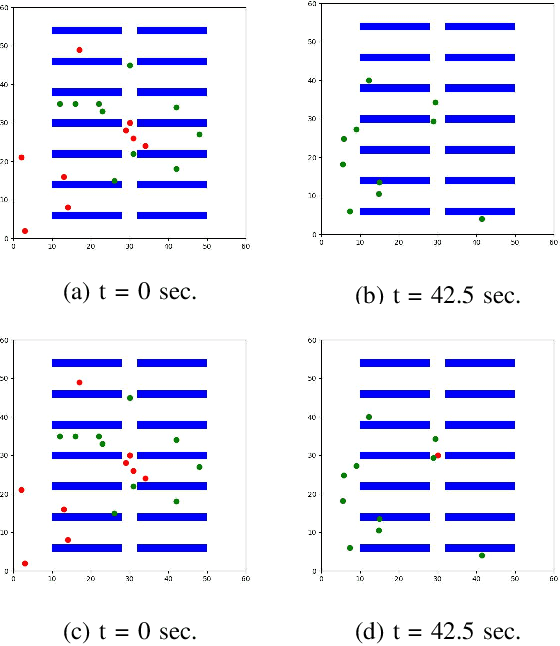

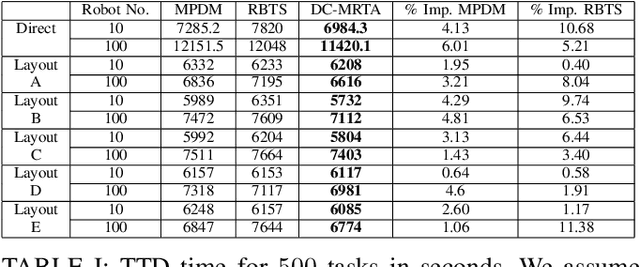

We present a novel reinforcement learning (RL)-based task allocation and decentralized navigation algorithm for mobile robots in warehouse environments. Our approach is designed for scenarios in which multiple robots perform various pickup and delivery tasks. We consider the problem of joint decentralized task allocation and navigation and present a two-level approach to solve it. At the higher level, we solve the task allocation by formulating it in terms of Markov Decision Processes and choosing the appropriate rewards to minimize the Total Travel Delay (TTD). At the lower level, we use a decentralized navigation scheme based on ORCA that enables each robot to perform these tasks independently and to avoid collisions with other robots and dynamic obstacles. We combine the two levels by defining the rewards for the higher level as the feedback from the lower-level navigation algorithm. We perform an extensive evaluation in complex warehouse layouts with a large number of agents and highlight the benefits over state-of-the-art algorithms based on myopic pickup distance minimization and regret-based task selection. We observe an improvement of up to 14% in task completion time and of up to 40% in computing collision-free trajectories for the robots.
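The coupling between the two levels can be illustrated with a toy sketch. This is not the authors' implementation: the grid cells, the `simulated_travel_delay` stand-in for navigation feedback (the paper uses ORCA-based navigation, which is not modeled here), the tabular Q-update, and every function name below are assumptions made purely for illustration. The sketch contrasts a myopic nearest-pickup rule with a choice driven by a reward equal to the negative delay reported by the lower level.

```python
from typing import Dict, List, Tuple

Cell = Tuple[int, int]
Task = Tuple[Cell, Cell]  # (pickup cell, drop-off cell); hypothetical encoding


def manhattan(a: Cell, b: Cell) -> int:
    """Grid distance between two cells."""
    return abs(a[0] - b[0]) + abs(a[1] - b[1])


def simulated_travel_delay(robot: Cell, task: Task, congestion: float = 0.0) -> float:
    """Assumed stand-in for lower-level feedback: path length to the pickup
    and on to the drop-off, inflated by a congestion factor."""
    pickup, dropoff = task
    return (manhattan(robot, pickup) + manhattan(pickup, dropoff)) * (1.0 + congestion)


def myopic_choice(robot: Cell, tasks: List[Task]) -> int:
    """Baseline: index of the task whose pickup is closest to the robot."""
    return min(range(len(tasks)), key=lambda i: manhattan(robot, tasks[i][0]))


def q_update(q: Dict, state, action: int, reward: float, next_best: float,
             alpha: float = 0.5, gamma: float = 0.9) -> float:
    """One standard tabular Q-learning update; returns the new Q-value."""
    old = q.get((state, action), 0.0)
    q[(state, action)] = old + alpha * (reward + gamma * next_best - old)
    return q[(state, action)]


def greedy_from_q(q: Dict, state, n_actions: int) -> int:
    """Index of the action with the highest Q-value in the given state."""
    return max(range(n_actions), key=lambda a: q.get((state, a), 0.0))


if __name__ == "__main__":
    robot = (0, 0)
    tasks = [((1, 0), (9, 0)), ((2, 0), (3, 0))]
    q: Dict = {}
    for action, task in enumerate(tasks):
        # Reward for the allocation level = negative delay from the navigation level.
        reward = -simulated_travel_delay(robot, task)
        q_update(q, "s0", action, reward, next_best=0.0)
    print("myopic:", myopic_choice(robot, tasks))
    print("reward-driven:", greedy_from_q(q, "s0", len(tasks)))
```

In this toy instance the myopic rule picks task 0 because its pickup is one cell away, while the reward-driven choice prefers task 1, whose total simulated delay is lower (3 versus 9). A real system would replace the delay stub with measurements from the navigation layer and the lookup table with whatever policy representation the allocation level uses.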
