Shaolei Wang

CL-bench: A Benchmark for Context Learning

Feb 03, 2026

Abstract:Current language models (LMs) excel at reasoning over prompts using pre-trained knowledge. However, real-world tasks are far more complex and context-dependent: models must learn from task-specific context and leverage new knowledge beyond what is learned during pre-training to reason and resolve tasks. We term this capability context learning, a crucial ability that humans naturally possess but that has been largely overlooked. To this end, we introduce CL-bench, a real-world benchmark consisting of 500 complex contexts, 1,899 tasks, and 31,607 verification rubrics, all crafted by experienced domain experts. Each task is designed such that the new content required to resolve it is contained within the corresponding context. Resolving tasks in CL-bench requires models to learn from the context, ranging from new domain-specific knowledge, rule systems, and complex procedures to laws derived from empirical data, all of which are absent from pre-training. This goes far beyond long-context tasks that primarily test retrieval or reading comprehension, and in-context learning tasks, where models learn simple task patterns via instructions and demonstrations. Our evaluations of ten frontier LMs find that models solve only 17.2% of tasks on average. Even the best-performing model, GPT-5.1, solves only 23.7%, revealing that LMs have yet to achieve effective context learning, which poses a critical bottleneck for tackling real-world, complex context-dependent tasks. CL-bench represents a step towards building LMs with this fundamental capability, making them more intelligent and advancing their deployment in real-world scenarios.

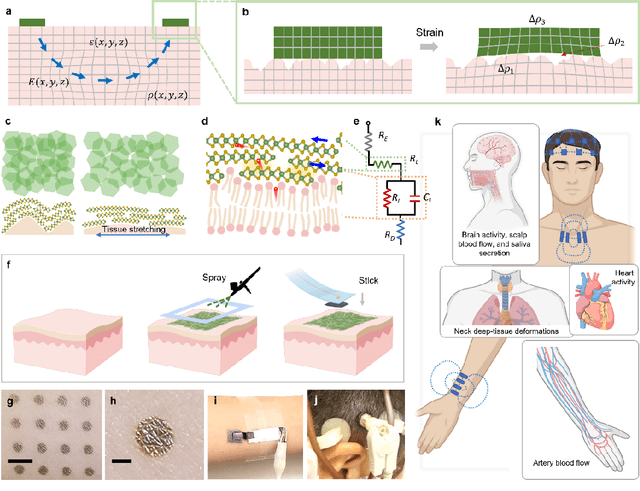

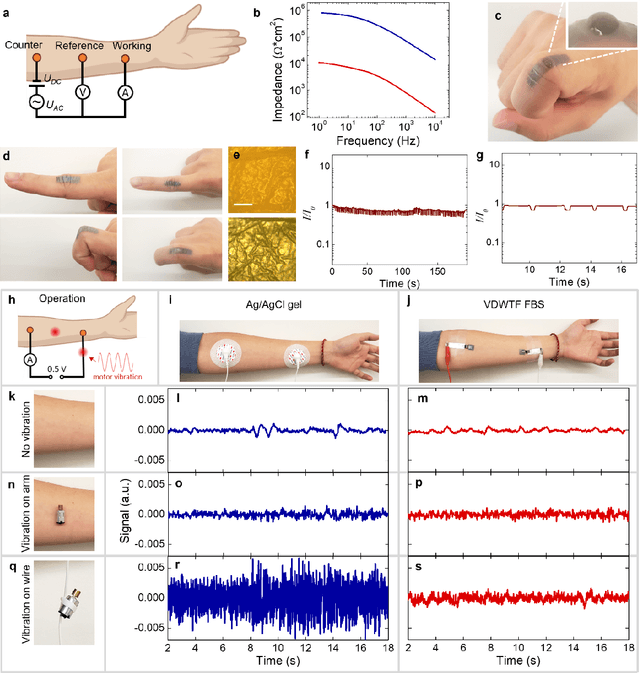

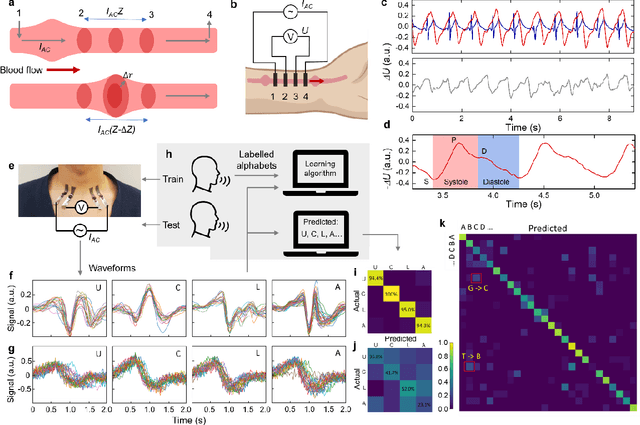

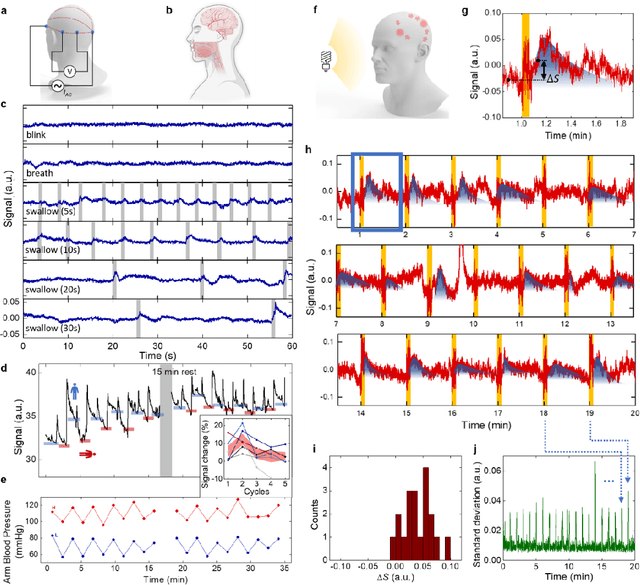

Electrically functionalized body surface for deep-tissue bioelectrical recording

Dec 04, 2024

Abstract:Directly probing deep tissue activities from body surfaces offers a noninvasive approach to monitoring essential physiological processes [1-3]. However, this method is technically challenged by rapid signal attenuation toward the body surface and confounding motion artifacts [4-6], primarily due to excessive contact impedance and mechanical mismatch with conventional electrodes. Herein, by formulating and directly spray coating biocompatible two-dimensional nanosheet ink onto the human body under ambient conditions, we create microscopically conformal and adaptive van der Waals thin films (VDWTFs) that seamlessly merge with non-Euclidean, hairy, and dynamically evolving body surfaces. Unlike traditional deposition methods, which often struggle with conformality and adaptability while retaining high electronic performance, this gentle process enables the formation of high-performance VDWTFs directly on the body surface under bio-friendly conditions, making it ideal for biological applications. This results in low-impedance electrically functionalized body surfaces (EFBS), enabling highly robust monitoring of biopotential and bioimpedance modulations associated with deep-tissue activities, such as blood circulation, muscle movements, and brain activities. Compared to commercial solutions, our VDWTF-EFBS exhibits nearly two orders of magnitude lower contact impedance and substantially reduces the extrinsic motion artifacts, enabling reliable extraction of bioelectrical signals from irregular surfaces, such as unshaved human scalps. This advancement defines a technology for continuous, noninvasive monitoring of deep-tissue activities during routine body movements.

Eval-GCSC: A New Metric for Evaluating ChatGPT's Performance in Chinese Spelling Correction

Nov 14, 2023

Abstract:ChatGPT has demonstrated impressive performance in various downstream tasks. However, in the Chinese Spelling Correction (CSC) task, we observe a discrepancy: while ChatGPT performs well under human evaluation, it scores poorly according to traditional metrics. We believe this inconsistency arises because the traditional metrics are not well-suited for evaluating generative models. Their overly strict length and phonics constraints may lead to underestimating ChatGPT's correction capabilities. To better evaluate generative models in the CSC task, this paper proposes a new evaluation metric: Eval-GCSC. By incorporating word-level and semantic similarity judgments, it relaxes the stringent length and phonics constraints. Experimental results show that Eval-GCSC closely aligns with human evaluations. Under this metric, ChatGPT's performance is comparable to traditional token-level classification models (TCM), demonstrating its potential as a CSC tool. The source code and scripts can be accessed at https://github.com/ktlKTL/Eval-GCSC.

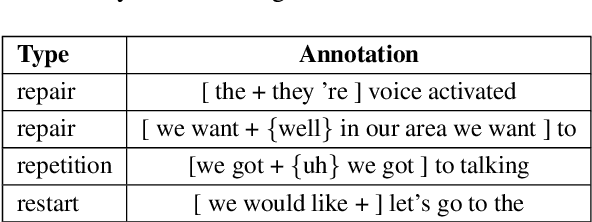

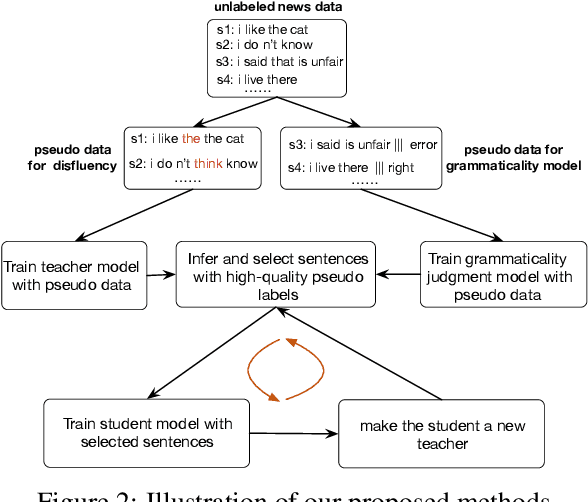

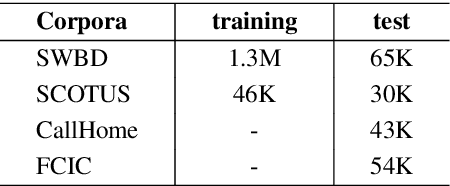

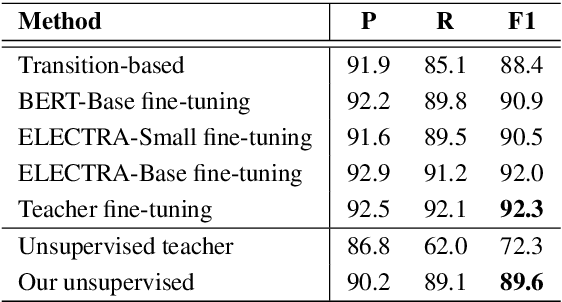

Combining Self-Training and Self-Supervised Learning for Unsupervised Disfluency Detection

Oct 29, 2020

Abstract:Most existing approaches to disfluency detection heavily rely on human-annotated corpora, which are expensive to obtain in practice. There have been several proposals to alleviate this issue with, for instance, self-supervised learning techniques, but they still require human-annotated corpora. In this work, we explore the unsupervised learning paradigm, which can potentially work with unlabeled text corpora that are cheaper and easier to obtain. Our model builds upon the recent work on Noisy Student Training, a semi-supervised learning approach that extends the idea of self-training. Experimental results on the commonly used English Switchboard test set show that our approach achieves competitive performance compared to the previous state-of-the-art supervised systems using contextualized word embeddings (e.g. BERT and ELECTRA).

Multi-Task Self-Supervised Learning for Disfluency Detection

Aug 15, 2019

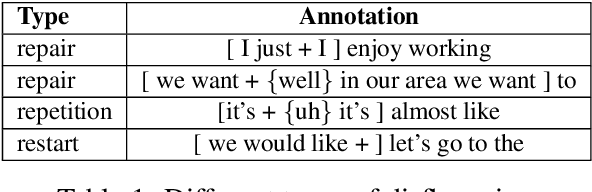

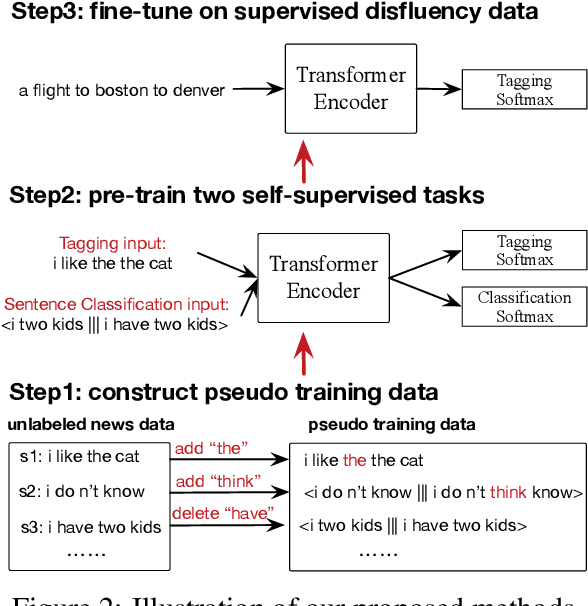

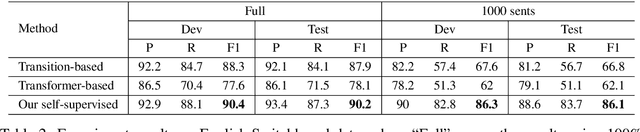

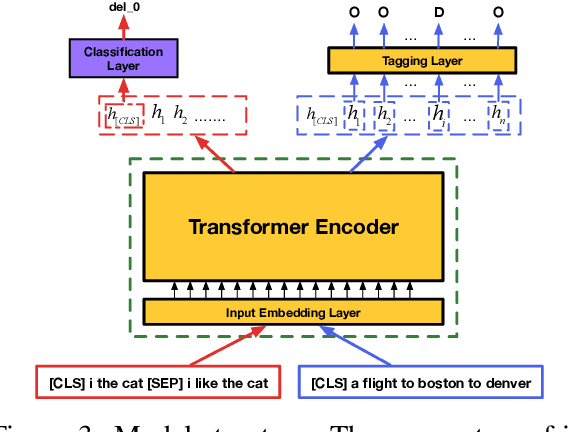

Abstract:Most existing approaches to disfluency detection heavily rely on human-annotated data, which is expensive to obtain in practice. To tackle the training data bottleneck, we investigate methods for combining multiple self-supervised tasks, i.e., supervised tasks where data can be collected without manual labeling. First, we construct large-scale pseudo training data by randomly adding or deleting words from unlabeled news data, and propose two self-supervised pre-training tasks: (i) a tagging task to detect the added noisy words, and (ii) a sentence classification task to distinguish original sentences from grammatically incorrect sentences. We then combine these two tasks to jointly train a network. The pre-trained network is then fine-tuned using human-annotated disfluency detection training data. Experimental results on the commonly used English Switchboard test set show that our approach can achieve competitive performance compared to the previous systems (trained using the full dataset) by using less than 1% (1000 sentences) of the training data. Our method trained on the full dataset significantly outperforms previous methods, reducing the error by 21% on English Switchboard.
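
The pseudo-data construction described in this abstract can be illustrated with a minimal sketch. This is not the authors' implementation: the function name `make_pseudo_example`, the noise probabilities `p_add` and `p_del`, and the filler vocabulary are all hypothetical choices made for illustration. The sketch produces, for one unlabeled sentence, a noisy token sequence, per-token tags marking inserted words (for the tagging task), and a sentence-level label marking whether the sentence was altered (for the sentence classification task).

```python
import random

def make_pseudo_example(tokens, vocab, rng, p_add=0.15, p_del=0.15):
    """Corrupt one sentence by randomly adding or deleting words.

    Returns (noisy_tokens, tags, label) where tags[i] == 1 marks an
    inserted word and label == 1 marks a sentence that was altered.
    p_add, p_del, and vocab are illustrative assumptions, not values
    taken from the paper.
    """
    noisy, tags = [], []
    deleted_any = False
    for tok in tokens:
        # Possibly insert a random noise word before the current token.
        if rng.random() < p_add:
            noisy.append(rng.choice(vocab))
            tags.append(1)
        # Possibly drop the current token.
        if rng.random() < p_del:
            deleted_any = True
            continue
        noisy.append(tok)
        tags.append(0)
    label = int(deleted_any or any(tags))
    return noisy, tags, label

if __name__ == "__main__":
    rng = random.Random(0)
    sentence = "the cat sat on the mat".split()
    print(make_pseudo_example(sentence, ["uh", "well", "like"], rng))
```

In a joint training setup, `tags` would supervise a token-level head and `label` a sentence-level head over a shared encoder; how the two losses are weighted is not stated in the abstract.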
