Seoyeon Jang

TRIP: Terrain Traversability Mapping With Risk-Aware Prediction for Enhanced Online Quadrupedal Robot Navigation

Nov 26, 2024

Abstract: Accurate traversability estimation using an online dense terrain map is crucial for safe navigation in challenging environments like construction and disaster areas. However, traversability estimation for legged robots on rough terrains faces substantial challenges owing to limited terrain information caused by restricted field-of-view, data occlusion, and sparsity. To robustly map traversable regions, we introduce terrain traversability mapping with risk-aware prediction (TRIP). TRIP reconstructs terrain maps while predicting multi-modal traversability risks, enhancing online autonomous navigation with the following contributions. First, estimating steppability in a spherical projection space addresses data sparsity while accommodating scalable terrain properties. Second, the proposed traversability-aware Bayesian generalized kernel (T-BGK)-based inference method enhances terrain completion accuracy and efficiency. Lastly, leveraging the steppability-based Mahalanobis distance contributes to robustness against outliers and dynamic elements, ultimately yielding a static terrain traversability map. As verified on both public and in-house datasets, TRIP shows significant performance gains in terrain reconstruction and navigation map quality. A demo video that demonstrates its feasibility as an integral component of an onboard online autonomous navigation system for quadruped robots is available at https://youtu.be/d7HlqAP4l0c.
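The abstract does not spell out how the spherical projection space is built, so the following is only a minimal sketch of a generic spherical (range-image) projection of a single LiDAR point. The function name `spherical_project`, the image size, and the vertical field-of-view values are all hypothetical choices for illustration, not TRIP's actual parameters, and the steppability computation itself is not shown.

```python
import math

def spherical_project(point, width=360, height=32,
                      fov_up_deg=15.0, fov_down_deg=-15.0):
    """Map a 3D point (x, y, z) to (row, col, range) in a spherical image.

    All defaults are illustrative assumptions, not values from the paper.
    Returns None for the origin or for points outside the vertical FOV.
    """
    x, y, z = point
    r = math.sqrt(x * x + y * y + z * z)
    if r == 0.0:
        return None

    azimuth = math.atan2(y, x)      # horizontal angle in [-pi, pi]
    elevation = math.asin(z / r)    # vertical angle in [-pi/2, pi/2]

    fov_up = math.radians(fov_up_deg)
    fov_down = math.radians(fov_down_deg)
    if not (fov_down <= elevation <= fov_up):
        return None

    # Azimuth maps linearly to columns; the top row corresponds to fov_up.
    col = int((azimuth + math.pi) / (2.0 * math.pi) * width) % width
    row = int((fov_up - elevation) / (fov_up - fov_down) * (height - 1) + 0.5)
    return row, col, r
```

Binning points by angle rather than by metric grid cell keeps the per-cell point density roughly constant with distance, which is one common reason a spherical space is used for sparse LiDAR data.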

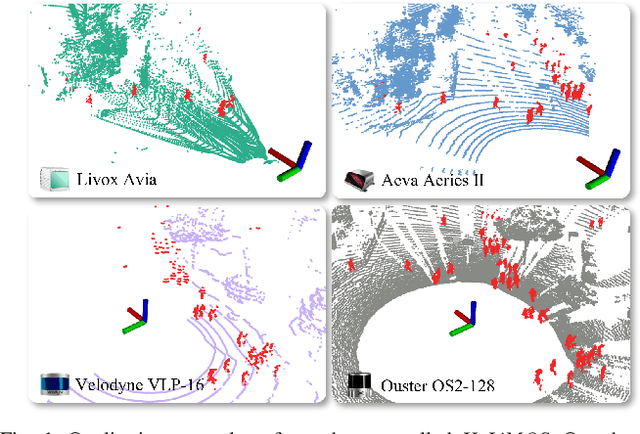

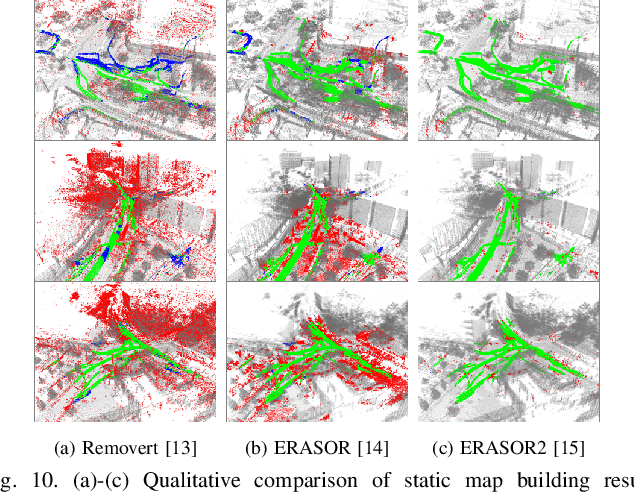

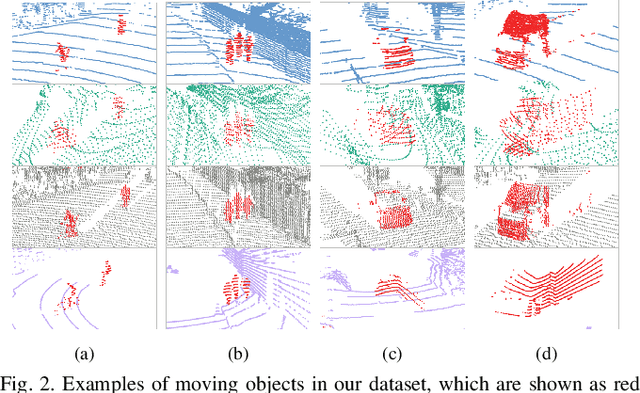

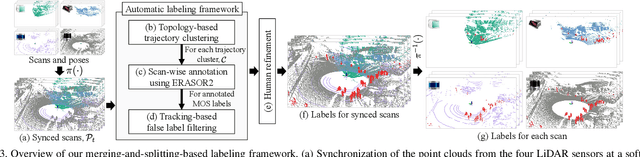

HeLiMOS: A Dataset for Moving Object Segmentation in 3D Point Clouds From Heterogeneous LiDAR Sensors

Aug 12, 2024

Abstract: Moving object segmentation (MOS) using a 3D light detection and ranging (LiDAR) sensor is crucial for scene understanding and identification of moving objects. Despite the availability of various types of 3D LiDAR sensors on the market, MOS research still predominantly focuses on 3D point clouds from mechanically spinning omnidirectional LiDAR sensors. As a result, there is, for example, no dataset with MOS labels for point clouds from solid-state LiDAR sensors, which have irregular scanning patterns. In this paper, we present a labeled dataset, called HeLiMOS, that enables testing MOS approaches on four heterogeneous LiDAR sensors, including two solid-state LiDAR sensors. Furthermore, we introduce a novel automatic labeling method to substantially reduce the labeling effort required from human annotators. To this end, our framework exploits an instance-aware static map building approach and tracking-based false label filtering. Finally, we provide experimental results on the performance of commonly used state-of-the-art MOS approaches on HeLiMOS, which suggest a new direction toward sensor-agnostic MOS that works regardless of the type of LiDAR sensor used to capture the 3D point clouds. Our dataset is available at https://sites.google.com/view/helimos.

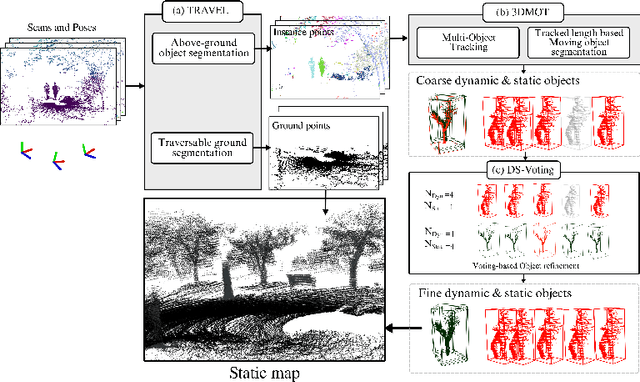

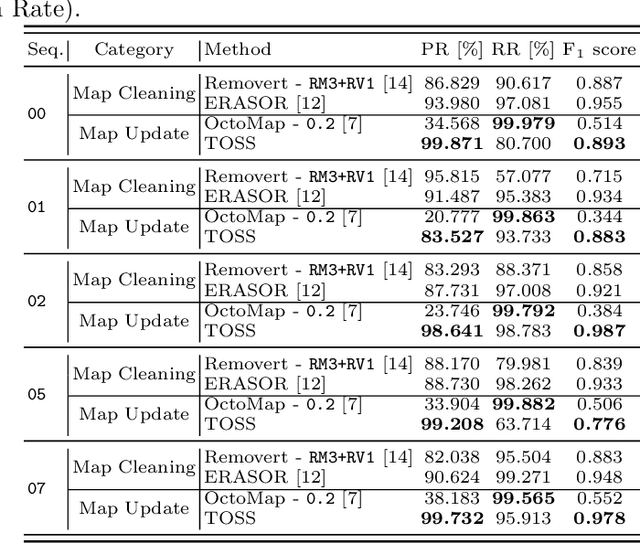

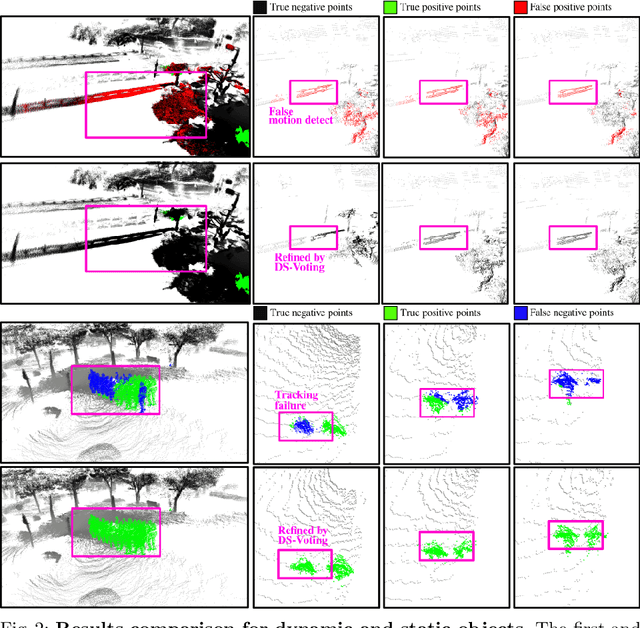

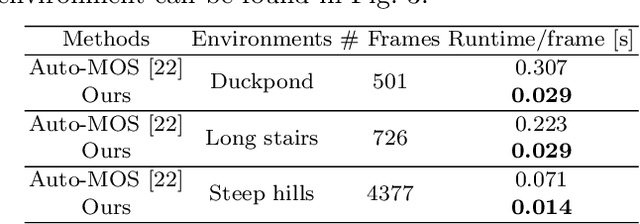

TOSS: Real-time Tracking and Moving Object Segmentation for Static Scene Mapping

Aug 10, 2024

Abstract: Safe navigation with simultaneous localization and mapping (SLAM) for autonomous robots is crucial in challenging environments. To achieve this goal, detecting moving objects in the surroundings and building a static map are essential. However, existing moving object segmentation methods have been developed separately for each field, making it challenging to perform real-time navigation and precise static map building simultaneously. In this paper, we propose an integrated real-time framework that combines online tracking-based moving object segmentation with static map building. For safe navigation, we introduce a computationally efficient hierarchical association cost matrix to enable real-time moving object segmentation. For precise static mapping, we present a voting-based method, DS-Voting, designed to achieve accurate dynamic object removal and static object recovery by emphasizing their spatio-temporal differences. We evaluate our proposed method quantitatively and qualitatively on the SemanticKITTI dataset and in challenging real-world environments. The results demonstrate that dynamic objects can be clearly distinguished and incorporated into static map construction, even on stairs, on steep hills, and in dense vegetation.

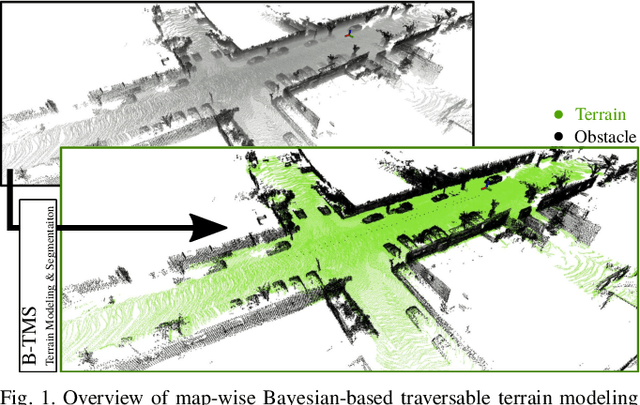

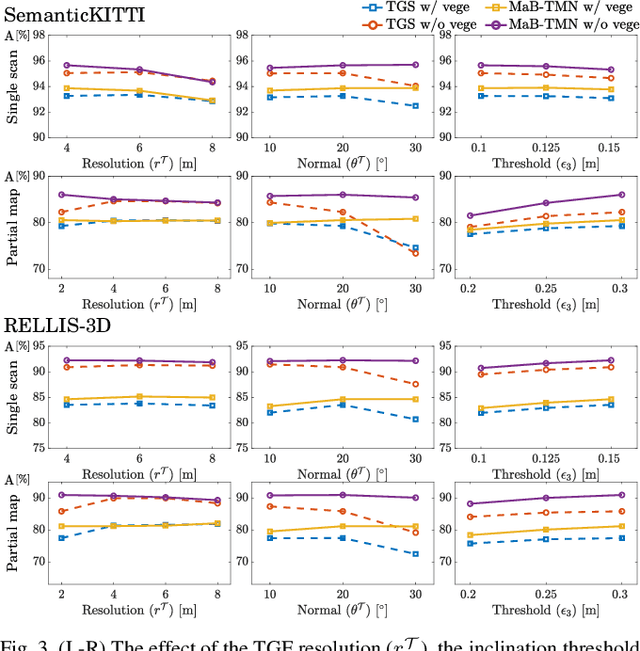

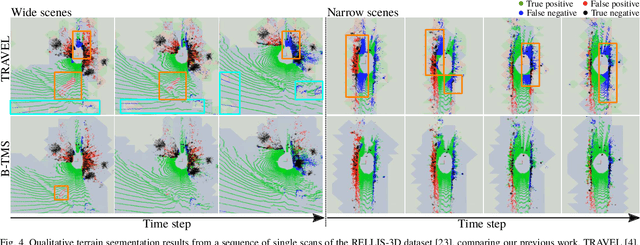

B-TMS: Bayesian Traversable Terrain Modeling and Segmentation Across 3D LiDAR Scans and Maps for Enhanced Off-Road Navigation

Jun 26, 2024

Abstract: Recognizing traversable terrain from 3D point cloud data is critical, as it directly impacts the performance of autonomous navigation in off-road environments. However, existing segmentation algorithms often struggle with challenges related to changes in data distribution, environmental specificity, and sensor variations. Moreover, when encountering sunken areas, their performance is frequently compromised, and they may even fail to recognize them. To address these challenges, we introduce B-TMS, a novel approach that performs map-wise terrain modeling and segmentation by utilizing a Bayesian generalized kernel (BGK) within the graph structure known as the tri-grid field (TGF). Our experiments encompass various data distributions, ranging from single scans to partial maps, utilizing both public datasets representing urban scenes and off-road environments, and our own dataset acquired from extremely bumpy terrains. Our results demonstrate notable contributions, particularly in terms of robustness to data distribution variations, adaptability to diverse environmental conditions, and resilience to parameter changes.
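For readers unfamiliar with BGK inference, here is a minimal sketch of the generic form commonly used for terrain elevation completion: a compactly supported sparse kernel weights nearby observations, and the prediction is a prior-regularized weighted mean. This is not B-TMS's formulation on the tri-grid field; the function names, the 2D point layout, and the parameters `length`, `prior_mean`, and `prior_strength` are assumptions made for illustration.

```python
import math

def sparse_kernel(d, length):
    """Compactly supported sparse kernel: 1 at d = 0, exactly 0 for d >= length."""
    if d >= length:
        return 0.0
    r = d / length
    return ((2.0 + math.cos(2.0 * math.pi * r)) / 3.0) * (1.0 - r) \
        + math.sin(2.0 * math.pi * r) / (2.0 * math.pi)

def bgk_predict(query, points, values, length=1.0,
                prior_mean=0.0, prior_strength=1e-3):
    """Predict a value (e.g. elevation) at `query` from nearby observations.

    points: list of (x, y) positions; values: observed value at each position.
    Returns (mean, kernel_sum); a small kernel_sum means little supporting data.
    """
    k_sum = 0.0
    ky_sum = 0.0
    for (x, y), v in zip(points, values):
        d = math.hypot(x - query[0], y - query[1])
        k = sparse_kernel(d, length)
        k_sum += k
        ky_sum += k * v
    # Prior-regularized kernel-weighted mean (prior_strength must be > 0).
    mean = (prior_strength * prior_mean + ky_sum) / (prior_strength + k_sum)
    return mean, k_sum
```

Because the kernel vanishes beyond `length`, only local observations contribute, which keeps inference cheap; the returned kernel sum can serve as a rough confidence measure for the completed cell.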
