Se-In Jang

TauPETGen: Text-Conditional Tau PET Image Synthesis Based on Latent Diffusion Models

Jun 21, 2023

Abstract: In this work, we developed a novel text-guided image synthesis technique that can generate realistic tau PET images from textual descriptions and the subject's MR image. The generated tau PET images could be used to examine relations between different measures and to increase the public availability of tau PET datasets. The method is based on latent diffusion models. Both the textual descriptions and the subject's MR prior image were used as conditions during image generation. The subject's MR image provides anatomical detail, while text descriptions such as gender, scan time, cognitive test scores, and amyloid status provide further guidance on where tau neurofibrillary tangles might be deposited. Preliminary experimental results on clinical [18F]MK-6240 datasets demonstrate the feasibility of the proposed method for generating realistic tau PET images at different clinical stages.
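
As a rough illustration of what "latent diffusion with MR and text conditions" involves, the sketch below applies the standard DDPM forward-noising step to a latent and builds a denoiser input by stacking a hypothetical MR latent along the channel axis, with a text embedding kept alongside for cross-attention. The linear beta schedule, all shapes, and the channel-concatenation scheme are assumptions for illustration, not details confirmed by the paper; `linear_alpha_bar`, `noise_latent`, and `denoiser_input` are hypothetical helpers.

```python
import numpy as np

def linear_alpha_bar(num_steps: int = 1000, beta_start: float = 1e-4, beta_end: float = 0.02) -> np.ndarray:
    """Cumulative product of (1 - beta_t) for an assumed linear beta schedule."""
    betas = np.linspace(beta_start, beta_end, num_steps)
    return np.cumprod(1.0 - betas)

def noise_latent(z0, t, alpha_bar, rng):
    """Sample z_t ~ q(z_t | z_0) = N(sqrt(a_t) z_0, (1 - a_t) I) and return the noise used."""
    eps = rng.standard_normal(z0.shape)
    zt = np.sqrt(alpha_bar[t]) * z0 + np.sqrt(1.0 - alpha_bar[t]) * eps
    return zt, eps

def denoiser_input(zt, mr_latent):
    """Hypothetical conditioning: stack the MR latent with z_t along the channel axis."""
    return np.concatenate([zt, mr_latent], axis=0)

rng = np.random.default_rng(0)
alpha_bar = linear_alpha_bar()
tau_latent = rng.standard_normal((4, 16, 16, 16))   # hypothetical tau PET latent (C, D, H, W)
mr_latent = rng.standard_normal((4, 16, 16, 16))    # hypothetical MR latent on the same grid
text_embedding = rng.standard_normal((8, 64))       # hypothetical tokens: gender, scan time, scores, amyloid status

zt, eps = noise_latent(tau_latent, t=500, alpha_bar=alpha_bar, rng=rng)
x_in = denoiser_input(zt, mr_latent)
# A denoising network (not shown) would be trained to predict eps from x_in and t,
# attending to text_embedding, e.g. through cross-attention layers.
print(x_in.shape)  # (8, 16, 16, 16)
```

The MR latent is concatenated because it is spatially aligned with the tau latent, whereas the text descriptions are not spatial, which is why a token sequence consumed by attention is the usual choice for them.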

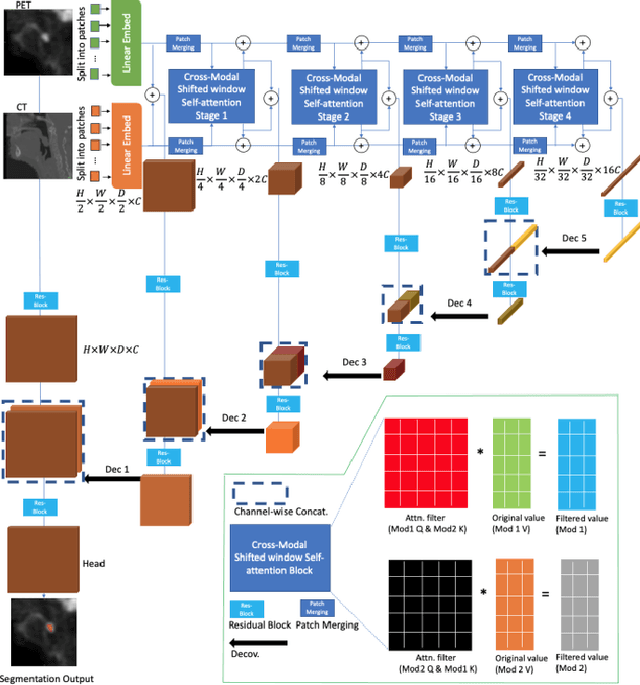

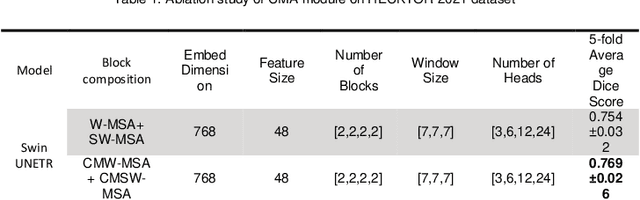

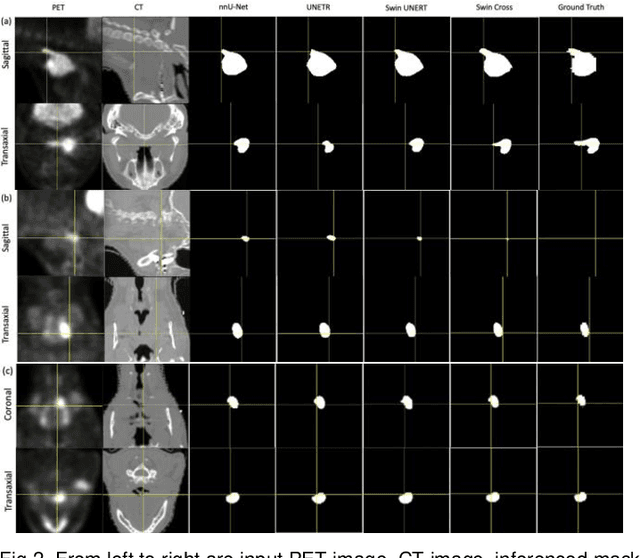

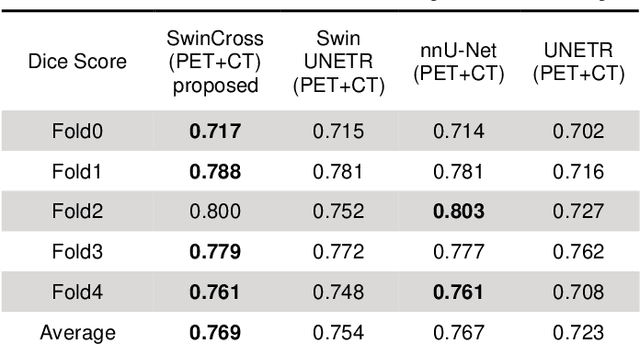

SwinCross: Cross-modal Swin Transformer for Head-and-Neck Tumor Segmentation in PET/CT Images

Feb 08, 2023

Abstract: Radiotherapy (RT) combined with cetuximab is the standard treatment for patients with inoperable head and neck cancers. Segmentation of head and neck (H&N) tumors is a prerequisite for radiotherapy planning but is a time-consuming process. In recent years, deep convolutional neural networks (DCNNs) have become the de facto standard for automated image segmentation. However, because enlarging the field of view in DCNNs is computationally expensive, their ability to model long-range dependencies is still limited, which can result in sub-optimal segmentation of objects whose background context spans long distances. Transformer models, on the other hand, have demonstrated excellent capabilities in capturing such long-range information in several semantic segmentation tasks on medical images. Inspired by the recent success of Vision Transformers and advances in multi-modal image analysis, we propose a novel segmentation model, dubbed Cross-Modal Swin Transformer (SwinCross), with a cross-modal attention (CMA) module that incorporates cross-modal feature extraction at multiple resolutions. To validate the effectiveness of the proposed method, we performed experiments on the HECKTOR 2021 challenge dataset and compared it with nnU-Net (the backbone of the top-5 methods in HECKTOR 2021) and other state-of-the-art transformer-based methods such as UNETR and Swin UNETR. The proposed method is experimentally shown to outperform these methods on head-and-neck tumor segmentation, thanks to the ability of the CMA module to capture better complementary inter-modality feature representations between PET and CT.
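
To make the idea of cross-modal attention concrete, here is a minimal single-head sketch in which PET tokens query CT tokens and vice versa, and each result is added back to its own stream before the two are concatenated. This is generic cross-attention, not the paper's CMA module; the token counts, the shared projection weights, and the residual-plus-concatenation fusion are assumptions chosen for brevity.

```python
import numpy as np

def softmax(x, axis=-1):
    x = x - x.max(axis=axis, keepdims=True)   # subtract max for numerical stability
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)

def cross_attention(q_tokens, kv_tokens, wq, wk, wv):
    """Single-head attention where one modality's tokens query the other's."""
    q = q_tokens @ wq
    k = kv_tokens @ wk
    v = kv_tokens @ wv
    scores = q @ k.T / np.sqrt(q.shape[-1])
    attn = softmax(scores, axis=-1)            # (N_q, N_kv); each row sums to 1
    return attn @ v, attn

rng = np.random.default_rng(0)
n_tokens, dim = 64, 32                         # e.g. tokens from one window at one resolution (assumed)
pet = rng.standard_normal((n_tokens, dim))
ct = rng.standard_normal((n_tokens, dim))
# Projection weights are shared across both directions here only to keep the sketch short.
wq, wk, wv = (rng.standard_normal((dim, dim)) / np.sqrt(dim) for _ in range(3))

pet_from_ct, attn = cross_attention(pet, ct, wq, wk, wv)   # PET features enriched with CT context
ct_from_pet, _ = cross_attention(ct, pet, wq, wk, wv)      # CT features enriched with PET context
fused = np.concatenate([pet + pet_from_ct, ct + ct_from_pet], axis=-1)
print(fused.shape)  # (64, 64)
```

Applying such a block at several encoder resolutions is one way to read "cross-modal feature extraction at multiple resolutions".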

Deterministic Online Classification: Non-iteratively Reweighted Recursive Least-Squares for Binary Class Rebalancing

Jan 22, 2023

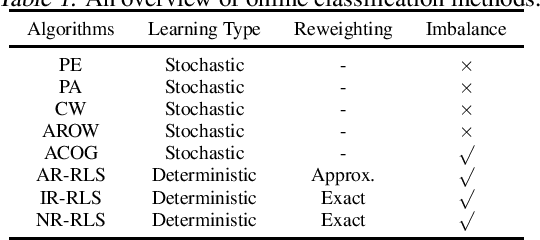

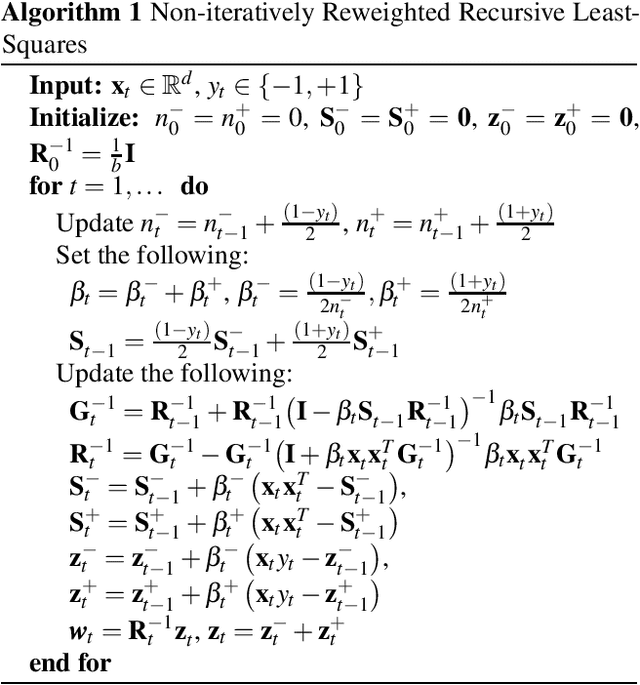

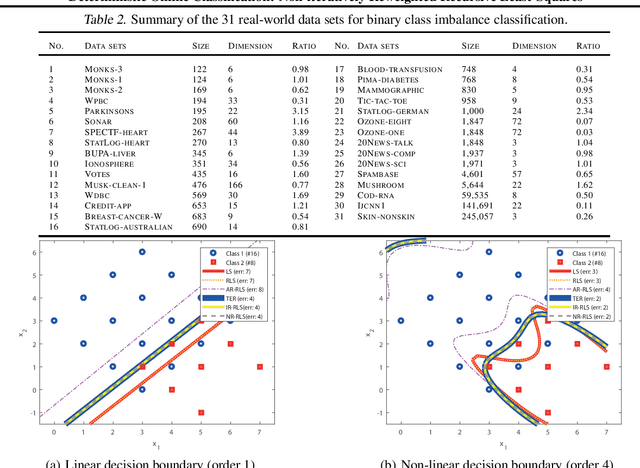

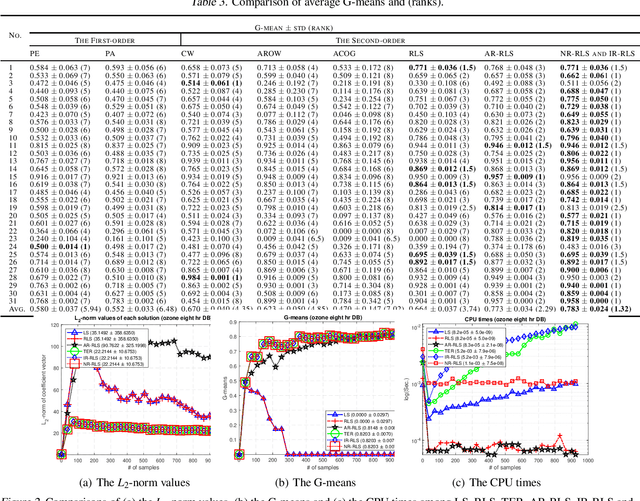

Abstract: Deterministic solutions are becoming more critical for interpretability. Weighted Least-Squares (WLS) has been widely used as a deterministic batch solution with a specific weight design. In the online setting, exact reweighting is necessary for WLS to converge to its batch solution. To meet this requirement, the iteratively reweighted least-squares algorithm is mainly used, but its time complexity grows linearly, which is unattractive for online learning. Because of these high and growing computational costs, an efficient online formulation of reweighted least-squares is desirable. We introduce a new deterministic online classification algorithm based on WLS with constant time complexity for binary class rebalancing. We demonstrate that our proposed online formulation converges exactly to its batch formulation and empirically outperforms existing state-of-the-art stochastic online binary classification algorithms on real-world data sets.
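
The sketch below illustrates why exact online reweighting can have a per-step cost independent of the number of samples seen. It assumes class-balancing weights of 1 / (class count so far) and a small ridge term; because those weights depend only on class counts, keeping per-class sufficient statistics reproduces the batch WLS solution exactly at every step. `BalancedOnlineWLS` and `batch_balanced_wls` are hypothetical names, and this is not necessarily the paper's formulation (which may, for instance, avoid the d-by-d solve).

```python
import numpy as np

class BalancedOnlineWLS:
    """Exact online class-balanced weighted least squares (illustrative sketch).

    Assumption: each sample's weight is 1 / (number of samples of its class so far),
    so both classes contribute equally regardless of imbalance.
    """

    def __init__(self, dim: int, reg: float = 1e-6):
        self.reg = reg
        self.A = {-1: np.zeros((dim, dim)), 1: np.zeros((dim, dim))}  # per-class sum of x x^T
        self.b = {-1: np.zeros(dim), 1: np.zeros(dim)}                # per-class sum of y x
        self.n = {-1: 0, 1: 0}                                         # per-class sample count

    def update(self, x: np.ndarray, y: int) -> None:
        """O(d^2) per sample; old samples are never revisited."""
        self.A[y] += np.outer(x, x)
        self.b[y] += y * x
        self.n[y] += 1

    def weights(self) -> np.ndarray:
        """Rebuild the weighted normal equations from class statistics and solve them."""
        dim = len(self.b[1])
        lhs = self.reg * np.eye(dim)
        rhs = np.zeros(dim)
        for c in (-1, 1):
            if self.n[c] > 0:
                lhs += self.A[c] / self.n[c]
                rhs += self.b[c] / self.n[c]
        return np.linalg.solve(lhs, rhs)   # O(d^3), independent of samples seen

    def predict(self, x: np.ndarray) -> int:
        return 1 if x @ self.weights() >= 0 else -1

def batch_balanced_wls(X, y, reg=1e-6):
    """Reference batch solution using the same inverse-class-frequency weights."""
    w = np.where(y == 1, 1.0 / np.sum(y == 1), 1.0 / np.sum(y == -1))
    lhs = X.T @ (w[:, None] * X) + reg * np.eye(X.shape[1])
    return np.linalg.solve(lhs, X.T @ (w * y))

rng = np.random.default_rng(0)
X = rng.standard_normal((200, 3))
y = np.where(X[:, 0] + 0.5 * rng.standard_normal(200) > 1.0, 1, -1)  # imbalanced labels
model = BalancedOnlineWLS(dim=3)
for xi, yi in zip(X, y):
    model.update(xi, int(yi))
print(np.allclose(model.weights(), batch_balanced_wls(X, y)))
```

Inverse class frequency is only one possible "specific weight design"; any weighting that depends on the label alone can be maintained the same way.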

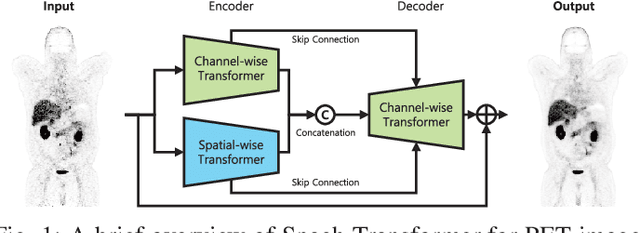

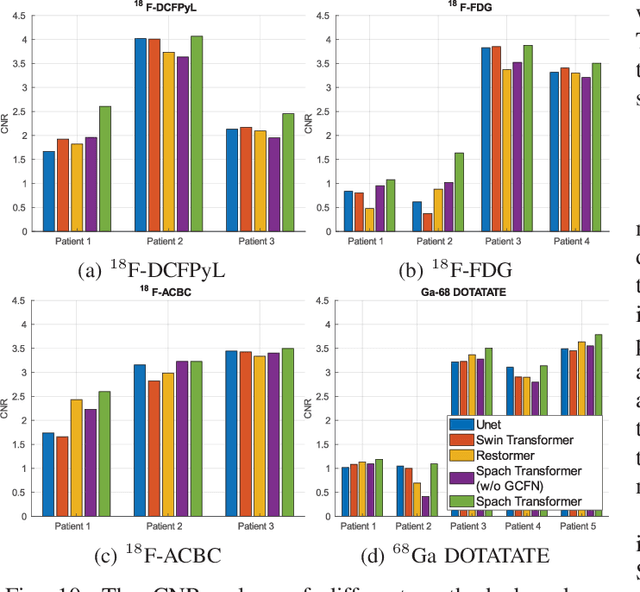

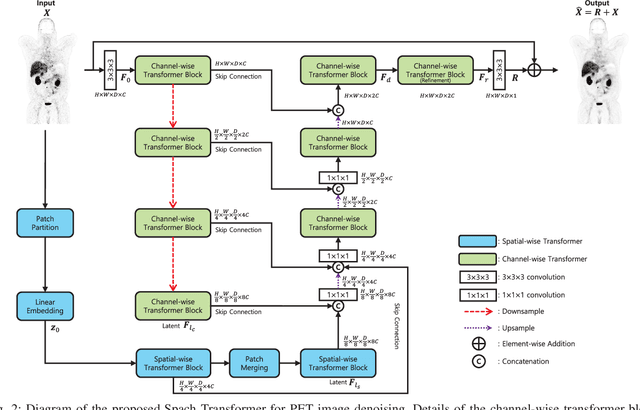

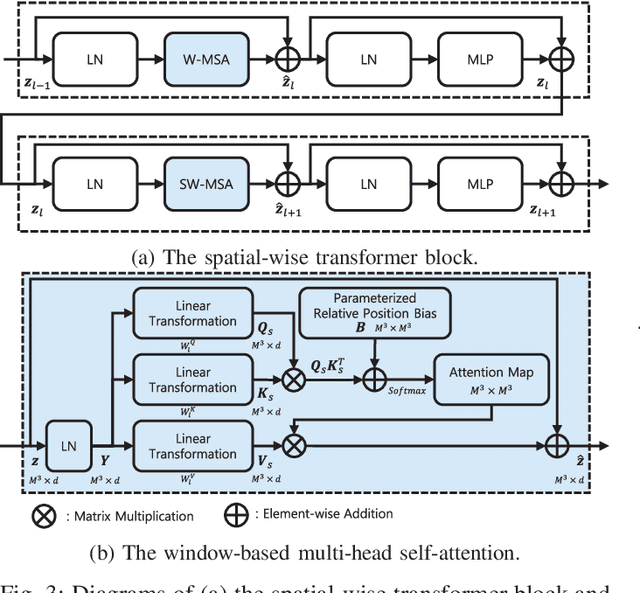

Spach Transformer: Spatial and Channel-wise Transformer Based on Local and Global Self-attentions for PET Image Denoising

Sep 07, 2022

Abstract: Positron emission tomography (PET) is widely used in clinics and research due to its quantitative merits and high sensitivity, but it suffers from a low signal-to-noise ratio (SNR). Recently, convolutional neural networks (CNNs) have been widely used to improve PET image quality. Though successful and efficient at local feature extraction, CNNs cannot capture long-range dependencies well because of their limited receptive field. Global multi-head self-attention (MSA) is a popular approach to capturing long-range information. However, computing global MSA for 3D images has high computational costs. In this work, we propose an efficient spatial and channel-wise encoder-decoder transformer, Spach Transformer, that leverages spatial and channel information based on local and global MSAs. Experiments on datasets of different PET tracers, i.e., $^{18}$F-FDG, $^{18}$F-ACBC, $^{18}$F-DCFPyL, and $^{68}$Ga-DOTATATE, were conducted to evaluate the proposed framework. Quantitative results show that the proposed Spach Transformer achieves better performance than the other reference methods.
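
One common way to keep global attention affordable on 3D volumes is to attend across channels instead of voxels, so the attention map is C x C rather than N x N. The sketch below shows that generic channel-wise self-attention and compares map sizes; the per-channel L2 normalization and all sizes are assumptions, and this is not claimed to be the exact Spach Transformer block.

```python
import numpy as np

def softmax(x, axis=-1):
    x = x - x.max(axis=axis, keepdims=True)   # subtract max for numerical stability
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)

def channel_attention(tokens, wq, wk, wv):
    """Self-attention across channels: the attention map is C x C, not N x N."""
    q, k, v = tokens @ wq, tokens @ wk, tokens @ wv   # each (N, C)
    # L2-normalise each channel over the tokens so scores stay bounded (assumed choice)
    q = q / np.linalg.norm(q, axis=0, keepdims=True)
    k = k / np.linalg.norm(k, axis=0, keepdims=True)
    attn = softmax(q.T @ k, axis=-1)                  # (C, C); each row sums to 1
    return v @ attn.T, attn                           # out[n, i] = sum_j attn[i, j] * v[n, j]

rng = np.random.default_rng(0)
n_voxels, channels = 16 ** 3, 32                      # tokens from a small 3D patch (assumed sizes)
x = rng.standard_normal((n_voxels, channels))
wq, wk, wv = (rng.standard_normal((channels, channels)) / np.sqrt(channels) for _ in range(3))
out, attn = channel_attention(x, wq, wk, wv)
print(out.shape, attn.shape)          # (4096, 32) (32, 32)
print(n_voxels ** 2, channels ** 2)   # attention-map entries: spatial 16777216 vs channel 1024
```

The cost of the channel-wise map grows linearly with the number of voxels, which is why it is typically paired with local (windowed) spatial attention to retain spatial interactions.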

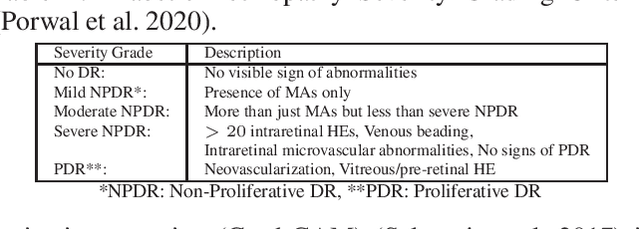

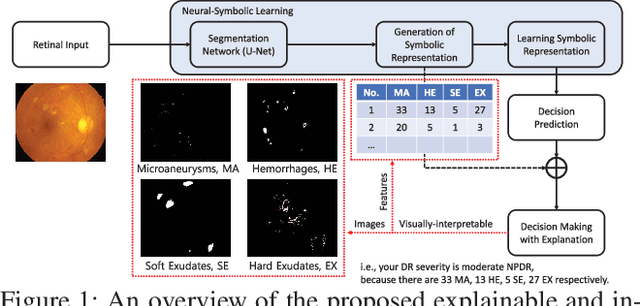

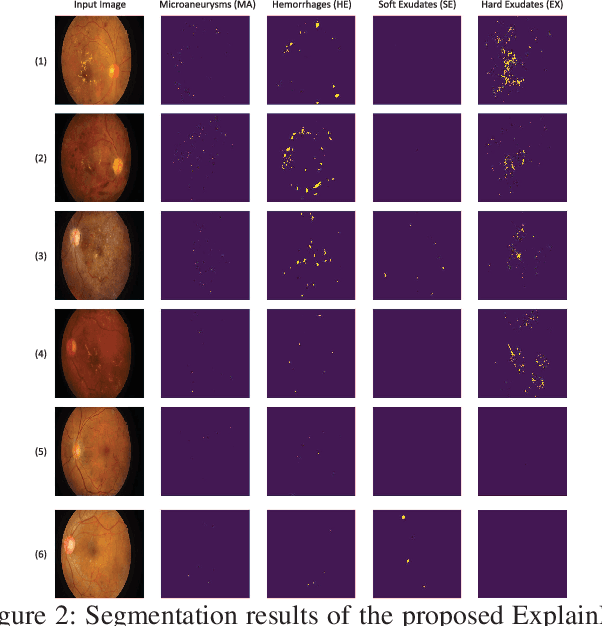

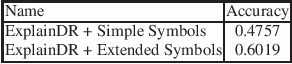

Explainable and Interpretable Diabetic Retinopathy Classification Based on Neural-Symbolic Learning

Apr 01, 2022

Abstract: In this paper, we propose an explainable and interpretable diabetic retinopathy classification model (ExplainDR) based on neural-symbolic learning. To gain explainability, a high-level symbolic representation should be considered in decision making. Specifically, we introduce a human-readable symbolic representation that follows a taxonomy of diabetic retinopathy characteristics related to eye health conditions. We then include human-readable features obtained from this symbolic representation in the disease prediction. Experimental results on the IDRiD diabetic retinopathy classification dataset show that ExplainDR achieves promising performance compared with state-of-the-art methods, while also providing interpretability and explainability.

A Noise-level-aware Framework for PET Image Denoising

Mar 15, 2022

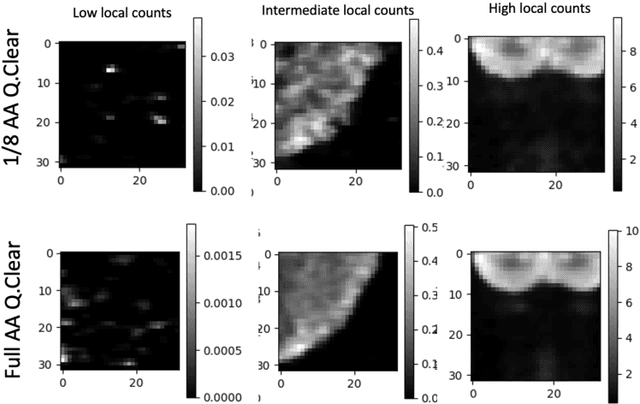

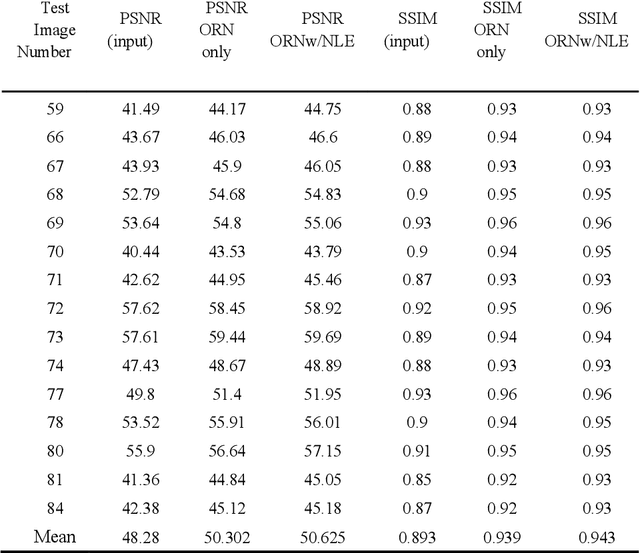

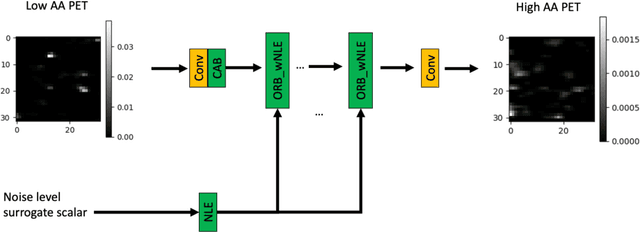

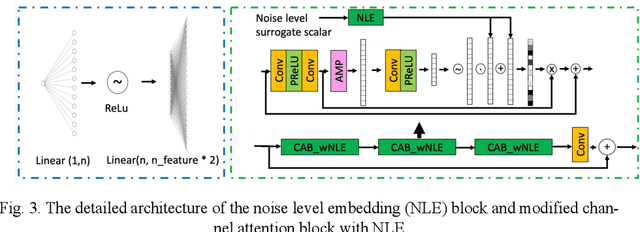

Abstract: In PET, the amount of relative (signal-dependent) noise can differ significantly between body regions and is inherently related to the number of counts in each region. The number of counts in a region depends, in principle and among other factors, on the total administered activity, scanner sensitivity, image acquisition duration, radiopharmaceutical tracer uptake in the region, and the patient's local body morphometry around the region. In theory, less denoising is needed for a high-count (low relative noise) image than for a low-count (high relative noise) image. Current deep-learning-based methods for PET image denoising are predominantly trained on image appearance only and give no special treatment to images of different noise levels. Our hypothesis is that by explicitly providing the local relative noise level of the input image to a deep convolutional neural network (DCNN), the DCNN can outperform the same network trained on image appearance only. To this end, we propose a noise-level-aware denoising framework that allows the local noise level to be embedded into a DCNN. The proposed framework is trained on 30 and tested on 15 patient PET images acquired on a GE Discovery MI PET/CT system. Our experiments showed that the increases in both PSNR and SSIM of our backbone network with relative noise level embedding (NLE) over the same network without NLE were statistically significant (p < 0.001), and that the proposed method significantly outperformed a strong baseline method by a large margin.
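
For Poisson-distributed counts with mean λ, the relative noise σ/μ is 1/√λ, so low-count regions are relatively noisier. The sketch below estimates a local relative-noise map as local standard deviation over local mean in a square window and stacks it with the image as a two-channel network input. The window size, reflect padding, and channel stacking are assumptions for illustration; the paper's actual noise level embedding may be computed and injected differently, and both helper names are hypothetical.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view

def local_relative_noise(image, window=7, eps=1e-8):
    """Local std / local mean over a square window (reflect-padded at the borders)."""
    pad = window // 2
    padded = np.pad(image, pad, mode="reflect")
    patches = sliding_window_view(padded, (window, window))   # (H, W, window, window)
    mean = patches.mean(axis=(-2, -1))
    std = patches.std(axis=(-2, -1))
    return std / (mean + eps)

def with_noise_level_channel(image, window=7):
    """Stack the image and its relative-noise map as a 2-channel network input."""
    return np.stack([image, local_relative_noise(image, window)], axis=0)

rng = np.random.default_rng(0)
expected = np.full((64, 64), 100.0)
expected[:, 32:] = 4.0                              # left half: high count, right half: low count
counts = rng.poisson(expected).astype(float)
x = with_noise_level_channel(counts)
left = x[1, 8:56, 4:28].mean()                      # expect roughly 1/sqrt(100) = 0.1
right = x[1, 8:56, 36:60].mean()                    # expect roughly 1/sqrt(4) = 0.5
print(x.shape, round(left, 2), round(right, 2))
```

The interior crops avoid windows that straddle the boundary between the two count levels, where the std/mean estimate would mix both regions.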

Online Passive-Aggressive Total-Error-Rate Minimization

Feb 05, 2020

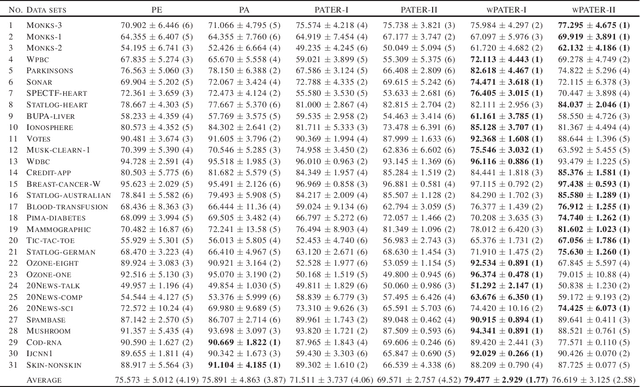

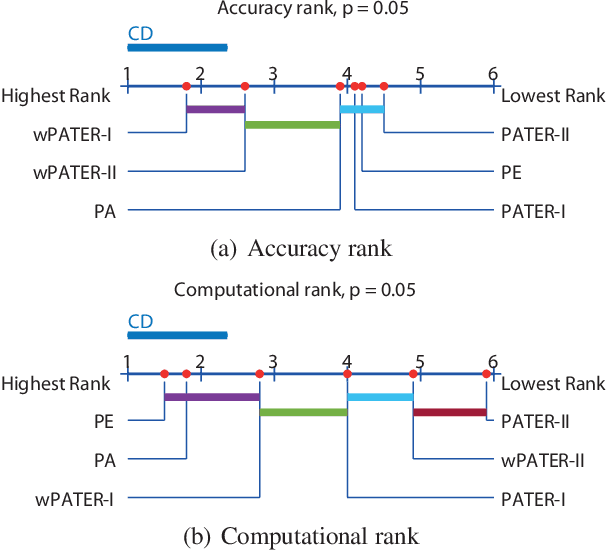

Abstract: We provide a new online learning algorithm for binary classification that uses online passive-aggressive (PA) learning and total-error-rate (TER) minimization. PA learning provides not only large-margin training but also the capacity to handle non-separable data. TER learning, on the other hand, minimizes an objective function based on an approximated classification error. We propose an online PATER algorithm that combines these useful properties. In addition, we present a weighted PATER algorithm to better cope with data imbalance. Experimental results demonstrate that the proposed PATER algorithms achieve better efficiency and effectiveness than existing state-of-the-art online learning algorithms on real-world data sets.
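
For reference, the PA component can be illustrated with the standard PA-I update of Crammer et al. (2006): stay passive when the hinge loss is zero, otherwise move just enough to reach unit margin, with the step capped by C so non-separable data cannot force arbitrarily large updates. The sketch covers only that PA ingredient, not the TER objective or the combined PATER algorithm; the class-weighted variant with separate caps is a hypothetical illustration of imbalance handling.

```python
import numpy as np

def pa1_update(w, x, y, c=1.0):
    """One PA-I step for a label y in {-1, +1}."""
    loss = max(0.0, 1.0 - y * float(w @ x))   # hinge loss on the current example
    if loss == 0.0:
        return w                               # passive: margin already >= 1
    tau = min(c, loss / float(x @ x))          # aggressive step, capped by C
    return w + tau * y * x

def weighted_pa1_update(w, x, y, c_pos=1.0, c_neg=1.0):
    """Hypothetical class-weighted variant: a larger cap for the minority class."""
    return pa1_update(w, x, y, c_pos if y == 1 else c_neg)

w = np.zeros(2)
x = np.array([1.0, 2.0])
w = pa1_update(w, x, y=1, c=10.0)   # loss 1, step 1/5, so w becomes 0.2 * x
print(w, float(w @ x))              # the margin y * (w @ x) is now 1
```

When the cap C is not binding, the update lands exactly on the unit-margin boundary; when it is, the step is truncated, which is what gives PA-I its tolerance to noisy, non-separable data.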

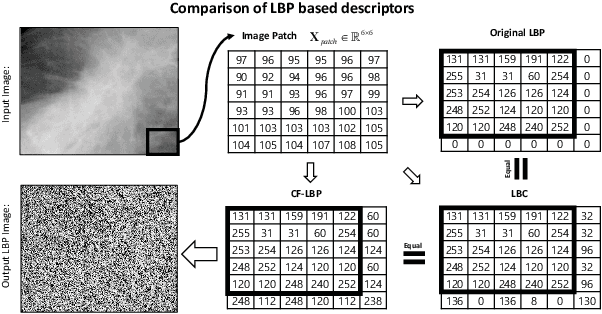

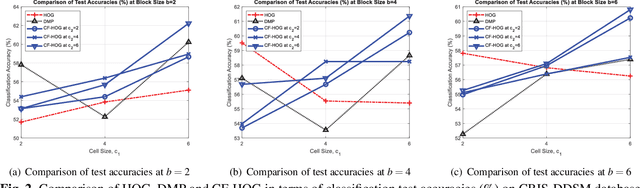

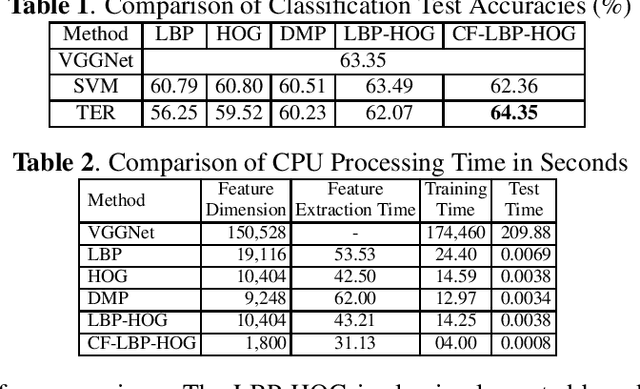

A Convolution-Free LBP-HOG Descriptor For Mammogram Classification

Mar 30, 2019

Abstract: In image-based feature descriptor design, an iterative scanning process using the convolution operation is often adopted to extract local information from image pixels. In this paper, we propose convolution-free Local Binary Pattern (CF-LBP) and convolution-free Histogram of Oriented Gradients (CF-HOG) descriptors in matrix form for mammogram classification. An integrated form of CF-LBP and CF-HOG, CF-LBP-HOG, is subsequently constructed in a single matrix formulation. The proposed descriptors are evaluated on a publicly available mammogram database. The results show promising performance in terms of classification accuracy and computational efficiency.
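
One way to picture "matrix form" without a scanning window is to compare the whole interior of the image against shifted copies of itself at once. The sketch below computes 8-neighbour LBP codes this way, builds a magnitude-weighted gradient-orientation histogram from shifted differences, and concatenates the two normalized histograms. This is an assumed interpretation for illustration, not the paper's CF-LBP-HOG formulation; it omits HOG's cell and block structure, and all function names are hypothetical.

```python
import numpy as np

# 8 neighbour offsets (row, col), clockwise from top-left; neighbour k sets bit k
OFFSETS = [(-1, -1), (-1, 0), (-1, 1), (0, 1), (1, 1), (1, 0), (1, -1), (0, -1)]

def lbp_codes(img):
    """LBP codes for all interior pixels at once, via shifted sub-matrices."""
    h, w = img.shape
    center = img[1:h - 1, 1:w - 1]
    codes = np.zeros(center.shape, dtype=np.int64)
    for k, (dr, dc) in enumerate(OFFSETS):
        neighbour = img[1 + dr:h - 1 + dr, 1 + dc:w - 1 + dc]
        codes |= (neighbour >= center).astype(np.int64) << k
    return codes

def gradient_histogram(img, bins=9):
    """Magnitude-weighted histogram of unsigned gradient orientations (central differences)."""
    gx = img[1:-1, 2:] - img[1:-1, :-2]
    gy = img[2:, 1:-1] - img[:-2, 1:-1]
    mag = np.hypot(gx, gy)
    ang = np.mod(np.arctan2(gy, gx), np.pi)   # fold orientations into [0, pi)
    hist, _ = np.histogram(ang, bins=bins, range=(0.0, np.pi), weights=mag)
    return hist

def lbp_hog_descriptor(img, bins=9):
    """Concatenate a normalised 256-bin LBP histogram and a normalised orientation histogram."""
    img = img.astype(float)
    lbp_hist = np.bincount(lbp_codes(img).ravel(), minlength=256).astype(float)
    hog_hist = gradient_histogram(img, bins)
    lbp_hist /= lbp_hist.sum() + 1e-12
    hog_hist /= hog_hist.sum() + 1e-12
    return np.concatenate([lbp_hist, hog_hist])

rng = np.random.default_rng(0)
patch = rng.integers(0, 256, size=(64, 64))   # stand-in for a mammogram region of interest
feat = lbp_hog_descriptor(patch)
print(feat.shape)  # (265,)
```

Each loop iteration is a single whole-array comparison, so the work is eight matrix operations regardless of image size rather than a per-pixel window scan.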
