Satoshi Ikehata

High-Fidelity 3D Tooth Reconstruction by Fusing Intraoral Scans and CBCT Data via a Deep Implicit Representation

Jan 21, 2026

Abstract:High-fidelity 3D tooth models are essential for digital dentistry, but must capture both the detailed crown and the complete root. Clinical imaging modalities are limited: Cone-Beam Computed Tomography (CBCT) captures the root but has a noisy, low-resolution crown, while Intraoral Scanners (IOS) provide a high-fidelity crown but no root information. A naive fusion of these sources results in unnatural seams and artifacts. We propose a novel, fully automated pipeline that fuses CBCT and IOS data using a deep implicit representation. Our method first segments and robustly registers the tooth instances, then creates a hybrid proxy mesh combining the IOS crown and the CBCT root. The core of our approach is to use this noisy proxy to guide a class-specific DeepSDF network. This optimization process projects the input onto a learned manifold of ideal tooth shapes, generating a seamless, watertight, and anatomically coherent model. Qualitative and quantitative evaluations show our method uniquely preserves both the high-fidelity crown from IOS and the patient-specific root morphology from CBCT, overcoming the limitations of each modality and naive stitching.
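
The step of "using a noisy proxy to guide a DeepSDF network" amounts to optimizing a latent code while the trained decoder stays frozen. The toy below is a minimal sketch of that idea under loud assumptions: the "decoder" is a hypothetical one-parameter sphere SDF, f(x; z) = ||x|| - (R0 + z), standing in for a trained class-specific DeepSDF network; the hybrid proxy is replaced by noisy point/SDF samples of a sphere; and plain squared error with an L2 latent prior replaces DeepSDF's clamped L1 loss.

```python
import math
import random

R0 = 1.0  # hypothetical "mean shape" radius baked into the frozen toy decoder


def decoder_sdf(point, z):
    """Toy stand-in (hypothetical) for a frozen DeepSDF decoder: a sphere of radius R0 + z."""
    return math.sqrt(sum(c * c for c in point)) - (R0 + z)


def fit_latent(samples, lr=0.1, reg=1e-3, steps=200):
    """Optimize only the latent code z so the frozen decoder matches proxy SDF samples.

    Loss = mean((f(x; z) - s)^2) + reg * z^2 (a simplification of DeepSDF's clamped L1 loss).
    """
    z = 0.0
    n = len(samples)
    for _ in range(steps):
        # For this toy decoder d f / d z = -1, so the gradient is analytic.
        grad = sum(-2.0 * (decoder_sdf(p, z) - s) for p, s in samples) / n
        grad += 2.0 * reg * z  # latent prior keeps z close to the mean shape
        z -= lr * grad
    return z


def make_proxy_samples(true_radius, n=500, noise=0.02, seed=0):
    """Noisy SDF samples near a sphere, standing in for samples drawn from the hybrid proxy."""
    rng = random.Random(seed)
    samples = []
    for _ in range(n):
        v = [rng.gauss(0.0, 1.0) for _ in range(3)]
        norm = math.sqrt(sum(c * c for c in v)) or 1.0
        d = true_radius + rng.uniform(-0.1, 0.1)  # distance from the origin
        point = [d * c / norm for c in v]
        sdf = d - true_radius + rng.gauss(0.0, noise)  # noisy signed distance
        samples.append((point, sdf))
    return samples


if __name__ == "__main__":
    z = fit_latent(make_proxy_samples(true_radius=1.2))
    print(f"fitted latent z = {z:.3f}, implied radius = {R0 + z:.3f}")
```

Because the decoder can only express shapes in its learned family (here, spheres), noise in the proxy is averaged away rather than reproduced, which is the intuition behind "projecting the input onto a learned manifold".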

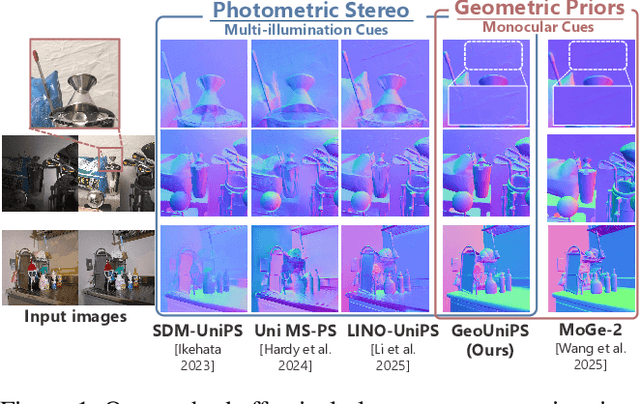

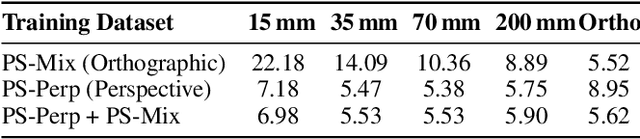

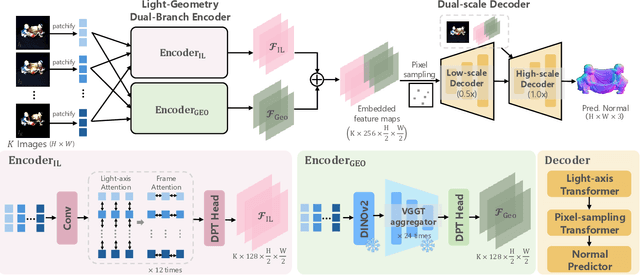

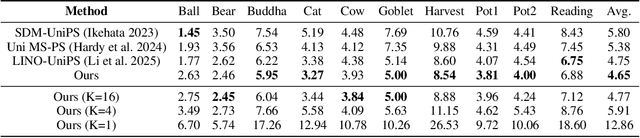

Geometry Meets Light: Leveraging Geometric Priors for Universal Photometric Stereo under Limited Multi-Illumination Cues

Nov 18, 2025

Abstract:Universal Photometric Stereo is a promising approach for recovering surface normals without strict lighting assumptions. However, it struggles when multi-illumination cues are unreliable, such as under biased lighting or in shadowed or self-occluded regions of complex in-the-wild scenes. We propose GeoUniPS, a universal photometric stereo network that integrates synthetic supervision with high-level geometric priors from large-scale 3D reconstruction models pretrained on massive in-the-wild data. Our key insight is that these 3D reconstruction models serve as visual-geometry foundation models, inherently encoding rich geometric knowledge of real scenes. To leverage this, we design a Light-Geometry Dual-Branch Encoder that extracts both multi-illumination cues and geometric priors from the frozen 3D reconstruction model. We also address the limitations of the conventional orthographic projection assumption by introducing the PS-Perp dataset with realistic perspective projection to enable learning of spatially varying view directions. Extensive experiments demonstrate that GeoUniPS delivers state-of-the-art performance across multiple datasets, both quantitatively and qualitatively, especially in complex in-the-wild scenes.
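
Why perspective projection yields "spatially varying view directions" can be seen by back-projecting pixels through a pinhole camera. This is a minimal sketch; the intrinsics (fx, fy, cx, cy) and image size are hypothetical values, not those of the PS-Perp dataset.

```python
import math


def view_directions(width, height, fx, fy, cx, cy):
    """Unit ray direction through each pixel of a pinhole (perspective) camera.

    Camera coordinates: the camera looks along +z. Pixel (u, v) back-projects to
    ((u - cx) / fx, (v - cy) / fy, 1), normalised here. Under the orthographic
    assumption every pixel would instead share the single direction (0, 0, 1).
    """
    dirs = []
    for v in range(height):
        row = []
        for u in range(width):
            x = (u - cx) / fx
            y = (v - cy) / fy
            norm = math.sqrt(x * x + y * y + 1.0)
            row.append((x / norm, y / norm, 1.0 / norm))
        dirs.append(row)
    return dirs


def angle_from_optical_axis(direction):
    """Angle in degrees between a pixel ray and the orthographic direction (0, 0, 1)."""
    return math.degrees(math.acos(direction[2]))


if __name__ == "__main__":
    dirs = view_directions(640, 480, fx=500.0, fy=500.0, cx=319.5, cy=239.5)
    print("corner:", angle_from_optical_axis(dirs[0][0]))
    print("centre:", angle_from_optical_axis(dirs[240][320]))
```

With these hypothetical intrinsics, the corner ray deviates from the orthographic direction by about 38.6 degrees while rays near the image centre are nearly parallel to it, so an orthographic model misstates the view direction most strongly toward the image borders.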

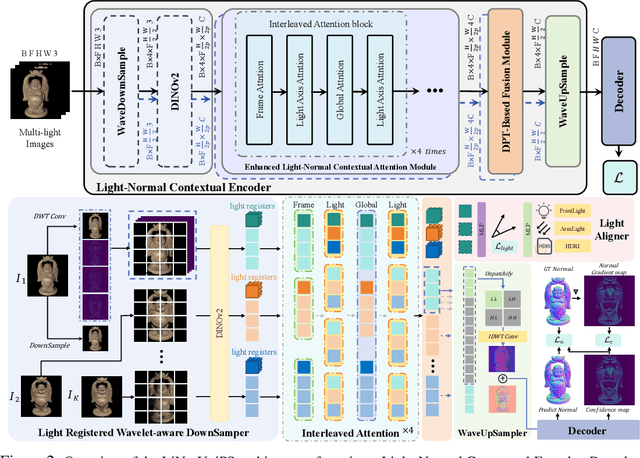

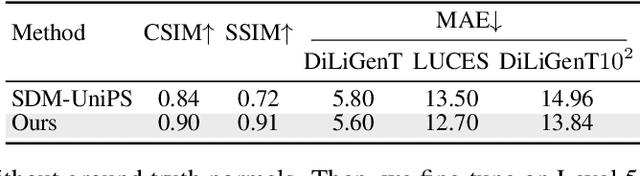

Light of Normals: Unified Feature Representation for Universal Photometric Stereo

Jun 24, 2025

Abstract:Universal photometric stereo (PS) aims to recover high-quality surface normals from objects under arbitrary lighting conditions without relying on specific illumination models. Despite recent advances such as SDM-UniPS and Uni MS-PS, two fundamental challenges persist: 1) the deep coupling between varying illumination and surface normal features, where ambiguity in observed intensity makes it difficult to determine whether brightness variations stem from lighting changes or surface orientation; and 2) the preservation of high-frequency geometric details in complex surfaces, where intricate geometries create self-shadowing, inter-reflections, and subtle normal variations that conventional feature processing operations struggle to capture accurately.
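
The first challenge (coupling between illumination and normal features) can be made concrete with a Lambertian model, I = albedo * max(0, n . l). When the lights are unknown, different scenes can produce identical intensities. The sketch below, which assumes a simple Lambertian surface with attached shadows only, shows two such ambiguities: rotating the normal and all lights together, and trading albedo against light intensity.

```python
import math


def lambertian(albedo, normal, light):
    """Lambertian intensity with attached shadows: albedo * max(0, n . l).

    `light` is a unit direction scaled by the light's intensity.
    """
    return albedo * max(0.0, sum(a * b for a, b in zip(normal, light)))


def rotate_z(vec, angle):
    """Rotate a 3-vector about the z-axis by `angle` radians."""
    c, s = math.cos(angle), math.sin(angle)
    x, y, z = vec
    return (c * x - s * y, s * x + c * y, z)


normal = (0.0, 0.6, 0.8)
lights = [(0.0, 0.0, 1.0), (0.6, 0.0, 0.8), (0.0, 0.6, 0.8)]

# Scene A: the original normal and lights.
obs_a = [lambertian(0.5, normal, l) for l in lights]

# Scene B: a different normal, with every light rotated by the same 30 degrees about z.
theta = math.radians(30.0)
obs_b = [lambertian(0.5, rotate_z(normal, theta), rotate_z(l, theta)) for l in lights]

# Scene C: albedo doubled, light intensities halved.
obs_c = [lambertian(1.0, normal, tuple(0.5 * c for c in l)) for l in lights]

print(obs_a)
print(obs_b)
print(obs_c)
```

All three observation vectors agree, yet scene B has a different surface normal, so intensities alone cannot tell whether a brightness change comes from the lighting or from the surface orientation.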

Rectified Lagrangian for Out-of-Distribution Detection in Modern Hopfield Networks

Feb 19, 2025

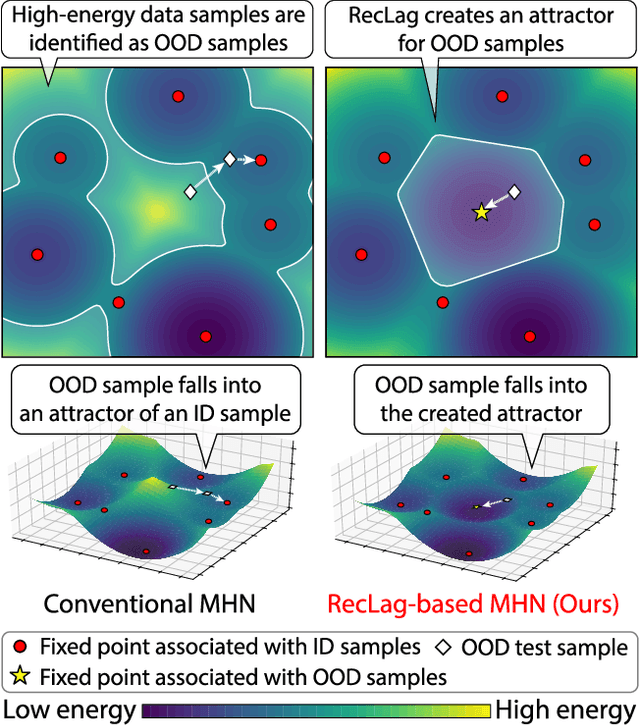

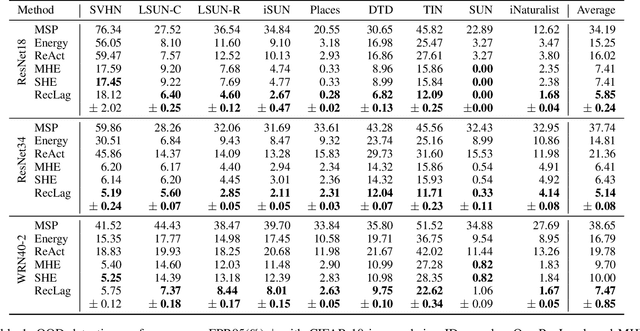

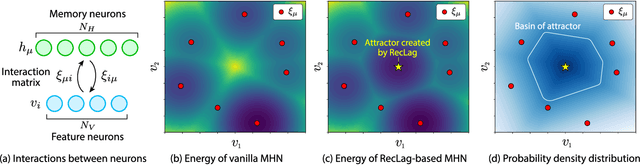

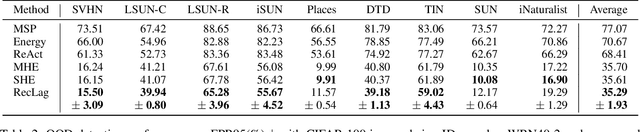

Abstract:Modern Hopfield networks (MHNs) have recently gained significant attention in the field of artificial intelligence because they can store and retrieve a large set of patterns with an exponentially large memory capacity. An MHN is generally a dynamical system defined with Lagrangians of memory and feature neurons, where memories associated with in-distribution (ID) samples are represented by attractors in the feature space. One major problem in existing MHNs lies in managing out-of-distribution (OOD) samples, because it was originally assumed that all samples are ID samples. To address this, we propose the rectified Lagrangian (RecLag), a new Lagrangian for memory neurons that explicitly incorporates an attractor for OOD samples in the dynamical system of MHNs. RecLag creates a trivial point attractor for any interaction matrix, enabling OOD detection by identifying samples that fall into this attractor as OOD. The interaction matrix is optimized so that probability densities can be estimated to distinguish ID from OOD samples. We demonstrate the effectiveness of RecLag-based MHNs compared to energy-based OOD detection methods, including those using state-of-the-art Hopfield energies, across nine image datasets.
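
The idea of "a trivial point attractor for any interaction matrix" can be illustrated with a toy retrieval rule. The abstract does not give RecLag's exact form, so the update below is a hypothetical stand-in: memory activations are rectified as max(0, h - theta) and normalised, which makes the origin a fixed point regardless of which patterns are stored. Queries whose dynamics end at the origin are flagged as OOD.

```python
def rectified_retrieval(patterns, query, theta=0.5, steps=10):
    """Iterate a hypothetical rectified Hopfield-style update (not RecLag's actual Lagrangian).

    patterns: stored unit-norm memories.
    Each step computes overlaps h_k = <x_k, xi>, rectifies them as a_k = max(0, h_k - theta),
    and moves xi to the a-weighted average of the patterns. If every overlap is below theta,
    all a_k are zero and xi jumps to the origin, which then stays fixed: a point attractor
    that exists for any set of stored patterns.
    """
    xi = list(query)
    dim = len(xi)
    for _ in range(steps):
        acts = [max(0.0, sum(p[i] * xi[i] for i in range(dim)) - theta) for p in patterns]
        total = sum(acts)
        if total == 0.0:
            xi = [0.0] * dim  # fell into the trivial attractor
        else:
            xi = [sum(a * p[i] for a, p in zip(acts, patterns)) / total for i in range(dim)]
    return xi


def is_ood(patterns, query, theta=0.5, steps=10):
    """Flag a query as OOD if the dynamics settle at the origin attractor."""
    final = rectified_retrieval(patterns, query, theta, steps)
    return all(abs(c) < 1e-12 for c in final)


if __name__ == "__main__":
    memories = [(1.0, 0.0, 0.0), (0.0, 1.0, 0.0), (0.0, 0.0, 1.0)]
    print(is_ood(memories, (0.9, 0.1, 0.0)))  # close to a stored pattern
    print(is_ood(memories, (0.3, 0.3, 0.3)))  # weakly similar to every pattern
```

The threshold theta plays the role of a hypothetical decision margin here; the paper instead optimizes the interaction matrix so that densities separate ID from OOD samples.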

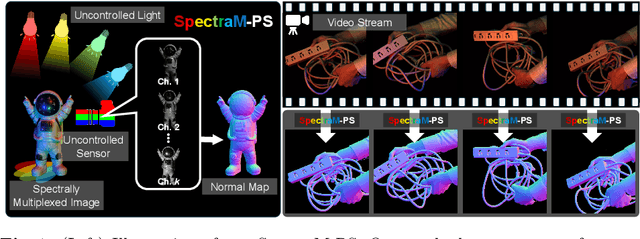

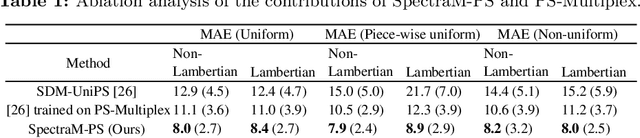

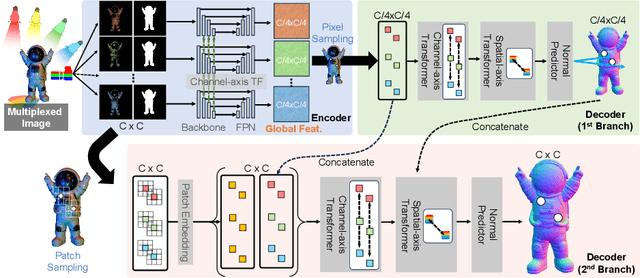

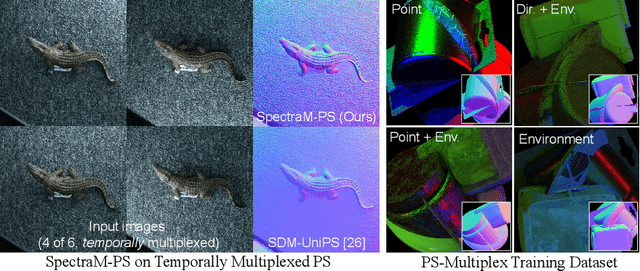

Physics-Free Spectrally Multiplexed Photometric Stereo under Unknown Spectral Composition

Oct 28, 2024

Abstract:In this paper, we present a groundbreaking spectrally multiplexed photometric stereo approach for recovering surface normals of dynamic surfaces without the need for calibrated lighting or sensors, a notable advancement in a field traditionally hindered by stringent prerequisites and spectral ambiguity. By embracing spectral ambiguity as an advantage, our technique enables the generation of training data without specialized multispectral rendering frameworks. We introduce a unique, physics-free network architecture, SpectraM-PS, that effectively processes multiplexed images to determine surface normals across a wide range of conditions and material types, without relying on specific physically-based knowledge. Additionally, we establish the first benchmark dataset, SpectraM14, for spectrally multiplexed photometric stereo, facilitating comprehensive evaluations against existing calibrated methods. Our contributions significantly enhance the capabilities for dynamic surface recovery, particularly in uncalibrated setups, marking a pivotal step forward in the application of photometric stereo across various domains.
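
One way to read "training data without specialized multispectral rendering" is that per-light renderings can simply be mixed by an unknown spectral response. The sketch below assumes that reading; the Lambertian shading, three simultaneous lights, three camera channels, and the random nonnegative mixing matrix M (entry M[c][k] being channel c's response to light k) are all illustrative assumptions, not the paper's pipeline.

```python
import random


def lambertian(albedo, normal, light):
    """Grayscale Lambertian shading of one pixel under one directional light."""
    return albedo * max(0.0, sum(a * b for a, b in zip(normal, light)))


def random_mixing_matrix(channels, lights, rng):
    """Hypothetical random nonnegative channel-by-light response (unknown spectral composition)."""
    return [[rng.uniform(0.0, 1.0) for _ in range(lights)] for _ in range(channels)]


def multiplexed_pixel(albedo, normal, lights, mixing):
    """One multiplexed observation: channel c records sum_k M[c][k] * shading under light k."""
    shading = [lambertian(albedo, normal, l) for l in lights]
    return [sum(m * s for m, s in zip(row, shading)) for row in mixing]


if __name__ == "__main__":
    rng = random.Random(0)
    mixing = random_mixing_matrix(3, 3, rng)
    lights = [(0.0, 0.0, 1.0), (0.6, 0.0, 0.8), (0.0, 0.6, 0.8)]
    print(multiplexed_pixel(0.5, (0.0, 0.6, 0.8), lights, mixing))
```

Drawing a fresh mixing matrix per training sample is one way to expose a network to many unknown spectral compositions using only ordinary single-channel renderings.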

Gumbel-NeRF: Representing Unseen Objects as Part-Compositional Neural Radiance Fields

Oct 27, 2024

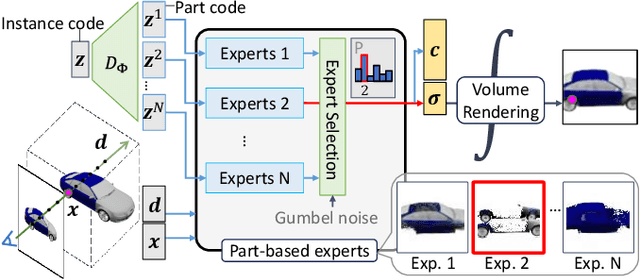

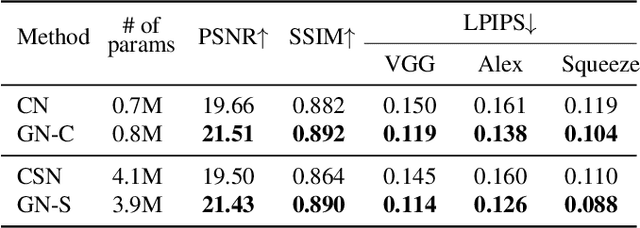

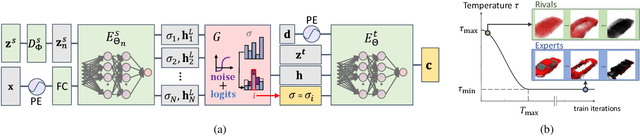

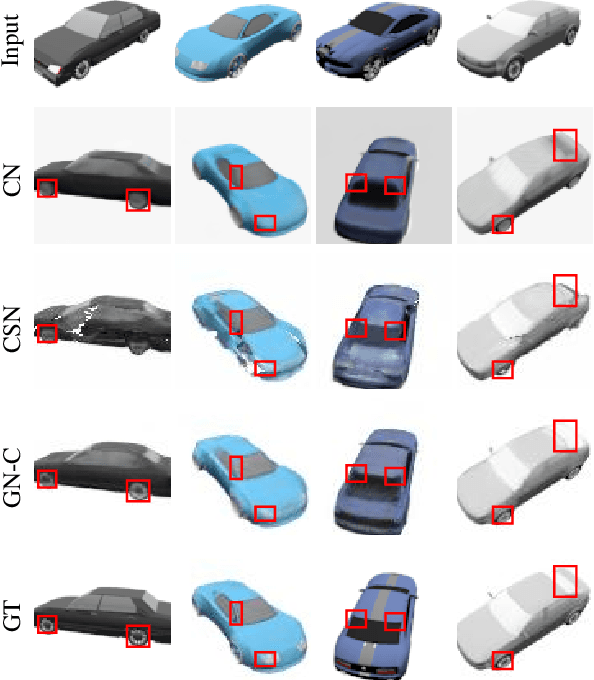

Abstract:We propose Gumbel-NeRF, a mixture-of-experts (MoE) neural radiance field (NeRF) model with a hindsight expert selection mechanism for synthesizing novel views of unseen objects. Previous studies have shown that the MoE structure provides high-quality representations of a given large-scale scene consisting of many objects. However, we observe that such an MoE NeRF model often produces low-quality representations in the vicinity of experts' boundaries when applied to novel view synthesis of an unseen object from one- or few-shot input. We find that this deterioration is primarily caused by the foresight expert selection mechanism, which may leave an unnatural discontinuity in the object shape near the experts' boundaries. Gumbel-NeRF adopts a hindsight expert selection mechanism, which guarantees continuity in the density field even near the experts' boundaries. Experiments using the SRN cars dataset demonstrate the superiority of Gumbel-NeRF over the baselines in terms of various image quality metrics.
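
The continuity argument can be illustrated in one dimension. In this sketch two hypothetical experts each predict a smooth density along a coordinate t. Foresight selection assigns t to an expert before evaluating anything (a hard boundary at t = 0.5 stands in for a learned router), so the density jumps where the assignment switches. The hindsight rule assumed here evaluates every expert first and keeps the largest density; since a pointwise maximum of continuous functions is continuous, no jump can appear. Treating hindsight selection as max-density selection is an assumption of this sketch, and the Gumbel-based training of the actual model is omitted.

```python
import math


def expert_a(t):
    """Hypothetical expert: a smooth density bump centred at t = 0.3."""
    return math.exp(-((t - 0.3) ** 2) / 0.02)


def expert_b(t):
    """Hypothetical expert: a smooth density bump centred at t = 0.7."""
    return 0.8 * math.exp(-((t - 0.7) ** 2) / 0.02)


def foresight_density(t, boundary=0.5):
    """Route by position first, then evaluate only the chosen expert."""
    return expert_a(t) if t < boundary else expert_b(t)


def hindsight_density(t):
    """Evaluate every expert, then keep the highest density."""
    return max(expert_a(t), expert_b(t))


if __name__ == "__main__":
    eps = 1e-6
    for name, f in [("foresight", foresight_density), ("hindsight", hindsight_density)]:
        jump = abs(f(0.5 + eps) - f(0.5 - eps))
        print(f"{name}: jump across t = 0.5 is {jump:.6f}")
```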

MERLiN: Single-Shot Material Estimation and Relighting for Photometric Stereo

Sep 01, 2024

Abstract:Photometric stereo typically demands intricate data acquisition setups involving multiple light sources to recover surface normals accurately. In this paper, we propose MERLiN, an attention-based hourglass network that integrates single image-based inverse rendering and relighting within a unified framework. We evaluate the performance of photometric stereo methods using these relit images and demonstrate how they can circumvent the underlying challenge of complex data acquisition. Our physically-based model is trained on a large synthetic dataset containing complex shapes with spatially varying BRDF and is designed to handle indirect illumination effects to improve material reconstruction and relighting. Through extensive qualitative and quantitative evaluation, we demonstrate that the proposed framework generalizes well to real-world images, achieving high-quality shape and material estimation and relighting. We assess these synthetically relit images with benchmark photometric stereo methods, evaluating their physical correctness and the resulting normal estimation accuracy, paving the way towards single-shot photometric stereo through physically-based relighting. This work allows us to address the single image-based inverse rendering problem holistically, applying to both synthetic and real data and taking a step towards mitigating the challenge of data acquisition in photometric stereo.
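
How relit images can feed a conventional photometric stereo solver can be sketched under strong simplifications: the pixel's normal and albedo are taken as given (in place of the network's estimates), relighting is Lambertian rather than using a spatially varying BRDF, and the solver is classical calibrated Lambertian photometric stereo with exactly three known, non-coplanar lights, which solves L g = I for g = albedo * normal.

```python
import math


def relight(albedo, normal, light):
    """Render one pixel under a new directional light (Lambertian simplification)."""
    return albedo * max(0.0, sum(a * b for a, b in zip(normal, light)))


def det3(m):
    """Determinant of a 3x3 matrix given as a list of rows."""
    return (m[0][0] * (m[1][1] * m[2][2] - m[1][2] * m[2][1])
            - m[0][1] * (m[1][0] * m[2][2] - m[1][2] * m[2][0])
            + m[0][2] * (m[1][0] * m[2][1] - m[1][1] * m[2][0]))


def solve3(m, b):
    """Solve the 3x3 linear system m x = b with Cramer's rule."""
    d = det3(m)
    if abs(d) < 1e-12:
        raise ValueError("light directions must be non-coplanar")
    x = []
    for col in range(3):
        mc = [list(row) for row in m]
        for r in range(3):
            mc[r][col] = b[r]
        x.append(det3(mc) / d)
    return x


def lambertian_ps(lights, intensities):
    """Calibrated Lambertian PS for one pixel: I_i = l_i . g with g = albedo * n."""
    g = solve3(lights, intensities)
    albedo = math.sqrt(sum(c * c for c in g))
    return albedo, [c / albedo for c in g]


if __name__ == "__main__":
    lights = [(0.0, 0.0, 1.0), (0.6, 0.0, 0.8), (0.0, 0.6, 0.8)]
    normal = (0.36, 0.48, 0.8)  # unit normal, assumed known for this illustration
    relit = [relight(0.7, normal, l) for l in lights]
    print(lambertian_ps(lights, relit))
```

If the relighting is physically consistent, the solver recovers the same normal and albedo that produced the relit images; errors in relighting show up directly as normal errors, which is why relit images can be scored by downstream normal accuracy.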

Entity-NeRF: Detecting and Removing Moving Entities in Urban Scenes

Mar 24, 2024

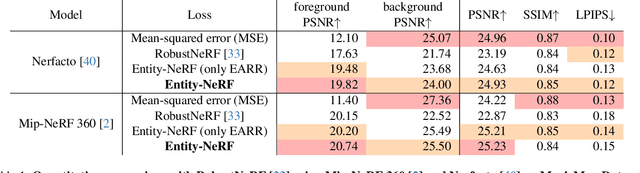

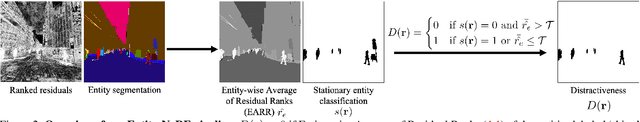

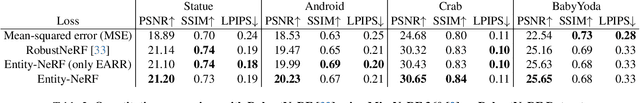

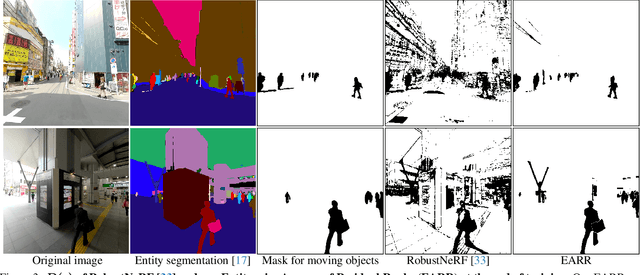

Abstract:Recent advancements in the study of Neural Radiance Fields (NeRF) for dynamic scenes often involve explicit modeling of scene dynamics. However, this approach struggles to model scene dynamics in urban environments, where moving objects of various categories and scales are present. In such settings, it becomes crucial to effectively eliminate moving objects to accurately reconstruct static backgrounds. Our research introduces an innovative method, termed Entity-NeRF, which combines the strengths of knowledge-based and statistical strategies. This approach utilizes entity-wise statistics, leveraging entity segmentation and stationary entity classification through thing/stuff segmentation. To assess our methodology, we created an urban scene dataset masked with moving objects. Our comprehensive experiments demonstrate that Entity-NeRF notably outperforms existing techniques in removing moving objects and reconstructing static urban backgrounds, both quantitatively and qualitatively.
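
A minimal sketch of an entity-wise decision rule, under assumptions of our own: each pixel carries a reconstruction residual from a NeRF fit and an entity id from entity segmentation; "stuff" entities (e.g. road, building) are treated as stationary; and a "thing" entity is flagged as moving when its mean residual exceeds a hypothetical threshold. The paper's actual statistics and thresholds may differ.

```python
from collections import defaultdict


def moving_entities(residuals, entity_ids, is_stuff, threshold):
    """Return the set of entity ids judged to be moving.

    residuals:  per-pixel reconstruction errors (flattened list)
    entity_ids: per-pixel entity ids (same length as residuals)
    is_stuff:   dict mapping entity id -> True for stuff entities (assumed stationary)
    threshold:  hypothetical cut-off on an entity's mean residual
    """
    sums = defaultdict(float)
    counts = defaultdict(int)
    for r, e in zip(residuals, entity_ids):
        sums[e] += r
        counts[e] += 1
    moving = set()
    for e in sums:
        if is_stuff.get(e, False):
            continue  # stuff classes are kept as static background
        if sums[e] / counts[e] > threshold:
            moving.add(e)
    return moving


def static_mask(entity_ids, moving):
    """Per-pixel mask: True where the pixel belongs to a static entity."""
    return [e not in moving for e in entity_ids]


if __name__ == "__main__":
    residuals = [0.4, 0.02, 0.5, 0.6, 0.55, 0.02, 0.03, 0.25]
    ids = [0, 0, 1, 1, 1, 2, 2, 2]
    moving = moving_entities(residuals, ids, {0: True}, threshold=0.2)
    print(moving, static_mask(ids, moving))
```

Deciding per entity rather than per pixel means that a single high-residual pixel (entity 2 in the example) does not cause part of a static object to be masked out, while an entity whose pixels are consistently poorly explained (entity 1) is removed as a whole.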

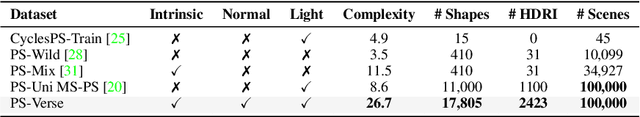

Scalable, Detailed and Mask-Free Universal Photometric Stereo

Mar 28, 2023

Abstract:In this paper, we introduce SDM-UniPS, a groundbreaking Scalable, Detailed, Mask-free, and Universal Photometric Stereo network. Our approach can recover astonishingly intricate surface normal maps, rivaling the quality of 3D scanners, even when images are captured under unknown, spatially-varying lighting conditions in uncontrolled environments. We have extended previous universal photometric stereo networks to extract spatial-light features, utilizing all available information in high-resolution input images and accounting for non-local interactions among surface points. Moreover, we present a new synthetic training dataset that encompasses a diverse range of shapes, materials, and illumination scenarios found in real-world scenes. Through extensive evaluation, we demonstrate that our method not only surpasses calibrated, lighting-specific techniques on public benchmarks, but also excels with a significantly smaller number of input images even without object masks.
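
One standard way to let every surface point interact with every other point is scaled dot-product self-attention over per-pixel features. The sketch below is a single-head version without learned projections, meant only to show what "non-local interactions" means computationally; it is not SDM-UniPS's architecture, whose layers are not described here.

```python
import math


def softmax(xs):
    """Numerically stable softmax of a list of scores."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def self_attention(features):
    """Single-head scaled dot-product self-attention without learned projections.

    features: list of N feature vectors (one per sampled pixel), each of length d.
    Each output is a softmax-weighted average of all N inputs, so every pixel's
    output depends on every other pixel (a non-local interaction).
    """
    d = len(features[0])
    scale = 1.0 / math.sqrt(d)
    out = []
    for q in features:
        scores = [scale * sum(a * b for a, b in zip(q, k)) for k in features]
        w = softmax(scores)
        out.append([sum(wi * v[j] for wi, v in zip(w, features)) for j in range(d)])
    return out


if __name__ == "__main__":
    print(self_attention([[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]))
```

The cost of this operation grows quadratically with the number of pixels N, which matters when the inputs are high-resolution images.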

Non-uniform Sampling Strategies for NeRF on 360° images

Dec 07, 2022

Abstract:In recent years, the performance of novel view synthesis using perspective images has dramatically improved with the advent of neural radiance fields (NeRF). This study proposes two novel techniques that effectively build NeRF for 360° omnidirectional images. Because a 360° image in equirectangular projection (ERP) format has spatial distortion in its high-latitude regions and a 360° wide viewing angle, NeRF's general ray sampling strategy is ineffective. Hence, the view synthesis accuracy of NeRF is limited and learning is inefficient. We propose two non-uniform ray sampling schemes for NeRF suited to 360° images: distortion-aware ray sampling and content-aware ray sampling. We created an evaluation dataset, Synth360, using Replica and SceneCity models of indoor and outdoor scenes, respectively. In experiments, we show that our proposal successfully builds 360° image NeRF in terms of both accuracy and efficiency. The proposal is widely applicable to advanced variants of NeRF: DietNeRF, AugNeRF, and NeRF++ combined with the proposed techniques further improve performance. Moreover, we show that our proposed method enhances the quality of real-world scenes in 360° images. Synth360: https://drive.google.com/drive/folders/1suL9B7DO2no21ggiIHkH3JF3OecasQLb.
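
In an equirectangular image of height H, pixel row v lies at latitude phi_v = pi * (0.5 - (v + 0.5) / H), and its pixels cover a solid angle proportional to cos(phi_v), so uniform pixel sampling over-samples the poles. A minimal distortion-aware sampler therefore draws rows with probability proportional to cos(phi_v) and columns uniformly. This sketch illustrates the general idea only; the exact weighting used in the paper, and its content-aware variant, are not reproduced here.

```python
import math
import random


def row_weights(height):
    """Sampling weight for each ERP row: cos(latitude) at the row centre."""
    return [math.cos(math.pi * (0.5 - (v + 0.5) / height)) for v in range(height)]


def sample_pixels(width, height, n, seed=0):
    """Draw n (u, v) pixel coordinates: rows weighted by cos(latitude), columns uniform."""
    rng = random.Random(seed)
    rows = rng.choices(range(height), weights=row_weights(height), k=n)
    return [(rng.randrange(width), v) for v in rows]


if __name__ == "__main__":
    samples = sample_pixels(64, 32, 20000)
    rows = [v for _, v in samples]
    print("pole row:", rows.count(0), "equator row:", rows.count(15))
```

Rows near the equator, whose pixels each cover more of the sphere, receive far more rays than rows near the poles, so the sampling budget follows the actual scene area rather than the stretched image area.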
