Sarah Miller

Blending Knowledge in Deep Recurrent Networks for Adverse Event Prediction at Hospital Discharge

Apr 09, 2021

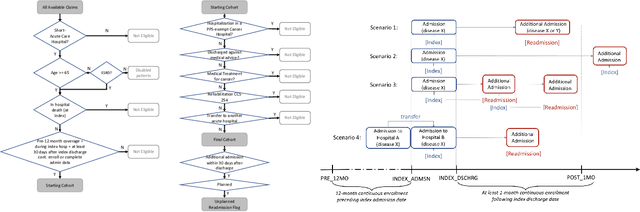

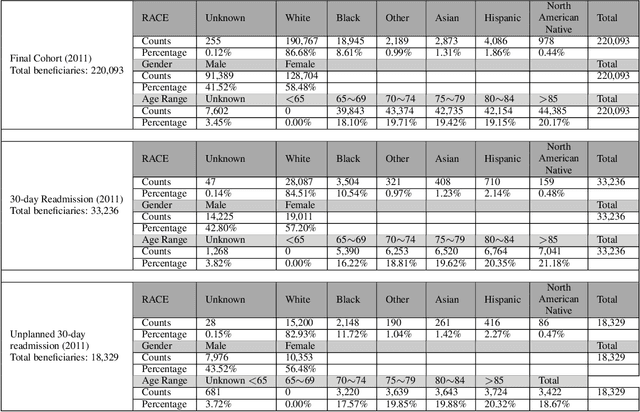

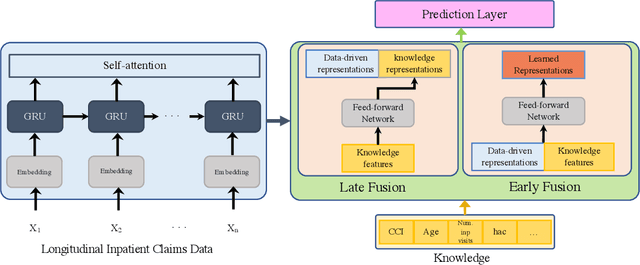

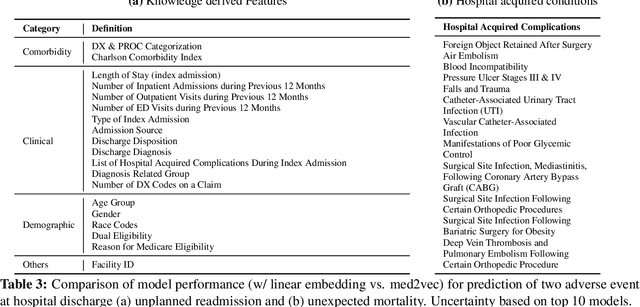

Abstract: Deep learning architectures have an extremely high capacity for modeling complex data in a wide variety of domains. However, these architectures have been limited in their ability to support complex prediction problems using insurance claims data, such as readmission at 30 days, mainly due to data sparsity. Consequently, classical machine learning methods, especially those that embed domain knowledge in handcrafted features, are often on par with, and sometimes outperform, deep learning approaches. In this paper, we illustrate how the potential of deep learning can be realized by blending domain knowledge within deep learning architectures to predict adverse events at hospital discharge, including readmissions. More specifically, we introduce a learning architecture that fuses a representation of patient data, computed by a self-attention-based recurrent neural network, with clinically relevant features. We conduct extensive experiments on a large claims dataset and show that the blended method outperforms the standard machine learning approaches.

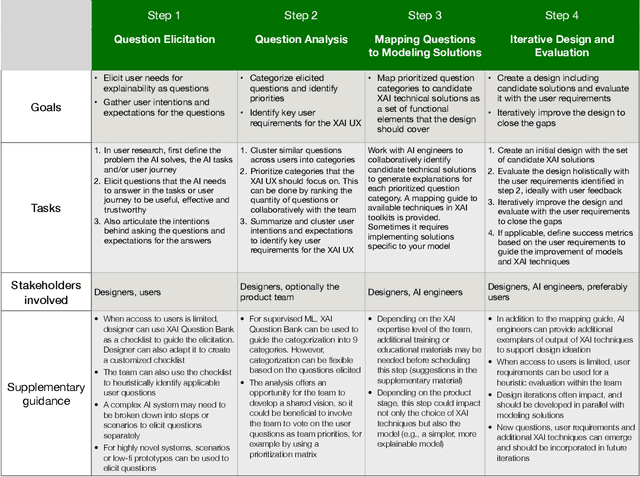

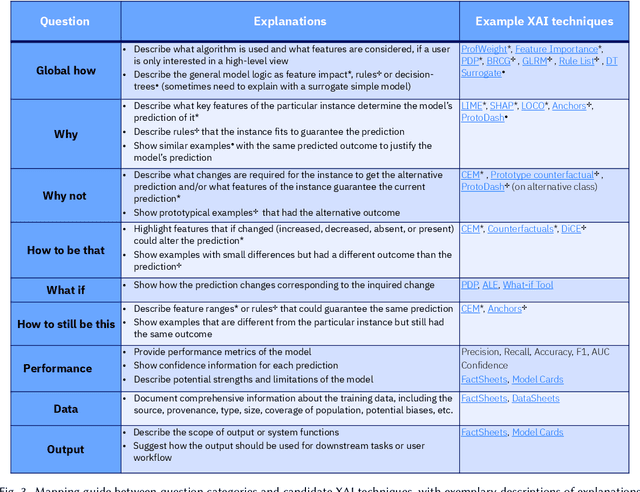

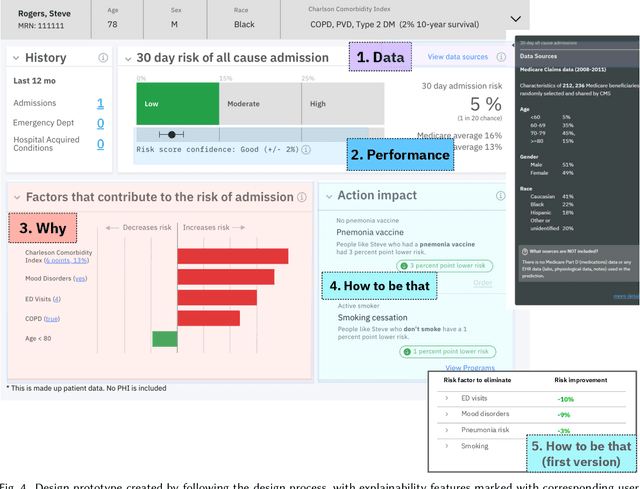

Question-Driven Design Process for Explainable AI User Experiences

Apr 08, 2021

Abstract: A pervasive design issue of AI systems is their explainability: how to provide appropriate information to help users understand the AI. The technical field of explainable AI (XAI) has produced a rich toolbox of techniques. Designers are now tasked with selecting the most suitable XAI techniques and translating them into UX solutions. Informed by our previous work studying design challenges around XAI UX, this work proposes a design process to tackle these challenges. We review our own and related prior work to identify requirements that the process should fulfill, and accordingly propose a Question-Driven Design Process that grounds the user needs, choices of XAI techniques, design, and evaluation of XAI UX all in the user questions. We provide a mapping guide between prototypical user questions and exemplars of XAI techniques, serving as boundary objects to support collaboration between designers and AI engineers. We demonstrate it with a use case of designing XAI for healthcare adverse events prediction, and discuss lessons learned for tackling design challenges of AI systems.

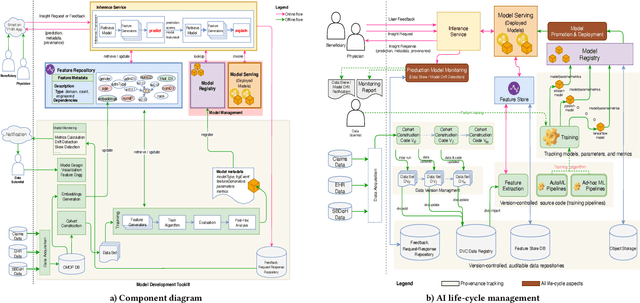

A Canonical Architecture For Predictive Analytics on Longitudinal Patient Records

Jul 24, 2020

Abstract: Many institutions within the healthcare ecosystem are making significant investments in AI technologies to optimize their business operations at lower cost with improved patient outcomes. Despite the hype around AI, the full realization of this potential is seriously hindered by several systemic problems, including data privacy, security, bias, fairness, and explainability. In this paper, we propose a novel canonical architecture for the development of AI models in healthcare that addresses these challenges. This system enables the creation and management of AI predictive models throughout all the phases of their life cycle, including data ingestion, model building, and model promotion in production environments. This paper describes this architecture in detail, along with a qualitative evaluation of our experience of using it on real-world problems.

Questioning the AI: Informing Design Practices for Explainable AI User Experiences

Feb 08, 2020

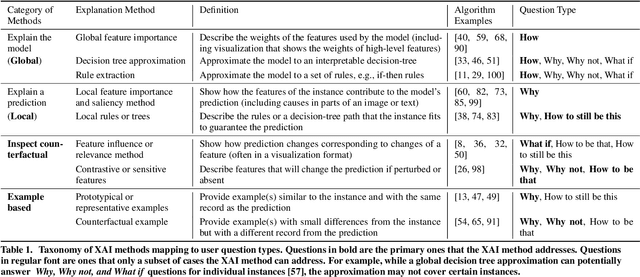

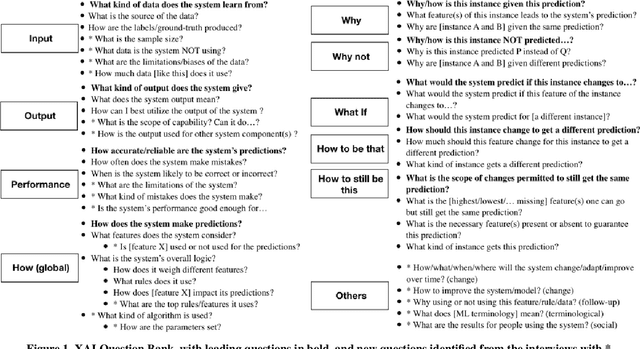

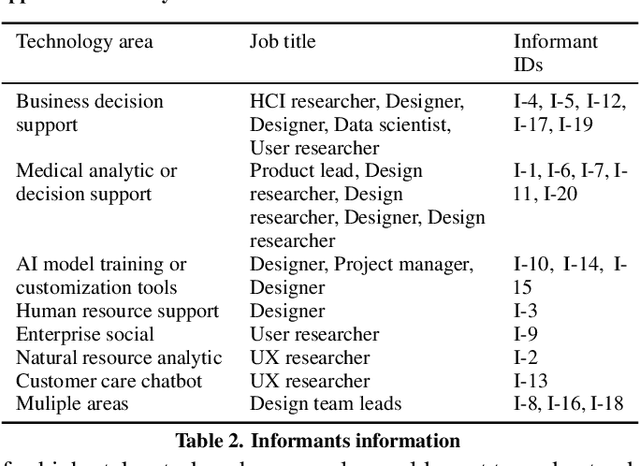

Abstract: A surge of interest in explainable AI (XAI) has led to a vast collection of algorithmic work on the topic. While many recognize the need to incorporate explainability features in AI systems, how to address real-world user needs for understanding AI remains an open question. By interviewing 20 UX and design practitioners working on various AI products, we seek to identify gaps between the current XAI algorithmic work and practices to create explainable AI products. To do so, we develop an algorithm-informed XAI question bank in which user needs for explainability are represented as prototypical questions users might ask about the AI, and use it as a study probe. Our work contributes insights into the design space of XAI, informs efforts to support design practices in this space, and identifies opportunities for future XAI work. We also provide an extended XAI question bank and discuss how it can be used for creating user-centered XAI.
