Question-Driven Design Process for Explainable AI User Experiences

Paper and Code

Apr 08, 2021

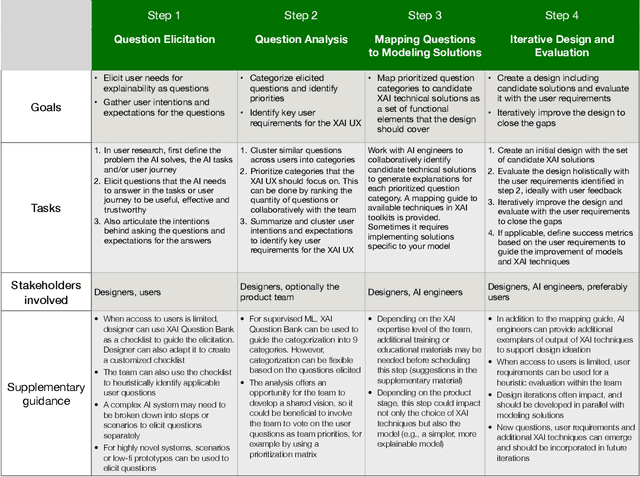

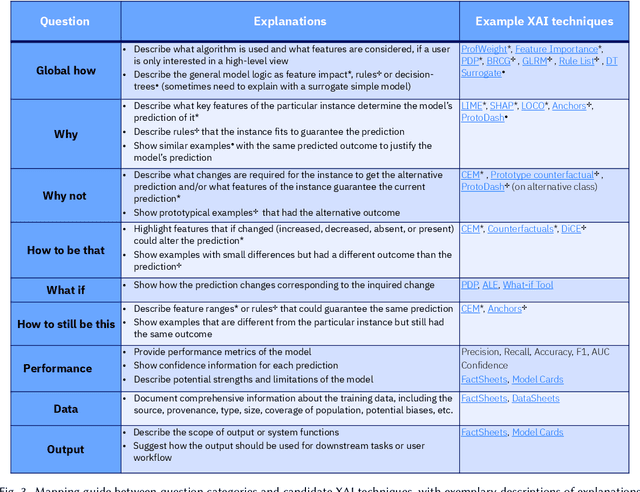

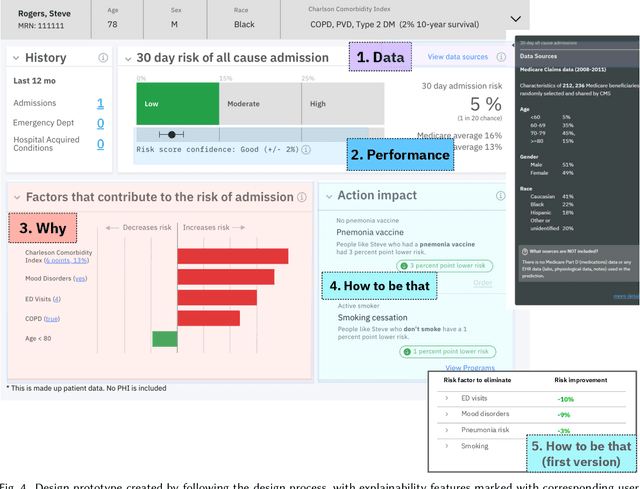

A pervasive design issue of AI systems is their explainability--how to provide appropriate information to help users understand the AI. The technical field of explainable AI (XAI) has produced a rich toolbox of techniques. Designers are now tasked with the challenges of how to select the most suitable XAI techniques and translate them into UX solutions. Informed by our previous work studying design challenges around XAI UX, this work proposes a design process to tackle these challenges. We review our and related prior work to identify requirements that the process should fulfill, and accordingly, propose a Question-Driven Design Process that grounds the user needs, choices of XAI techniques, design, and evaluation of XAI UX all in the user questions. We provide a mapping guide between prototypical user questions and exemplars of XAI techniques, serving as boundary objects to support collaboration between designers and AI engineers. We demonstrate it with a use case of designing XAI for healthcare adverse events prediction, and discuss lessons learned for tackling design challenges of AI systems.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge