Samuel Triest

SALON: Self-supervised Adaptive Learning for Off-road Navigation

Dec 10, 2024

Abstract:Autonomous robot navigation in off-road environments presents a number of challenges due to its lack of structure, making it difficult to handcraft robust heuristics for diverse scenarios. While learned methods using hand labels or self-supervised data improve generalizability, they often require a tremendous amount of data and can be vulnerable to domain shifts. To improve generalization in novel environments, recent works have incorporated adaptation and self-supervision to develop autonomous systems that can learn from their own experiences online. However, current works often rely on significant prior data, for example minutes of human teleoperation data for each terrain type, which is difficult to scale with more environments and robots. To address these limitations, we propose SALON, a perception-action framework for fast adaptation of traversability estimates with minimal human input. SALON rapidly learns online from experience while avoiding out of distribution terrains to produce adaptive and risk-aware cost and speed maps. Within seconds of collected experience, our results demonstrate comparable navigation performance over kilometer-scale courses in diverse off-road terrain as methods trained on 100-1000x more data. We additionally show promising results on significantly different robots in different environments. Our code is available at https://theairlab.org/SALON.

UNRealNet: Learning Uncertainty-Aware Navigation Features from High-Fidelity Scans of Real Environments

Jul 11, 2024

Abstract:Traversability estimation in rugged, unstructured environments remains a challenging problem in field robotics. Often, the need for precise, accurate traversability estimation is in direct opposition to the limited sensing and compute capability present on affordable, small-scale mobile robots. To address this issue, we present a novel method to learn [u]ncertainty-aware [n]avigation features from high-fidelity scans of [real]-world environments (UNRealNet). This network can be deployed on-robot to predict these high-fidelity features using input from lower-quality sensors. UNRealNet predicts dense, metric-space features directly from single-frame lidar scans, thus reducing the effects of occlusion and odometry error. Our approach is label-free, and is able to produce traversability estimates that are robot-agnostic. Additionally, we can leverage UNRealNet's predictive uncertainty to both produce risk-aware traversability estimates, and refine our feature predictions over time. We find that our method outperforms traditional local mapping and inpainting baselines by up to 40%, and demonstrate its efficacy on multiple legged platforms.

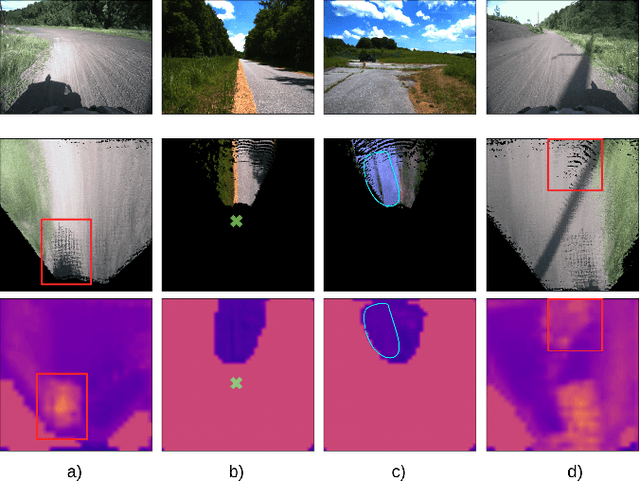

Deep Bayesian Future Fusion for Self-Supervised, High-Resolution, Off-Road Mapping

Mar 18, 2024

Abstract:The limited sensing resolution of resource-constrained off-road vehicles poses significant challenges towards reliable off-road autonomy. To overcome this limitation, we propose a general framework based on fusing the future information (i.e. future fusion) for self-supervision. Recent approaches exploit this future information alongside the hand-crafted heuristics to directly supervise the targeted downstream tasks (e.g. traversability estimation). However, in this paper, we opt for a more general line of development - time-efficient completion of the highest resolution (i.e. 2cm per pixel) BEV map in a self-supervised manner via future fusion, which can be used for any downstream tasks for better longer range prediction. To this end, first, we create a high-resolution future-fusion dataset containing pairs of (RGB / height) raw sparse and noisy inputs and map-based dense labels. Next, to accommodate the noise and sparsity of the sensory information, especially in the distal regions, we design an efficient realization of the Bayes filter onto the vanilla convolutional network via the recurrent mechanism. Equipped with the ideas from SOTA generative models, our Bayesian structure effectively predicts high-quality BEV maps in the distal regions. Extensive evaluation on both the quality of completion and downstream task on our future-fusion dataset demonstrates the potential of our approach.

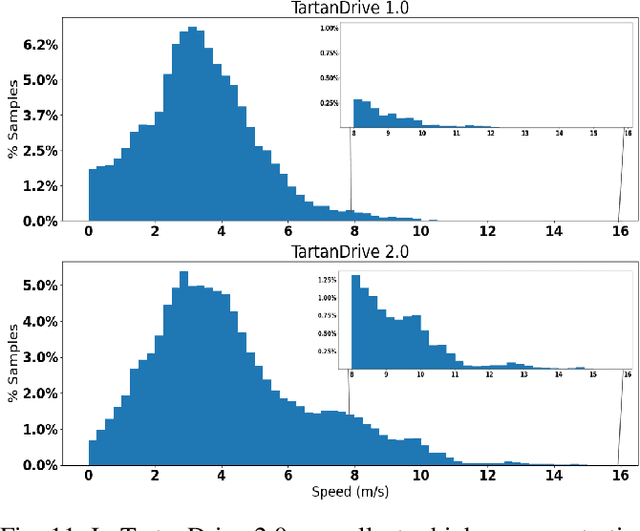

TartanDrive 2.0: More Modalities and Better Infrastructure to Further Self-Supervised Learning Research in Off-Road Driving Tasks

Feb 02, 2024

Abstract:We present TartanDrive 2.0, a large-scale off-road driving dataset for self-supervised learning tasks. In 2021 we released TartanDrive 1.0, which is one of the largest datasets for off-road terrain. As a follow-up to our original dataset, we collected seven hours of data at speeds of up to 15m/s with the addition of three new LiDAR sensors alongside the original camera, inertial, GPS, and proprioceptive sensors. We also release the tools we use for collecting, processing, and querying the data, including our metadata system designed to further the utility of our data. Custom infrastructure allows end users to reconfigure the data to cater to their own platforms. These tools and infrastructure alongside the dataset are useful for a variety of tasks in the field of off-road autonomy and, by releasing them, we encourage collaborative data aggregation. These resources lower the barrier to entry to utilizing large-scale datasets, thereby helping facilitate the advancement of robotics in areas such as self-supervised learning, multi-modal perception, inverse reinforcement learning, and representation learning. The dataset is available at https://github.com/castacks/tartan_drive_2.0.

PIAug -- Physics Informed Augmentation for Learning Vehicle Dynamics for Off-Road Navigation

Nov 01, 2023

Abstract:Modeling the precise dynamics of off-road vehicles is a complex yet essential task due to the challenging terrain they encounter and the need for optimal performance and safety. Recently, there has been a focus on integrating nominal physics-based models alongside data-driven neural networks using Physics Informed Neural Networks. These approaches often assume the availability of a well-distributed dataset; however, this assumption may not hold due to regions in the physical distribution that are hard to collect, such as high-speed motions and rare terrains. Therefore, we introduce a physics-informed data augmentation methodology called PIAug. We show an example use case of the same by modeling high-speed and aggressive motion predictions, given a dataset with only low-speed data. During the training phase, we leverage the nominal model for generating target domain (medium and high velocity) data using the available source data (low velocity). Subsequently, we employ a physics-inspired loss function with this augmented dataset to incorporate prior knowledge of physics into the neural network. Our methodology results in up to 67% less mean error in trajectory prediction in comparison to a standalone nominal model, especially during aggressive maneuvers at speeds outside the training domain. In real-life navigation experiments, our model succeeds in 4x tighter waypoint tracking constraints than the Kinematic Bicycle Model (KBM) at out-of-domain velocities.

Learning Risk-Aware Costmaps via Inverse Reinforcement Learning for Off-Road Navigation

Jan 31, 2023

Abstract:The process of designing costmaps for off-road driving tasks is often a challenging and engineering-intensive task. Recent work in costmap design for off-road driving focuses on training deep neural networks to predict costmaps from sensory observations using corpora of expert driving data. However, such approaches are generally subject to over-confident mispredictions and are rarely evaluated in-the-loop on physical hardware. We present an inverse reinforcement learning-based method of efficiently training deep cost functions that are uncertainty-aware. We do so by leveraging recent advances in highly parallel model-predictive control and robotic risk estimation. In addition to demonstrating improvement at reproducing expert trajectories, we also evaluate the efficacy of these methods in challenging off-road navigation scenarios. We observe that our method significantly outperforms a geometric baseline, resulting in 44% improvement in expert path reconstruction and 57% fewer interventions in practice. We also observe that varying the risk tolerance of the vehicle results in qualitatively different navigation behaviors, especially with respect to higher-risk scenarios such as slopes and tall grass.

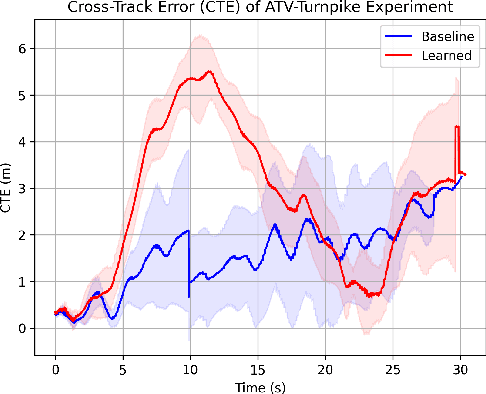

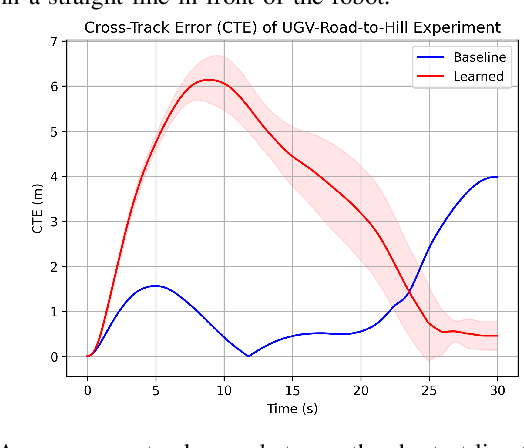

How Does It Feel? Self-Supervised Costmap Learning for Off-Road Vehicle Traversability

Sep 22, 2022

Abstract:Estimating terrain traversability in off-road environments requires reasoning about complex interaction dynamics between the robot and these terrains. However, it is challenging to build an accurate physics model, or create informative labels to learn a model in a supervised manner, for these interactions. We propose a method that learns to predict traversability costmaps by combining exteroceptive environmental information with proprioceptive terrain interaction feedback in a self-supervised manner. Additionally, we propose a novel way of incorporating robot velocity in the costmap prediction pipeline. We validate our method in multiple short and large-scale navigation tasks on a large, autonomous all-terrain vehicle (ATV) on challenging off-road terrains, and demonstrate ease of integration on a separate large ground robot. Our short-scale navigation results show that using our learned costmaps leads to overall smoother navigation, and provides the robot with a more fine-grained understanding of the interactions between the robot and different terrain types, such as grass and gravel. Our large-scale navigation trials show that we can reduce the number of interventions by up to 57% compared to an occupancy-based navigation baseline in challenging off-road courses ranging from 400 m to 3150 m.

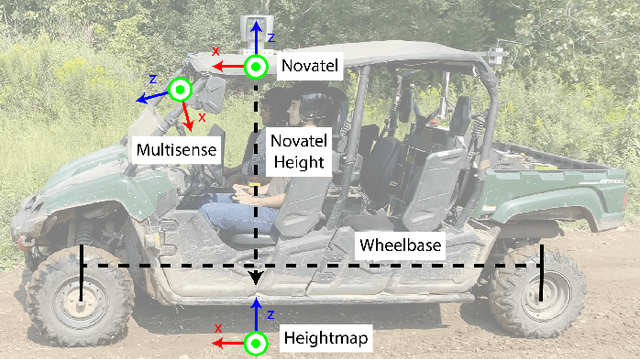

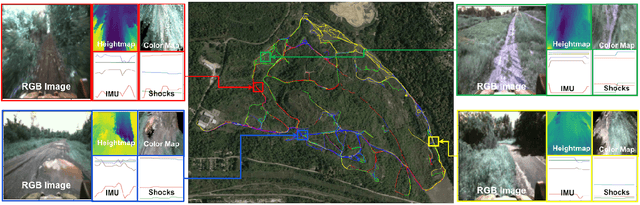

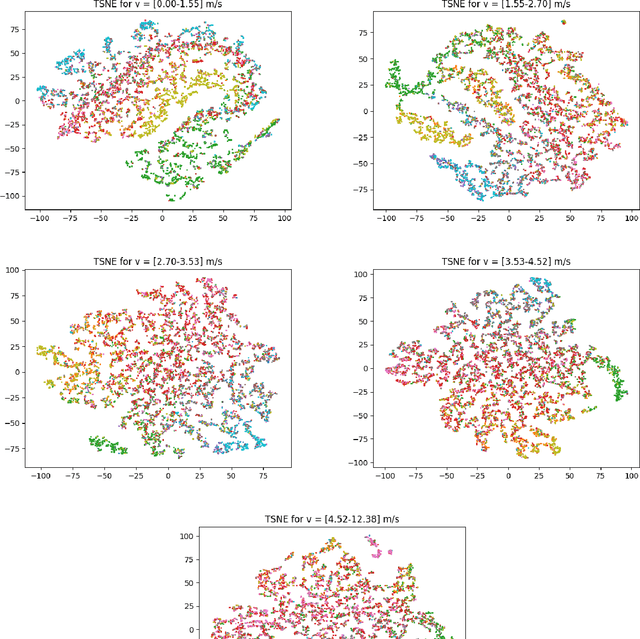

TartanDrive: A Large-Scale Dataset for Learning Off-Road Dynamics Models

May 03, 2022

Abstract:We present TartanDrive, a large scale dataset for learning dynamics models for off-road driving. We collected a dataset of roughly 200,000 off-road driving interactions on a modified Yamaha Viking ATV with seven unique sensing modalities in diverse terrains. To the authors' knowledge, this is the largest real-world multi-modal off-road driving dataset, both in terms of number of interactions and sensing modalities. We also benchmark several state-of-the-art methods for model-based reinforcement learning from high-dimensional observations on this dataset. We find that extending these models to multi-modality leads to significant performance on off-road dynamics prediction, especially in more challenging terrains. We also identify some shortcomings with current neural network architectures for the off-road driving task. Our dataset is available at https://github.com/castacks/tartan_drive.
