Sam Kriegman

University of Vermont

Reconfigurable legged metamachines that run on autonomous modular legs

May 01, 2025

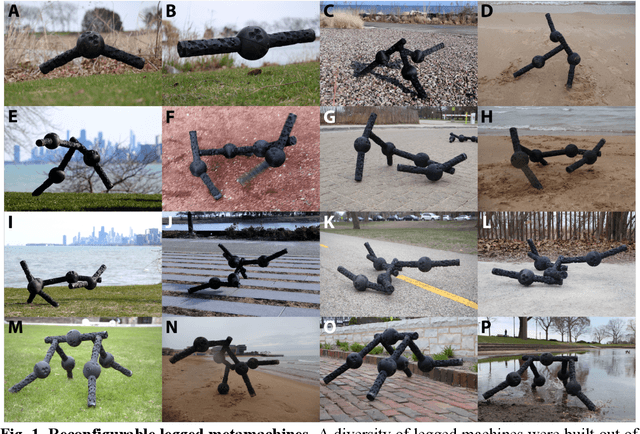

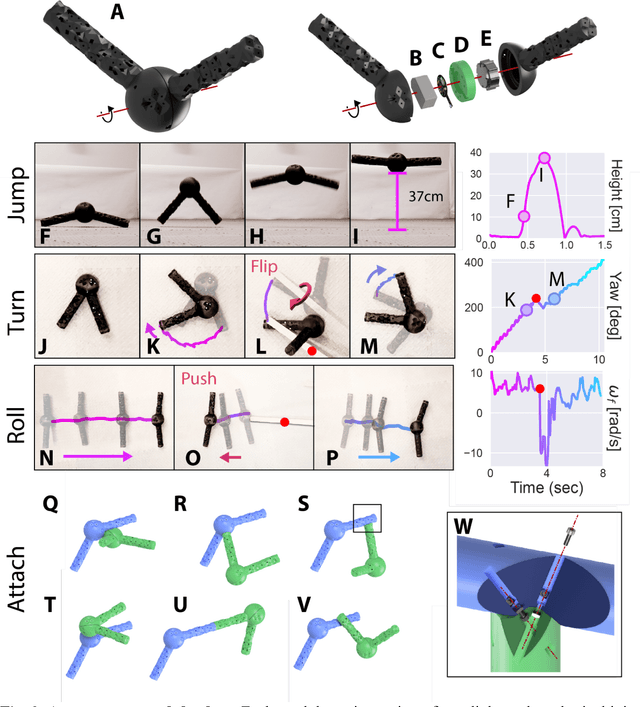

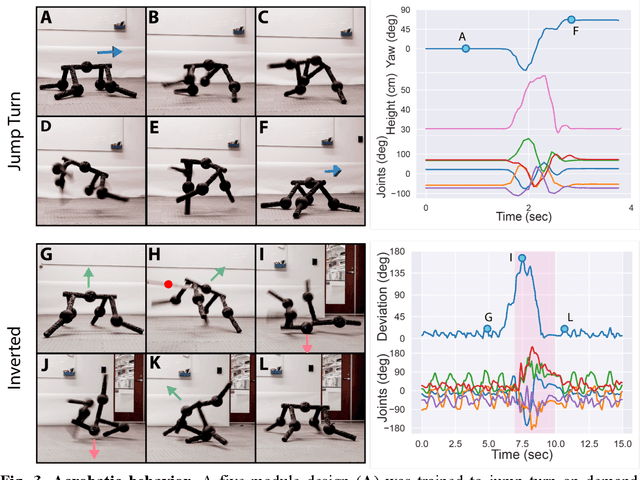

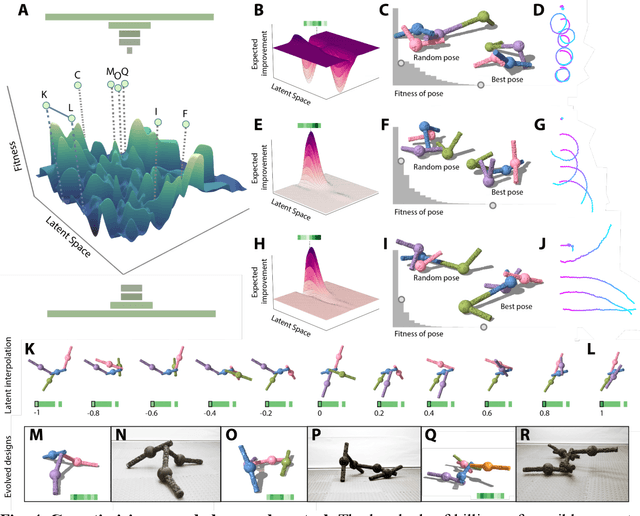

Abstract: Legged machines are becoming increasingly agile and adaptive, but they have so far lacked the basic reconfigurability of legged animals, which have been rearranged and reshaped to fill millions of niches. Unlike their biological counterparts, legged machines have largely converged over the past decade to canonical quadrupedal and bipedal architectures that cannot be easily reconfigured to meet new tasks or recover from injury. Here we introduce autonomous modular legs: agile yet minimal, single-degree-of-freedom jointed links that can learn complex dynamic behaviors and may be freely attached to form legged metamachines at the meter scale. This enables rapid repair, redesign, and recombination of highly dynamic modular agents that move quickly and acrobatically (non-quasistatically) through unstructured environments. Because each module is itself a complete agent, legged metamachines are able to sustain deep structural damage that would completely disable other legged robots. We also show how to encode the vast space of possible body configurations into a compact latent design genome that can be efficiently explored, revealing a wide diversity of novel legged forms.

Generating Freeform Endoskeletal Robots

Dec 02, 2024

Abstract: The automatic design of embodied agents (e.g. robots) has existed for 31 years and is experiencing a renaissance of interest in the literature. To date, however, the field has remained narrowly focused on two kinds of anatomically simple robots: (1) fully rigid, jointed bodies; and (2) fully soft, jointless bodies. Here we bridge these two extremes with the open-ended creation of terrestrial endoskeletal robots: deformable soft bodies that leverage jointed internal skeletons to move efficiently across land. Simultaneous de novo generation of external and internal structures is achieved by (i) modeling 3D endoskeletal body plans as integrated collections of elastic and rigid cells that directly attach to form soft tissues anchored to compound rigid bodies; (ii) encoding these discrete mechanical subsystems into a continuous yet coherent latent embedding; (iii) optimizing the sensorimotor coordination of each decoded design using model-free reinforcement learning; and (iv) navigating this smooth yet highly non-convex latent manifold using evolutionary strategies. This yields an endless stream of novel species of "higher robots" that, like all higher animals, harness the mechanical advantages of both elastic tissues and skeletal levers for terrestrial travel. It also provides a plug-and-play experimental platform for benchmarking evolutionary design and representation learning algorithms in complex hierarchical embodied systems.

Evolution and learning in differentiable robots

May 23, 2024

Abstract: The automatic design of robots has existed for 30 years but has been constricted by serial non-differentiable design evaluations, premature convergence to simple bodies or clumsy behaviors, and a lack of sim2real transfer to physical machines. Thus, here we employ massively parallel differentiable simulations to rapidly and simultaneously optimize individual neural control of behavior across a large population of candidate body plans and return a fitness score for each design based on the performance of its fully optimized behavior. Non-differentiable changes to the mechanical structure of each robot in the population (mutations that rearrange, combine, add, or remove body parts) were applied by a genetic algorithm in an outer loop of search, generating a continuous flow of novel morphologies with highly coordinated and graceful behaviors honed by gradient descent. This enabled the exploration of several orders of magnitude more designs than all previous methods, despite the fact that robots here have the potential to be much more complex, in terms of number of independent motors, than those in prior studies. We found that evolution reliably produces "increasingly differentiable" robots: body plans that smooth the loss landscape in which learning operates and thereby provide better training paths toward performant behaviors. Finally, one of the highly differentiable morphologies discovered in simulation was realized as a physical robot and shown to retain its optimized behavior. This provides a cyberphysical platform to investigate the relationship between evolution and learning in biological systems and broadens our understanding of how a robot's physical structure can influence the ability to train policies for it. Videos and code at https://sites.google.com/view/eldir.
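The nested search described in this abstract (a genetic algorithm over body plans in an outer loop, gradient descent over control in an inner loop) can be illustrated with a toy sketch. Everything here is an assumption made for illustration and is not the authors' code: a "body" is just a list of positive curvatures, one per hypothetical motor; the "simulator" is a quadratic loss; and designs are evaluated serially rather than in massively parallel differentiable simulation. The names `train_controller`, `mutate`, and `evolve` are hypothetical.

```python
import random


def train_controller(body, steps=50, lr=0.1):
    """Inner loop: gradient descent on a toy loss, sum(c * w**2).

    `body` is a list of curvatures (a stand-in for morphology, assumed
    here); each weight `w` plays the role of one motor's control parameter.
    Returns the loss after training, so lower is better.
    """
    weights = [1.0] * len(body)
    for _ in range(steps):
        # The gradient of c * w**2 with respect to w is 2 * c * w.
        weights = [w - lr * 2.0 * c * w for w, c in zip(weights, body)]
    return sum(c * w * w for w, c in zip(weights, body))


def mutate(body, rng):
    """Outer-loop operator: a non-differentiable structural change."""
    child = list(body)
    op = rng.choice(["add", "remove", "alter"])
    if op == "add":
        child.append(rng.uniform(0.1, 4.0))
    elif op == "remove" and len(child) > 1:
        child.pop(rng.randrange(len(child)))
    else:
        child[rng.randrange(len(child))] = rng.uniform(0.1, 4.0)
    return child


def evolve(generations=20, pop_size=16, seed=0):
    """Genetic algorithm over bodies; fitness is the post-training loss."""
    rng = random.Random(seed)
    population = [[rng.uniform(0.1, 4.0) for _ in range(3)]
                  for _ in range(pop_size)]
    history = []
    for _ in range(generations):
        # Score every design by the loss its trained controller reaches.
        ranked = sorted(population, key=train_controller)
        history.append(train_controller(ranked[0]))
        # Keep the better half unchanged and refill with mutated copies.
        survivors = ranked[: pop_size // 2]
        population = survivors + [mutate(p, rng) for p in survivors]
    return history
```

Calling `evolve()` returns the best post-training loss per generation. Because the better half of each population is carried over unchanged, that value never gets worse from one generation to the next in this toy setting.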

A non-cubic space-filling modular robot

Mar 02, 2024

Abstract: Space-filling building blocks of diverse shape permeate nature at all levels of organization, from atoms to honeycombs, and have proven useful in artificial systems, from molecular containers to clay bricks. But, despite the wide variety of space-filling polyhedra known to mathematics, only the cube has been explored in robotics. Thus, here we roboticize a non-cubic space-filling shape: the rhombic dodecahedron. This geometry offers an appealing alternative to cubes as it greatly simplifies rotational motion of one cell about the edge of another, and increases the number of neighbors each cell can communicate with and hold on to. To better understand the challenges and opportunities of these and other space-filling machines, we manufactured 48 rhombic dodecahedral cells and used them to build various superstructures. We report the locomotive ability of some of the structures we built, and discuss the advantages and disadvantages of the different designs we tested. We also introduce a strategy for genderless passive docking of cells that generalizes to any polyhedra with radially symmetrical faces. Future work will allow the cells to freely roll or rotate about one another so that they may realize the full potential of their unique shape.
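The neighbor-count claim can be checked with a small lattice calculation. The sketch below relies on a standard geometric fact rather than anything from the paper's code: rhombic dodecahedra tile space with their centers on the face-centered cubic lattice (integer points whose coordinates sum to an even number), so face-sharing neighbors are the nearest lattice points. The function names are hypothetical.

```python
from itertools import product


def cubic_face_neighbors():
    """Offsets to cells sharing a face with a cube in a cubic tiling."""
    # Exactly one coordinate changes by +/-1.
    return [o for o in product((-1, 0, 1), repeat=3)
            if sum(abs(c) for c in o) == 1]


def rhombic_dodecahedral_face_neighbors():
    """Offsets to cells sharing a face in a rhombic dodecahedral tiling.

    Cell centers are taken to lie on the face-centered cubic lattice, so
    the nearest neighbors change exactly two coordinates by +/-1.
    """
    return [o for o in product((-1, 0, 1), repeat=3)
            if sum(abs(c) for c in o) == 2]


print(len(cubic_face_neighbors()))                 # 6
print(len(rhombic_dodecahedral_face_neighbors()))  # 12
```

Under this model each rhombic dodecahedral cell has twelve face-sharing neighbors, one per face, compared with six for a cube.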

Reinforcement learning for freeform robot design

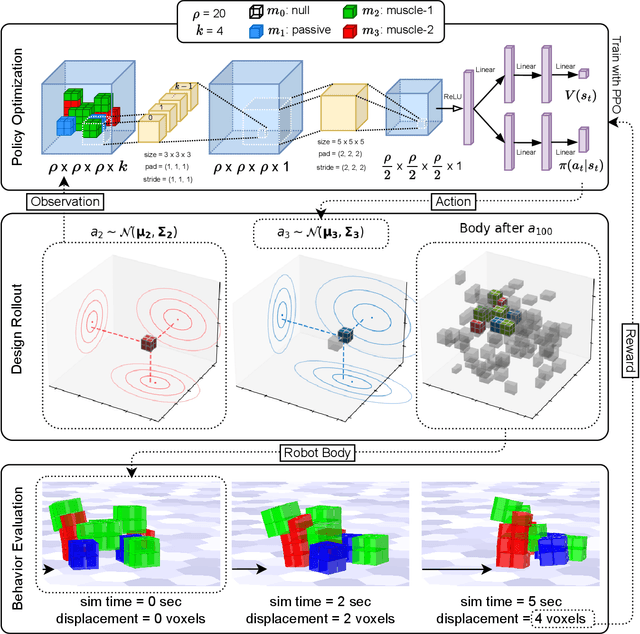

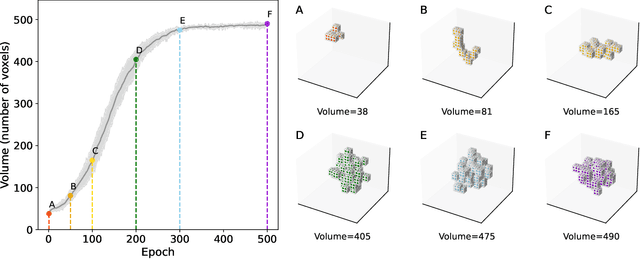

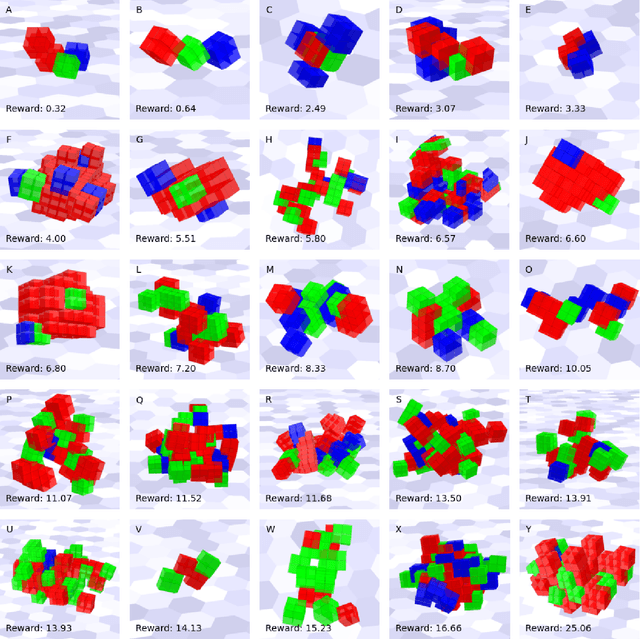

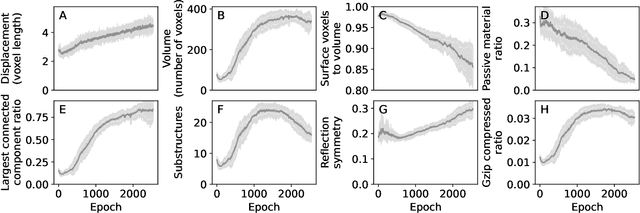

Oct 09, 2023

Abstract: Inspired by the necessity of morphological adaptation in animals, a growing body of work has attempted to expand robot training to encompass physical aspects of a robot's design. However, reinforcement learning methods capable of optimizing the 3D morphology of a robot have been restricted to reorienting or resizing the limbs of a predetermined and static topological genus. Here we show policy gradients for designing freeform robots with arbitrary external and internal structure. This is achieved through actions that deposit or remove bundles of atomic building blocks to form higher-level nonparametric macrostructures such as appendages, organs and cavities. Although results are provided for open loop control only, we discuss how this method could be adapted for closed loop control and sim2real transfer to physical machines in future.

Glamour muscles: why having a body is not what it means to be embodied

Jul 17, 2023

Abstract: Embodiment has recently enjoyed renewed consideration as a means to amplify the faculties of smart machines. Proponents of embodiment seem to imply that optimizing for movement in physical space promotes something more than the acquisition of niche capabilities for solving problems in physical space. However, there is nothing in principle which should so distinguish the problem of action selection in physical space from the problem of action selection in more abstract spaces, like that of language. Rather, what makes embodiment persuasive as a means toward higher intelligence is that it promises to capture, but does not actually realize, contingent facts about certain bodies (living intelligence) and the patterns of activity associated with them. These include an active resistance to annihilation and revisable constraints on the processes that make the world intelligible. To be theoretically or practically useful beyond the creation of niche tools, we argue that "embodiment" cannot be the trivial fact of a body, nor its movement through space, but the perpetual negotiation of the function, design, and integrity of that body; that is, to participate in what it means to *constitute* a given body. It follows that computer programs which are strictly incapable of traversing physical space might, under the right conditions, be more embodied than a walking, talking robot.

Efficient automatic design of robots

Jun 05, 2023

Abstract: Robots are notoriously difficult to design because of complex interdependencies between their physical structure, sensory and motor layouts, and behavior. Despite this, almost every detail of every robot built to date has been manually determined by a human designer after several months or years of iterative ideation, prototyping, and testing. Inspired by evolutionary design in nature, the automated design of robots using evolutionary algorithms has been attempted for two decades, but it too remains inefficient: days of supercomputing are required to design robots in simulation that, when manufactured, exhibit desired behavior. Here we show for the first time de novo optimization of a robot's structure to exhibit a desired behavior, within seconds on a single consumer-grade computer, and the manufactured robot's retention of that behavior. Unlike other gradient-based robot design methods, this algorithm does not presuppose any particular anatomical form; starting instead from a randomly generated apodous body plan, it consistently discovers legged locomotion, the most efficient known form of terrestrial movement. If combined with automated fabrication and scaled up to more challenging tasks, this advance promises near-instantaneous design, manufacture, and deployment of unique and useful machines for medical, environmental, vehicular, and space-based tasks.

Scale invariant robot behavior with fractals

Mar 08, 2021

Abstract: Robots deployed at size scales differing by orders of magnitude, and that retain the same desired behavior at any of those scales, would greatly expand the environments in which the robots could operate. However, it is currently not known whether such robots exist, and, if they do, how to design them. Since self similar structures in nature often exhibit self similar behavior at different scales, we hypothesize that there may exist robot designs that have the same property. Here we demonstrate that this is indeed the case for some, but not all, modular soft robots: there are robot designs that exhibit a desired behavior at a small size scale, and if copies of that robot are attached together to realize the same design at higher scales, those larger robots exhibit similar behavior. We show how to find such designs in simulation using an evolutionary algorithm. Further, when fractal attachment is not assumed and attachment geometries must thus be evolved along with the design of the base robot unit, scale invariant behavior is not achieved, demonstrating that structural self similarity, when combined with appropriate designs, is a useful path to realizing scale invariant robot behavior. We validate our findings by demonstrating successful transferal of self similar structure and behavior to pneumatically-controlled soft robots. Finally, we show that biobots can spontaneously exhibit self similar attachment geometries, thereby suggesting that self similar behavior via self similar structure may be realizable across a wide range of robot platforms in future.

Gaining environments through shape change

Aug 14, 2020

Abstract: Many organisms, including various species of spiders and caterpillars, change their shape to switch gaits and adapt to different environments. Recent technological advances, ranging from stretchable circuits to highly deformable soft robots, have begun to make shape changing robots a possibility. However, it is currently unclear how and when shape change should occur, and what capabilities could be gained, leading to a wide range of unsolved design and control problems. To begin addressing these questions, here we simulate, design, and build a soft robot that utilizes shape change to achieve locomotion over both a flat and inclined surface. Modeling this robot in simulation, we explore its capabilities in two environments and demonstrate the automated discovery of environment-specific shapes and gaits that successfully transfer to the physical hardware. We found that the shape-changing robot traverses these environments better than an equivalent but non-morphing robot, in simulation and reality.

Scalable sim-to-real transfer of soft robot designs

Nov 23, 2019

Abstract: The manual design of soft robots and their controllers is notoriously challenging, but it could be augmented (or, in some cases, entirely replaced) by automated design tools. Machine learning algorithms can automatically propose, test, and refine designs in simulation, and the most promising ones can then be manufactured in reality (sim2real). However, it is currently not known how to guarantee that behavior generated in simulation can be preserved when deployed in reality. Although many previous studies have devised training protocols that facilitate sim2real transfer of control policies, little to no work has investigated the simulation-reality gap as a function of morphology. This is due in part to an overall lack of tools capable of systematically designing and rapidly manufacturing robots. Here we introduce a low cost, open source, and modular soft robot design and construction kit, and use it to simulate, fabricate, and measure the simulation-reality gap of minimally complex yet soft, locomoting machines. We prove the scalability of this approach by transferring an order of magnitude more robot designs from simulation to reality than any other method. The kit and its instructions can be found here: https://github.com/skriegman/sim2real4designs
