S. Farokh Atashzar

Tunable Passivity Control for Centralized Multiport Networked Systems

Nov 07, 2025

Abstract:Centralized Multiport Networked Dynamic (CMND) systems have emerged as a key architecture with applications in several complex network systems, such as multilateral telerobotics and multi-agent control. These systems consist of a hub node/subsystem connecting with multiple remote nodes/subsystems via a networked architecture. One challenge for this system is stability, which can be affected by non-ideal network artifacts. Conventional passivity-based approaches can stabilize the system under specialized applications like small-scale networked systems. However, those conventional passive stabilizers have several restrictions, such as distributing compensation across subsystems in a decentralized manner, limiting flexibility, and, at the same time, relying on the restrictive assumptions of node passivity. This paper synthesizes a centralized optimal passivity-based stabilization framework for CMND systems. It consists of a centralized passivity observer monitoring overall energy flow and an optimal passivity controller that distributes the just-needed dissipation among various nodes, guaranteeing strict passivity and, thus, L2 stability. The proposed data-driven model-free approach, i.e., Tunable Centralized Optimal Passivity Control (TCoPC), optimizes total performance based on the prescribed dissipation distribution strategy while ensuring stability. The controller can put high dissipation loads on some sub-networks while relaxing the dissipation on other nodes. Simulation results demonstrate the proposed framework's performance in a complex task under different time-varying delay scenarios while relaxing the remote nodes' minimum-phase and passivity assumptions, enhancing the scalability and generalizability.
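The observer/controller split described in this abstract can be illustrated with a generic time-domain passivity sketch. This is not the TCoPC algorithm from the paper: the function name `passivity_step`, the sign convention (positive force times velocity means energy absorbed by the network), and the fixed per-port `weights` are assumptions made only for illustration. The sketch accumulates the net energy over all ports and, when the total goes negative, adds just enough damping to cancel the deficit, split across moving ports according to the weights.

```python
def passivity_step(energy, forces, velocities, weights, dt, eps=1e-9):
    """One sample of a generic multiport passivity observer/controller.

    Hypothetical sketch: returns (new_energy, adjusted_forces).
    """
    # Observer: accumulate net energy flowing into the network over all ports.
    energy += dt * sum(f * v for f, v in zip(forces, velocities))
    if energy >= 0.0:
        return energy, list(forces)  # passive so far, no dissipation needed

    deficit = -energy
    # Only ports that are moving can dissipate energy through damping.
    active = [i for i, v in enumerate(velocities) if abs(v) > eps]
    total_w = sum(weights[i] for i in active)
    if not active or total_w <= 0.0:
        return energy, list(forces)  # deficit is carried to the next sample

    adjusted = list(forces)
    for i in active:
        # Share of the deficit assigned to port i by the prescribed weights.
        share = deficit * weights[i] / total_w
        # Damping coefficient that dissipates exactly `share` in this sample.
        alpha = share / (dt * velocities[i] ** 2)
        adjusted[i] = forces[i] + alpha * velocities[i]
    return 0.0, adjusted
```

Raising one port's weight shifts more of the damping onto that port, which loosely mirrors the tunable dissipation distribution mentioned above; the optimization the paper performs over that distribution is not reproduced here.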

Encoding Biomechanical Energy Margin into Passivity-based Synchronization for Networked Telerobotic Systems

Nov 07, 2025

Abstract:Maintaining system stability and accurate position tracking is imperative in networked robotic systems, particularly for haptics-enabled human-robot interaction. Recent literature has integrated human biomechanics into the stabilizers implemented for teleoperation, enhancing force preservation while guaranteeing convergence and safety. However, position desynchronization due to imperfect communication and non-passive behaviors remains a challenge. This paper proposes a two-port biomechanics-aware passivity-based synchronizer and stabilizer, referred to as TBPS2. This stabilizer optimizes position synchronization by leveraging human biomechanics while reducing the stabilizer's conservatism in its activation. We provide the mathematical design synthesis of the stabilizer and the proof of stability. We also conducted a series of grid simulations and systematic experiments, comparing its performance with that of state-of-the-art solutions under varying time delays and environmental conditions.

A Systematic Review of EEG-based Machine Intelligence Algorithms for Depression Diagnosis, and Monitoring

Mar 25, 2025

Abstract:Depression disorder is a serious health condition that has affected the lives of millions of people around the world. Diagnosis of depression is a challenging practice that relies heavily on subjective studies and, in most cases, suffers from late findings. Electroencephalography (EEG) biomarkers have been suggested and investigated in recent years as a potentially transformative objective practice. In this article, for the first time, a detailed systematic review of EEG-based depression diagnosis approaches is conducted using advanced machine learning techniques and statistical analyses. For this, 938 potentially relevant articles (since 1985) were initially detected and filtered into 139 relevant articles based on the review scheme 'preferred reporting items for systematic reviews and meta-analyses (PRISMA).' This article compares and discusses the selected articles and categorizes them according to the type of machine learning techniques and statistical analyses. Algorithms, preprocessing techniques, extracted features, and data acquisition systems are discussed and summarized. This review paper explains the existing challenges of the current algorithms and sheds light on the future direction of the field. This systematic review outlines the issues and challenges in machine intelligence for EEG-based diagnosis of depression that can be addressed in future studies and possibly in future wearable technologies.

EEG-Based Analysis of Brain Responses in Multi-Modal Human-Robot Interaction: Modulating Engagement

Nov 27, 2024

Abstract:User engagement, cognitive participation, and motivation during task execution in physical human-robot interaction are crucial for motor learning. These factors are especially important in contexts like robotic rehabilitation, where neuroplasticity is targeted. However, traditional robotic rehabilitation systems often face challenges in maintaining user engagement, leading to unpredictable therapeutic outcomes. To address this issue, various techniques, such as assist-as-needed controllers, have been developed to prevent user slacking and encourage active participation. In this paper, we introduce a new direction through a novel multi-modal robotic interaction designed to enhance user engagement by synergistically integrating visual, motor, cognitive, and auditory (speech recognition) tasks into a single, comprehensive activity. To assess engagement quantitatively, we compared multiple electroencephalography (EEG) biomarkers between this multi-modal protocol and a traditional motor-only protocol. Fifteen healthy adult participants completed 100 trials of each task type. Our findings revealed that EEG biomarkers, particularly relative alpha power, showed statistically significant improvements in engagement during the multi-modal task compared to the motor-only task. Moreover, while engagement decreased over time in the motor-only task, the multi-modal protocol maintained consistent engagement, suggesting that users could remain engaged for longer therapy sessions. Our observations on neural responses during interaction indicate that the proposed multi-modal approach can effectively enhance user engagement, which is critical for improving outcomes. This is the first time that objective neural response highlights the benefit of a comprehensive robotic intervention combining motor, cognitive, and auditory functions in healthy subjects.

The Role of Functional Muscle Networks in Improving Hand Gesture Perception for Human-Machine Interfaces

Aug 05, 2024

Abstract:Developing accurate hand gesture perception models is critical for various robotic applications, enabling effective communication between humans and machines and directly impacting neurorobotics and interactive robots. Recently, surface electromyography (sEMG) has been explored for its rich informational context and accessibility when combined with advanced machine learning approaches and wearable systems. The literature presents numerous approaches to boost performance while ensuring robustness for neurorobots using sEMG, often resulting in models requiring high processing power, large datasets, and less scalable solutions. This paper addresses this challenge by proposing the decoding of muscle synchronization rather than individual muscle activation. We study coherence-based functional muscle networks as the core of our perception model, proposing that functional synchronization between muscles and the graph-based network of muscle connectivity encode contextual information about intended hand gestures. This can be decoded using shallow machine learning approaches without the need for deep temporal networks. Our technique could impact myoelectric control of neurorobots by reducing computational burdens and enhancing efficiency. The approach is benchmarked on the Ninapro database, which contains 12 EMG signals from 40 subjects performing 17 hand gestures. It achieves an accuracy of 85.1%, demonstrating improved performance compared to existing methods while requiring much less computational power. The results support the hypothesis that a coherence-based functional muscle network encodes critical information related to gesture execution, significantly enhancing hand gesture perception with potential applications for neurorobotic systems and interactive machines.

An LSTM Feature Imitation Network for Hand Movement Recognition from sEMG Signals

May 23, 2024

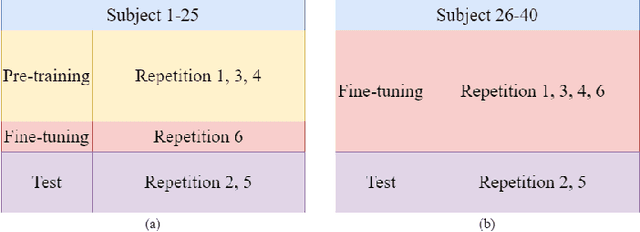

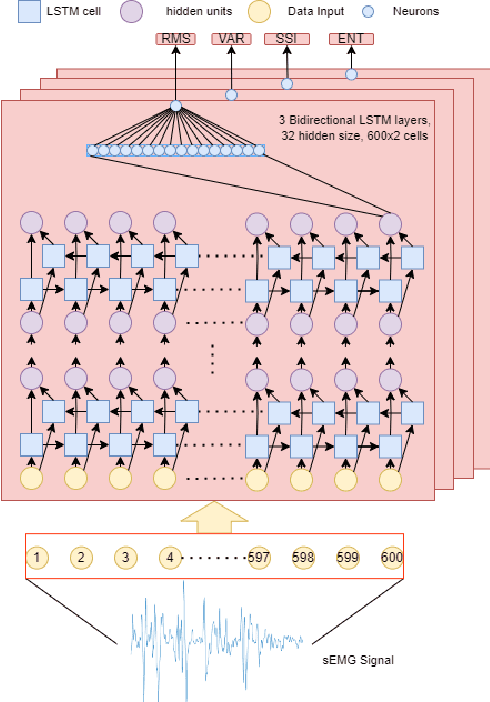

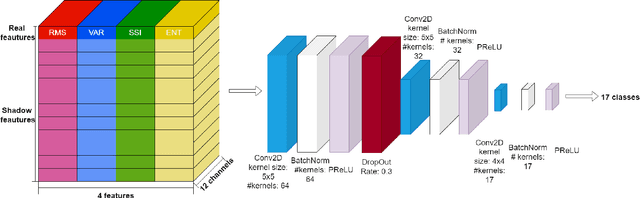

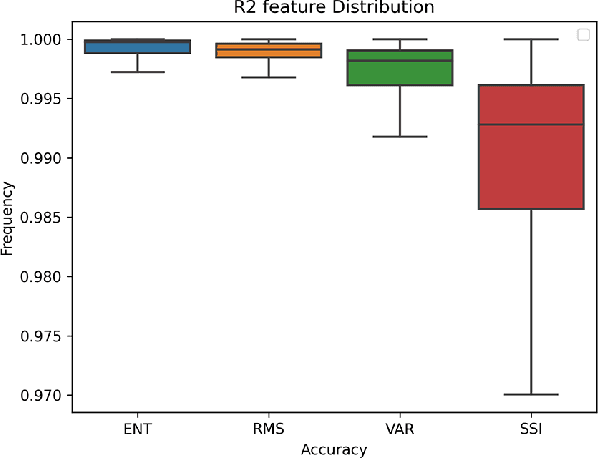

Abstract:Surface Electromyography (sEMG) is a non-invasive signal that is used in the recognition of hand movement patterns, the diagnosis of diseases, and the robust control of prostheses. Despite the remarkable success of recent end-to-end Deep Learning approaches, they are still limited by the need for large amounts of labeled data. To alleviate the requirement for big data, researchers utilize Feature Engineering, which involves decomposing the sEMG signal into several spatial, temporal, and frequency features. In this paper, we propose utilizing a feature-imitating network (FIN) for closed-form temporal feature learning over a 300 ms signal window on Ninapro DB2, and applying it to the task of recognizing 17 hand movements. We implement a lightweight LSTM-FIN network to imitate four standard temporal features (entropy, root mean square, variance, simple square integral). We then explore transfer learning capabilities by applying the pre-trained LSTM-FIN for tuning to a downstream hand movement recognition task. We observed that the LSTM network can achieve up to 99% R2 accuracy in feature reconstruction and 80% accuracy in hand movement recognition. Our results also showed that the model can be robustly applied for both within- and cross-subject movement recognition, as well as in simulated low-latency environments. Overall, our work demonstrates the potential of the FIN modeling paradigm in data-scarce scenarios for sEMG signal processing.
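Three of the four closed-form temporal features named in this abstract have standard textbook definitions, so the targets a feature-imitating network would learn to reproduce can be sketched directly. The function names below are hypothetical, the 2000 Hz sampling rate in the usage lines is an assumed value for illustration, and entropy is left out because the abstract does not say which definition is used.

```python
import math


def window_samples(duration_ms, fs_hz):
    """Number of samples in a window of the given duration (hypothetical helper)."""
    return int(round(duration_ms * fs_hz / 1000.0))


def temporal_features(window):
    """Closed-form temporal features of one single-channel sEMG window."""
    n = len(window)
    mean = sum(window) / n
    # Simple square integral: sum of squared samples over the window.
    ssi = sum(x * x for x in window)
    # Root mean square: square root of the mean squared amplitude.
    rms = math.sqrt(ssi / n)
    # Population variance (divides by n, not n - 1).
    variance = sum((x - mean) ** 2 for x in window) / n
    return {"rms": rms, "variance": variance, "ssi": ssi}


# Usage: a 300 ms window at an assumed 2000 Hz rate holds 600 samples.
n = window_samples(300, 2000)
features = temporal_features([1.0, -1.0] * (n // 2))
```

In a feature-imitation setup, values like these would serve as regression targets during pre-training, before the network is tuned on the downstream recognition task.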

ViT-MDHGR: Cross-day Reliability and Agility in Dynamic Hand Gesture Prediction via HD-sEMG Signal Decoding

Sep 22, 2023

Abstract:Surface electromyography (sEMG) and high-density sEMG (HD-sEMG) biosignals have been extensively investigated for myoelectric control of prosthetic devices, neurorobotics, and more recently human-computer interfaces because of their capability for hand gesture recognition/prediction in a wearable and non-invasive manner. High intraday (same-day) performance has been reported. However, the interday performance (separating training and testing days) is substantially degraded due to the poor generalizability of conventional approaches over time, hindering the application of such techniques in real-life practices. There are limited recent studies on the feasibility of multi-day hand gesture recognition. The existing studies face a major challenge: the need for long sEMG epochs makes the corresponding neural interfaces impractical due to the induced delay in myoelectric control. This paper proposes a compact ViT-based network for multi-day dynamic hand gesture prediction. We tackle the main challenge as the proposed model only relies on very short HD-sEMG signal windows (i.e., 50 ms, accounting for only one-sixth of the convention for real-time myoelectric implementation), boosting agility and responsiveness. Our proposed model can predict 11 dynamic gestures for 20 subjects with an average accuracy of over 71% on the testing day, 3-25 days after training. Moreover, when calibrated on just a small portion of data from the testing day, the proposed model can achieve over 92% accuracy by retraining less than 10% of the parameters for computational efficiency.

From Unstable Contacts to Stable Control: A Deep Learning Paradigm for HD-sEMG in Neurorobotics

Sep 20, 2023

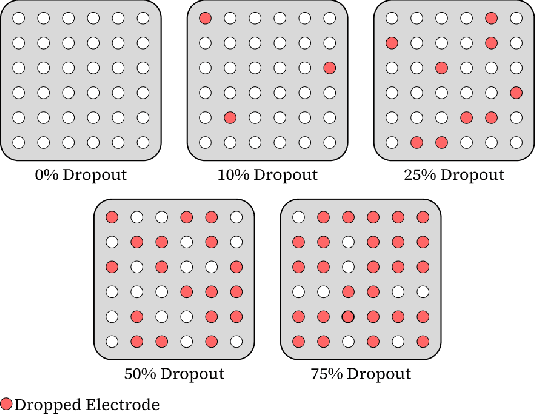

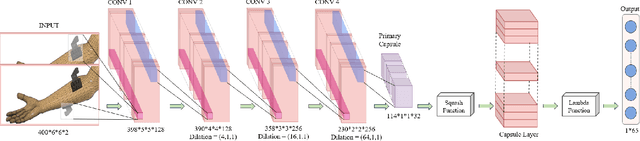

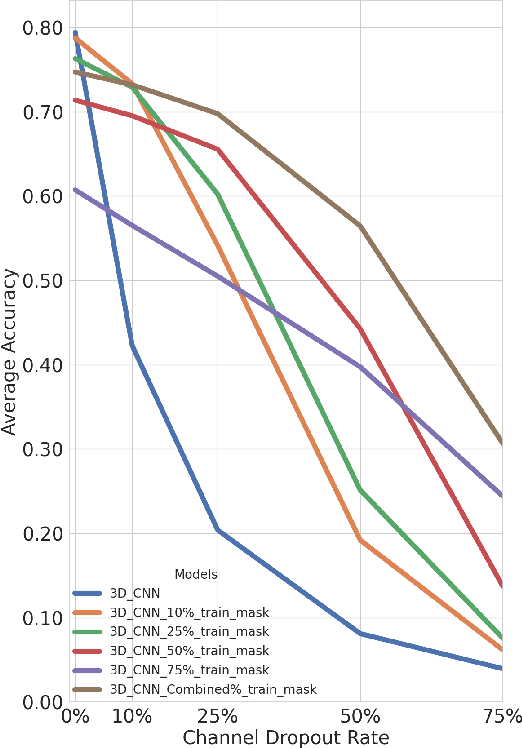

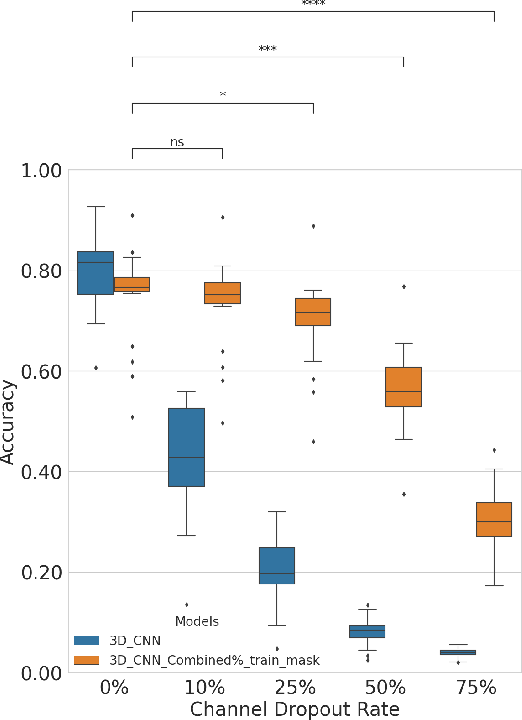

Abstract:In the past decade, there has been significant advancement in designing wearable neural interfaces for controlling neurorobotic systems, particularly bionic limbs. These interfaces function by decoding signals captured non-invasively from the skin's surface. Portable high-density surface electromyography (HD-sEMG) modules combined with deep learning decoding have attracted interest by achieving excellent gesture prediction and myoelectric control of prosthetic systems and neurorobots. However, factors like pixel-shape electrode size and unstable skin contact make HD-sEMG susceptible to pixel electrode drops. The sparse electrode-skin disconnections rooted in issues such as low adhesion, sweating, hair blockage, and skin stretch challenge the reliability and scalability of these modules as the perception unit for neurorobotic systems. This paper proposes a novel deep-learning model providing resiliency for HD-sEMG modules, which can be used in the wearable interfaces of neurorobots. The proposed 3D Dilated Efficient CapsNet model trains on an augmented input space to computationally 'force' the network to learn channel dropout variations and thus learn robustness to channel dropout. The proposed framework maintained high performance in a sensor-dropout reliability study. Results show that conventional models' performance degrades significantly with dropout and is recovered using the proposed architecture and training paradigm.
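The idea of training on an input space augmented with channel dropout can be sketched as a simple data transformation. This is an illustrative assumption, not the augmentation used in the paper: the function name `augment_with_dropout`, the choice to zero a dropped channel entirely, and the independent per-channel drop probability are all hypothetical.

```python
import random


def augment_with_dropout(window, drop_prob, rng):
    """Return a copy of a multi-channel window with random channels zeroed.

    `window` is a list of channels, each a list of samples. A dropped
    channel is replaced by zeros to mimic a lost electrode contact.
    """
    augmented = []
    dropped = []
    for idx, channel in enumerate(window):
        if rng.random() < drop_prob:
            augmented.append([0.0] * len(channel))  # simulate a disconnected electrode
            dropped.append(idx)
        else:
            augmented.append(list(channel))  # copy so the original stays untouched
    return augmented, dropped


# Usage: apply to each training window with a seeded generator for repeatability.
rng = random.Random(0)
window = [[0.1, 0.2, 0.3], [0.4, 0.5, 0.6], [0.7, 0.8, 0.9]]
augmented, dropped = augment_with_dropout(window, drop_prob=0.2, rng=rng)
```

Feeding both the clean and the augmented windows to the classifier during training exposes it to missing-channel patterns it may meet at deployment.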

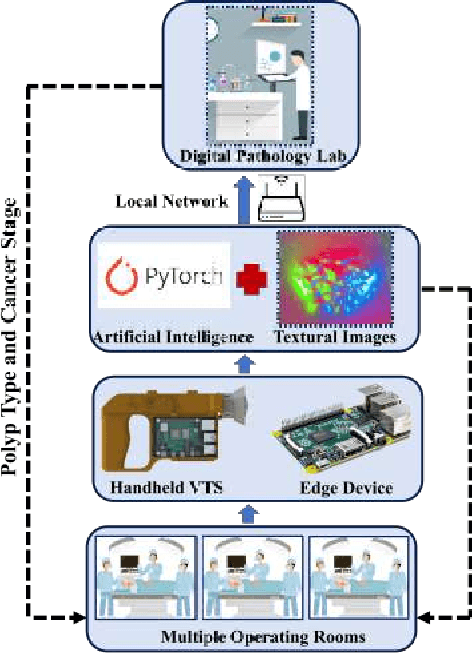

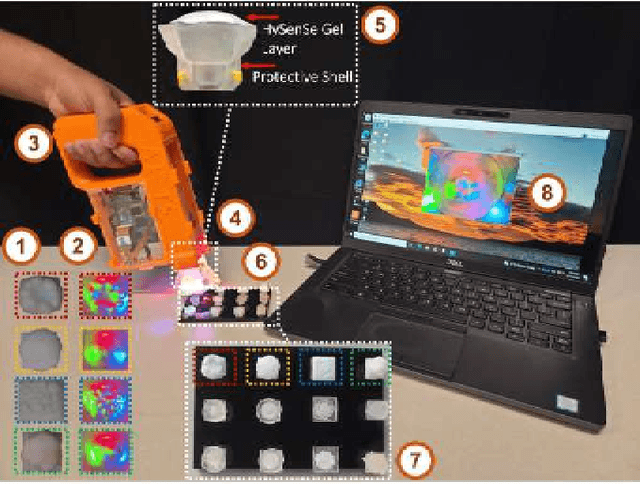

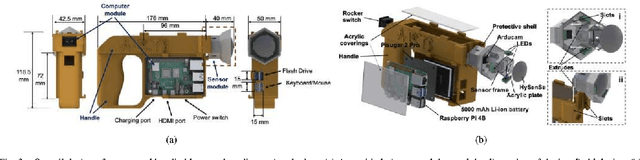

A Smart Handheld Edge Device for On-Site Diagnosis and Classification of Texture and Stiffness of Excised Colorectal Cancer Polyps

Sep 18, 2023

Abstract:This paper proposes a smart handheld textural sensing medical device with complementary Machine Learning (ML) algorithms to enable on-site Colorectal Cancer (CRC) polyp diagnosis and pathology of excised tumors. The proposed handheld edge device benefits from a unique tactile sensing module and a dual-stage machine learning algorithm (composed of a dilated residual network and a t-SNE engine) for polyp type and stiffness characterization. Solely utilizing the occlusion-free, illumination-resilient textural images captured by the proposed tactile sensor, the framework is able to sensitively and reliably identify the type and stage of CRC polyps by classifying their texture and stiffness, respectively. Moreover, the proposed handheld medical edge device benefits from internet connectivity for enabling remote digital pathology (boosting the diagnosis in operating rooms and promoting accessibility and equity in medical diagnosis).

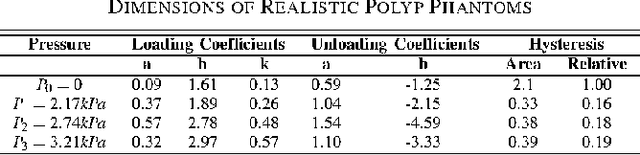

How Does the Inner Geometry of Soft Actuators Modulate the Dynamic and Hysteretic Response?

Aug 09, 2023

Abstract:This paper investigates the influence of the internal geometrical structure of soft pneu-nets on the dynamic response and hysteresis of the actuators. The research findings indicate that by strategically manipulating the stress distribution within soft robots, it is possible to enhance the dynamic response while reducing hysteresis. The study utilizes the Finite Element Method (FEM) and includes experimental validation through markerless motion tracking of the soft robot. In particular, the study examines actuator bending angles up to 500% strain while achieving 95% accuracy in predicting the bending angle. The results demonstrate that the particular design with the minimum air chamber width in the center significantly improves both high- and low-frequency hysteresis behavior by 21.5% while also enhancing dynamic response by 60% to 112% across various frequencies and peak-to-peak pressures. Consequently, the paper evaluates the effectiveness of "mechanically programming" stress distribution and distributed energy storage within soft robots to maximize their dynamic performance, offering direct benefits for control.
