Naruhiko Ikoma

Robot-Enabled Machine Learning-Based Diagnosis of Gastric Cancer Polyps Using Partial Surface Tactile Imaging

Aug 02, 2024

Abstract: In this paper, to collectively address the existing limitations on endoscopic diagnosis of Advanced Gastric Cancer (AGC) tumors, we propose, for the first time, (i) the utilization and evaluation of our recently developed Vision-based Tactile Sensor (VTS), and (ii) a complementary Machine Learning (ML) algorithm for classifying tumors using their textural features. Leveraging a seven-DoF robotic manipulator and unique custom-designed, additively manufactured realistic AGC tumor phantoms, we demonstrated the advantages of automated data collection using the VTS, addressing the problem of data scarcity and biases encountered in traditional ML-based approaches. Our synthetic-data-trained ML model was successfully evaluated and compared with traditional ML models utilizing various statistical metrics, even under mixed morphological characteristics and partial sensor contact.

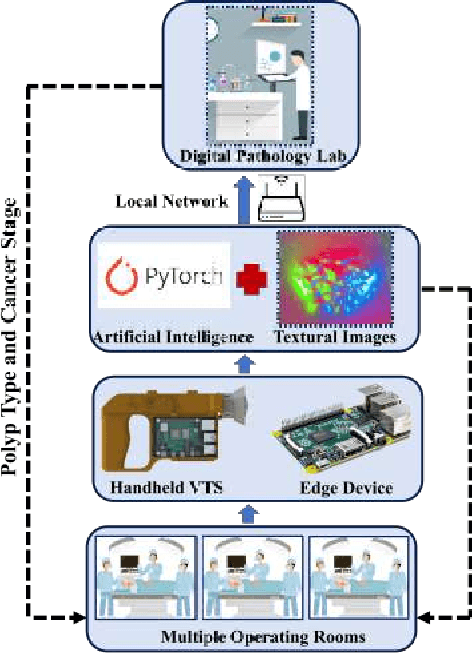

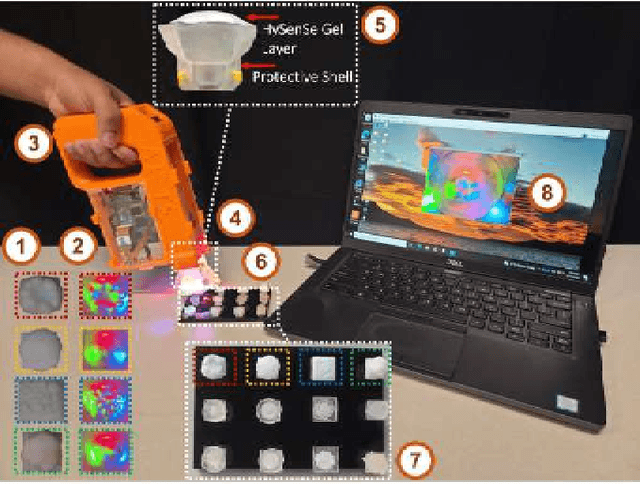

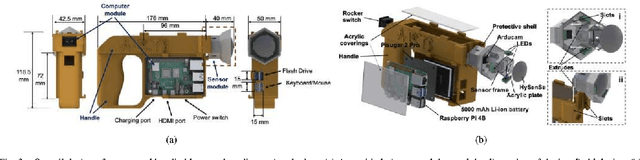

A Smart Handheld Edge Device for On-Site Diagnosis and Classification of Texture and Stiffness of Excised Colorectal Cancer Polyps

Sep 18, 2023

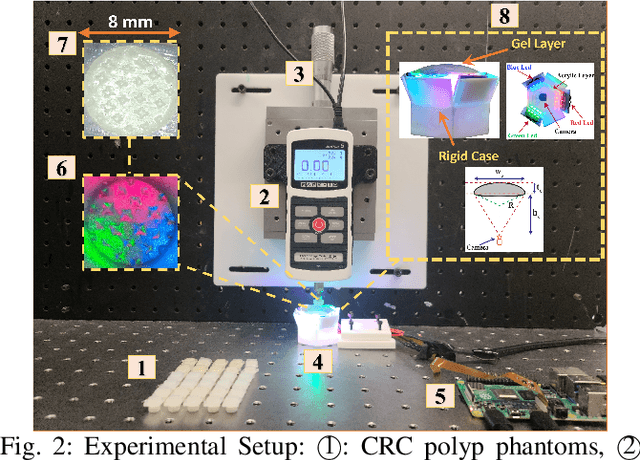

Abstract: This paper proposes a smart handheld textural sensing medical device with complementary Machine Learning (ML) algorithms to enable on-site Colorectal Cancer (CRC) polyp diagnosis and pathology of excised tumors. The proposed handheld edge device benefits from a unique tactile sensing module and a dual-stage machine learning algorithm (composed of a dilated residual network and a t-SNE engine) for polyp type and stiffness characterization. Solely utilizing the occlusion-free, illumination-resilient textural images captured by the proposed tactile sensor, the framework is able to sensitively and reliably identify the type and stage of CRC polyps by classifying their texture and stiffness, respectively. Moreover, the proposed handheld medical edge device benefits from internet connectivity for enabling remote digital pathology (boosting the diagnosis in operating rooms and promoting accessibility and equity in medical diagnosis).

Design and Development of a Novel Soft and Inflatable Tactile Sensing Balloon for Early Diagnosis of Colorectal Cancer Polyps

Sep 18, 2023

Abstract: In this paper, with the goal of addressing the high early-detection miss rate of colorectal cancer (CRC) polyps during a colonoscopy procedure, we propose the design and fabrication of a unique inflatable vision-based tactile sensing balloon (VTSB). The proposed soft VTSB can readily be integrated with existing colonoscopes and provide radiation-free, safe, and high-resolution textural mapping and morphology characterization of CRC polyps. The performance of the proposed VTSB has been thoroughly characterized and evaluated on four different types of additively manufactured CRC polyp phantoms with three different stiffness levels. Additionally, we integrated the VTSB with a colonoscope and successfully performed a simulated colonoscopic procedure inside a tube with a few CRC polyp phantoms attached to its internal surface.

Towards Reliable Colorectal Cancer Polyps Classification via Vision Based Tactile Sensing and Confidence-Calibrated Neural Networks

Apr 25, 2023

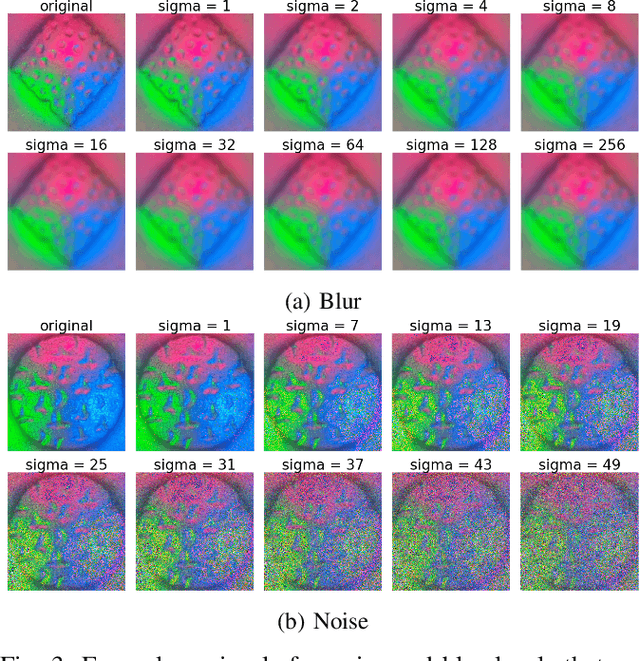

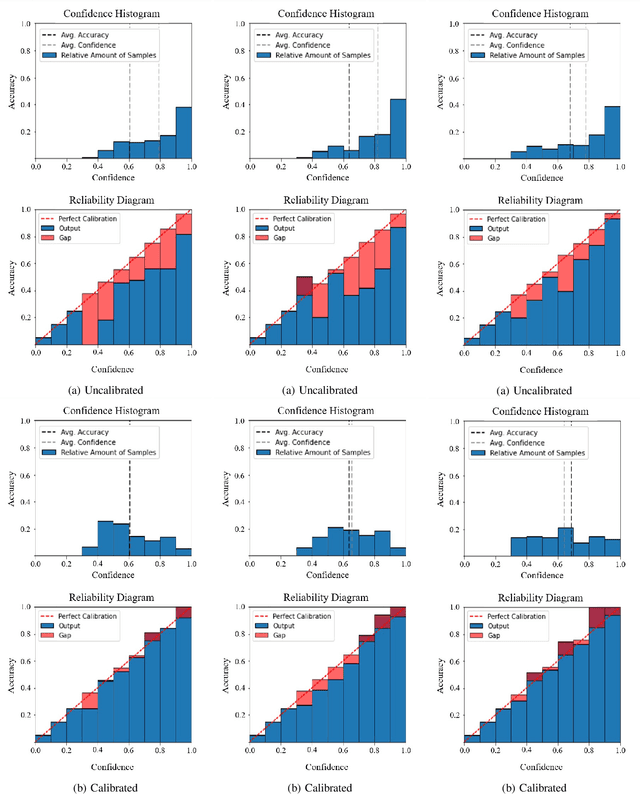

Abstract: In this study, toward addressing the over-confident outputs of existing artificial intelligence-based colorectal cancer (CRC) polyp classification techniques, we propose a confidence-calibrated residual neural network. Utilizing a novel vision-based tactile sensing (VS-TS) system and unique CRC polyp phantoms, we demonstrate that traditional metrics such as accuracy and precision are not sufficient to encapsulate model performance for handling a sensitive CRC polyp diagnosis. To this end, we develop a residual neural network classifier and address its over-confident outputs for CRC polyp classification via the post-processing method of temperature scaling. To evaluate the proposed method, we introduce noise and blur to the obtained textural images of the VS-TS and test the model's reliability for non-ideal inputs through reliability diagrams and other statistical metrics.
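As a rough illustration of the temperature scaling step mentioned in this abstract, the sketch below divides a classifier's logits by a single scalar T before the softmax and picks T by minimizing negative log-likelihood on held-out data. It is a minimal sketch and not the authors' implementation: the logits, labels, candidate grid, and the function names `softmax`, `nll`, and `fit_temperature` are all hypothetical, and a simple grid search stands in for whatever optimizer the paper used.

```python
import math

def softmax(logits, temperature=1.0):
    """Softmax over logits divided by a scalar temperature."""
    scaled = [z / temperature for z in logits]
    m = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]

def nll(logit_rows, labels, temperature):
    """Mean negative log-likelihood of the true labels at a given temperature."""
    losses = [-math.log(softmax(row, temperature)[y])
              for row, y in zip(logit_rows, labels)]
    return sum(losses) / len(losses)

def fit_temperature(logit_rows, labels, candidates):
    """Pick the candidate temperature with the lowest validation NLL (grid search)."""
    return min(candidates, key=lambda t: nll(logit_rows, labels, t))

# Hypothetical validation logits and labels (assumed values, not from the paper).
val_logits = [[4.0, 0.5, 0.1], [3.5, 3.0, 0.2], [0.3, 5.0, 0.4], [2.0, 0.1, 2.5]]
val_labels = [0, 1, 1, 0]
candidates = [0.5 + 0.1 * i for i in range(46)]  # roughly 0.5 to 5.0

T = fit_temperature(val_logits, val_labels, candidates)
calibrated = [softmax(row, T) for row in val_logits]
```

Because every logit in a row is divided by the same positive scalar, the predicted class never changes; only the confidence attached to it does, which is why the method is applied as a post-processing step.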

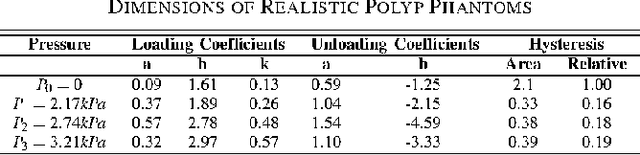

Classification of Colorectal Cancer Polyps via Transfer Learning and Vision-Based Tactile Sensing

Nov 08, 2022

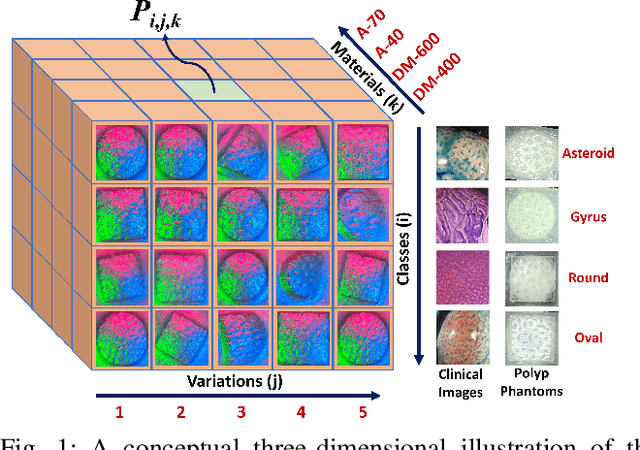

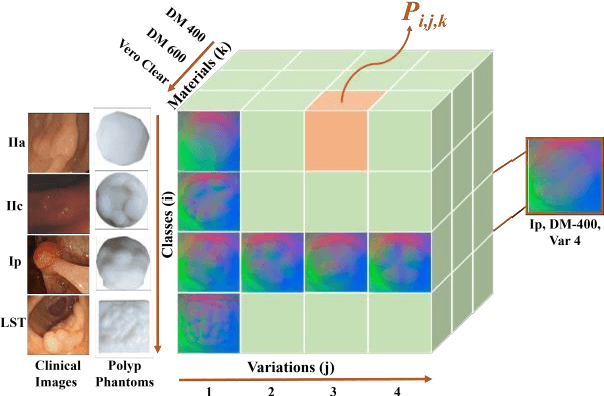

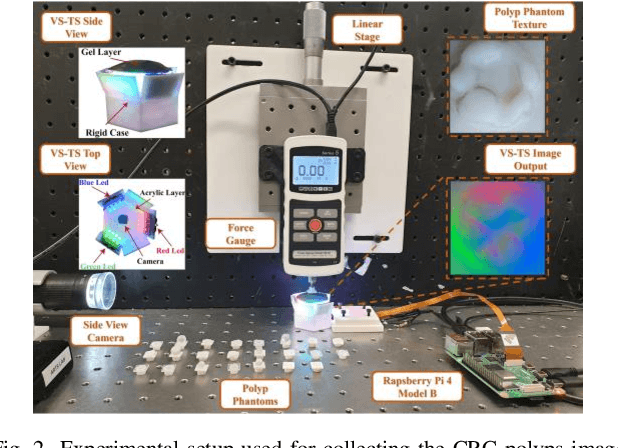

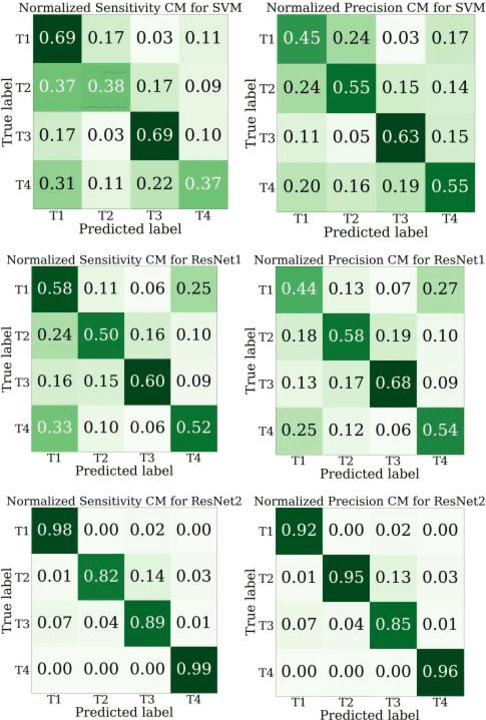

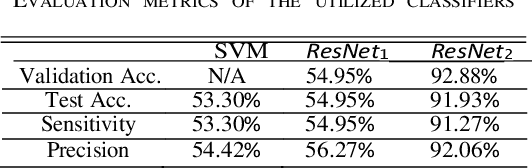

Abstract: In this study, to address the current high early-detection miss rate of colorectal cancer (CRC) polyps, we explore the potential of utilizing transfer learning and machine learning (ML) classifiers to precisely and sensitively classify the type of CRC polyps. Instead of using the common colonoscopic images, we applied three different ML algorithms to the 3D textural image outputs of a unique vision-based surface tactile sensor (VS-TS). To collect realistic textural images of CRC polyps for training the ML classifiers and evaluating their performance, we first designed and additively manufactured 48 types of realistic polyp phantoms with different hardness, type, and textures. Next, the performance of the three ML algorithms in classifying the type of the fabricated polyps was quantitatively evaluated using various statistical metrics.

HySenSe: A Hyper-Sensitive and High-Fidelity Vision-Based Tactile Sensor

Nov 08, 2022

Abstract: In this paper, to address the sensitivity and durability trade-off of Vision-based Tactile Sensors (VTSs), we introduce a hyper-sensitive and high-fidelity VTS called HySenSe. We demonstrate that by solely changing one step during the fabrication of the gel layer of the GelSight sensor (as the most well-known VTS), we can substantially improve its sensitivity and durability. Our experimental results clearly demonstrate that HySenSe outperforms a similar GelSight sensor in detecting textural details of various objects under identical experimental conditions and low interaction forces (≤ 1.5 N).
