Ruonan Zhang

Comprehend and Talk: Text to Speech Synthesis via Dual Language Modeling

Sep 26, 2025

Abstract:Existing Large Language Model (LLM) based autoregressive (AR) text-to-speech (TTS) systems, while achieving state-of-the-art quality, still face critical challenges. The foundation of this LLM-based paradigm is the discretization of the continuous speech waveform into a sequence of discrete tokens by a neural audio codec. Single-codebook modeling is well suited to text LLMs but suffers from significant information loss, while hierarchical acoustic tokens, typically generated via Residual Vector Quantization (RVQ), often lack explicit semantic structure, placing a heavy learning burden on the model. Furthermore, the autoregressive process is inherently susceptible to error accumulation, which can degrade generation stability. To address these limitations, we propose CaT-TTS, a novel framework for robust and semantically-grounded zero-shot synthesis. First, we introduce S3Codec, a split RVQ codec that injects explicit linguistic features into its primary codebook via semantic distillation from a state-of-the-art ASR model, providing a structured representation that simplifies the learning task. Second, we propose an "Understand-then-Generate" dual-Transformer architecture that decouples comprehension from rendering. An initial "Understanding" Transformer models the cross-modal relationship between text and the audio's semantic tokens to form a high-level utterance plan. A subsequent "Generation" Transformer then executes this plan, autoregressively synthesizing hierarchical acoustic tokens. Finally, to enhance generation stability, we introduce Masked Audio Parallel Inference (MAPI), a nearly parameter-free inference strategy that dynamically guides the decoding process to mitigate local errors.
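The abstract relies on Residual Vector Quantization without spelling it out. The following is a minimal, generic RVQ sketch in plain Python, not the S3Codec implementation: the codebook values, the two-stage depth, and the function names `nearest`, `rvq_encode`, and `rvq_decode` are all illustrative assumptions. It only shows the core idea that each stage quantizes the residual left by the previous one.

```python
# Generic RVQ sketch with toy, hand-picked codebooks (assumed values,
# not taken from the paper).

def nearest(codebook, vec):
    """Return the index of the codebook entry closest to vec (squared L2)."""
    def dist(entry):
        return sum((e - v) ** 2 for e, v in zip(entry, vec))
    return min(range(len(codebook)), key=lambda i: dist(codebook[i]))

def rvq_encode(vec, codebooks):
    """Quantize vec stage by stage; each stage encodes the previous residual."""
    residual = list(vec)
    indices = []
    for codebook in codebooks:
        idx = nearest(codebook, residual)
        indices.append(idx)
        residual = [r - c for r, c in zip(residual, codebook[idx])]
    return indices, residual

def rvq_decode(indices, codebooks):
    """Reconstruct the vector by summing the selected entry of every stage."""
    out = [0.0] * len(codebooks[0][0])
    for idx, codebook in zip(indices, codebooks):
        out = [o + c for o, c in zip(out, codebook[idx])]
    return out

codebooks = [
    [[0.0, 0.0], [1.0, 1.0], [-1.0, 1.0]],                   # coarse stage
    [[0.0, 0.0], [0.25, 0.0], [0.0, 0.25], [0.25, 0.25]],    # fine stage
]
indices, residual = rvq_encode([1.2, 1.3], codebooks)
reconstruction = rvq_decode(indices, codebooks)
print(indices, reconstruction)  # [1, 3] [1.25, 1.25]
```

Each frame is thus represented by one index per stage, which is why a model generating RVQ tokens has to predict several codebooks per time step rather than a single token.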

Quantize More, Lose Less: Autoregressive Generation from Residually Quantized Speech Representations

Jul 16, 2025

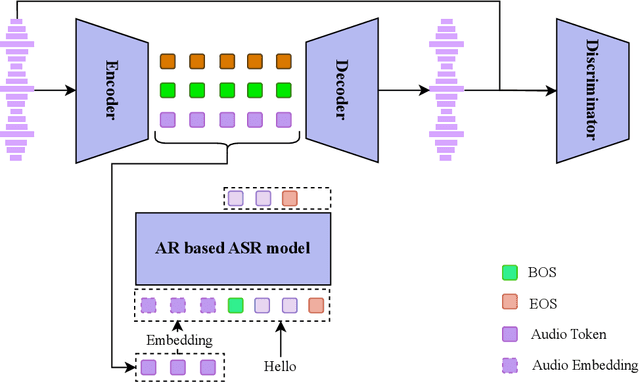

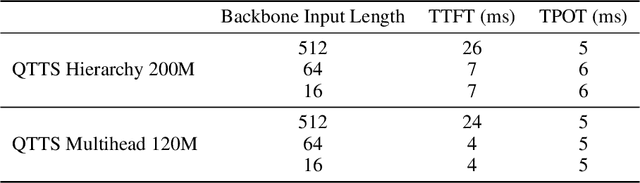

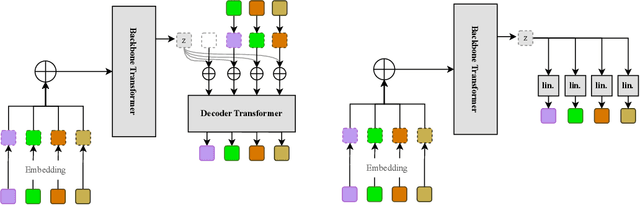

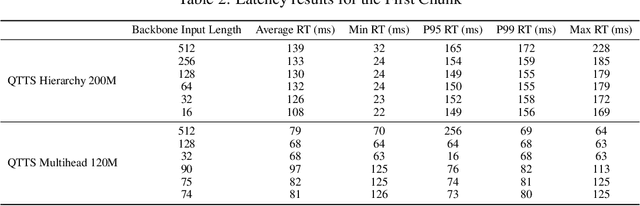

Abstract:Text-to-speech (TTS) synthesis has seen renewed progress under the discrete modeling paradigm. Existing autoregressive approaches often rely on single-codebook representations, which suffer from significant information loss. Even with post-hoc refinement techniques such as flow matching, these methods fail to recover fine-grained details (e.g., prosodic nuances, speaker-specific timbres), especially in challenging scenarios like singing voice or music synthesis. We propose QTTS, a novel TTS framework built upon our new audio codec, QDAC. The core innovation of QDAC lies in its end-to-end training of an ASR-based autoregressive network with a GAN, which achieves superior semantic feature disentanglement for scalable, near-lossless compression. QTTS models these discrete codes using two innovative strategies: the Hierarchical Parallel architecture, which uses a dual-AR structure to model inter-codebook dependencies for higher-quality synthesis, and the Delay Multihead approach, which employs parallelized prediction with a fixed delay to accelerate inference. Our experiments demonstrate that the proposed framework achieves higher synthesis quality and better preserves expressive content than the baseline. This suggests that scaling up compression via multi-codebook modeling is a promising direction for high-fidelity, general-purpose speech and audio generation.
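To make "parallelized prediction with a fixed delay" concrete, here is a small sketch of a delay layout for multi-codebook tokens. It assumes the common scheme in which codebook k is shifted right by k times a fixed delay, so that all codebooks can be predicted in one step per column. Whether QTTS uses exactly this layout is an assumption; `apply_delay`, `undo_delay`, and the `PAD` value are hypothetical names chosen for illustration.

```python
PAD = -1  # placeholder token for positions with no code yet (assumed value)

def apply_delay(codes, delay=1, pad=PAD):
    """Shift codebook k right by k * delay steps.

    codes: list of K rows, each a list of T token ids.
    Returns K rows of length T + (K - 1) * delay.
    """
    num_books, length = len(codes), len(codes[0])
    total = length + (num_books - 1) * delay
    delayed = []
    for k, row in enumerate(codes):
        shift = k * delay
        delayed.append([pad] * shift + list(row) + [pad] * (total - length - shift))
    return delayed

def undo_delay(delayed, delay=1):
    """Invert apply_delay and recover the aligned K x T grid."""
    num_books = len(delayed)
    length = len(delayed[0]) - (num_books - 1) * delay
    return [row[k * delay : k * delay + length] for k, row in enumerate(delayed)]

codes = [[1, 2, 3], [4, 5, 6], [7, 8, 9]]
delayed = apply_delay(codes)
for row in delayed:
    print(row)
# [1, 2, 3, -1, -1]
# [-1, 4, 5, 6, -1]
# [-1, -1, 7, 8, 9]
```

With this layout, generating T frames of K codebooks takes T + (K - 1) * delay decoding steps instead of T * K, at the cost of each codebook seeing the finer codebooks of earlier frames only.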

Robust Trajectory and Offloading for Energy-Efficient UAV Edge Computing in Industrial Internet of Things

Mar 08, 2023

Abstract:Efficient data processing and computation are essential for the industrial Internet of Things (IIoT) to empower various applications, yet they can be significantly bottlenecked by the limited energy capacity and computation capability of IIoT nodes. In this paper, we employ an unmanned aerial vehicle (UAV) as an edge server to assist IIoT data processing, while considering the practical issue of UAV jittering. Specifically, we propose a joint design of trajectory and offloading strategies to minimize the energy consumed by local and edge computation as well as data transmission. We particularly address UAV jittering, which induces Gaussian-distributed uncertainties in the flying waypoints and results in probabilistic constraints on flying speed and data offloading. We exploit the Bernstein-type inequality to reformulate the constraints in deterministic form and decompose the energy minimization to solve for trajectory and offloading separately within an alternating optimization framework. The subproblems are then tackled with the successive convex approximation technique. Simulation results show that our proposal strictly guarantees robustness under uncertainties and effectively reduces energy consumption compared with the baselines.

Frequency-Aware Self-Supervised Monocular Depth Estimation

Oct 11, 2022

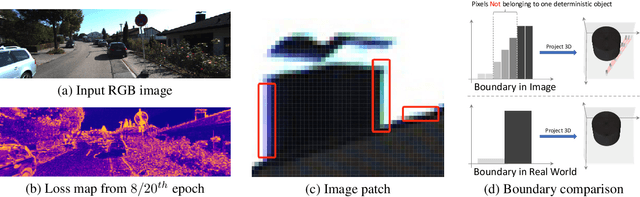

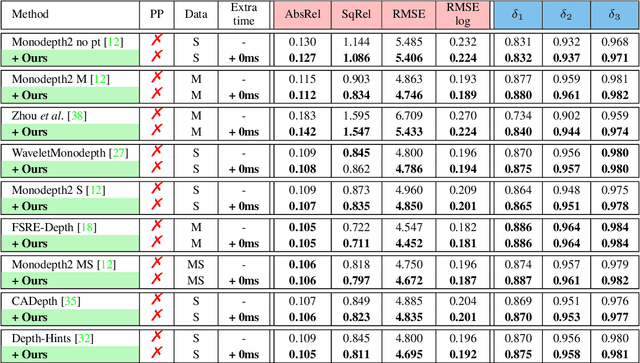

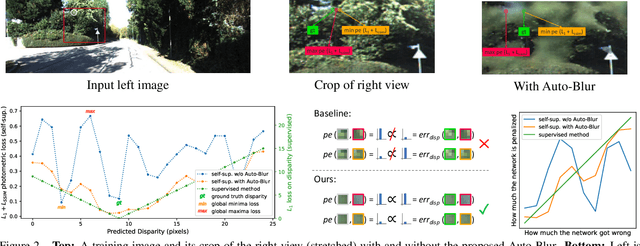

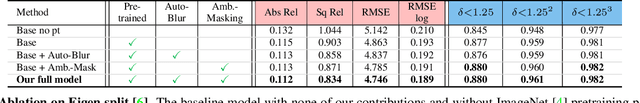

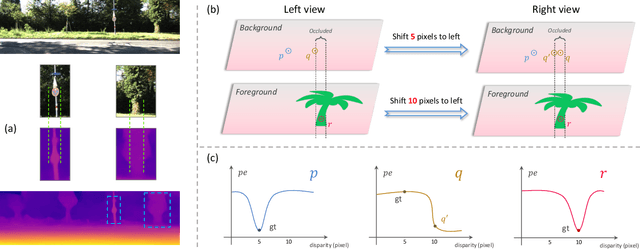

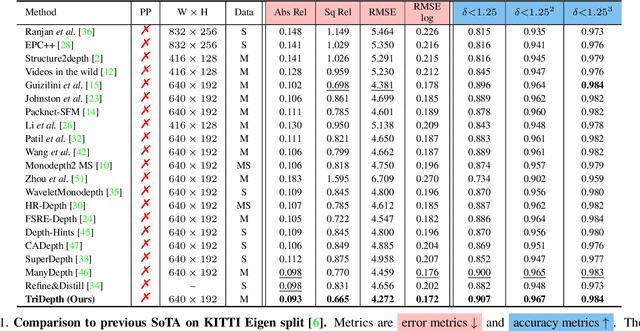

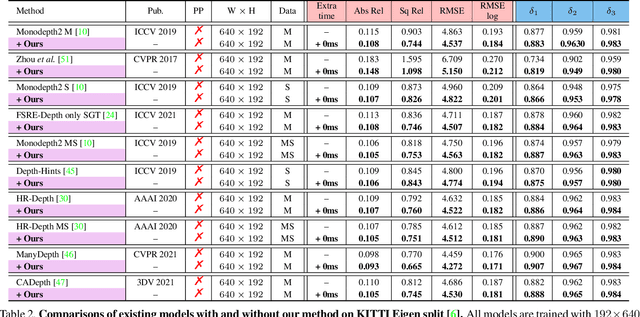

Abstract:We present two versatile methods to generally enhance self-supervised monocular depth estimation (MDE) models. The high generalizability of our methods is achieved by solving the fundamental and ubiquitous problems in the photometric loss function. In particular, from the perspective of spatial frequency, we first propose Ambiguity-Masking to suppress the incorrect supervision under photometric loss at specific object boundaries, the cause of which can be traced to pixel-level ambiguity. Second, we present a novel frequency-adaptive Gaussian low-pass filter, designed to robustify the photometric loss in high-frequency regions. We are the first to propose blurring images to improve depth estimators with an interpretable analysis. Both modules are lightweight, adding no parameters and requiring no manual changes to the network structure. Experiments show that our methods provide performance boosts to a large number of existing models, including those that claimed state-of-the-art results, while introducing no extra inference computation at all.
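The intuition behind low-pass filtering before a photometric loss can be illustrated with a one-dimensional toy example. This sketch is not the paper's frequency-adaptive filter: it uses a fixed-sigma Gaussian, a plain L1 photometric error, and a synthetic alternating texture, all of which are assumptions made for illustration. It shows that a one-pixel misalignment on a high-frequency texture is penalized far less after both signals are blurred.

```python
import math

def gaussian_kernel(sigma, radius):
    """Normalized 1-D Gaussian kernel with 2 * radius + 1 taps."""
    weights = [math.exp(-(i * i) / (2.0 * sigma * sigma))
               for i in range(-radius, radius + 1)]
    total = sum(weights)
    return [w / total for w in weights]

def blur(signal, kernel):
    """Convolve signal with kernel, clamping indices at the borders."""
    radius = len(kernel) // 2
    last = len(signal) - 1
    out = []
    for i in range(len(signal)):
        acc = 0.0
        for j, w in enumerate(kernel):
            idx = min(max(i + j - radius, 0), last)
            acc += w * signal[idx]
        out.append(acc)
    return out

def l1_error(a, b):
    """Mean absolute difference, standing in for a photometric loss."""
    return sum(abs(x - y) for x, y in zip(a, b)) / len(a)

# High-frequency texture (0, 1, 0, 1, ...) and a copy misaligned by one pixel.
target = [float(i % 2) for i in range(16)]
warped = [float((i + 1) % 2) for i in range(16)]

kernel = gaussian_kernel(sigma=1.0, radius=2)
raw_error = l1_error(target, warped)
blurred_error = l1_error(blur(target, kernel), blur(warped, kernel))
print(raw_error, blurred_error)
```

On the raw signals every pixel disagrees, so the error is maximal even though the alignment is off by only one pixel; after blurring, the error drops sharply, which gives smoother supervision in textured regions.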

Self-Supervised Monocular Depth Estimation: Solving the Edge-Fattening Problem

Oct 04, 2022

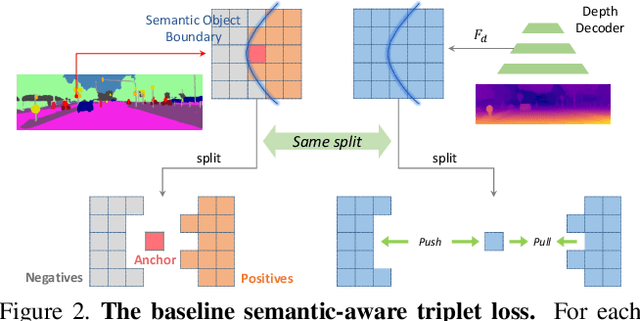

Abstract:Self-supervised monocular depth estimation (MDE) models universally suffer from the notorious edge-fattening issue. Triplet loss, popular for metric learning, has achieved great success in many computer vision tasks. In this paper, we redesign the patch-based triplet loss in MDE to alleviate the ubiquitous edge-fattening issue. We show two drawbacks of the raw triplet loss in MDE and demonstrate our problem-driven redesigns. First, we present a min-operator-based strategy applied to all negative samples, to prevent well-performing negatives from sheltering the error of edge-fattening negatives. Second, we split the anchor-positive distance and anchor-negative distance from within the original triplet, which directly optimizes the positives without any mutual effect with the negatives. Extensive experiments show that the combination of these two small redesigns can achieve unprecedented results: our powerful and versatile triplet loss not only makes our model outperform all previous SoTA by a large margin, but also provides substantial performance boosts to a large number of existing models, while introducing no extra inference computation at all.
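The two redesigns can be sketched on precomputed distances. The exact formulation below is an assumption inferred from the abstract, not the paper's published loss: `raw_triplet` averages the anchor-negative distances inside a single hinge, while `redesigned_triplet` (a hypothetical name) applies the hinge to the minimum negative distance only and adds the anchor-positive distance as a separate term. The distances and margin are made-up numbers.

```python
def raw_triplet(d_pos, d_negs, margin):
    """Baseline: one hinge over d_pos minus the mean anchor-negative distance."""
    mean_neg = sum(d_negs) / len(d_negs)
    return max(0.0, d_pos - mean_neg + margin)

def redesigned_triplet(d_pos, d_negs, margin):
    """Assumed redesign: min over negatives, positive term split out."""
    neg_term = max(0.0, margin - min(d_negs))  # hardest negative drives the loss
    return d_pos + neg_term                    # positives optimized independently

# Two well-separated negatives and one negative that is too close to the anchor.
d_pos = 0.1
d_negs = [1.0, 1.0, 0.1]
margin = 0.5

raw = raw_triplet(d_pos, d_negs, margin)
redesigned = redesigned_triplet(d_pos, d_negs, margin)
print(raw, redesigned)
```

In this toy case the averaged loss is zero because the two good negatives hide the bad one, whereas the min-based variant still produces a penalty for the close negative and keeps pulling the positive toward the anchor.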

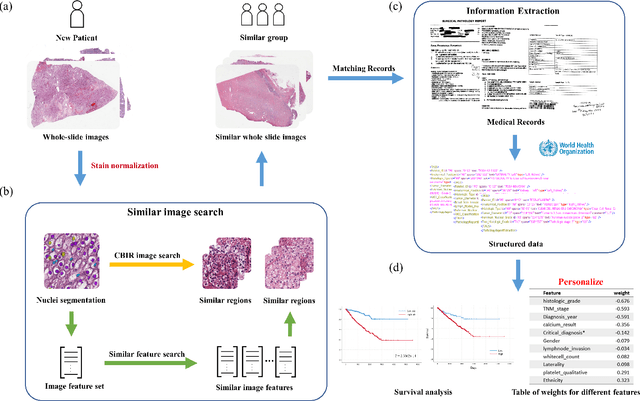

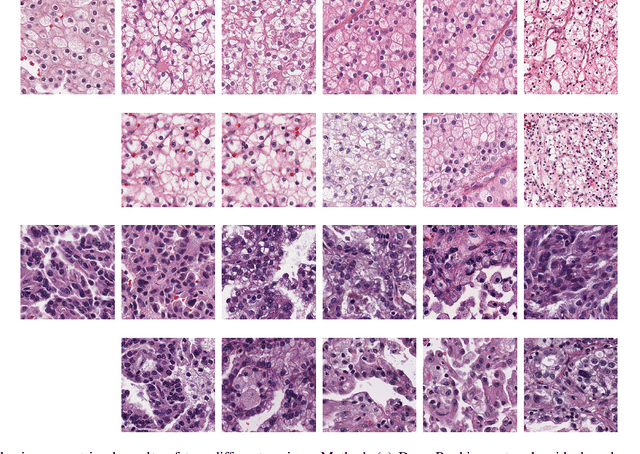

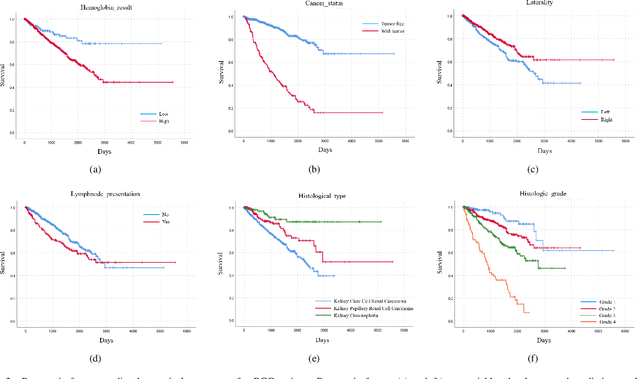

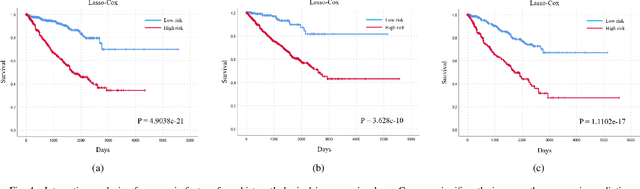

A Personalized Diagnostic Generation Framework Based on Multi-source Heterogeneous Data

Oct 26, 2021

Abstract:Personalized diagnoses have not been possible due to the sheer amount of data pathologists have to handle in their day-to-day routine. This has led to the current generalized standards, which are continuously updated as new findings are reported. Notably, these effective standards are developed from multi-source heterogeneous data, including whole-slide images and pathology and clinical reports. In this study, we propose a framework that combines pathological images and medical reports to generate a personalized diagnosis result for an individual patient. We use nuclei-level image feature similarity and a content-based deep learning method to search for a personalized group of patients with similar pathological characteristics, extract structured prognostic information from the descriptive pathology reports of this similar patient population, and assign importance weights to different prognostic factors to generate a personalized pathological diagnosis result. We use multi-source heterogeneous data from the TCGA (The Cancer Genome Atlas) database. The results demonstrate that our framework matches the performance of pathologists in the diagnosis of renal cell carcinoma. The framework is designed to be generic and could thus be applied to other types of cancer. The weights could provide insights into the known prognostic factors and further guide more precise clinical treatment protocols.

StructureFlow: Image Inpainting via Structure-aware Appearance Flow

Aug 11, 2019

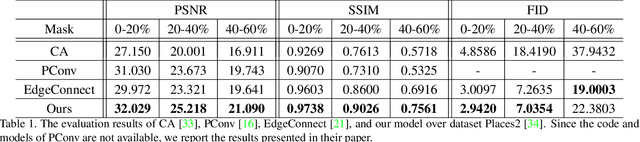

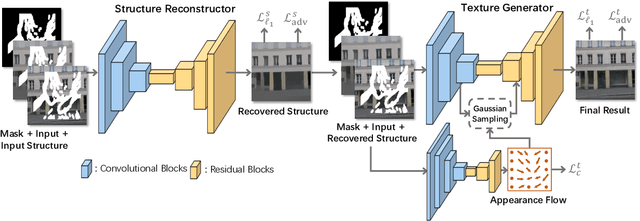

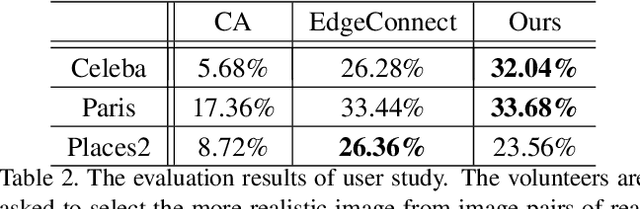

Abstract:Image inpainting techniques have recently shown significant improvements by using deep neural networks. However, most of them may fail either to reconstruct reasonable structures or to restore fine-grained textures. To solve this problem, we propose a two-stage model which splits the inpainting task into two parts: structure reconstruction and texture generation. In the first stage, edge-preserved smooth images are employed to train a structure reconstructor which completes the missing structures of the inputs. In the second stage, based on the reconstructed structures, a texture generator using appearance flow is designed to yield image details. Experiments on multiple publicly available datasets show the superior performance of the proposed network.

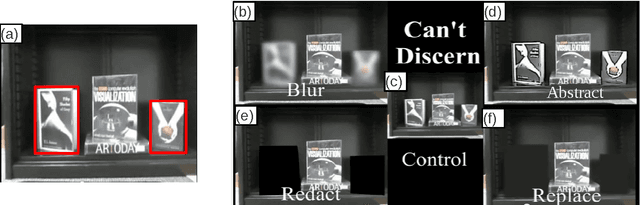

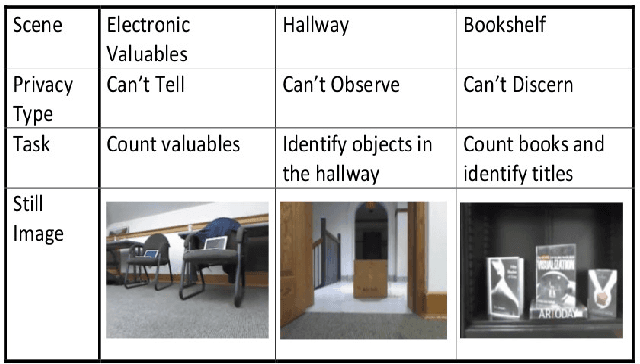

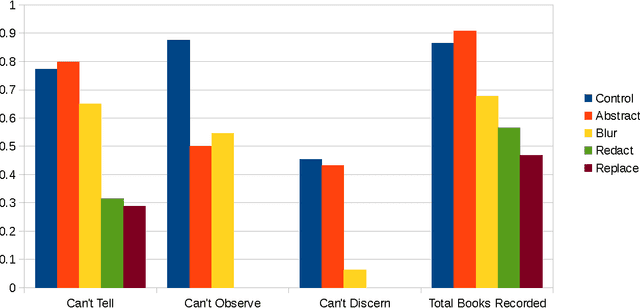

Video Manipulation Techniques for the Protection of Privacy in Remote Presence Systems

Jan 13, 2015

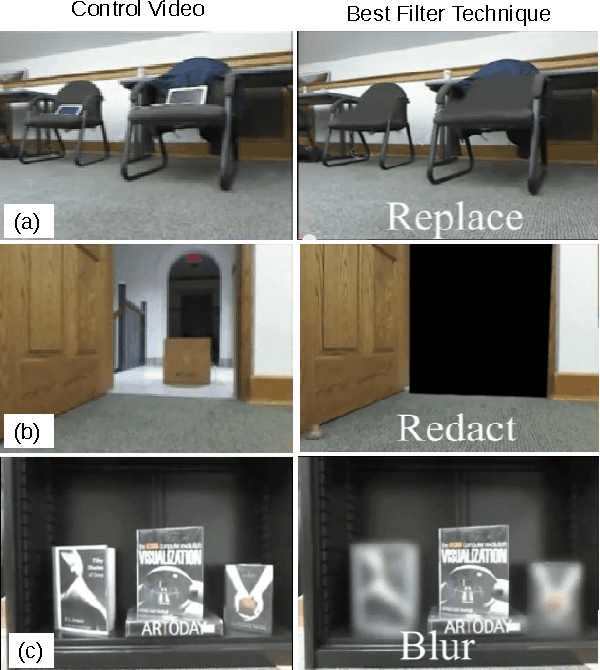

Abstract:Systems that give control of a mobile robot to a remote user raise privacy concerns about what the remote user can see and do through the robot. We aim to preserve some of that privacy by manipulating the video data that the remote user sees. Through two user studies, we explore the effectiveness of different video manipulation techniques at providing different types of privacy. We simultaneously examine task performance in the presence of privacy protection. In the first study, participants were asked to watch a video captured by a robot exploring an office environment and to complete a series of observational tasks under differing video manipulation conditions. Our results show that using manipulations of the video stream can lead to fewer privacy violations for different privacy types. Through a second user study, it was demonstrated that these privacy-protecting techniques were effective without diminishing the task performance of the remote user.
