Ross Sowell

Effects of Interfaces on Human-Robot Trust: Specifying and Visualizing Physical Zones

Dec 01, 2021

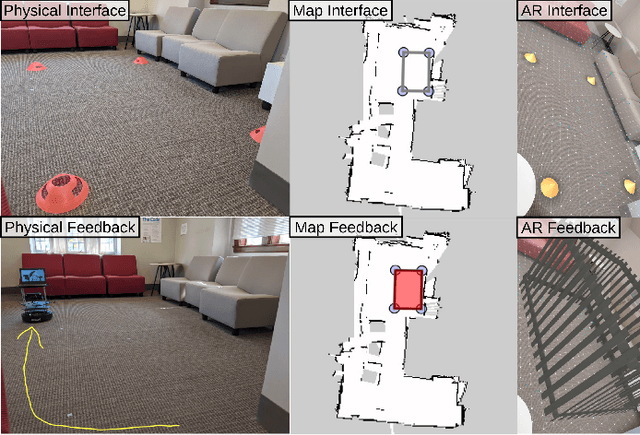

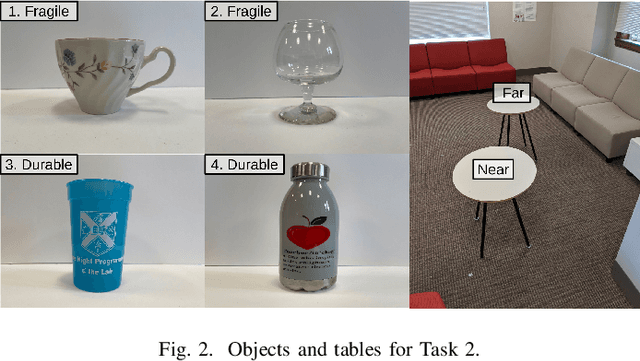

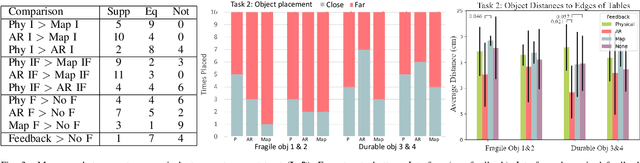

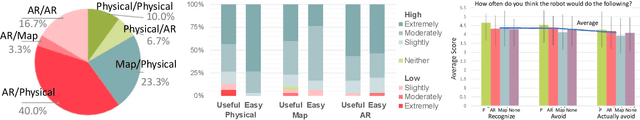

Abstract: In this paper we investigate the influence that interfaces and feedback have on human-robot trust levels when operating in a shared physical space. The task we use is specifying a "no-go" region for a robot in an indoor environment. We evaluate three styles of interface (physical, AR, and map-based) and four feedback mechanisms (no feedback, the robot driving around the space, an AR "fence", and the region marked on the map). Our evaluation looks at both usability and trust: specifically, whether the participant trusts that the robot "knows" where the no-go region is, and how confident they are in the robot's ability to avoid that region. We use both self-reported and indirect measures of trust and usability. Our key findings are: 1) interfaces and feedback do influence levels of trust; 2) participants largely preferred a mixed interface-feedback pair, in which the modality of the interface differed from that of the feedback.

Video Manipulation Techniques for the Protection of Privacy in Remote Presence Systems

Jan 13, 2015

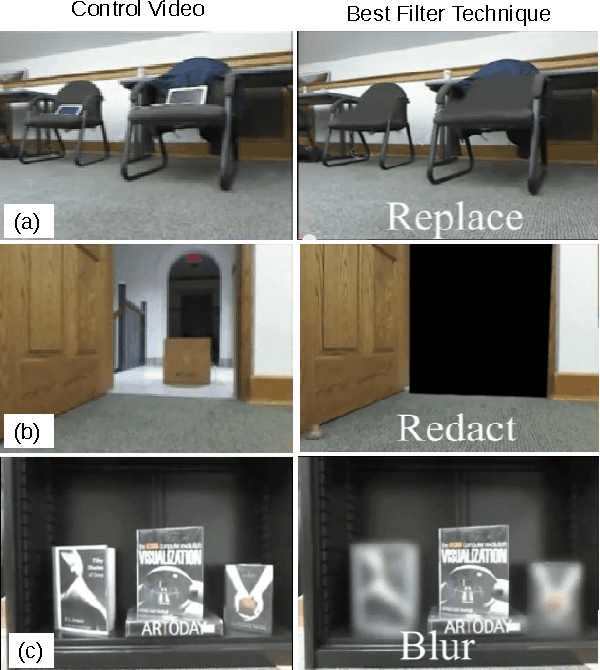

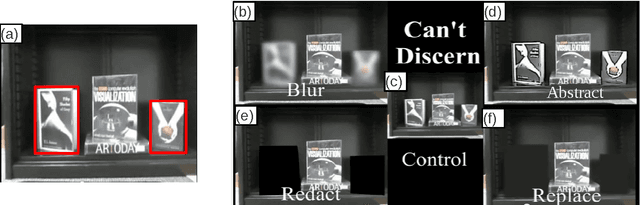

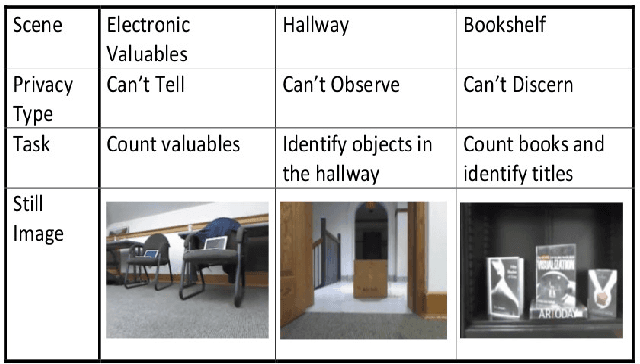

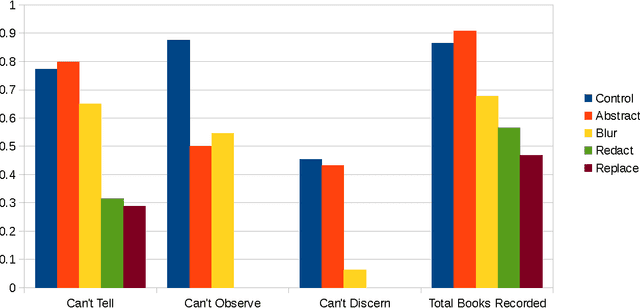

Abstract: Systems that give control of a mobile robot to a remote user raise privacy concerns about what the remote user can see and do through the robot. We aim to preserve some of that privacy by manipulating the video data that the remote user sees. Through two user studies, we explore the effectiveness of different video manipulation techniques at providing different types of privacy. We simultaneously examine task performance in the presence of privacy protection. In the first study, participants were asked to watch a video captured by a robot exploring an office environment and to complete a series of observational tasks under differing video manipulation conditions. Our results show that manipulating the video stream can lead to fewer privacy violations for different privacy types. A second user study demonstrated that these privacy-protecting techniques were effective without diminishing the task performance of the remote user.
