Ruimin Shen

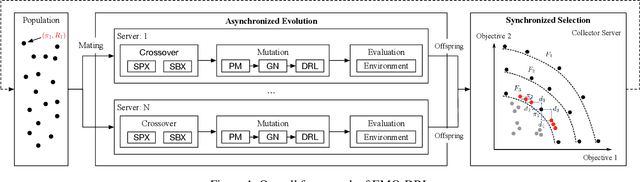

Lamarckian Platform: Pushing the Boundaries of Evolutionary Reinforcement Learning towards Asynchronous Commercial Games

Sep 21, 2022

Abstract:Despite the emerging progress of integrating evolutionary computation into reinforcement learning, the absence of a high-performance platform endowing composability and massive parallelism causes non-trivial difficulties for research and applications related to asynchronous commercial games. Here we introduce Lamarckian - an open-source platform featuring support for evolutionary reinforcement learning scalable to distributed computing resources. To improve the training speed and data efficiency, Lamarckian adopts optimized communication methods and an asynchronous evolutionary reinforcement learning workflow. To meet the demand for an asynchronous interface by commercial games and various methods, Lamarckian tailors an asynchronous Markov Decision Process interface and designs an object-oriented software architecture with decoupled modules. In comparison with the state-of-the-art RLlib, we empirically demonstrate the unique advantages of Lamarckian on benchmark tests with up to 6000 CPU cores: i) both the sampling efficiency and training speed are doubled when running PPO on Google football game; ii) the training speed is 13 times faster when running PBT+PPO on Pong game. Moreover, we also present two use cases: i) how Lamarckian is applied to generating behavior-diverse game AI; ii) how Lamarckian is applied to game balancing tests for an asynchronous commercial game.

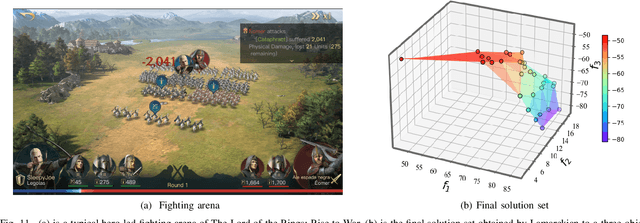

Diverse Behavior Is What Game AI Needs: Generating Varied Human-Like Playing Styles Using Evolutionary Multi-Objective Deep Reinforcement Learning

Oct 20, 2019

Abstract:Designing artificial intelligence for games (Game AI) has been long recognized as a notoriously challenging task in the game industry, as it mainly relies on manual design, requiring plenty of domain knowledge. More frustratingly, even spending a lot of effort, a satisfying Game AI is still hard to achieve by manual design due to the almost infinite search space. The recent success of deep reinforcement learning (DRL) sheds light on advancing automated game designing, significantly relaxing human competitive intelligent support. However, existing DRL algorithms mostly focus on training a Game AI to win the game rather than the way it wins (style). To bridge the gap, we introduce EMO-DRL, an end-to-end game design framework, leveraging evolutionary algorithm, DRL and multi-objective optimization (MOO) to perform intelligent and automatic game design. Firstly, EMO-DRL proposes style-oriented learning to bypass manual reward shaping in DRL and directly learns a Game AI with an expected style in an end-to-end fashion. On this basis, the prioritized multi-objective optimization is introduced to achieve more diverse, nature and human-like Game AI. Large-scale evaluations on an Atari game and a commercial massively multiplayer online game are conducted. The results demonstrate that EMO-DRL, compared to existing algorithms, achieve better game designs in an intelligent and automatic way.

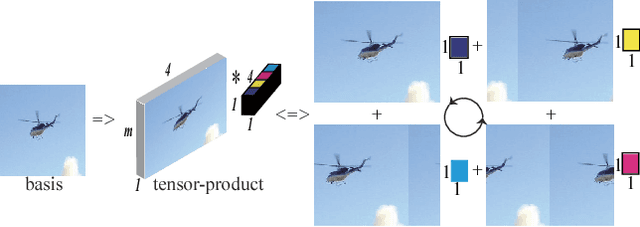

Efficient Two-Dimensional Sparse Coding Using Tensor-Linear Combination

Mar 28, 2017

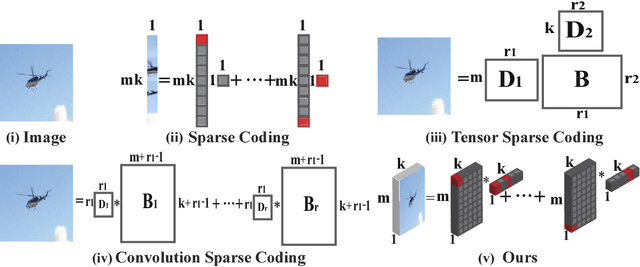

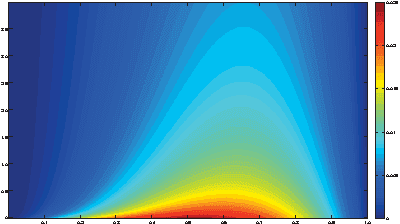

Abstract:Sparse coding (SC) is an automatic feature extraction and selection technique that is widely used in unsupervised learning. However, conventional SC vectorizes the input images, which breaks apart the local proximity of pixels and destructs the elementary object structures of images. In this paper, we propose a novel two-dimensional sparse coding (2DSC) scheme that represents the input images as the tensor-linear combinations under a novel algebraic framework. 2DSC learns much more concise dictionaries because it uses the circular convolution operator, since the shifted versions of atoms learned by conventional SC are treated as the same ones. We apply 2DSC to natural images and demonstrate that 2DSC returns meaningful dictionaries for large patches. Moreover, for mutli-spectral images denoising, the proposed 2DSC reduces computational costs with competitive performance in comparison with the state-of-the-art algorithms.

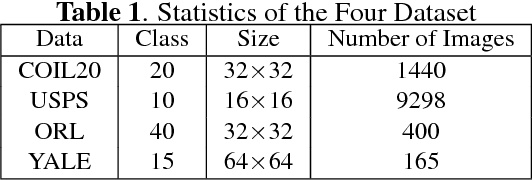

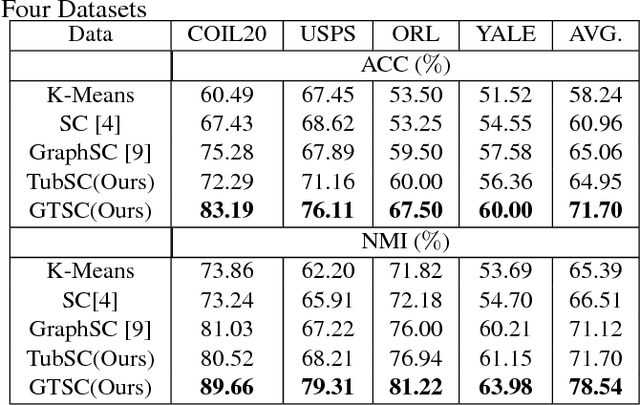

Graph Regularized Tensor Sparse Coding for Image Representation

Mar 27, 2017

Abstract:Sparse coding (SC) is an unsupervised learning scheme that has received an increasing amount of interests in recent years. However, conventional SC vectorizes the input images, which destructs the intrinsic spatial structures of the images. In this paper, we propose a novel graph regularized tensor sparse coding (GTSC) for image representation. GTSC preserves the local proximity of elementary structures in the image by adopting the newly proposed tubal-tensor representation. Simultaneously, it considers the intrinsic geometric properties by imposing graph regularization that has been successfully applied to uncover the geometric distribution for the image data. Moreover, the returned sparse representations by GTSC have better physical explanations as the key operation (i.e., circular convolution) in the tubal-tensor model preserves the shifting invariance property. Experimental results on image clustering demonstrate the effectiveness of the proposed scheme.

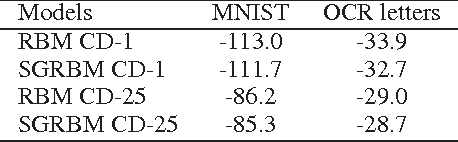

Sparse Group Restricted Boltzmann Machines

Aug 30, 2010

Abstract:Since learning is typically very slow in Boltzmann machines, there is a need to restrict connections within hidden layers. However, the resulting states of hidden units exhibit statistical dependencies. Based on this observation, we propose using $l_1/l_2$ regularization upon the activation possibilities of hidden units in restricted Boltzmann machines to capture the loacal dependencies among hidden units. This regularization not only encourages hidden units of many groups to be inactive given observed data but also makes hidden units within a group compete with each other for modeling observed data. Thus, the $l_1/l_2$ regularization on RBMs yields sparsity at both the group and the hidden unit levels. We call RBMs trained with the regularizer \emph{sparse group} RBMs. The proposed sparse group RBMs are applied to three tasks: modeling patches of natural images, modeling handwritten digits and pretaining a deep networks for a classification task. Furthermore, we illustrate the regularizer can also be applied to deep Boltzmann machines, which lead to sparse group deep Boltzmann machines. When adapted to the MNIST data set, a two-layer sparse group Boltzmann machine achieves an error rate of $0.84\%$, which is, to our knowledge, the best published result on the permutation-invariant version of the MNIST task.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge