Yinfeng Chen

Diverse Behavior Is What Game AI Needs: Generating Varied Human-Like Playing Styles Using Evolutionary Multi-Objective Deep Reinforcement Learning

Oct 20, 2019

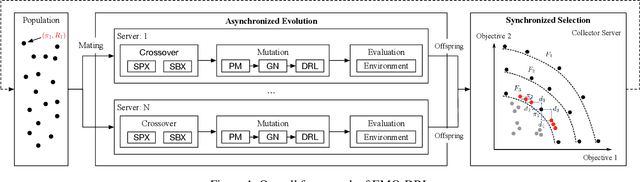

Abstract: Designing artificial intelligence for games (Game AI) has long been recognized as a notoriously challenging task in the game industry, as it relies mainly on manual design and requires extensive domain knowledge. More frustratingly, even with considerable effort, a satisfying Game AI is still hard to achieve by manual design because the search space is almost infinite. The recent success of deep reinforcement learning (DRL) sheds light on automated game design, significantly reducing the reliance on human expertise. However, existing DRL algorithms mostly focus on training a Game AI to win the game rather than on the way it wins (its style). To bridge this gap, we introduce EMO-DRL, an end-to-end game design framework that leverages evolutionary algorithms, DRL, and multi-objective optimization (MOO) to perform intelligent and automatic game design. First, EMO-DRL proposes style-oriented learning, which bypasses manual reward shaping in DRL and directly learns a Game AI with an expected style in an end-to-end fashion. On this basis, prioritized multi-objective optimization is introduced to achieve more diverse, natural, and human-like Game AI. Large-scale evaluations are conducted on an Atari game and a commercial massively multiplayer online game. The results demonstrate that EMO-DRL, compared to existing algorithms, achieves better game designs in an intelligent and automatic way.
