Ronald S. Fearing

Design and Control of a Compact Series Elastic Actuator Module for Robots in MRI Scanners

Jun 11, 2024

Abstract:In this study, we introduce a novel MRI-compatible rotary series elastic actuator module that uses velocity-sourced ultrasonic motors for force-controlled robots operating within MRI scanners. Unlike previous MRI-compatible SEA designs, our module incorporates a transmission-force-sensing series elastic actuator structure, with four off-the-shelf compression springs placed between the gearbox housing and the motor housing. The resulting module is compact, which broadens the range of MRI robotic applications it can serve. To achieve precise torque control, we develop a controller that incorporates a disturbance observer tailored for velocity-sourced motors. This controller enhances the robustness of torque control in our actuator module, even in the presence of varying external impedance, thereby augmenting its suitability for MRI-guided medical interventions. Experimental validation demonstrates the actuator's torque control performance in both 3 Tesla MRI and non-MRI environments, achieving a settling time of 0.1 seconds and a steady-state error within 2% of its maximum output torque. Notably, our force controller exhibits consistent performance across low and high external impedance scenarios, in contrast to conventional controllers for velocity-sourced series elastic actuators, which struggle with steady-state performance under low external impedance conditions.
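One way to see why a velocity-sourced SEA can struggle under low external impedance is a deliberately idealized model. The sketch below assumes the motor tracks its commanded velocity `u` exactly, so the spring torque obeys d(tau)/dt = k * (u - w_load), where `w_load` is the load-side velocity of a freely moving (low-impedance) load, treated as an unknown disturbance. The stiffness `k`, gain `kp`, filter time constant, and load velocity are hypothetical values. This is a generic first-order disturbance-observer construction, not the controller from the paper.

```python
# Idealized velocity-sourced SEA (assumption): d(tau)/dt = k * (u - w_load).
# w_load is unknown to the controller and acts as a velocity disturbance.
# Generic disturbance-observer (DOB) illustration, not the authors' design.

def simulate(use_dob, tau_ref=1.0, k=5.0, kp=20.0, w_load=2.0,
             dt=1e-3, t_filter=0.02, steps=2000):
    tau = 0.0              # spring torque [N*m]
    d_hat = 0.0            # disturbance estimate in motor-velocity units [rad/s]
    alpha = dt / t_filter  # discrete first-order low-pass filter gain
    for _ in range(steps):
        err = tau_ref - tau
        # Proportional torque loop; with the DOB, cancel the estimated disturbance.
        u = kp * err - (d_hat if use_dob else 0.0)
        tau_next = tau + dt * k * (u - w_load)  # plant, explicit Euler step
        # Observer: velocity that the nominal model d(tau)/dt = k*u cannot explain.
        d_raw = (tau_next - tau) / (k * dt) - u
        d_hat += alpha * (d_raw - d_hat)
        tau = tau_next
    return tau_ref - tau  # remaining torque error after `steps` samples

err_p = simulate(use_dob=False)   # settles at w_load / kp
err_dob = simulate(use_dob=True)  # settles near zero
print(f"P only: {err_p:.4f} N*m, P + DOB: {err_dob:.2e} N*m")
```

With the proportional loop alone, the moving load leaves a steady-state error of `w_load / kp`; the observer learns that offset and feeds it forward, so the error decays toward zero in this noise-free model.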

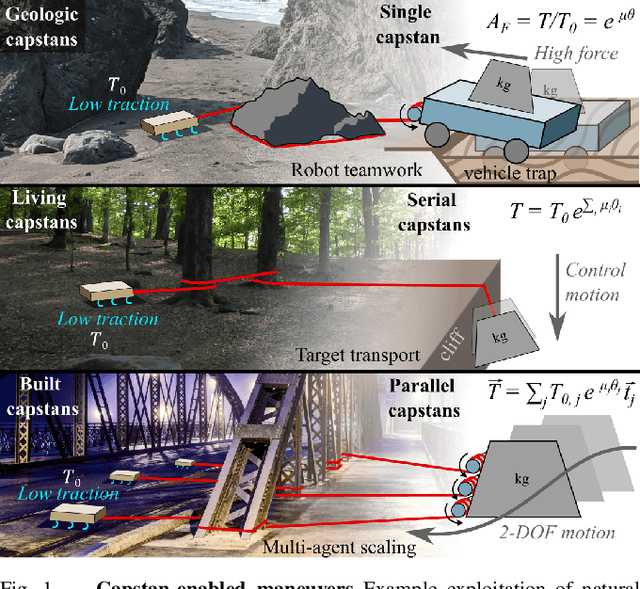

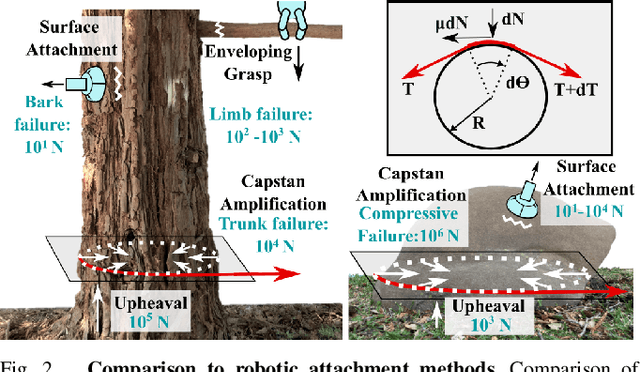

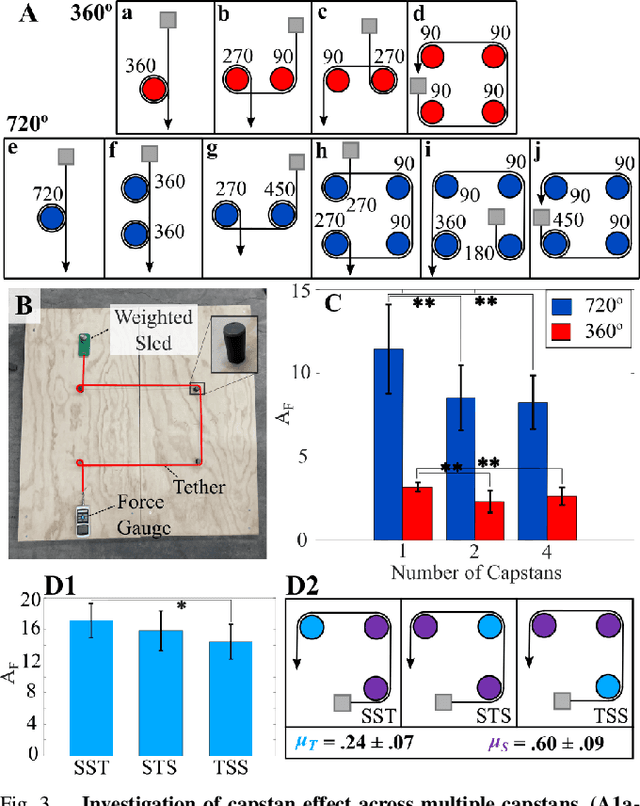

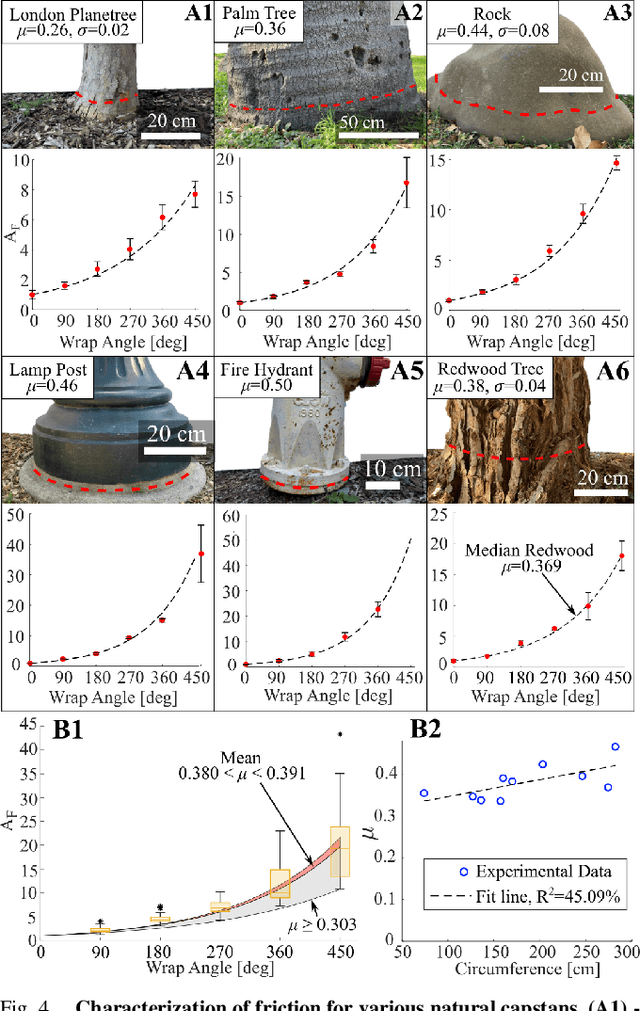

The Robustness of Tether Friction in Non-idealized Terrains

Aug 22, 2022

Abstract:Reduced traction limits the ability of mobile robotic systems to resist or apply large external loads, such as tugging a massive payload. One simple and versatile solution is to wrap a tether around naturally occurring objects to leverage the capstan effect and create exponentially amplified holding forces. Experiments show that an idealized capstan model explains the force amplification observed on common irregular outdoor objects: trees, rocks, and posts. Because it is robust to variable environmental conditions, this exponential amplification method can harness single or multiple capstan objects, either in series or in parallel with a team of robots. This adaptability allows a range of potential configurations that are especially useful when objects cannot be fully encircled or gripped. These principles are demonstrated with mobile platforms to (1) control the lowering and arrest of a payload, (2) achieve planar control of a payload, and (3) act as an anchor point for a more massive platform to winch towards. We show that simply adding a tether, wrapped around shallow stones in sand, amplifies the holding force of a low-traction platform by up to 774x.
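The idealized capstan model is commonly written as the Euler-Eytelwein relation T_load = T_hold * exp(mu * theta), where mu is the tether/object friction coefficient and theta is the total wrap angle in radians. The sketch below simply evaluates that ideal relation; mu = 0.5 and the wrap angles are assumed values for illustration, not measurements from the study.

```python
import math

def capstan_gain(mu, wrap_angle_rad):
    """Ideal capstan ratio T_load / T_hold = exp(mu * theta)."""
    return math.exp(mu * wrap_angle_rad)

def series_gain(wraps):
    """Ideal capstans in series multiply: exp(sum of mu_i * theta_i)."""
    return math.prod(capstan_gain(mu, theta) for mu, theta in wraps)

def turns_needed(target_gain, mu):
    """Full turns around a single object needed to reach target_gain."""
    return math.log(target_gain) / (mu * 2 * math.pi)

# Illustrative values only; mu = 0.5 is an assumed friction coefficient.
one_turn = capstan_gain(0.5, 2 * math.pi)                     # e**pi, about 23x
two_half_turns = series_gain([(0.5, math.pi), (0.5, math.pi)])
print(f"{one_turn:.1f}x, {two_half_turns:.1f}x, {turns_needed(100, 0.5):.2f} turns")
```

Under the ideal model, two half turns around separate objects give the same gain as one full turn around a single object, which is consistent with the abstract's point that objects need not be fully encircled.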

Mechanical principles of dynamic terrestrial self-righting using wings

Mar 17, 2021

Abstract:Terrestrial animals and robots are susceptible to flipping over during rapid locomotion in complex terrains. However, small robots are less capable of self-righting from an upside-down orientation than small animals like insects. Inspired by the winged discoid cockroach, we designed a new robot that opens its wings to self-right by pushing against the ground. We used this robot to systematically test how self-righting performance depends on wing opening magnitude, speed, and asymmetry, and modeled how kinematic and energetic requirements depend on wing shape and body/wing mass distribution. We discovered that the robot self-rights dynamically using kinetic energy to overcome potential energy barriers, that larger and faster symmetric wing opening increases self-righting performance, and that opening wings asymmetrically increases righting probability when wing opening is small. Our results suggested that the discoid cockroach's winged self-righting is a dynamic maneuver. While the thin, lightweight wings of the discoid cockroach and our robot are energetically sub-optimal for self-righting compared to tall, heavy ones, their ability to open wings saves them substantial energy compared with having static shells. Analogous to biological exaptations, our study provided a proof-of-concept for terrestrial robots to use existing morphology in novel ways to overcome new locomotor challenges.
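The idea of using kinetic energy to overcome a potential energy barrier can be illustrated with a much simpler body than the winged robot: a flat rigid box of width w and thickness t rolling over one long edge. Its centre-of-mass height, (w/2) sin(theta) + (t/2) cos(theta), peaks at sqrt(w^2 + t^2)/2 when the CoM is directly above the pivot, so the barrier is m * g * (sqrt(w^2 + t^2)/2 - t/2). The mass and dimensions below are hypothetical, and the check ignores wings, friction, and impact losses; it is not the model from the study.

```python
import math

G = 9.81  # gravitational acceleration [m/s^2]

def com_height(theta, width, thickness):
    """CoM height of a flat box pivoting on one edge, tilted by theta [rad]."""
    return 0.5 * width * math.sin(theta) + 0.5 * thickness * math.cos(theta)

def energy_barrier(mass, width, thickness):
    """Potential-energy rise from lying flat to balancing on the pivot edge."""
    h_peak = 0.5 * math.hypot(width, thickness)  # maximum of com_height
    h_flat = 0.5 * thickness
    return mass * G * (h_peak - h_flat)

def can_right_dynamically(kinetic_energy, mass, width, thickness):
    """Lossless check: enough kinetic energy to carry the CoM over the peak."""
    return kinetic_energy >= energy_barrier(mass, width, thickness)

# Hypothetical body: 0.1 kg, 10 cm wide, 2 cm thick (barrier of about 0.04 J).
barrier = energy_barrier(0.1, 0.10, 0.02)
```

A body that only pushes quasi-statically must supply this barrier through sustained force, whereas a dynamic maneuver can bank it as kinetic energy beforehand, which is the distinction the abstract draws.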

OpenRoACH: A Durable Open-Source Hexapedal Platform with Onboard Robot Operating System (ROS)

Mar 01, 2019

Abstract:OpenRoACH is a 15-cm 200-gram self-contained hexapedal robot with an onboard single-board computer. To our knowledge, it is the smallest legged robot with the capability of running the Robot Operating System (ROS) onboard. The robot is fully open sourced, uses accessible materials and off-the-shelf electronic components, can be fabricated with benchtop fast-prototyping machines such as a laser cutter and a 3D printer, and can be assembled by one person within two hours. Its sensory capacity has been tested with gyroscopes, accelerometers, Beacon sensors, color vision sensors, linescan sensors and cameras. It costs under $150, including structure materials, motors, electronics, and a battery. The capabilities of OpenRoACH are demonstrated with multi-surface walking and running, 24-hour continuous walking burn-ins, carrying 200-gram dynamic payloads and 800-gram static payloads, and ROS control of steering based on camera feedback. Information and files related to mechanical design, fabrication, assembly, electronics, and control algorithms are all publicly available on https://wiki.eecs.berkeley.edu/biomimetics/Main/OpenRoACH.

Learning to Adapt in Dynamic, Real-World Environments Through Meta-Reinforcement Learning

Feb 27, 2019

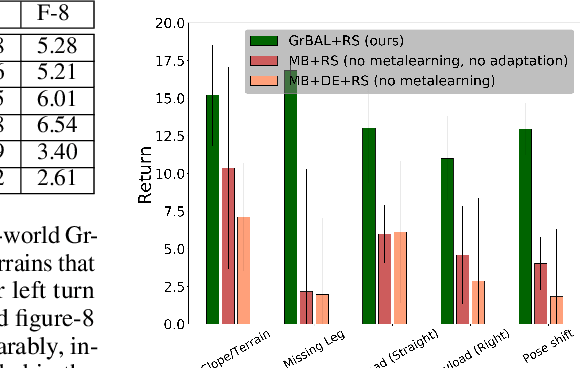

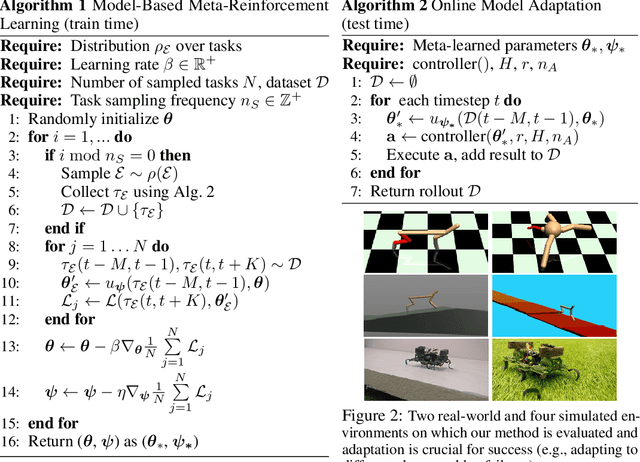

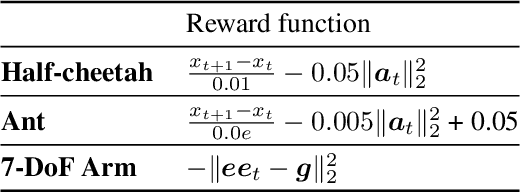

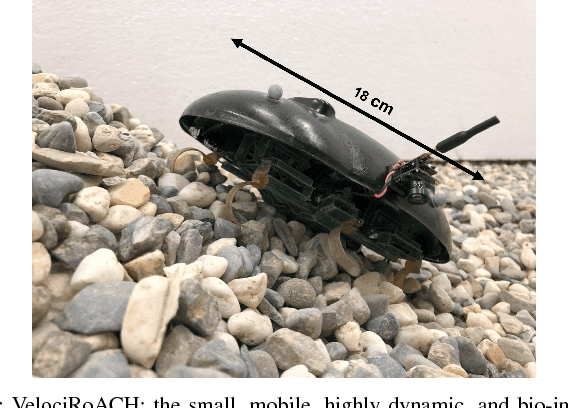

Abstract:Although reinforcement learning methods can achieve impressive results in simulation, the real world presents two major challenges: generating samples is exceedingly expensive, and unexpected perturbations or unseen situations cause proficient but specialized policies to fail at test time. Given that it is impractical to train separate policies to accommodate all situations the agent may see in the real world, this work proposes to learn how to quickly and effectively adapt online to new tasks. To enable sample-efficient learning, we consider learning online adaptation in the context of model-based reinforcement learning. Our approach uses meta-learning to train a dynamics model prior such that, when combined with recent data, this prior can be rapidly adapted to the local context. Our experiments demonstrate online adaptation for continuous control tasks on both simulated and real-world agents. We first show simulated agents adapting their behavior online to novel terrains, crippled body parts, and highly-dynamic environments. We also illustrate the importance of incorporating online adaptation into autonomous agents that operate in the real world by applying our method to a real dynamic legged millirobot. We demonstrate the agent's learned ability to quickly adapt online to a missing leg, adjust to novel terrains and slopes, account for miscalibration or errors in pose estimation, and compensate for pulling payloads.
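In MAML-style, gradient-based meta-learning, "rapidly adapting the prior with recent data" amounts to a few gradient steps on the prior's parameters using the most recent transitions. The sketch below shows only that online step; a two-parameter linear model stands in for the neural network dynamics model, the meta-training that produces the prior is omitted, and the prior, the perturbed dynamics, and the learning rate are all hypothetical.

```python
def mse_and_grad(params, data):
    """Mean squared error of ds_pred = w * a + b, and its gradient in (w, b)."""
    w, b = params
    n = len(data)
    loss = sum((w * a + b - ds) ** 2 for a, ds in data) / n
    gw = sum(2 * (w * a + b - ds) * a for a, ds in data) / n
    gb = sum(2 * (w * a + b - ds) for a, ds in data) / n
    return loss, (gw, gb)

def adapt(prior, recent, lr=0.1, steps=5):
    """Online adaptation: a few gradient steps starting from the prior."""
    w, b = prior
    for _ in range(steps):
        _, (gw, gb) = mse_and_grad((w, b), recent)
        w, b = w - lr * gw, b - lr * gb
    return (w, b)

prior = (0.10, 0.0)  # hypothetical meta-learned prior: ds = 0.10 * a
# Hypothetical recent transitions after a perturbation (e.g., a missing leg),
# generated by the changed dynamics ds = 0.05 * a + 0.02.
recent = [(a, 0.05 * a + 0.02) for a in (-1.0, -0.5, 0.0, 0.5, 1.0)]
loss_before, _ = mse_and_grad(prior, recent)
adapted = adapt(prior, recent)
loss_after, _ = mse_and_grad(adapted, recent)
print(f"loss before: {loss_before:.5f}, after: {loss_after:.5f}")
```

Because the model is re-fit only to a short window of recent data, it tracks the local context; the meta-learned prior is what makes so few steps sufficient in the full method.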

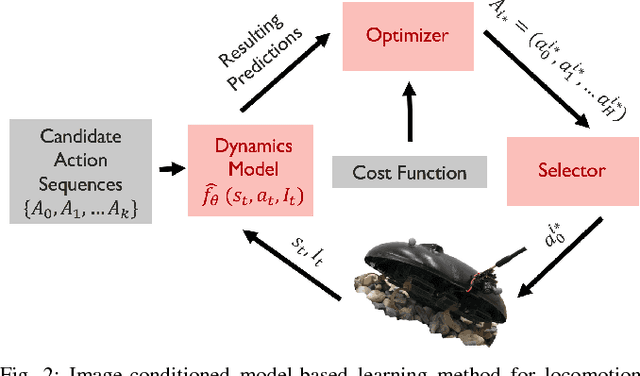

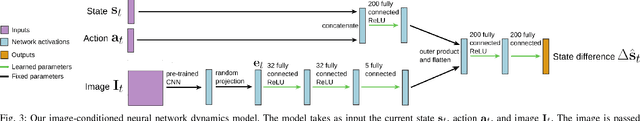

Learning Image-Conditioned Dynamics Models for Control of Under-actuated Legged Millirobots

Mar 30, 2018

Abstract:Millirobots are a promising robotic platform for many applications due to their small size and low manufacturing costs. Legged millirobots, in particular, can provide increased mobility in complex environments and improved scaling of obstacles. However, controlling these small, highly dynamic, and underactuated legged systems is difficult. Hand-engineered controllers can sometimes control these legged millirobots, but they have difficulties with dynamic maneuvers and complex terrains. We present an approach for controlling a real-world legged millirobot that is based on learned neural network models. Using less than 17 minutes of data, our method can learn a predictive model of the robot's dynamics that can enable effective gaits to be synthesized on the fly for following user-specified waypoints on a given terrain. Furthermore, by leveraging expressive, high-capacity neural network models, our approach allows for these predictions to be directly conditioned on camera images, endowing the robot with the ability to predict how different terrains might affect its dynamics. This enables sample-efficient and effective learning for locomotion of a dynamic legged millirobot on various terrains, including gravel, turf, carpet, and styrofoam. Experiment videos can be found at https://sites.google.com/view/imageconddyn

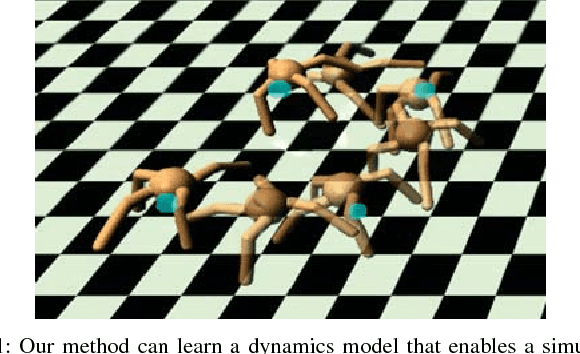

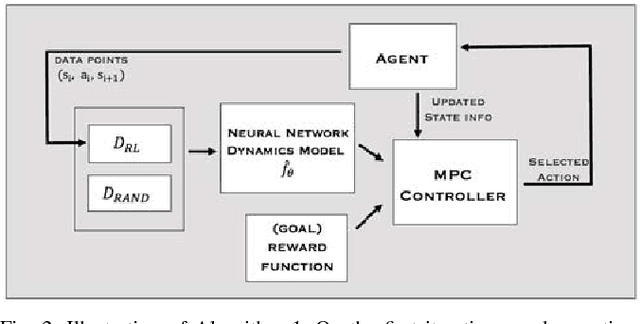

Neural Network Dynamics for Model-Based Deep Reinforcement Learning with Model-Free Fine-Tuning

Dec 02, 2017

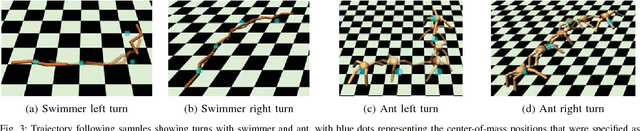

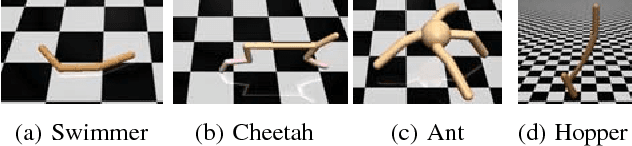

Abstract:Model-free deep reinforcement learning algorithms have been shown to be capable of learning a wide range of robotic skills, but typically require a very large number of samples to achieve good performance. Model-based algorithms, in principle, can provide for much more efficient learning, but have proven difficult to extend to expressive, high-capacity models such as deep neural networks. In this work, we demonstrate that medium-sized neural network models can in fact be combined with model predictive control (MPC) to achieve excellent sample complexity in a model-based reinforcement learning algorithm, producing stable and plausible gaits to accomplish various complex locomotion tasks. We also propose using deep neural network dynamics models to initialize a model-free learner, in order to combine the sample efficiency of model-based approaches with the high task-specific performance of model-free methods. We empirically demonstrate on MuJoCo locomotion tasks that our pure model-based approach trained on just random action data can follow arbitrary trajectories with excellent sample efficiency, and that our hybrid algorithm can accelerate model-free learning on high-speed benchmark tasks, achieving sample efficiency gains of 3-5x on swimmer, cheetah, hopper, and ant agents. Videos can be found at https://sites.google.com/view/mbmf
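Combining a learned dynamics model with MPC can be sketched with random shooting: sample many action sequences, roll each through the model, and execute only the first action of the cheapest sequence before replanning. The sketch assumes a 1-D point whose model predicts the state change; `model` is a hand-written stand-in for a trained network, and the cost, horizon, and sample count are illustrative choices, not the paper's settings.

```python
import numpy as np

def model(states, actions):
    # Stand-in for a trained dynamics network that predicts the state change.
    return 0.1 * actions

def random_shooting_mpc(state, goal, rng, horizon=10, n_samples=500):
    # Sample candidate action sequences uniformly in [-1, 1].
    actions = rng.uniform(-1.0, 1.0, size=(n_samples, horizon))
    states = np.full(n_samples, state, dtype=float)
    costs = np.zeros(n_samples)
    for t in range(horizon):
        states = states + model(states, actions[:, t])  # predicted rollout
        costs += (states - goal) ** 2                  # squared distance to goal
    return actions[np.argmin(costs), 0]  # execute only the first action

rng = np.random.default_rng(0)
state, goal = 0.0, 1.0
for _ in range(50):
    a = random_shooting_mpc(state, goal, rng)
    state += 0.1 * a  # true dynamics, identical to the model in this toy case
print(f"final state: {state:.3f}")
```

Replanning at every step lets the controller correct for model error; in a hybrid scheme, trajectories produced this way could also serve as demonstrations to initialize a model-free policy.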
