Renke Huang

ICON: Intent-Context Coupling for Efficient Multi-Turn Jailbreak Attack

Jan 28, 2026

Abstract:Multi-turn jailbreak attacks have emerged as a critical threat to Large Language Models (LLMs), bypassing safety mechanisms by progressively constructing adversarial contexts from scratch and incrementally refining prompts. However, existing methods suffer from the inefficiency of incremental context construction that requires step-by-step LLM interaction, and often stagnate in suboptimal regions due to surface-level optimization. In this paper, we characterize the Intent-Context Coupling phenomenon, revealing that LLM safety constraints are significantly relaxed when a malicious intent is coupled with a semantically congruent context pattern. Driven by this insight, we propose ICON, an automated multi-turn jailbreak framework that efficiently constructs an authoritative-style context via prior-guided semantic routing. Specifically, ICON first routes the malicious intent to a congruent context pattern (e.g., Scientific Research) and instantiates it into an attack prompt sequence. This sequence progressively builds the authoritative-style context and ultimately elicits prohibited content. In addition, ICON incorporates a Hierarchical Optimization Strategy that combines local prompt refinement with global context switching, preventing the attack from stagnating in ineffective contexts. Experimental results across eight SOTA LLMs demonstrate the effectiveness of ICON, achieving a state-of-the-art average Attack Success Rate (ASR) of 97.1%. Code is available at https://github.com/xwlin-roy/ICON.

Efficient Learning of Voltage Control Strategies via Model-based Deep Reinforcement Learning

Dec 06, 2022

Abstract:This article proposes a model-based deep reinforcement learning (DRL) method to design emergency control strategies for short-term voltage stability problems in power systems. Recent advances show promising results in model-free DRL-based methods for power systems, but model-free methods suffer from poor sample efficiency and long training times, both of which are critical for making state-of-the-art DRL algorithms practically applicable. A DRL agent learns an optimal policy via trial and error while interacting with the real-world environment. It is desirable to minimize the direct interaction of the DRL agent with the real-world power grid due to its safety-critical nature. Additionally, state-of-the-art DRL-based policies are mostly trained using a physics-based grid simulator where dynamic simulation is computationally intensive, lowering the training efficiency. We propose a novel model-based DRL framework where a deep neural network (DNN)-based dynamic surrogate model, instead of a real-world power grid or physics-based simulation, is utilized with the policy learning framework, making the process faster and more sample-efficient. However, stabilizing model-based DRL is challenging because of the complex system dynamics of large-scale power systems. We address these issues by incorporating imitation learning to provide a warm start in policy learning, reward shaping, and a multi-step surrogate loss. Finally, we achieved 97.5% sample efficiency and 87.7% training efficiency for an application to the IEEE 300-bus test system.
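The multi-step surrogate loss mentioned in this abstract can be illustrated with a minimal sketch. This is not the authors' implementation: the function name `multi_step_loss`, the scalar toy dynamics, and the deliberately imperfect `surrogate` are all assumptions made for illustration. The idea shown is that the surrogate is rolled out on its own predictions for several steps, so compounding errors are penalized rather than only one-step errors.

```python
def multi_step_loss(model, states, actions, horizon):
    """Mean squared error of an open-loop surrogate rollout over `horizon` steps.

    The model is fed its own previous prediction (not the true state),
    so errors that compound over time contribute to the loss.
    """
    pred = states[0]
    total = 0.0
    for k in range(horizon):
        pred = model(pred, actions[k])
        total += (pred - states[k + 1]) ** 2
    return total / horizon


def true_step(x, a):
    """Hypothetical ground-truth dynamics: x_{t+1} = 0.9 * x_t + a_t."""
    return 0.9 * x + a


def surrogate(x, a):
    """Hypothetical learned model with a slightly wrong decay coefficient."""
    return 0.8 * x + a


# Build a short ground-truth trajectory from the toy dynamics.
actions = [0.1, 0.0, -0.1, 0.2]
states = [1.0]
for a in actions:
    states.append(true_step(states[-1], a))

one_step = multi_step_loss(surrogate, states, actions, horizon=1)
four_step = multi_step_loss(surrogate, states, actions, horizon=4)
perfect = multi_step_loss(true_step, states, actions, horizon=4)
```

In this toy setting the four-step loss is larger than the one-step loss for the imperfect surrogate, which is the kind of drift a multi-step objective is meant to expose during training.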

Safe Reinforcement Learning for Grid Voltage Control

Dec 02, 2021

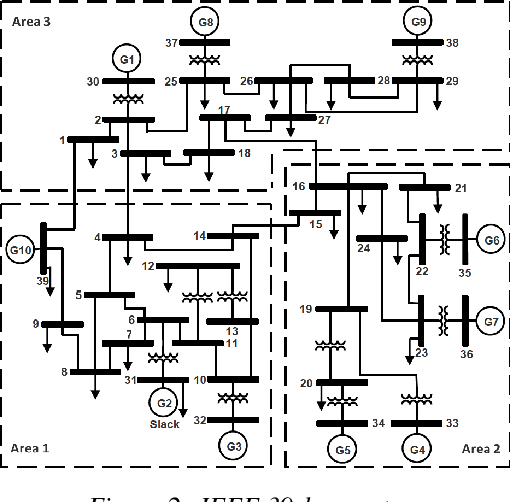

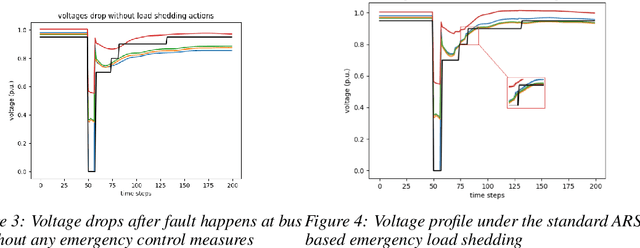

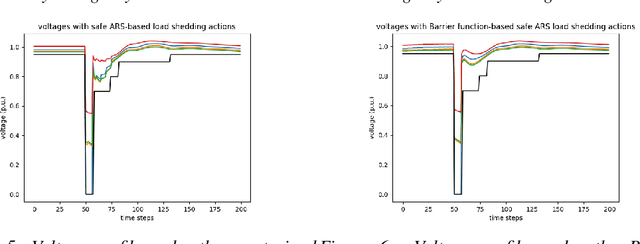

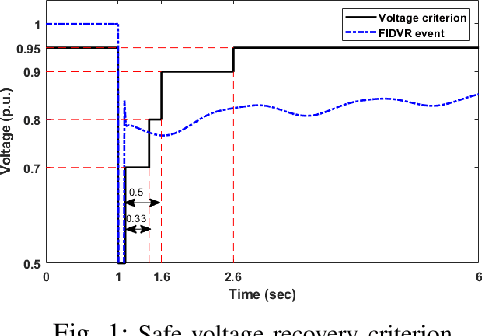

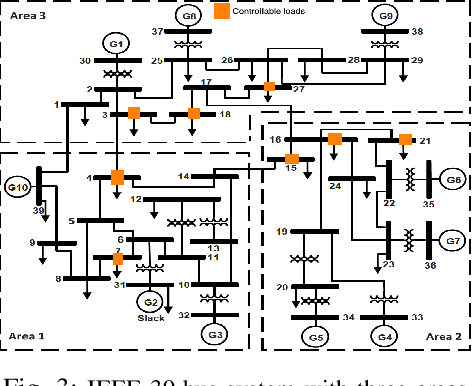

Abstract:Undervoltage load shedding has been considered a standard approach to recover the voltage stability of the electric power grid under emergency conditions, yet this scheme usually trips a massive amount of load inefficiently. Reinforcement learning (RL) has been adopted as a promising approach to circumvent these issues; however, RL approaches usually cannot guarantee the safety of the systems under control. In this paper, we discuss two novel safe RL approaches, namely a constrained optimization approach and a barrier function-based approach, that can safely recover voltage under emergency events. These methods are general and can be applied to other safety-critical control problems. Numerical simulations on the 39-bus IEEE benchmark are performed to demonstrate the effectiveness of the proposed safe RL emergency control.

Physics-informed Evolutionary Strategy based Control for Mitigating Delayed Voltage Recovery

Nov 29, 2021

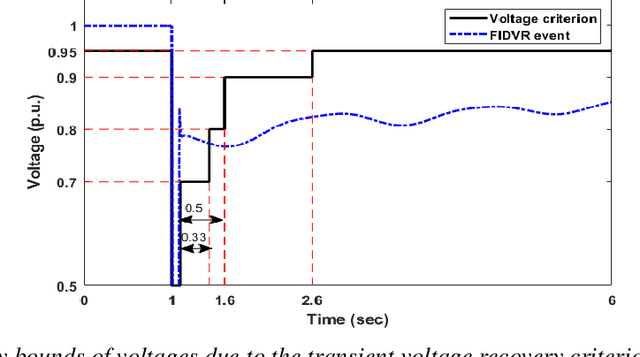

Abstract:In this work, we propose a novel data-driven, real-time power system voltage control method based on the physics-informed guided meta evolutionary strategy (ES). The main objective is to quickly provide an adaptive control strategy to mitigate the fault-induced delayed voltage recovery (FIDVR) problem. Reinforcement learning methods have been developed for the same or similar challenging control problems, but they suffer from training inefficiency and lack of robustness for "corner or unseen" scenarios. On the other hand, extensive physical knowledge has been developed in power systems, but little of it has been leveraged in learning-based approaches. To address these challenges, we introduce the trainable action mask technique for flexibly embedding physical knowledge into RL models to rule out unnecessary or unfavorable actions, and achieve notable improvements in sample efficiency, control performance, and robustness. Furthermore, our method leverages past learning experience to derive a surrogate gradient that guides and accelerates the exploration process in training. Case studies on the IEEE 300-bus system and comparisons with other state-of-the-art benchmark methods demonstrate the effectiveness and advantages of our method.
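The action-mask idea described in this abstract can be illustrated with a small sketch. This is not the paper's formulation: the function name `masked_policy`, the sigmoid-gated mask, and the toy numbers are assumptions chosen for illustration. It shows how mask logits (which could be trained alongside the policy) can softly suppress actions that physical knowledge marks as unfavorable before an action is sampled.

```python
import math


def softmax(logits):
    """Numerically stable softmax over a list of floats."""
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def masked_policy(policy_logits, mask_logits):
    """Combine policy logits with a soft mask (hypothetical formulation).

    Each mask logit is squashed into (0, 1) and its log is added to the
    matching policy logit, so a mask value near 0 drives that action's
    probability toward 0 while a value near 1 leaves it almost unchanged.
    """
    gated = [p + math.log(sigmoid(m)) for p, m in zip(policy_logits, mask_logits)]
    return softmax(gated)


# Three equally preferred actions; the mask strongly discourages the third.
policy_logits = [1.0, 1.0, 1.0]
mask_logits = [5.0, 5.0, -20.0]
probs = masked_policy(policy_logits, mask_logits)
```

Because the mask enters as an additive log-term, it stays differentiable with respect to the mask logits, which is one plausible way a mask could be made trainable; the source does not specify the mechanism.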

Scalable Voltage Control using Structure-Driven Hierarchical Deep Reinforcement Learning

Jan 29, 2021

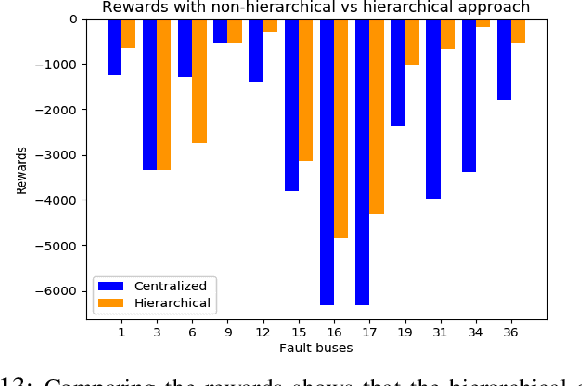

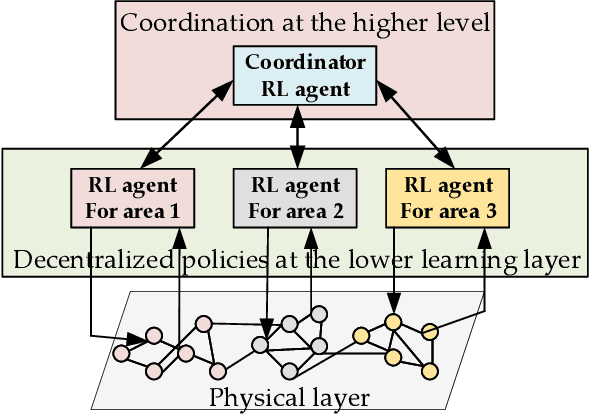

Abstract:This paper presents a novel hierarchical deep reinforcement learning (DRL) based design for the voltage control of power grids. DRL agents are trained for fast and adaptive selection of control actions such that the voltage recovery criterion can be met following disturbances. Existing voltage control techniques suffer from issues with speed of operation, optimal coordination between different locations, and scalability. We exploit the area-wise division structure of the power system to propose a hierarchical DRL design that can be scaled to larger grid models. We employ an enhanced augmented random search algorithm that is tailored for the voltage control problem in a two-level architecture. We train area-wise decentralized RL agents to compute lower-level policies for the individual areas, and concurrently train a higher-level DRL agent that uses the updates of the lower-level policies to efficiently coordinate the control actions taken by the lower-level agents. Numerical experiments on the IEEE benchmark 39-bus model with 3 areas demonstrate the advantages and various intricacies of the proposed hierarchical approach.
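For readers unfamiliar with augmented random search (ARS), the following is a minimal sketch of the basic algorithm on a toy objective. It is not the enhanced, hierarchical variant the abstract describes: the `reward` function, the hyperparameters, and the name `ars_step` are assumptions for illustration only.

```python
import random


def reward(theta):
    """Toy reward: highest (zero) when theta equals a hypothetical target."""
    target = [1.0, -2.0, 0.5]
    return -sum((t - g) ** 2 for t, g in zip(theta, target))


def ars_step(theta, rng, step_size=0.05, noise=0.1, n_dirs=8, top_b=4):
    """One basic ARS update using antithetic random perturbations."""
    rollouts = []
    for _ in range(n_dirs):
        delta = [rng.gauss(0.0, 1.0) for _ in theta]
        r_plus = reward([t + noise * d for t, d in zip(theta, delta)])
        r_minus = reward([t - noise * d for t, d in zip(theta, delta)])
        rollouts.append((r_plus, r_minus, delta))
    # Keep the directions whose better perturbation scored highest.
    rollouts.sort(key=lambda r: max(r[0], r[1]), reverse=True)
    best = rollouts[:top_b]
    # Scale the step by the standard deviation of the retained rewards.
    rewards = [r for r_plus, r_minus, _ in best for r in (r_plus, r_minus)]
    mean = sum(rewards) / len(rewards)
    std = (sum((r - mean) ** 2 for r in rewards) / len(rewards)) ** 0.5 or 1.0
    update = [0.0] * len(theta)
    for r_plus, r_minus, delta in best:
        for i, d in enumerate(delta):
            update[i] += (r_plus - r_minus) * d
    return [t + step_size / (top_b * std) * u for t, u in zip(theta, update)]


rng = random.Random(0)  # Fixed seed so the run is reproducible
theta = [0.0, 0.0, 0.0]
initial_reward = reward(theta)
for _ in range(200):
    theta = ars_step(theta, rng)
final_reward = reward(theta)
```

In a two-level design like the one described, each area-wise agent could in principle run an update of this kind on its own policy parameters, with a higher-level agent coordinating them; how that coordination is done is not specified in the abstract.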

Learning and Fast Adaptation for Grid Emergency Control via Deep Meta Reinforcement Learning

Jan 13, 2021

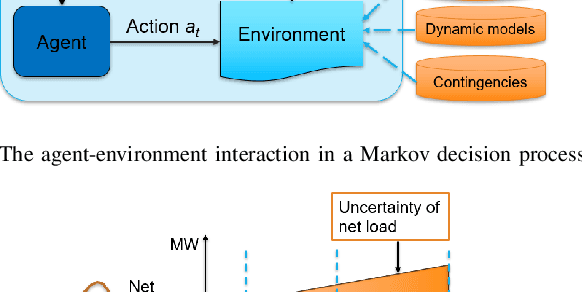

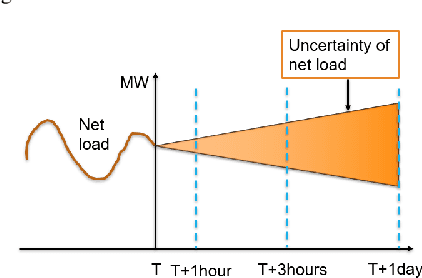

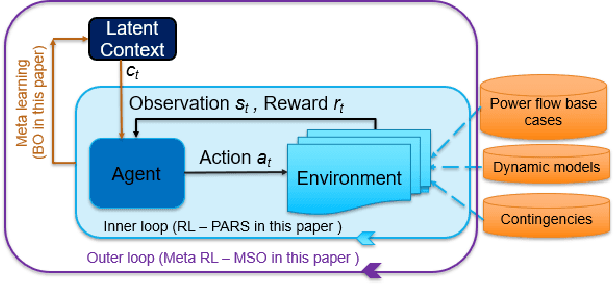

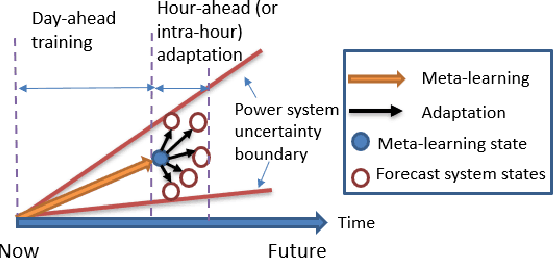

Abstract:As power systems undergo a significant transformation, with more uncertainties, less inertia, and operation closer to their limits, there is an increasing risk of large outages. Thus, there is an imperative need to enhance grid emergency control to maintain system reliability and security. Towards this end, great progress has been made in developing deep reinforcement learning (DRL) based grid control solutions in recent years. However, existing DRL-based solutions have two main limitations: 1) they cannot handle a wide range of grid operation conditions, system parameters, and contingencies well; 2) they generally lack the ability to adapt quickly to new grid operation conditions, system parameters, and contingencies, limiting their applicability in the real world. In this paper, we mitigate these limitations by developing a novel deep meta reinforcement learning (DMRL) algorithm. The DMRL combines meta strategy optimization with DRL, and trains policies modulated by a latent space that can quickly adapt to new scenarios. We test the developed DMRL algorithm on the IEEE 300-bus system. We demonstrate fast adaptation of the meta-trained DRL policies with latent variables to new operating conditions and scenarios using the proposed method and achieve superior performance compared to the state-of-the-art DRL and model predictive control (MPC) methods.

Electric Load and Power Forecasting Using Ensemble Gaussian Process Regression

Oct 09, 2019

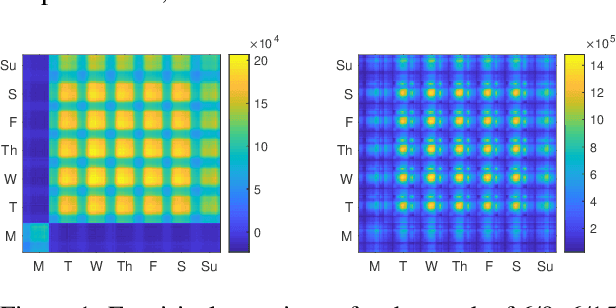

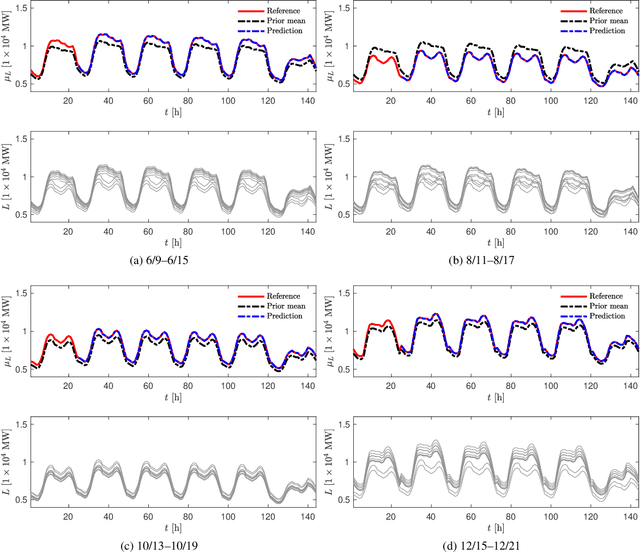

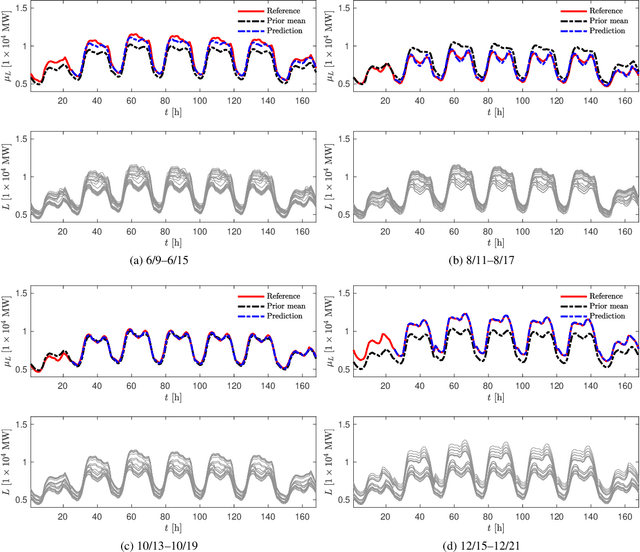

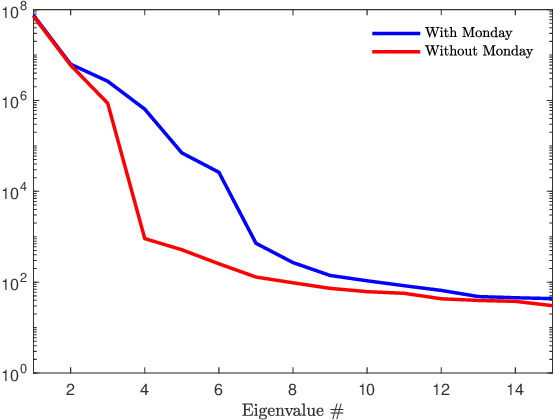

Abstract:We propose a new forecasting method for predicting load demand and generation scheduling. Accurate week-long forecasting of load demand and optimal power generation is critical for efficient operation of power grid systems. In this work, we use a synthetic data set describing a power grid with 700 buses and 134 generators over a 365-day period, with data synthetically generated at an hourly rate. The proposed approach for week-long forecasting is based on the Gaussian process regression (GPR) method, with prior covariance matrices of the quantities of interest (QoI) computed from ensembles formed by up to twenty preceding weeks of QoI observations. Then, we use these covariances within the GPR framework to forecast the QoIs for the following week. We demonstrate that the proposed ensemble GPR (EGPR) method is capable of accurately forecasting weekly total load demand and power generation profiles. The EGPR method is shown to outperform traditional forecasting methods including the standard GPR and autoregressive integrated moving average (ARIMA) methods.
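A minimal sketch of the ensemble-based prior described above: the prior mean and covariance are estimated from past weekly profiles, and a Gaussian conditional gives the forecast. Several things here are assumptions, not details from the paper: the toy three-point "weeks", the function names, the small `noise_var` term, and in particular the choice to condition on a single observed first value of the new week.

```python
def ensemble_stats(ensemble):
    """Sample mean and covariance across ensemble members (one row per week)."""
    n, dim = len(ensemble), len(ensemble[0])
    mean = [sum(row[j] for row in ensemble) / n for j in range(dim)]
    cov = [
        [
            sum((row[i] - mean[i]) * (row[j] - mean[j]) for row in ensemble) / (n - 1)
            for j in range(dim)
        ]
        for i in range(dim)
    ]
    return mean, cov


def condition_on_first(mean, cov, y0, noise_var=1e-6):
    """Gaussian conditional mean of entries 1.. given an observation of entry 0."""
    gain = [cov[i][0] / (cov[0][0] + noise_var) for i in range(1, len(mean))]
    return [mean[i + 1] + g * (y0 - mean[0]) for i, g in enumerate(gain)]


# Toy ensemble: each row is one past week's load at three time points.
past_weeks = [
    [10.0, 20.0, 15.0],
    [12.0, 24.0, 18.0],
    [11.0, 22.0, 16.5],
    [9.0, 18.0, 13.5],
]
mean, cov = ensemble_stats(past_weeks)

# Forecast the remaining two points of a new week whose first value is 13.0.
forecast = condition_on_first(mean, cov, y0=13.0)
```

Because every toy week is a scaled copy of the same profile, the forecast simply scales the profile to match the observed first value, giving values close to 26.0 and 19.5. Real hourly data would use 168-point weekly vectors and a full linear solve rather than a scalar gain.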
