Yan Du

Nurse-in-the-Loop Artificial Intelligence for Precision Management of Type 2 Diabetes in a Clinical Trial Utilizing Transfer-Learned Predictive Digital Twin

Jan 05, 2024

Abstract:Background: Type 2 diabetes (T2D) is a prevalent chronic disease with a significant risk of serious health complications and negative impacts on quality of life. Given the impact of individual characteristics and lifestyle on the treatment plan and patient outcomes, it is crucial to develop precise and personalized management strategies. Artificial intelligence (AI) holds great promise for combining patterns from various data sources with nurses' expertise to achieve optimal care. Methods: This is a 6-month ancillary study among T2D patients (n = 20, age = 57 ± 10). In the last three months, participants were randomly assigned either to an intervention group (AI, n = 10) that received daily AI-generated individualized feedback or to a control group (non-AI, n = 10) that did not receive the daily feedback. The study developed an online nurse-in-the-loop predictive control (ONLC) model that utilizes a predictive digital twin (PDT). The PDT was developed using a transfer-learning-based artificial neural network. The PDT was trained on participants' self-monitoring data (weight, food logs, physical activity, glucose) from the first three months, and the online control algorithm applied particle swarm optimization to identify impactful behavioral changes for maintaining the patient's glucose and weight levels over the next three months. The ONLC provided the intervention group with individualized feedback and recommendations via text messages. The PDT was re-trained weekly to improve its performance. Findings: The trained ONLC model achieved >=80% prediction accuracy across all patients while the model was tuned online. Participants in the intervention group exhibited a trend of improved daily steps and stable or improved total caloric and total carbohydrate intake, as recommended.
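
The abstract says the online control algorithm applied particle swarm optimization (PSO) over digital-twin predictions, but it gives no implementation details. The following is only a minimal sketch of generic PSO, not the authors' controller. The cost function `toy_cost`, its targets (8 thousand steps, 150 g of carbohydrate), the bounds, and the hyperparameters `w`, `c1`, `c2` are all illustrative assumptions that stand in for a real predictive model.

```python
import random


def pso_minimize(objective, bounds, n_particles=20, n_iters=100,
                 w=0.7, c1=1.5, c2=1.5, seed=0):
    """Generic particle swarm minimizer over box-constrained variables."""
    rng = random.Random(seed)
    dim = len(bounds)
    pos = [[rng.uniform(lo, hi) for lo, hi in bounds] for _ in range(n_particles)]
    vel = [[0.0] * dim for _ in range(n_particles)]
    best_pos = [p[:] for p in pos]               # personal best positions
    best_val = [objective(p) for p in pos]       # personal best costs
    g = min(range(n_particles), key=lambda i: best_val[i])
    g_pos, g_val = best_pos[g][:], best_val[g]   # swarm-wide best

    for _ in range(n_iters):
        for i in range(n_particles):
            for d in range(dim):
                r1, r2 = rng.random(), rng.random()
                # inertia + pull toward personal best + pull toward swarm best
                vel[i][d] = (w * vel[i][d]
                             + c1 * r1 * (best_pos[i][d] - pos[i][d])
                             + c2 * r2 * (g_pos[d] - pos[i][d]))
                lo, hi = bounds[d]
                pos[i][d] = min(max(pos[i][d] + vel[i][d], lo), hi)
            val = objective(pos[i])
            if val < best_val[i]:
                best_pos[i], best_val[i] = pos[i][:], val
                if val < g_val:
                    g_pos, g_val = pos[i][:], val
    return g_pos, g_val


def toy_cost(x):
    """Hypothetical stand-in for a digital twin's predicted deviation from target."""
    steps_k, carbs_g = x
    return (steps_k - 8.0) ** 2 + ((carbs_g - 150.0) / 50.0) ** 2


# Search over daily steps (thousands) and carbohydrate intake (grams).
best, cost = pso_minimize(toy_cost, bounds=[(0.0, 15.0), (50.0, 300.0)])
```

In a setting like the one described, the objective would be replaced by the trained model's prediction error against glucose and weight targets, and the decision variables by the behavioral changes under consideration.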

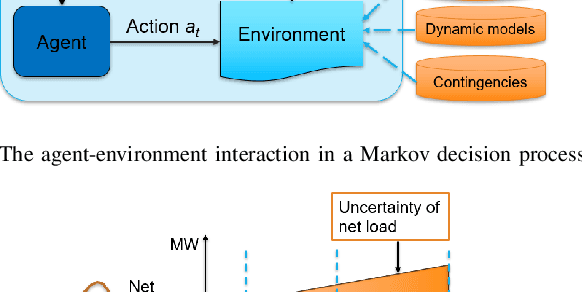

Efficient Learning of Voltage Control Strategies via Model-based Deep Reinforcement Learning

Dec 06, 2022

Abstract:This article proposes a model-based deep reinforcement learning (DRL) method to design emergency control strategies for short-term voltage stability problems in power systems. Recent advances show promising results for model-free DRL-based methods in power systems, but model-free methods suffer from poor sample efficiency and long training times, both of which are critical for making state-of-the-art DRL algorithms practically applicable. A DRL agent learns an optimal policy by trial and error while interacting with the real-world environment, and it is desirable to minimize the agent's direct interaction with the real-world power grid because of its safety-critical nature. Additionally, state-of-the-art DRL-based policies are mostly trained using a physics-based grid simulator, where dynamic simulation is computationally intensive and lowers training efficiency. We propose a novel model-based DRL framework in which a deep neural network (DNN)-based dynamic surrogate model, instead of a real-world power grid or physics-based simulation, is used within the policy learning framework, making the process faster and more sample-efficient. However, stabilizing model-based DRL is challenging because of the complex system dynamics of large-scale power systems. We address these issues by incorporating imitation learning to warm-start policy learning, reward shaping, and a multi-step surrogate loss. Finally, we achieved 97.5% sample efficiency and 87.7% training efficiency for an application to the IEEE 300-bus test system.
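
The abstract names a multi-step surrogate loss but does not define it. One common reading, assumed here, is that the surrogate is rolled out for several steps on its own predictions and penalized for the accumulated error, so that compounding drift shows up during training. The scalar linear dynamics, the function names, and the gains below are hypothetical and serve only to illustrate the idea.

```python
def multi_step_loss(surrogate, s0, actions, true_states):
    """Mean squared error over a rollout in which the surrogate
    is fed its own previous prediction rather than the true state."""
    pred = s0
    total = 0.0
    for action, target in zip(actions, true_states):
        pred = surrogate(pred, action)   # no reset to the true state
        total += (pred - target) ** 2
    return total / len(actions)


def true_step(state, action):
    """Toy 'simulator' dynamics (assumed, not a grid model)."""
    return 0.9 * state + 0.5 * action


def make_surrogate(gain):
    """Toy surrogate with an adjustable state gain."""
    return lambda state, action: gain * state + 0.5 * action


# Generate a short ground-truth trajectory from the toy simulator.
actions = [1.0, 0.0, -1.0, 0.5]
states = []
s = 1.0
for a in actions:
    s = true_step(s, a)
    states.append(s)

exact_loss = multi_step_loss(make_surrogate(0.9), 1.0, actions, states)
biased_loss = multi_step_loss(make_surrogate(0.8), 1.0, actions, states)
```

A surrogate that matches the simulator gives zero loss, while a slightly biased one accumulates error across the rollout, which a one-step loss would understate.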

Physics-informed Evolutionary Strategy based Control for Mitigating Delayed Voltage Recovery

Nov 29, 2021

Abstract:In this work, we propose a novel data-driven, real-time power system voltage control method based on the physics-informed guided meta evolutionary strategy (ES). The main objective is to quickly provide an adaptive control strategy to mitigate the fault-induced delayed voltage recovery (FIDVR) problem. Reinforcement learning (RL) methods have been developed for the same or similar challenging control problems, but they suffer from training inefficiency and a lack of robustness in "corner or unseen" scenarios. On the other hand, extensive physical knowledge has been developed in power systems, but little of it has been leveraged in learning-based approaches. To address these challenges, we introduce the trainable action mask technique for flexibly embedding physical knowledge into RL models to rule out unnecessary or unfavorable actions, and we achieve notable improvements in sample efficiency, control performance, and robustness. Furthermore, our method leverages past learning experience to derive a surrogate gradient that guides and accelerates the exploration process in training. Case studies on the IEEE 300-bus system and comparisons with other state-of-the-art benchmark methods demonstrate the effectiveness and advantages of our method.
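
The abstract describes an action mask that rules out unnecessary or unfavorable actions. As a rough illustration of the masking step only, the sketch below applies a fixed boolean mask to policy logits before a softmax. The paper's mask is trainable, which is not reproduced here, and the function name, logits, and mask values are assumptions.

```python
import math


def masked_softmax(logits, mask):
    """Softmax over allowed actions only; masked actions get probability 0."""
    if not any(mask):
        raise ValueError("at least one action must be allowed")
    # Subtract the largest allowed logit for numerical stability.
    peak = max(l for l, ok in zip(logits, mask) if ok)
    exps = [math.exp(l - peak) if ok else 0.0 for l, ok in zip(logits, mask)]
    total = sum(exps)
    return [e / total for e in exps]


# Hypothetical example: three candidate control actions, the second
# one ruled out by physical knowledge.
logits = [2.0, 0.5, 1.0]
mask = [True, False, True]
probs = masked_softmax(logits, mask)
```

Because masked actions never receive probability mass, the agent does not spend samples exploring them, which is one plausible source of the sample-efficiency gain the abstract reports.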

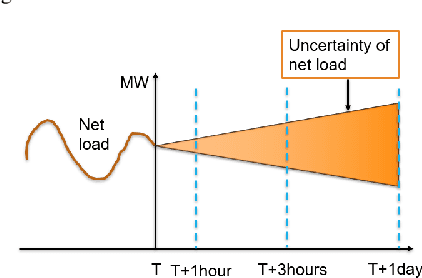

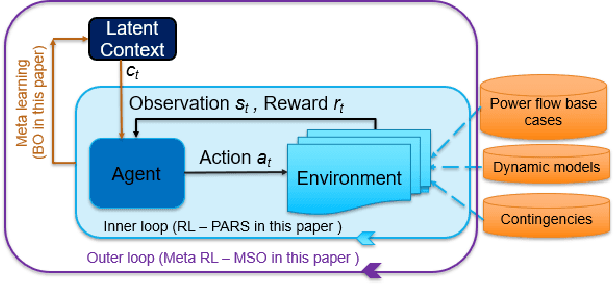

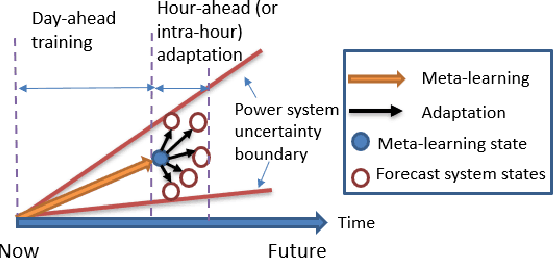

Learning and Fast Adaptation for Grid Emergency Control via Deep Meta Reinforcement Learning

Jan 13, 2021

Abstract:As power systems undergo a significant transformation, with more uncertainties, less inertia, and operation closer to their limits, there is an increasing risk of large outages. Thus, there is an imperative need to enhance grid emergency control to maintain system reliability and security. Towards this end, great progress has been made in recent years in developing deep reinforcement learning (DRL)-based grid control solutions. However, existing DRL-based solutions have two main limitations: 1) they cannot cope well with a wide range of grid operation conditions, system parameters, and contingencies; 2) they generally lack the ability to adapt quickly to new grid operation conditions, system parameters, and contingencies, limiting their applicability to real-world problems. In this paper, we mitigate these limitations by developing a novel deep meta reinforcement learning (DMRL) algorithm. The DMRL combines meta strategy optimization with DRL and trains policies modulated by a latent space that can quickly adapt to new scenarios. We test the developed DMRL algorithm on the IEEE 300-bus system. We demonstrate fast adaptation of the meta-trained DRL policies with latent variables to new operating conditions and scenarios using the proposed method, and we achieve superior performance compared with state-of-the-art DRL and model predictive control (MPC) methods.
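
The abstract describes policies modulated by a latent variable that can be adapted quickly to new scenarios. The toy sketch below illustrates one way such adaptation can work: the policy is kept frozen and only the latent is searched for the value that maximizes return in an unseen environment. The one-dimensional environment, the policy form, and the exhaustive grid search are assumptions chosen for brevity and are not the DMRL algorithm itself.

```python
def policy(obs, latent):
    """Frozen policy whose action is shifted by a scalar latent."""
    return -obs + latent


def episode_return(latent, bias, steps=20):
    """Toy environment: next_state = state + action + bias, reward = -state^2.
    `bias` plays the role of an unseen operating condition."""
    state, total = 1.0, 0.0
    for _ in range(steps):
        state = state + policy(state, latent) + bias
        total -= state ** 2
    return total


def adapt_latent(bias, candidates):
    """Pick the latent with the highest return; policy weights are untouched."""
    return max(candidates, key=lambda z: episode_return(z, bias))


# Candidate latents on a grid from -2.0 to 2.0 in steps of 0.05.
candidates = [-2.0 + 0.05 * i for i in range(81)]
new_bias = 0.7
best_latent = adapt_latent(new_bias, candidates)
```

Here the best latent cancels the new bias, so the frozen policy recovers good performance without retraining. A practical method would replace the grid with a sample-efficient optimizer over a higher-dimensional latent space.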
