Rana Azzam

SlipNet: Slip Cost Map for Autonomous Navigation on Heterogeneous Deformable Terrains

Sep 03, 2024

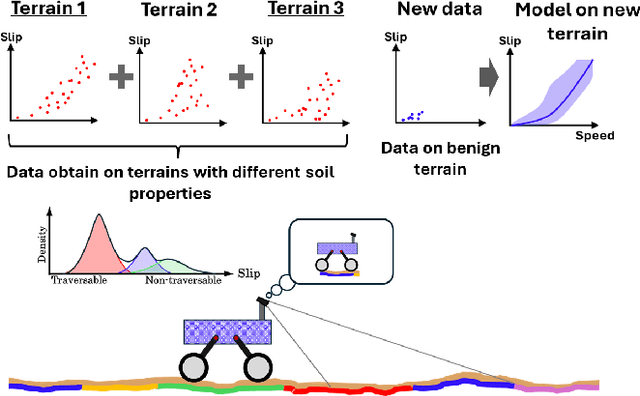

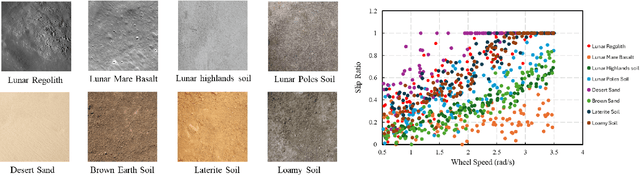

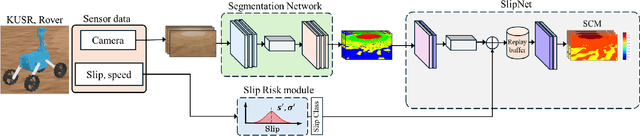

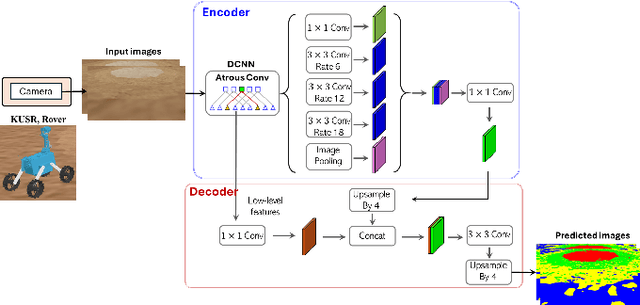

Abstract: Autonomous space rovers face significant challenges when navigating deformable and heterogeneous terrains during space exploration. The variability in terrain types, influenced by different soil properties, often results in severe wheel slip, compromising navigation efficiency and potentially leading to entrapment. This paper proposes SlipNet, an approach for predicting slip in segmented regions of heterogeneous deformable terrain surfaces to enhance navigation algorithms. Unlike previous methods, SlipNet does not depend on prior terrain classification; it reduces prediction errors and misclassifications through dynamic terrain segmentation and slip assignment during deployment while maintaining a history of terrain classes. This adaptive reclassification mechanism improves prediction performance. Extensive simulation results demonstrate that our model (DeepLab v3+ + SlipNet) achieves better slip prediction performance than TerrainNet, with a lower mean absolute error (MAE) in five terrain sample tests.
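The MAE metric named in the abstract can be illustrated with a minimal sketch. The function name `mean_absolute_error` and the sample slip values below are hypothetical and are not taken from the paper; the sketch only assumes that slip is reported as one scalar per terrain sample.

```python
def mean_absolute_error(predicted, measured):
    """Average absolute difference between predicted and measured slip values."""
    if len(predicted) != len(measured) or len(predicted) == 0:
        raise ValueError("inputs must be non-empty and of equal length")
    total = sum(abs(p - m) for p, m in zip(predicted, measured))
    return total / len(predicted)


# Hypothetical slip ratios for two terrain samples (illustrative values only).
predicted_slip = [0.1, 0.5]
measured_slip = [0.2, 0.3]
mae = mean_absolute_error(predicted_slip, measured_slip)
```

A lower value means the predicted slip is closer to the measured slip on average, which is the sense in which the abstract compares the two models.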

Neuromorphic Vision-based Motion Segmentation with Graph Transformer Neural Network

Apr 16, 2024

Abstract: Moving object segmentation is critical to interpret scene dynamics for robotic navigation systems in challenging environments. Neuromorphic vision sensors are tailored for motion perception due to their asynchronous nature, high temporal resolution, and reduced power consumption. However, their unconventional output requires novel perception paradigms to leverage their spatially sparse and temporally dense nature. In this work, we propose a novel event-based motion segmentation algorithm using a Graph Transformer Neural Network, dubbed GTNN. Our proposed algorithm processes event streams as 3D graphs through a series of nonlinear transformations to unveil local and global spatiotemporal correlations between events. Based on these correlations, events belonging to moving objects are segmented from the background without prior knowledge of the dynamic scene geometry. The algorithm is trained on publicly available datasets including MOD, EV-IMO, and EV-IMO2 using the proposed training scheme to facilitate efficient training on extensive datasets. Moreover, we introduce the Dynamic Object Mask-aware Event Labeling (DOMEL) approach for generating approximate ground-truth labels for event-based motion segmentation datasets. We use DOMEL to label our own recorded Event dataset for Motion Segmentation (EMS-DOMEL), which we release to the public for further research and benchmarking. Rigorous experiments are conducted on several unseen publicly available datasets, where the results reveal that GTNN outperforms state-of-the-art methods in the presence of dynamic background variations, motion patterns, and multiple dynamic objects with varying sizes and velocities. GTNN achieves significant performance gains, with an average increase of 9.4% and 4.5% in terms of motion segmentation accuracy (IoU%) and detection rate (DR%), respectively.
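The IoU figure quoted in the abstract can be illustrated with a minimal sketch of intersection over union computed on per-event binary labels. The per-event formulation, the function name `event_iou`, and the sample labels are assumptions made for illustration; the abstract does not describe the paper's exact evaluation protocol.

```python
def event_iou(predicted, truth):
    """Intersection over union for two equal-length sequences of 0/1 event labels.

    A label of 1 marks an event assigned to a moving object, 0 marks background.
    """
    if len(predicted) != len(truth):
        raise ValueError("label sequences must have equal length")
    intersection = sum(1 for p, t in zip(predicted, truth) if p and t)
    union = sum(1 for p, t in zip(predicted, truth) if p or t)
    # When neither sequence marks any moving event, treat the match as perfect.
    return intersection / union if union else 1.0


# Hypothetical labels for four events (illustrative values only).
predicted_labels = [1, 1, 0, 0]
truth_labels = [1, 0, 1, 0]
score = event_iou(predicted_labels, truth_labels)  # one shared event out of three marked
```

Multiplying the returned fraction by 100 gives a percentage comparable in form to the IoU% reported above.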

Design of Dynamics Invariant LSTM for Touch Based Human-UAV Interaction Detection

Jul 12, 2022

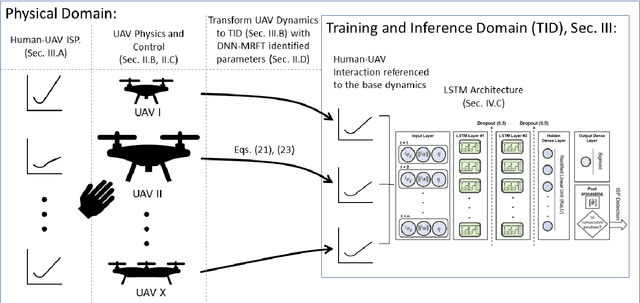

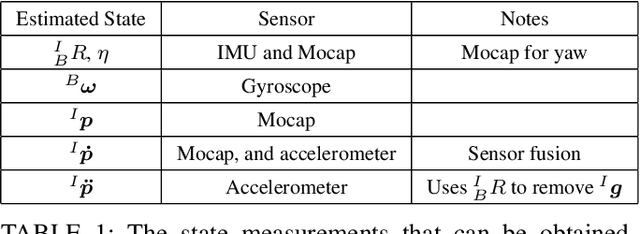

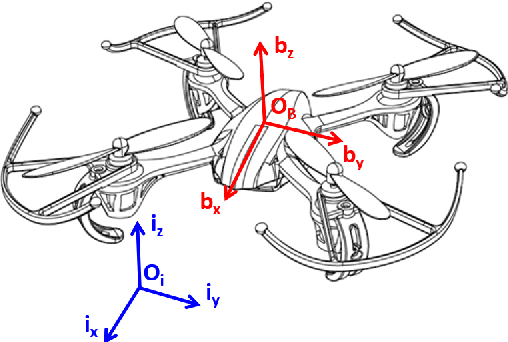

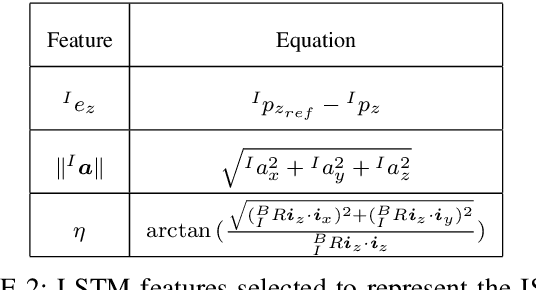

Abstract: The field of Unmanned Aerial Vehicles (UAVs) has reached a high level of maturity in the last few years. Hence, bringing such platforms from closed labs to day-to-day interactions with humans is important for the commercialization of UAVs. One particular human-UAV scenario of interest for this paper is the payload handover scheme, where a UAV hands over a payload to a human upon their request. In this scope, this paper presents a novel real-time human-UAV interaction detection approach, where a long short-term memory (LSTM)-based neural network is developed to detect state profiles resulting from human interaction dynamics. A novel data pre-processing technique is presented; this technique leverages estimated process parameters of training and testing UAVs to build dynamics invariant testing data. The proposed detection algorithm is lightweight and thus can be deployed in real-time using off-the-shelf UAV platforms; in addition, it depends solely on inertial and position measurements present on any classical UAV platform. The proposed approach is demonstrated on a payload handover task between multirotor UAVs and humans. Training and testing data were collected using real-time experiments. The detection approach has achieved an accuracy of 96%, giving no false positives even in the presence of external wind disturbances, and when deployed and tested on two different UAVs.

A Neuromorphic Vision-Based Measurement for Robust Relative Localization in Future Space Exploration Missions

Jun 23, 2022

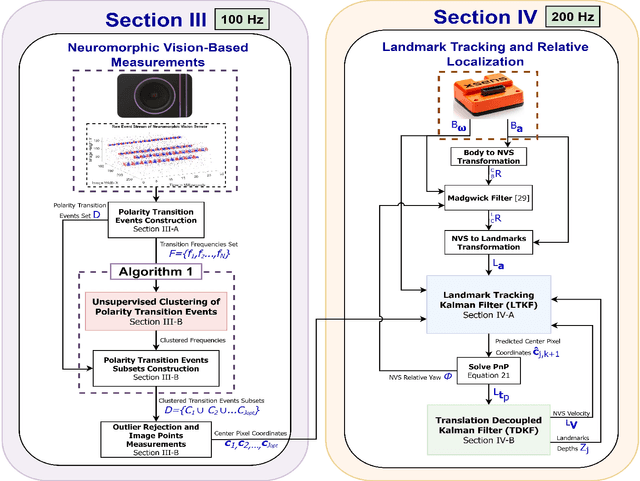

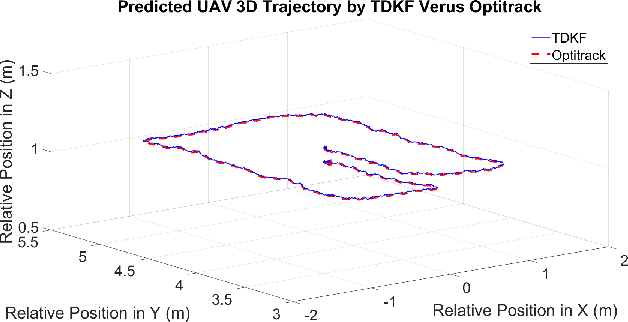

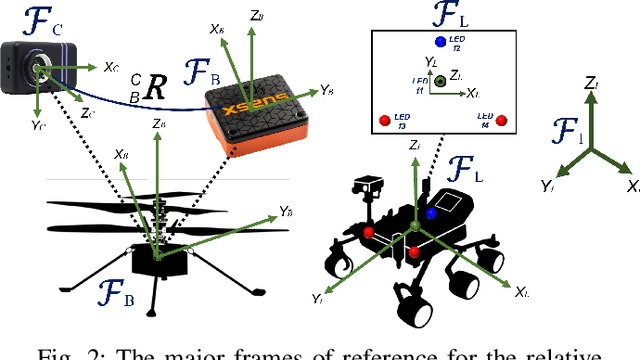

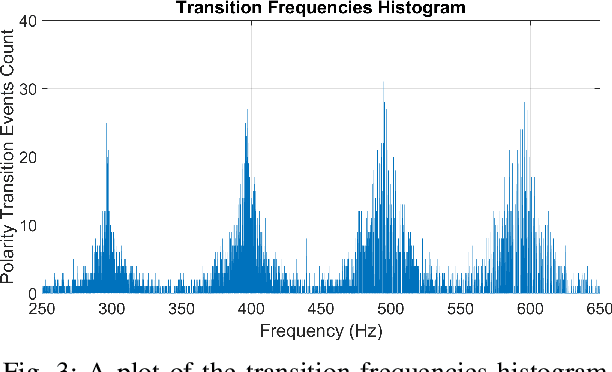

Abstract: Space exploration has witnessed revolutionary changes upon the landing of the Perseverance Rover on the Martian surface and the demonstration of the first flight beyond Earth by the Mars helicopter, Ingenuity. During their mission on Mars, Perseverance Rover and Ingenuity collaboratively explore the Martian surface, where Ingenuity scouts terrain information for the rover's safe traversability. Hence, determining the relative poses between the two platforms is of paramount importance for the success of this mission. Driven by this necessity, this work proposes a robust relative localization system based on a fusion of neuromorphic vision-based measurements (NVBMs) and inertial measurements. The emergence of neuromorphic vision triggered a paradigm shift in the computer vision community, due to its unique working principle delineated with asynchronous events triggered by variations of light intensities occurring in the scene. This implies that observations cannot be acquired in static scenes due to illumination invariance. To circumvent this limitation, high frequency active landmarks are inserted in the scene to guarantee consistent event firing. These landmarks are adopted as salient features to facilitate relative localization. A novel event-based landmark identification algorithm using Gaussian Mixture Models (GMM) is developed for matching the landmark correspondences that formulate our NVBMs. The NVBMs are fused with inertial measurements in the proposed state estimators, the landmark tracking Kalman filter (LTKF) and the translation decoupled Kalman filter (TDKF), for landmark tracking and relative localization, respectively. The proposed system was tested in a variety of experiments and has outperformed state-of-the-art approaches in accuracy and range.

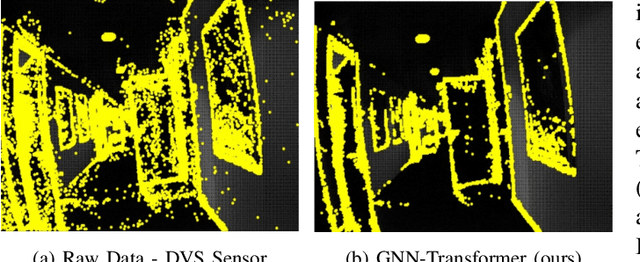

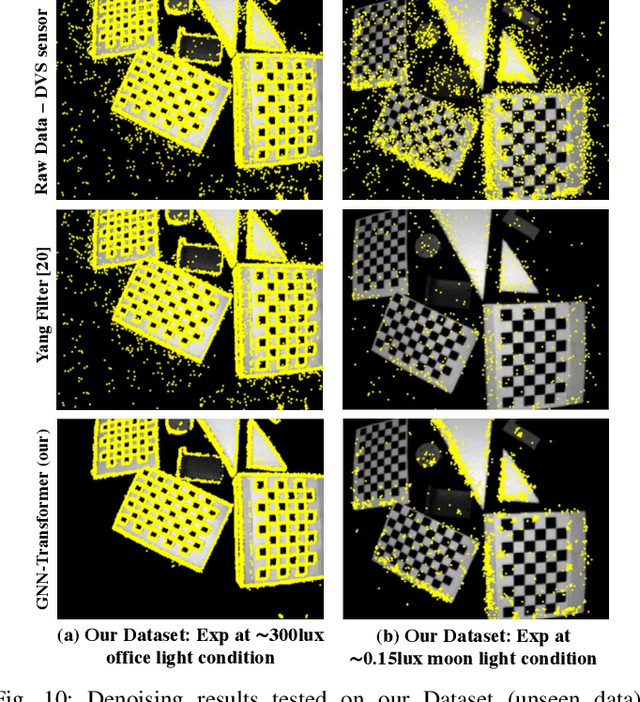

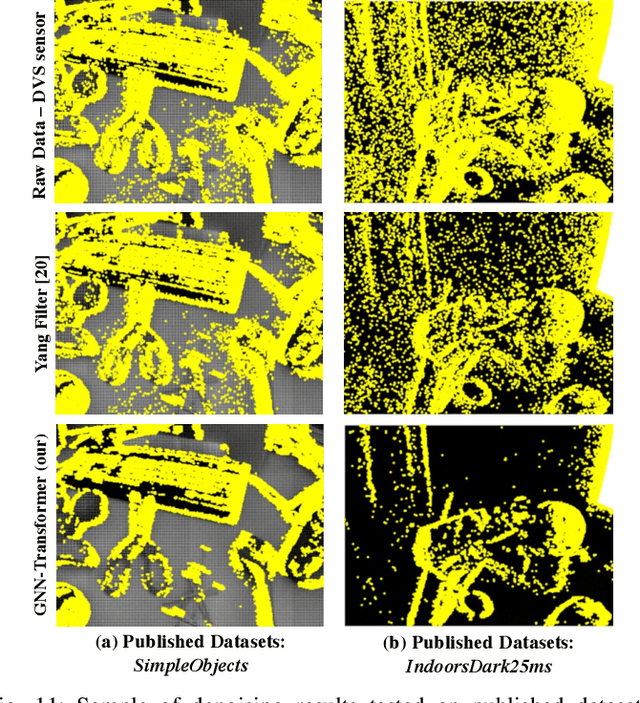

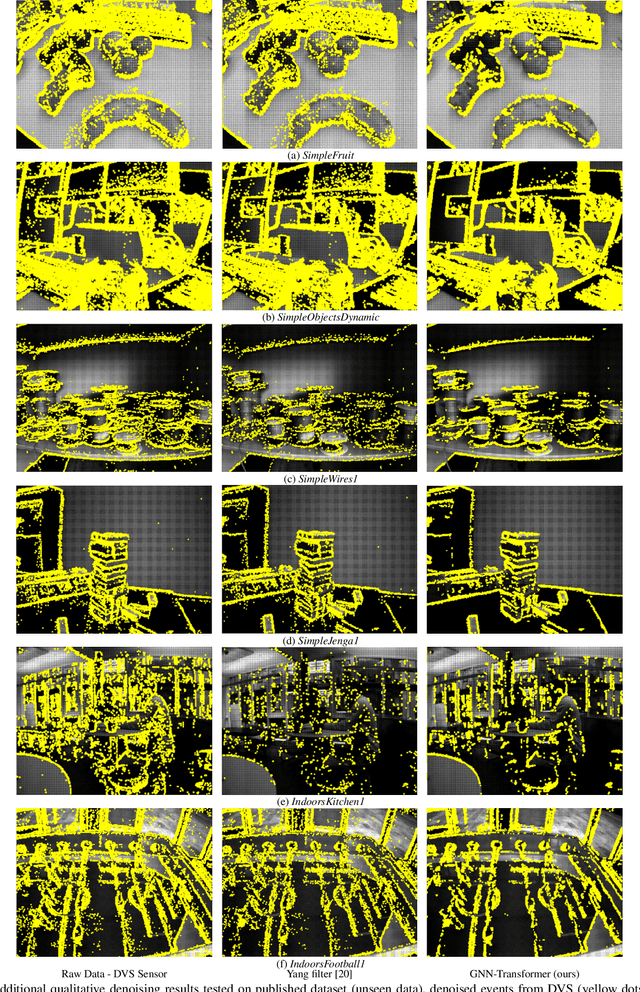

Neuromorphic Camera Denoising using Graph Neural Network-driven Transformers

Dec 17, 2021

Abstract: Neuromorphic vision is a bio-inspired technology that has triggered a paradigm shift in the computer-vision community and is serving as a key enabler for a multitude of applications. This technology has offered significant advantages including reduced power consumption, reduced processing needs, and communication speed-ups. However, neuromorphic cameras suffer from significant amounts of measurement noise. This noise deteriorates the performance of neuromorphic event-based perception and navigation algorithms. In this paper, we propose a novel noise filtration algorithm to eliminate events which do not represent real log-intensity variations in the observed scene. We employ a Graph Neural Network (GNN)-driven transformer algorithm, called GNN-Transformer, to classify every active event pixel in the raw stream as either a real log-intensity variation or noise. Within the GNN, a message-passing framework, called EventConv, is carried out to reflect the spatiotemporal correlation among the events, while preserving their asynchronous nature. We also introduce the Known-object Ground-Truth Labeling (KoGTL) approach for generating approximate ground truth labels of event streams under various illumination conditions. KoGTL is used to generate labeled datasets from experiments recorded in challenging lighting conditions. These datasets are used to train and extensively test our proposed algorithm. When tested on unseen datasets, the proposed algorithm outperforms existing methods by 12% in terms of filtration accuracy. Additional tests are also conducted on publicly available datasets to demonstrate the generalization capabilities of the proposed algorithm in the presence of illumination variations and different motion dynamics. Compared to existing solutions, qualitative results verified the superior capability of the proposed algorithm to eliminate noise while preserving meaningful scene events.
