Igor Boiko

Fuzzy Ensembles of Reinforcement Learning Policies for Robotic Systems with Varied Parameters

Nov 08, 2023

Abstract: Reinforcement Learning (RL) is an emerging approach for controlling dynamical systems for which classical control approaches are inapplicable or insufficient. However, the resulting policies may not generalize to variations in the system parameters. This paper presents a powerful yet simple algorithm that facilitates collaboration between RL agents trained independently to perform the same task under different system parameters. Because the agents are independent, they can be trained in parallel on multi-core processors. Two examples demonstrate the effectiveness of the proposed technique. The main demonstration is a tracking problem for a quadrotor with a slung load, performed in a real-time experimental setup. The resulting ensemble outperforms the individual policies by reducing the tracking RMSE. The robustness of the ensemble is also verified against wind disturbance.
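The abstract does not spell out how the agents collaborate, so the following is only a minimal sketch of one common fuzzy-blending scheme, not the paper's algorithm. It assumes the varying parameter is known or estimated at run time, that each policy was trained at a known parameter value, and that triangular membership functions are used. All names (`triangular_membership`, `fuzzy_blend`, the toy linear policies) are hypothetical.

```python
def triangular_membership(value, center, width):
    """Degree (0..1) to which `value` belongs to the fuzzy set centered at `center`."""
    return max(0.0, 1.0 - abs(value - center) / width)


def fuzzy_blend(policies, centers, width, param, observation):
    """Blend the actions of independently trained policies.

    policies: callables mapping an observation to a scalar action
    centers:  parameter value each policy was trained at (assumption)
    width:    half-width of each triangular membership function (assumption)
    param:    current (known or estimated) system parameter
    """
    weights = [triangular_membership(param, c, width) for c in centers]
    total = sum(weights)
    if total == 0.0:
        # Parameter lies outside every fuzzy set: fall back to the nearest policy.
        nearest = min(range(len(centers)), key=lambda i: abs(param - centers[i]))
        return policies[nearest](observation)
    blended = sum(w * p(observation) for w, p in zip(weights, policies))
    return blended / total


# Toy stand-ins for trained policies: simple proportional laws.
policies = [lambda obs: 1.0 * obs, lambda obs: 3.0 * obs]
centers = [0.5, 1.0]  # hypothetical parameter values used during training

action_mid = fuzzy_blend(policies, centers, 0.5, 0.75, 2.0)   # halfway between both
action_low = fuzzy_blend(policies, centers, 0.5, 0.5, 2.0)    # exactly at first center
action_out = fuzzy_blend(policies, centers, 0.5, 2.0, 2.0)    # outside both sets
print(action_mid, action_low, action_out)
```

Since each policy is only queried, never retrained, a blend of this kind leaves the agents free to be trained separately and in parallel.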

The Role of Time Delay in Sim2real Transfer of Reinforcement Learning for Cyber-Physical Systems

Sep 30, 2022

Abstract: This paper analyzes the simulation-to-reality gap in reinforcement learning (RL) for cyber-physical systems with fractional delays (i.e., delays that are non-integer multiples of the sampling period). The consideration of fractional delay has important implications for the nature of the cyber-physical system considered. Systems with delays are non-Markovian, and the system state vector needs to be extended to make the system Markovian. We show that this is not possible when the delay is in the output, in which case the problem is always non-Markovian. Based on this analysis, a sampling scheme is proposed that results in efficient RL training and agents that perform well in realistic multirotor unmanned aerial vehicle simulations. We demonstrate that the resulting agents do not produce excessive oscillations, unlike RL agents trained without time delay in the model.
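To make the idea of a fractional input delay and state extension concrete, here is a small sketch under stated assumptions. It is not the paper's sampling scheme. The plant is assumed to be a pure integrator driven through a zero-order hold, and the helper names `split_delay` and `make_integrator_step` are hypothetical. The delay is written as `tau = (d + f) * ts`, with `d` whole samples and a fraction `0 <= f < 1`. The next state then depends on two past inputs, which must be carried alongside `x` for the transition to be Markovian.

```python
import math


def split_delay(tau, ts):
    """Split a delay into whole samples d and a fractional part f (0 <= f < 1)."""
    ratio = tau / ts
    d = math.floor(ratio)
    return d, ratio - d


def make_integrator_step(tau, ts):
    """Exact one-step update of dx/dt = u(t - tau) with a zero-order-hold input."""
    d, f = split_delay(tau, ts)

    def step(x, past_u, u):
        # past_u = (u[k-1], ..., u[k-d-1]), newest first: the extended state.
        window = [u] + list(past_u)  # u[k], u[k-1], ..., u[k-d-1]
        # During one sampling period the plant sees u[k-d-1] for a fraction f
        # of the period and u[k-d] for the remaining (1 - f).
        x_next = x + ts * ((1.0 - f) * window[d] + f * window[d + 1])
        return x_next, tuple(window[: d + 1])

    return step, d


ts, tau = 0.01, 0.025            # delay of 2.5 sampling periods (assumed values)
step, d = make_integrator_step(tau, ts)

x, past_u = 0.0, (0.0,) * (d + 1)
for _ in range(10):              # unit step input applied from k = 0
    x, past_u = step(x, past_u, 1.0)

print(d, x)                      # x should match the continuous-time value t - tau
```

In this toy case an agent observing only `x` could not predict the next state, while an agent observing `(x, past_u)` could. This is the sense in which an input delay can be absorbed by extending the state.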

Analysis of the Effect of Time Delay for Unmanned Aerial Vehicles with Applications to Vision Based Navigation

Sep 05, 2022

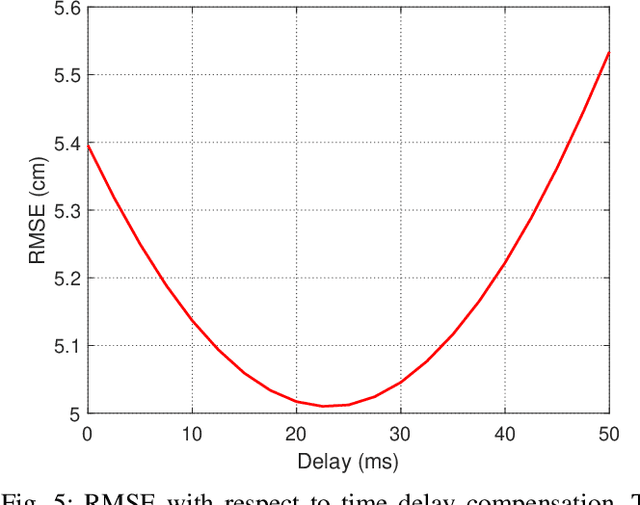

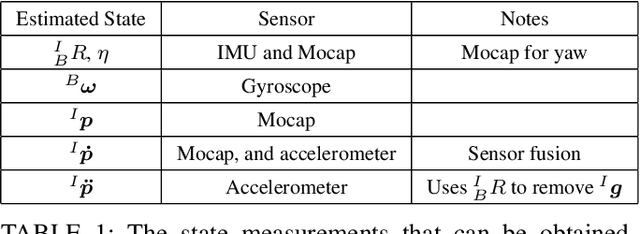

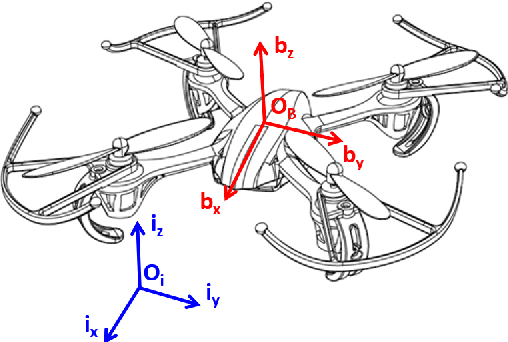

Abstract: In this paper, we analyze the effect of time delay dynamics on controller design for Unmanned Aerial Vehicles (UAVs) with vision-based navigation. Time delay is an inevitable phenomenon in cyber-physical systems and has important implications for controller design and trajectory generation for UAVs. The impact of time delay on UAV dynamics increases with the use of the slower vision-based navigation stack. We show that the existing models in the literature, which exclude time delay, are unsuitable for controller tuning since a trivial solution for minimizing an error cost functional always exists. The trivial solution that we identify suggests the use of infinite controller gains to achieve optimal performance, which contradicts practical findings. We avoid such shortcomings by introducing a novel nonlinear time delay model for UAVs, and then obtain a set of linear decoupled models corresponding to each of the UAV control loops. The cost functional of the linearized time delay model of angular and altitude dynamics is analyzed, and in contrast to the delay-free models, we show the existence of finite optimal controller parameters. We show experimentally that the proposed time delay model accurately represents system stability limits. By accounting for time delay, we achieve a tracking RMSE of 5.01 cm when tracking a lemniscate trajectory with a peak velocity of 2.09 m/s using visual odometry (VO) based UAV navigation, which is on par with the state of the art.
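The contrast between delay-free and delayed models can be illustrated with a toy loop that is much simpler than the UAV models in the paper. The sketch below assumes an integrator plant under proportional control, with the integral of squared error (ISE) of a unit step response as the cost. The function name `ise_step_response` and all numeric values are assumptions chosen for illustration. Without delay, the cost keeps falling as the gain grows. With delay, high gains destabilize the loop, so the minimum occurs at a finite gain.

```python
def ise_step_response(gain, delay, dt=1e-3, horizon=10.0):
    """ISE of a unit step response for dx/dt = u(t - delay), u = gain * (1 - x).

    Forward-Euler simulation; `delay` is rounded to a whole number of steps.
    """
    n_steps = int(round(horizon / dt))
    n_delay = int(round(delay / dt))
    x = 0.0
    u_hist = [0.0] * (n_delay + 1)   # control is zero before t = 0
    ise = 0.0
    for _ in range(n_steps):
        error = 1.0 - x
        ise += error * error * dt
        u_hist.append(gain * error)
        x += u_hist[-1 - n_delay] * dt   # apply the control issued n_delay steps ago
    return ise


gains = [1.0, 2.0, 5.0, 10.0, 20.0]
ise_free = [ise_step_response(k, delay=0.0) for k in gains]
ise_delayed = [ise_step_response(k, delay=0.1) for k in gains]

for k, j0, j1 in zip(gains, ise_free, ise_delayed):
    print(f"gain={k:5.1f}  ISE(no delay)={j0:.4f}  ISE(delay=0.1 s)={j1:.4g}")
```

For this toy loop the delayed system loses stability once `gain * delay` exceeds pi/2, which is why the largest gain in the sweep gives a very large cost in the delayed case.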

Design of Dynamics Invariant LSTM for Touch Based Human-UAV Interaction Detection

Jul 12, 2022

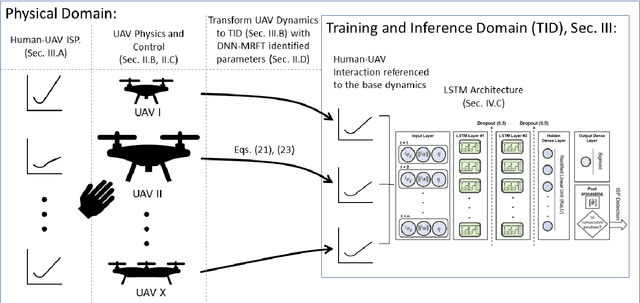

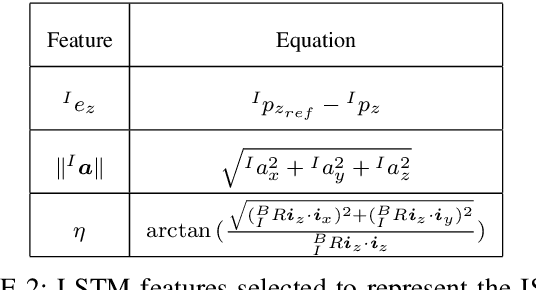

Abstract: The field of Unmanned Aerial Vehicles (UAVs) has reached a high level of maturity in the last few years. Hence, bringing such platforms from closed labs to day-to-day interactions with humans is important for the commercialization of UAVs. One particular human-UAV scenario of interest for this paper is the payload handover scheme, where a UAV hands over a payload to a human upon their request. In this scope, this paper presents a novel real-time human-UAV interaction detection approach, in which a Long Short-Term Memory (LSTM) based neural network is developed to detect state profiles resulting from human interaction dynamics. A novel data pre-processing technique is presented; this technique leverages estimated process parameters of training and testing UAVs to build dynamics-invariant testing data. The proposed detection algorithm is lightweight and thus can be deployed in real time on off-the-shelf UAV platforms; in addition, it depends solely on inertial and position measurements present on any classical UAV platform. The proposed approach is demonstrated on a payload handover task between multirotor UAVs and humans. Training and testing data were collected using real-time experiments. The detection approach achieved an accuracy of 96%, giving no false positives even in the presence of external wind disturbances and when deployed and tested on two different UAVs.
