Yusra Alkendi

Neuromorphic Vision-based Motion Segmentation with Graph Transformer Neural Network

Apr 16, 2024

Abstract: Moving object segmentation is critical for interpreting scene dynamics in robotic navigation systems operating in challenging environments. Neuromorphic vision sensors are well suited to motion perception because of their asynchronous nature, high temporal resolution, and reduced power consumption. However, their unconventional output requires novel perception paradigms that exploit its spatially sparse and temporally dense nature. In this work, we propose a novel event-based motion segmentation algorithm using a Graph Transformer Neural Network, dubbed GTNN. The proposed algorithm processes event streams as 3D graphs through a series of nonlinear transformations to unveil local and global spatiotemporal correlations between events. Based on these correlations, events belonging to moving objects are segmented from the background without prior knowledge of the dynamic scene geometry. The algorithm is trained on publicly available datasets, including MOD, EV-IMO, and EV-IMO2, using a proposed training scheme that facilitates efficient training on extensive datasets. Moreover, we introduce the Dynamic Object Mask-aware Event Labeling (DOMEL) approach for generating approximate ground-truth labels for event-based motion segmentation datasets. We use DOMEL to label our own recorded Event dataset for Motion Segmentation (EMS-DOMEL), which we release to the public for further research and benchmarking. Rigorous experiments are conducted on several unseen publicly available datasets, and the results show that GTNN outperforms state-of-the-art methods in the presence of dynamic background variations, varied motion patterns, and multiple dynamic objects with varying sizes and velocities. GTNN achieves average gains of 9.4% in motion segmentation accuracy (IoU%) and 4.5% in detection rate (DR%).
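The IoU figure quoted above can be illustrated with a minimal sketch that treats motion segmentation as per-event binary labeling (1 for a moving-object event, 0 for background). The function name `event_iou` and the convention for two empty masks are assumptions made for illustration; the abstract does not specify how the authors compute the metric.

```python
def event_iou(predicted, ground_truth):
    """Intersection over union between two equal-length sequences of 0/1 event labels.

    Hypothetical helper for illustration only; not taken from the GTNN code base.
    """
    if len(predicted) != len(ground_truth):
        raise ValueError("label sequences must have the same length")
    # Events labeled as moving in both sequences.
    intersection = sum(1 for p, g in zip(predicted, ground_truth) if p and g)
    # Events labeled as moving in at least one sequence.
    union = sum(1 for p, g in zip(predicted, ground_truth) if p or g)
    # Assumed convention: two all-background label sets agree perfectly.
    return intersection / union if union else 1.0


if __name__ == "__main__":
    print(event_iou([1, 1, 0, 0], [1, 0, 1, 0]))
```

In this toy call, one event is labeled as moving in both sequences and three events are labeled as moving in at least one, so the score is one third.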

Asynchronous Events-based Panoptic Segmentation using Graph Mixer Neural Network

May 05, 2023

Abstract: In robotic grasping, object segmentation is difficult under dynamic conditions such as real-time operation, occlusion, low lighting, motion blur, and object size variability. In response to these challenges, we propose the Graph Mixer Neural Network, which includes a novel collaborative contextual mixing layer applied to 3D event graphs formed from asynchronous events. The proposed layer is designed to spread spatiotemporal correlation within an event graph at four nearest-neighbor levels in parallel. We evaluate the effectiveness of the proposed method on the Event-based Segmentation (ESD) Dataset, which covers five image degradation challenges (occlusion, blur, brightness, trajectory, and scale variance) as well as segmentation of known and unknown objects. The results show that the proposed approach outperforms state-of-the-art methods in terms of mean intersection over union and pixel accuracy. Code available at: https://github.com/sanket0707/GNN-Mixer.git
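As a rough illustration of what a 3D event graph over asynchronous events can look like, the sketch below links each event, taken as a point (x, y, t), to its k nearest neighbors in space-time. The function name `build_event_graph`, the brute-force search, and the `time_scale` factor that balances pixel units against time units are all assumptions made for illustration; the abstract does not describe the authors' graph construction, and their released code should be consulted for the actual method.

```python
import math


def build_event_graph(events, k=4, time_scale=1.0):
    """Build a k-nearest-neighbor graph over events given as (x, y, t) tuples.

    Hypothetical sketch: returns a dict mapping each event index to the
    indices of its k closest events in scaled (x, y, t) space.
    """
    # Scale the time axis so spatial and temporal distances are comparable.
    points = [(x, y, t * time_scale) for x, y, t in events]
    graph = {}
    for i, p in enumerate(points):
        # Brute-force O(n^2) search; fine for a small illustrative example.
        distances = sorted(
            (math.dist(p, q), j) for j, q in enumerate(points) if j != i
        )
        graph[i] = [j for _, j in distances[:k]]
    return graph


if __name__ == "__main__":
    toy_events = [(0, 0, 0.0), (1, 0, 0.0), (0, 1, 0.0), (10, 10, 0.0)]
    print(build_event_graph(toy_events, k=2))
```

A spatial index such as a k-d tree would replace the brute-force search for realistic event counts; the quadratic loop is kept here only for readability.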

Neuromorphic Camera Denoising using Graph Neural Network-driven Transformers

Dec 17, 2021

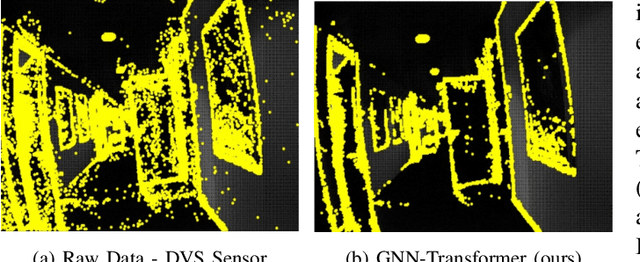

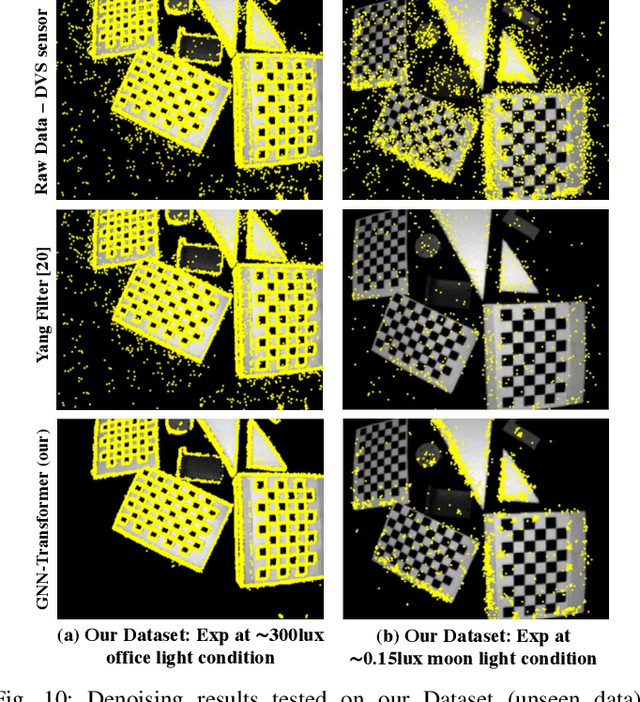

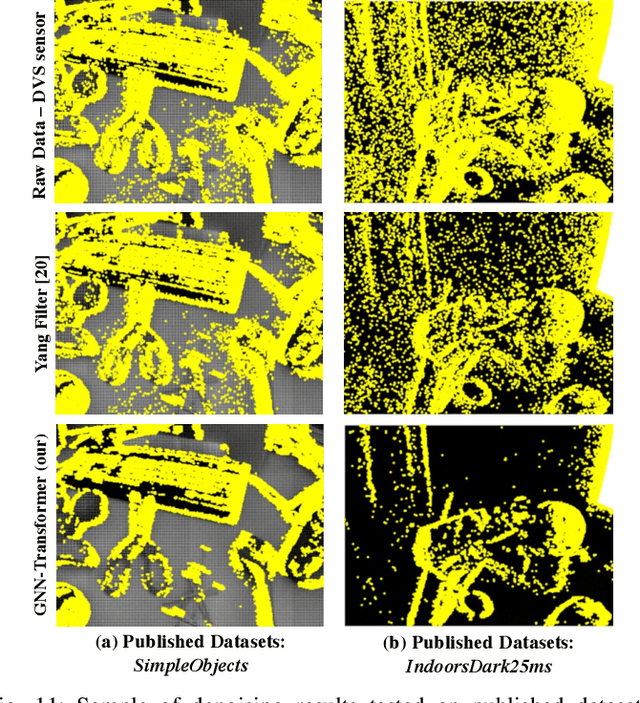

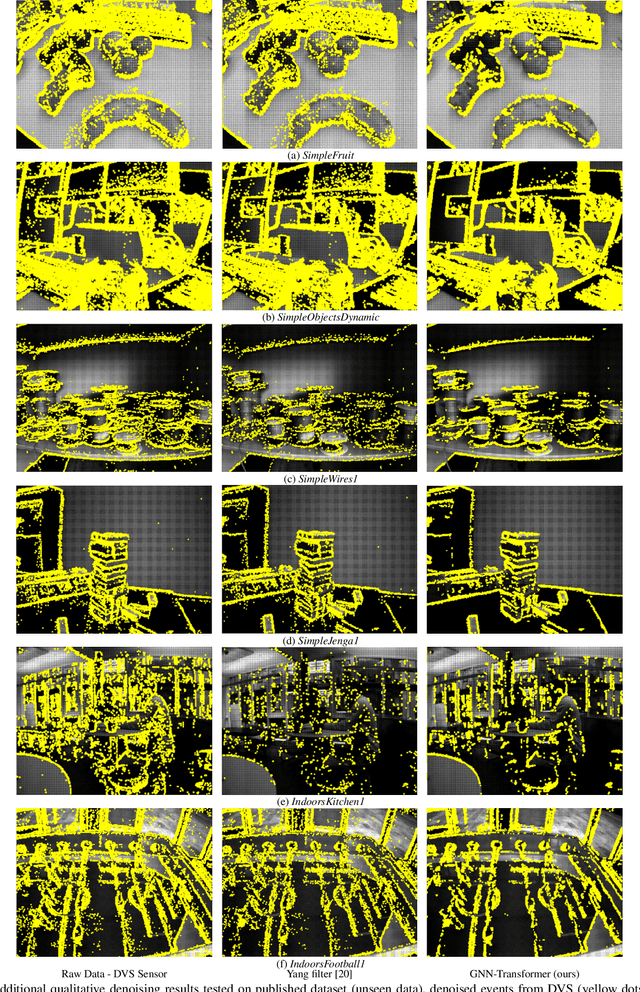

Abstract: Neuromorphic vision is a bio-inspired technology that has triggered a paradigm shift in the computer vision community and serves as a key enabler for a multitude of applications. The technology offers significant advantages, including reduced power consumption, reduced processing needs, and communication speed-ups. However, neuromorphic cameras suffer from significant amounts of measurement noise, which degrades the performance of event-based perception and navigation algorithms. In this paper, we propose a novel noise filtration algorithm to eliminate events that do not represent real log-intensity variations in the observed scene. We employ a Graph Neural Network (GNN)-driven transformer algorithm, called GNN-Transformer, to classify every active event pixel in the raw stream as either a real log-intensity variation or noise. Within the GNN, a message-passing framework called EventConv is used to capture the spatiotemporal correlation among events while preserving their asynchronous nature. We also introduce the Known-object Ground-Truth Labeling (KoGTL) approach for generating approximate ground-truth labels of event streams under various illumination conditions. KoGTL is used to generate labeled datasets from experiments recorded in challenging lighting conditions. These datasets are used to train and extensively test the proposed algorithm. When tested on unseen datasets, the proposed algorithm outperforms existing methods by 12% in terms of filtration accuracy. Additional tests are conducted on publicly available datasets to demonstrate the generalization capabilities of the proposed algorithm in the presence of illumination variations and different motion dynamics. Compared to existing solutions, qualitative results verify the superior capability of the proposed algorithm to eliminate noise while preserving meaningful scene events.
