Piyush Jha

LLMStinger: Jailbreaking LLMs using RL fine-tuned LLMs

Nov 13, 2024Abstract:We introduce LLMStinger, a novel approach that leverages Large Language Models (LLMs) to automatically generate adversarial suffixes for jailbreak attacks. Unlike traditional methods, which require complex prompt engineering or white-box access, LLMStinger uses a reinforcement learning (RL) loop to fine-tune an attacker LLM, generating new suffixes based on existing attacks for harmful questions from the HarmBench benchmark. Our method significantly outperforms existing red-teaming approaches (we compared against 15 of the latest methods), achieving a +57.2% improvement in Attack Success Rate (ASR) on LLaMA2-7B-chat and a +50.3% ASR increase on Claude 2, both models known for their extensive safety measures. Additionally, we achieved a 94.97% ASR on GPT-3.5 and 99.4% on Gemma-2B-it, demonstrating the robustness and adaptability of LLMStinger across open and closed-source models.

RLSF: Reinforcement Learning via Symbolic Feedback

May 26, 2024Abstract:In recent years, large language models (LLMs) have had a dramatic impact on various sub-fields of AI, most notably on natural language understanding tasks. However, there is widespread agreement that the logical reasoning capabilities of contemporary LLMs are, at best, fragmentary (i.e., may work well on some problem instances but fail dramatically on others). While traditional LLM fine-tuning approaches (e.g., those that use human feedback) do address this problem to some degree, they suffer from many issues, including unsound black-box reward models, difficulties in collecting preference data, and sparse scalar reward values. To address these challenges, we propose a new training/fine-tuning paradigm we refer to as Reinforcement Learning via Symbolic Feedback (RLSF), which is aimed at enhancing the reasoning capabilities of LLMs. In the RLSF setting, the LLM that is being trained/fine-tuned is considered as the RL agent, while the environment is allowed access to reasoning or domain knowledge tools (e.g., solvers, algebra systems). Crucially, in RLSF, these reasoning tools can provide feedback to the LLMs via poly-sized certificates (e.g., proofs), that characterize errors in the LLM-generated object with respect to some correctness specification. The ability of RLSF-based training/fine-tuning to leverage certificate-generating symbolic tools enables sound fine-grained (token-level) reward signals to LLMs, and thus addresses the limitations of traditional reward models mentioned above. Via extensive evaluations, we show that our RLSF-based fine-tuning of LLMs outperforms traditional approaches on two different applications, namely, program synthesis from natural language pseudo-code to programming language (C++) and solving the Game of 24.

A Reinforcement Learning based Reset Policy for CDCL SAT Solvers

Apr 04, 2024

Abstract:Restart policy is an important technique used in modern Conflict-Driven Clause Learning (CDCL) solvers, wherein some parts of the solver state are erased at certain intervals during the run of the solver. In most solvers, variable activities are preserved across restart boundaries, resulting in solvers continuing to search parts of the assignment tree that are not far from the one immediately prior to a restart. To enable the solver to search possibly "distant" parts of the assignment tree, we study the effect of resets, a variant of restarts which not only erases the assignment trail, but also randomizes the activity scores of the variables of the input formula after reset, thus potentially enabling a better global exploration of the search space. In this paper, we model the problem of whether to trigger reset as a multi-armed bandit (MAB) problem, and propose two reinforcement learning (RL) based adaptive reset policies using the Upper Confidence Bound (UCB) and Thompson sampling algorithms. These two algorithms balance the exploration-exploitation tradeoff by adaptively choosing arms (reset vs. no reset) based on their estimated rewards during the solver's run. We implement our reset policies in four baseline SOTA CDCL solvers and compare the baselines against the reset versions on Satcoin benchmarks and SAT Competition instances. Our results show that RL-based reset versions outperform the corresponding baseline solvers on both Satcoin and the SAT competition instances, suggesting that our RL policy helps to dynamically and profitably adapt the reset frequency for any given input instance. We also introduce the concept of a partial reset, where at least a constant number of variable activities are retained across reset boundaries. Building on previous results, we show that there is an exponential separation between O(1) vs. $\Omega(n)$-length partial resets.

Layered and Staged Monte Carlo Tree Search for SMT Strategy Synthesis

Jan 30, 2024Abstract:Modern SMT solvers, such as Z3, offer user-controllable strategies, enabling users to tailor them for their unique set of instances, thus dramatically enhancing solver performance for their use case. However, this approach of strategy customization presents a significant challenge: handcrafting an optimized strategy for a class of SMT instances remains a complex and demanding task for both solver developers and users alike. In this paper, we address this problem of automatic SMT strategy synthesis via a novel Monte Carlo Tree Search (MCTS) based method. Our method treats strategy synthesis as a sequential decision-making process, whose search tree corresponds to the strategy space, and employs MCTS to navigate this vast search space. The key innovations that enable our method to identify effective strategies, while keeping costs low, are the ideas of layered and staged MCTS search. These novel approaches allow for a deeper and more efficient exploration of the strategy space, enabling us to synthesize more effective strategies than the default ones in state-of-the-art (SOTA) SMT solvers. We implement our method, dubbed Z3alpha, as part of the Z3 SMT solver. Through extensive evaluations across 6 important SMT logics, Z3alpha demonstrates superior performance compared to the SOTA synthesis tool FastSMT, the default Z3 solver, and the CVC5 solver on most benchmarks. Remarkably, on a challenging QF_BV benchmark set, Z3alpha solves 42.7% more instances than the default strategy in the Z3 SMT solver.

AlphaMapleSAT: An MCTS-based Cube-and-Conquer SAT Solver for Hard Combinatorial Problems

Jan 24, 2024Abstract:This paper introduces AlphaMapleSAT, a novel Monte Carlo Tree Search (MCTS) based Cube-and-Conquer (CnC) SAT solving method aimed at efficiently solving challenging combinatorial problems. Despite the tremendous success of CnC solvers in solving a variety of hard combinatorial problems, the lookahead cubing techniques at the heart of CnC have not evolved much for many years. Part of the reason is the sheer difficulty of coming up with new cubing techniques that are both low-cost and effective in partitioning input formulas into sub-formulas, such that the overall runtime is minimized. Lookahead cubing techniques used by current state-of-the-art CnC solvers, such as March, keep their cubing costs low by constraining the search for the optimal splitting variables. By contrast, our key innovation is a deductively-driven MCTS-based lookahead cubing technique, that performs a deeper heuristic search to find effective cubes, while keeping the cubing cost low. We perform an extensive comparison of AlphaMapleSAT against the March CnC solver on challenging combinatorial problems such as the minimum Kochen-Specker and Ramsey problems. We also perform ablation studies to verify the efficacy of the MCTS heuristic search for the cubing problem. Results show up to 2.3x speedup in parallel (and up to 27x in sequential) elapsed real time.

Attention, Compilation, and Solver-based Symbolic Analysis are All You Need

Jun 11, 2023

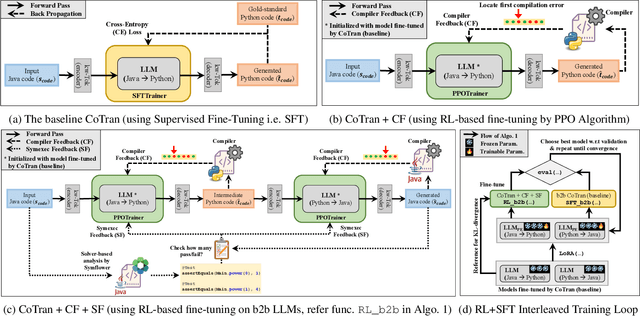

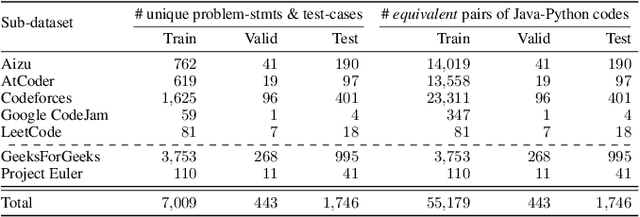

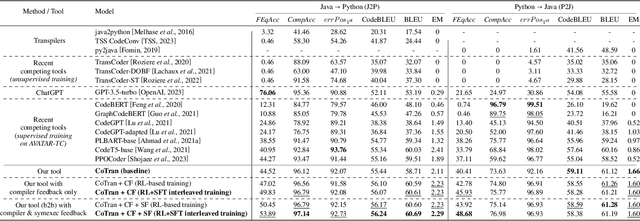

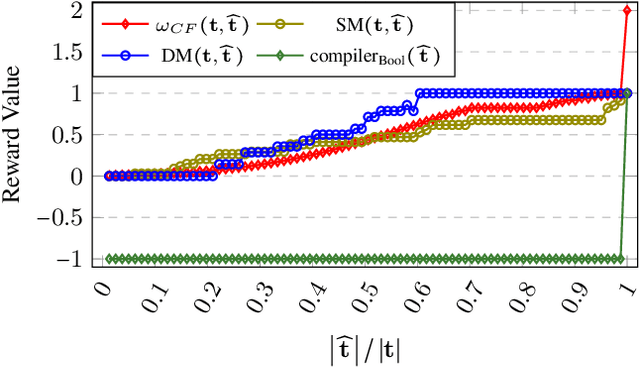

Abstract:In this paper we present a Java-to-Python (J2P) and Python-to-Java (P2J) back-to-back code translation method, and associated tool called CoTran, based on large language models (LLMs). Our method leverages the attention mechanism of LLMs, compilation, and symbolic execution-based test generation for equivalence testing between the input and output programs. More precisely, we modify the typical LLM training loop to incorporate compiler and symbolic execution loss. Via extensive experiments comparing CoTran with 10 other transpilers and LLM-based translation tools over a benchmark of more than 57,000 Java-Python equivalent pairs, we show that CoTran outperforms them on relevant metrics such as compilation and runtime equivalence accuracy. For example, our tool gets 97.43% compilation accuracy and 49.66% runtime equivalence accuracy for J2P translation, whereas the nearest competing tool only gets 96.44% and 6.8% respectively.

BertRLFuzzer: A BERT and Reinforcement Learning based Fuzzer

May 21, 2023

Abstract:We present a novel tool BertRLFuzzer, a BERT and Reinforcement Learning (RL) based fuzzer aimed at finding security vulnerabilities. BertRLFuzzer works as follows: given a list of seed inputs, the fuzzer performs grammar-adhering and attack-provoking mutation operations on them to generate candidate attack vectors. The key insight of BertRLFuzzer is the combined use of two machine learning concepts. The first one is the use of semi-supervised learning with language models (e.g., BERT) that enables BertRLFuzzer to learn (relevant fragments of) the grammar of a victim application as well as attack patterns, without requiring the user to specify it explicitly. The second one is the use of RL with BERT model as an agent to guide the fuzzer to efficiently learn grammar-adhering and attack-provoking mutation operators. The RL-guided feedback loop enables BertRLFuzzer to automatically search the space of attack vectors to exploit the weaknesses of the given victim application without the need to create labeled training data. Furthermore, these two features together enable BertRLFuzzer to be extensible, i.e., the user can extend BertRLFuzzer to a variety of victim applications and attack vectors automatically (i.e., without explicitly modifying the fuzzer or providing a grammar). In order to establish the efficacy of BertRLFuzzer we compare it against a total of 13 black box and white box fuzzers over a benchmark of 9 victim websites. We observed a significant improvement in terms of time to first attack (54% less than the nearest competing tool), time to find all vulnerabilities (40-60% less than the nearest competing tool), and attack rate (4.4% more attack vectors generated than the nearest competing tool). Our experiments show that the combination of the BERT model and RL-based learning makes BertRLFuzzer an effective, adaptive, easy-to-use, automatic, and extensible fuzzer.

CGDTest: A Constrained Gradient Descent Algorithm for Testing Neural Networks

Apr 04, 2023

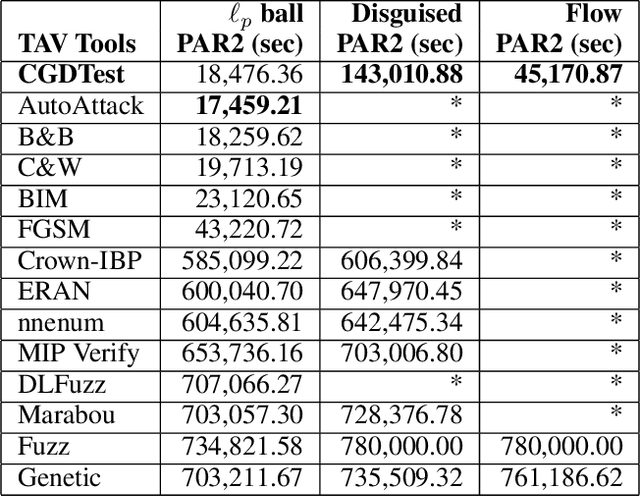

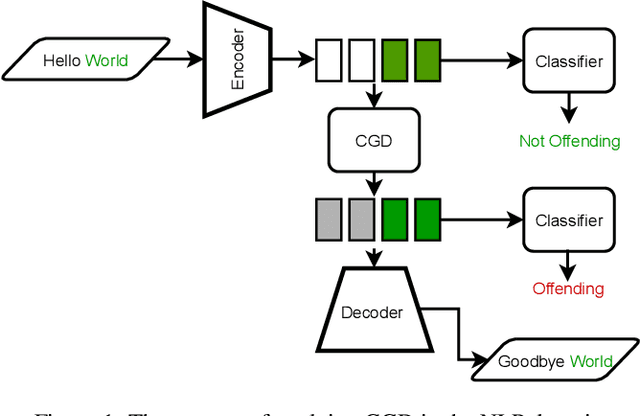

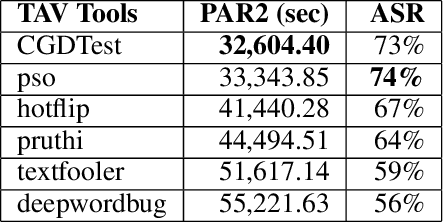

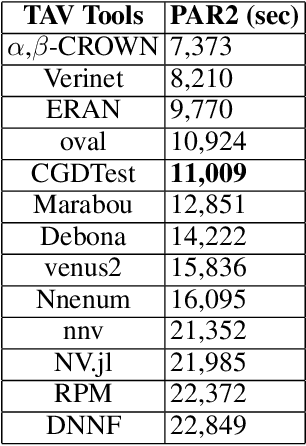

Abstract:In this paper, we propose a new Deep Neural Network (DNN) testing algorithm called the Constrained Gradient Descent (CGD) method, and an implementation we call CGDTest aimed at exposing security and robustness issues such as adversarial robustness and bias in DNNs. Our CGD algorithm is a gradient-descent (GD) method, with the twist that the user can also specify logical properties that characterize the kinds of inputs that the user may want. This functionality sets CGDTest apart from other similar DNN testing tools since it allows users to specify logical constraints to test DNNs not only for $\ell_p$ ball-based adversarial robustness but, more importantly, includes richer properties such as disguised and flow adversarial constraints, as well as adversarial robustness in the NLP domain. We showcase the utility and power of CGDTest via extensive experimentation in the context of vision and NLP domains, comparing against 32 state-of-the-art methods over these diverse domains. Our results indicate that CGDTest outperforms state-of-the-art testing tools for $\ell_p$ ball-based adversarial robustness, and is significantly superior in testing for other adversarial robustness, with improvements in PAR2 scores of over 1500% in some cases over the next best tool. Our evaluation shows that our CGD method outperforms competing methods we compared against in terms of expressibility (i.e., a rich constraint language and concomitant tool support to express a wide variety of properties), scalability (i.e., can be applied to very large real-world models with up to 138 million parameters), and generality (i.e., can be used to test a plethora of model architectures).

An Augmented Translation Technique for low Resource language pair: Sanskrit to Hindi translation

Jun 09, 2020

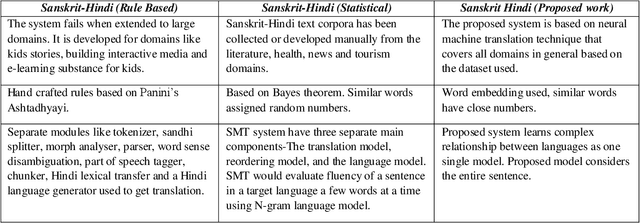

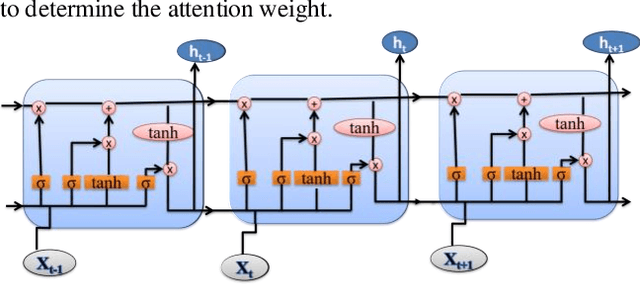

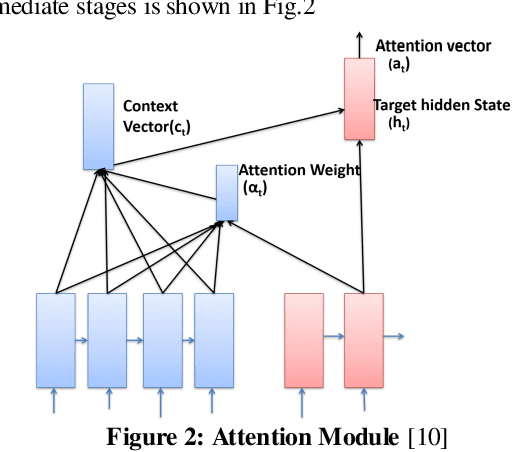

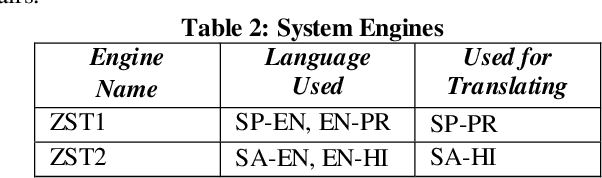

Abstract:Neural Machine Translation (NMT) is an ongoing technique for Machine Translation (MT) using enormous artificial neural network. It has exhibited promising outcomes and has shown incredible potential in solving challenging machine translation exercises. One such exercise is the best approach to furnish great MT to language sets with a little preparing information. In this work, Zero Shot Translation (ZST) is inspected for a low resource language pair. By working on high resource language pairs for which benchmarks are available, namely Spanish to Portuguese, and training on data sets (Spanish-English and English-Portuguese) we prepare a state of proof for ZST system that gives appropriate results on the available data. Subsequently the same architecture is tested for Sanskrit to Hindi translation for which data is sparse, by training the model on English-Hindi and Sanskrit-English language pairs. In order to prepare and decipher with ZST system, we broaden the preparation and interpretation pipelines of NMT seq2seq model in tensorflow, incorporating ZST features. Dimensionality reduction of word embedding is performed to reduce the memory usage for data storage and to achieve a faster training and translation cycles. In this work existing helpful technology has been utilized in an imaginative manner to execute our NLP issue of Sanskrit to Hindi translation. A Sanskrit-Hindi parallel corpus of 300 is constructed for testing. The data required for the construction of parallel corpus has been taken from the telecasted news, published on Department of Public Information, state government of Madhya Pradesh, India website.

Common Representation Learning Using Step-based Correlation Multi-Modal CNN

Oct 31, 2017

Abstract:Deep learning techniques have been successfully used in learning a common representation for multi-view data, wherein the different modalities are projected onto a common subspace. In a broader perspective, the techniques used to investigate common representation learning falls under the categories of canonical correlation-based approaches and autoencoder based approaches. In this paper, we investigate the performance of deep autoencoder based methods on multi-view data. We propose a novel step-based correlation multi-modal CNN (CorrMCNN) which reconstructs one view of the data given the other while increasing the interaction between the representations at each hidden layer or every intermediate step. Finally, we evaluate the performance of the proposed model on two benchmark datasets - MNIST and XRMB. Through extensive experiments, we find that the proposed model achieves better performance than the current state-of-the-art techniques on joint common representation learning and transfer learning tasks.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge