Nirupam Gupta

Mosaic Learning: A Framework for Decentralized Learning with Model Fragmentation

Feb 04, 2026

Abstract:Decentralized learning (DL) enables collaborative machine learning (ML) without a central server, making it suitable for settings where training data cannot be centrally hosted. We introduce Mosaic Learning, a DL framework that decomposes models into fragments and disseminates them independently across the network. Fragmentation reduces redundant communication across correlated parameters and enables more diverse information propagation without increasing communication cost. We theoretically show that Mosaic Learning (i) achieves a state-of-the-art worst-case convergence rate, and (ii) leverages parameter correlation in an ML model, improving contraction by reducing the highest eigenvalue of a simplified system. We empirically evaluate Mosaic Learning on four learning tasks and observe up to 12 percentage points higher node-level test accuracy compared to epidemic learning (EL), a state-of-the-art baseline. In summary, Mosaic Learning improves DL performance without sacrificing its utility or efficiency, and positions itself as a new DL standard.

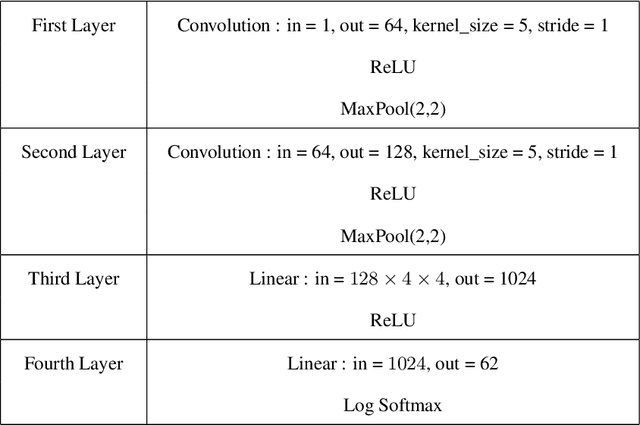

Reconciling Communication Compression and Byzantine-Robustness in Distributed Learning

Aug 23, 2025

Abstract:Distributed learning (DL) enables scalable model training over decentralized data, but remains challenged by Byzantine faults and high communication costs. While both issues have been studied extensively in isolation, their interaction is less explored. Prior work shows that naively combining communication compression with Byzantine-robust aggregation degrades resilience to faulty nodes (or workers). The state-of-the-art algorithm, namely Byz-DASHA-PAGE [29], uses the momentum variance reduction scheme to mitigate the detrimental impact of compression noise on Byzantine-robustness. We propose a new algorithm, named RoSDHB, that integrates the classic Polyak's momentum with a new coordinated compression mechanism. We show that RoSDHB performs comparably to Byz-DASHA-PAGE under the standard (G, B)-gradient dissimilarity heterogeneity model, while relying on fewer assumptions. In particular, we only assume Lipschitz smoothness of the average loss function of the honest workers, in contrast to [29], which additionally assumes a special smoothness condition of bounded global Hessian variance. Empirical results on a benchmark image classification task show that RoSDHB achieves strong robustness with significant communication savings.

Revisiting Ensembling in One-Shot Federated Learning

Nov 11, 2024

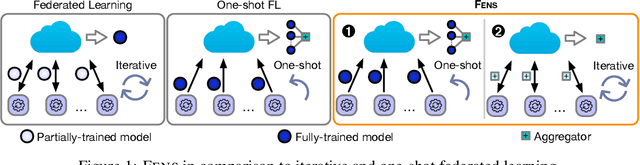

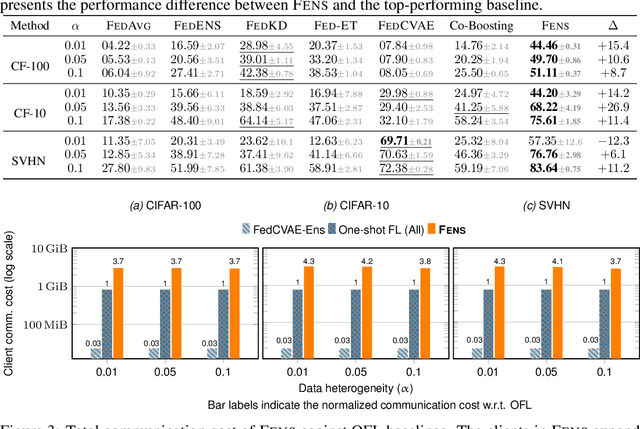

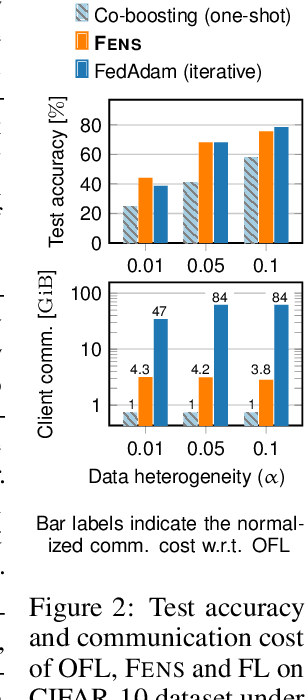

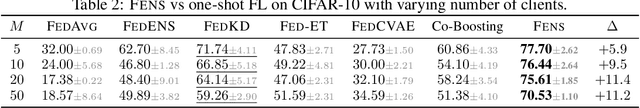

Abstract:Federated learning (FL) is an appealing approach to training machine learning models without sharing raw data. However, standard FL algorithms are iterative and thus induce a significant communication cost. One-shot federated learning (OFL) replaces the iterative exchange of models between clients and the server with a single round of communication, thereby saving substantially on communication costs. Not surprisingly, OFL exhibits a performance gap in terms of accuracy with respect to FL, especially under high data heterogeneity. We introduce FENS, a novel federated ensembling scheme that approaches the accuracy of FL with the communication efficiency of OFL. Learning in FENS proceeds in two phases: first, clients train models locally and send them to the server, similar to OFL; second, clients collaboratively train a lightweight prediction aggregator model using FL. We showcase the effectiveness of FENS through exhaustive experiments spanning several datasets and heterogeneity levels. In the particular case of the heterogeneously distributed CIFAR-10 dataset, FENS achieves up to a 26.9% higher accuracy over state-of-the-art (SOTA) OFL, being only 3.1% lower than FL. At the same time, FENS incurs at most 4.3x more communication than OFL, whereas FL is at least 10.9x more communication-intensive than FENS.

Fine-Tuning Personalization in Federated Learning to Mitigate Adversarial Clients

Sep 30, 2024

Abstract:Federated learning (FL) is an appealing paradigm that allows a group of machines (a.k.a. clients) to learn collectively while keeping their data local. However, due to the heterogeneity between the clients' data distributions, the model obtained through the use of FL algorithms may perform poorly on some clients' data. Personalization addresses this issue by enabling each client to have a different model tailored to their own data while simultaneously benefiting from the other clients' data. We consider an FL setting where some clients can be adversarial, and we derive conditions under which full collaboration fails. Specifically, we analyze the generalization performance of an interpolated personalized FL framework in the presence of adversarial clients, and we precisely characterize situations in which full collaboration performs strictly worse than fine-tuned personalization. Our analysis determines how much we should scale down the level of collaboration, according to data heterogeneity and the tolerable fraction of adversarial clients. We support our findings with empirical results on mean estimation and binary classification problems, considering synthetic and benchmark image classification datasets.

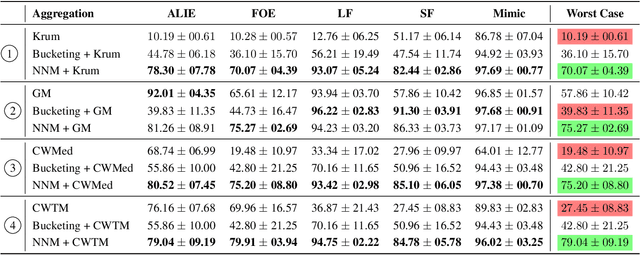

Boosting Robustness by Clipping Gradients in Distributed Learning

May 23, 2024

Abstract:Robust distributed learning consists in achieving good learning performance despite the presence of misbehaving workers. State-of-the-art (SOTA) robust distributed gradient descent (Robust-DGD) methods, relying on robust aggregation, have been proven to be optimal: Their learning error matches the lower bound established under the standard heterogeneity model of $(G, B)$-gradient dissimilarity. The learning guarantee of SOTA Robust-DGD cannot be further improved when model initialization is done arbitrarily. However, we show that it is possible to circumvent the lower bound, and improve the learning performance, when the workers' gradients at model initialization are assumed to be bounded. We prove this by proposing pre-aggregation clipping of workers' gradients, using a novel scheme called adaptive robust clipping (ARC). Incorporating ARC in Robust-DGD provably improves the learning, under the aforementioned assumption on model initialization. The factor of improvement is prominent when the tolerable fraction of misbehaving workers approaches the breakdown point. ARC induces this improvement by constricting the search space, while preserving the robustness property of the original aggregation scheme at the same time. We validate this theoretical finding through exhaustive experiments on benchmark image classification tasks.

On the Relevance of Byzantine Robust Optimization Against Data Poisoning

May 01, 2024

Abstract:The success of machine learning (ML) has been intimately linked with the availability of large amounts of data, typically collected from heterogeneous sources and processed on vast networks of computing devices (also called workers). Beyond accuracy, the use of ML in critical domains such as healthcare and autonomous driving calls for robustness against data poisoning and some faulty workers. The problem of Byzantine ML formalizes these robustness issues by considering a distributed ML environment in which workers (storing a portion of the global dataset) can deviate arbitrarily from the prescribed algorithm. Although the problem has attracted a lot of attention from a theoretical point of view, its practical importance for addressing realistic faults (where the behavior of any worker is locally constrained) remains unclear. It has been argued that the seemingly weaker threat model where only workers' local datasets get poisoned is more reasonable. We prove that, while tolerating a wider range of faulty behaviors, Byzantine ML yields solutions that are, in a precise sense, optimal even under the weaker data poisoning threat model. Then, we study a generic data poisoning model wherein some workers have fully-poisonous local data, i.e., their datasets are entirely corruptible, and the remaining workers have partially-poisonous local data, i.e., only a fraction of their local datasets is corruptible. We prove that Byzantine-robust schemes yield optimal solutions against both these forms of data poisoning, and that the former is more harmful when workers have heterogeneous local data.

Tackling Byzantine Clients in Federated Learning

Feb 20, 2024

Abstract:The possibility of adversarial (a.k.a. Byzantine) clients makes federated learning (FL) prone to arbitrary manipulation. The natural approach to robustify FL against adversarial clients is to replace the simple averaging operation at the server in the standard FedAvg algorithm with a robust averaging rule. While a significant amount of work has been devoted to studying the convergence of federated robust averaging (which we denote by FedRo), prior work has largely ignored the impact of client subsampling and local steps, two fundamental FL characteristics. While client subsampling increases the effective fraction of Byzantine clients, local steps increase the drift between the local updates computed by honest (i.e., non-Byzantine) clients. Consequently, a careless deployment of FedRo could yield poor performance. We validate this observation by presenting an in-depth analysis of FedRo that tightly captures the impact of client subsampling and local steps. Specifically, we present a sufficient condition on client subsampling for nearly-optimal convergence of FedRo (for smooth non-convex loss). Also, we show that the rate of improvement in learning accuracy diminishes with respect to the number of clients subsampled, as soon as the sample size exceeds a threshold value. Interestingly, we also observe that under a careful choice of step-sizes, the learning error due to Byzantine clients decreases with the number of local steps. We validate our theory by experiments on the FEMNIST and CIFAR-10 image classification tasks.

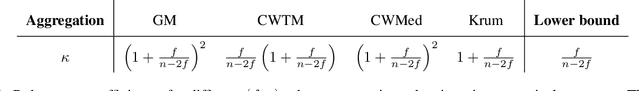

Robust Distributed Learning: Tight Error Bounds and Breakdown Point under Data Heterogeneity

Sep 24, 2023

Abstract:The theory underlying robust distributed learning algorithms, designed to resist adversarial machines, matches empirical observations when data is homogeneous. Under data heterogeneity, however, which is the norm in practical scenarios, established lower bounds on the learning error are essentially vacuous and greatly mismatch empirical observations. This is because the heterogeneity model considered is too restrictive and does not cover basic learning tasks such as least-squares regression. We consider in this paper a more realistic heterogeneity model, namely (G, B)-gradient dissimilarity, and show that it covers a larger class of learning problems than existing theory. Notably, we show that the breakdown point under heterogeneity is lower than the classical fraction 1/2. We also prove a new lower bound on the learning error of any distributed learning algorithm. We derive a matching upper bound for a robust variant of distributed gradient descent, and empirically show that our analysis reduces the gap between theory and practice.

Distributed Learning with Curious and Adversarial Machines

Feb 09, 2023

Abstract:The ubiquity of distributed machine learning (ML) in sensitive public domain applications calls for algorithms that protect data privacy, while being robust to faults and adversarial behaviors. Although privacy and robustness have been extensively studied independently in distributed ML, their synthesis remains poorly understood. We present the first tight analysis of the error incurred by any algorithm ensuring robustness against a fraction of adversarial machines, as well as differential privacy (DP) for honest machines' data against any other curious entity. Our analysis exhibits a fundamental trade-off between privacy, robustness, and utility. Surprisingly, we show that the cost of this trade-off is marginal compared to that of the classical privacy-utility trade-off. To prove our lower bound, we consider the case of mean estimation, subject to distributed DP and robustness constraints, and devise reductions to centralized estimation of one-way marginals. We prove our matching upper bound by presenting a new distributed ML algorithm using a high-dimensional robust aggregation rule. The latter amortizes the dependence on the dimension in the error (caused by adversarial workers and DP), while being agnostic to the statistical properties of the data.

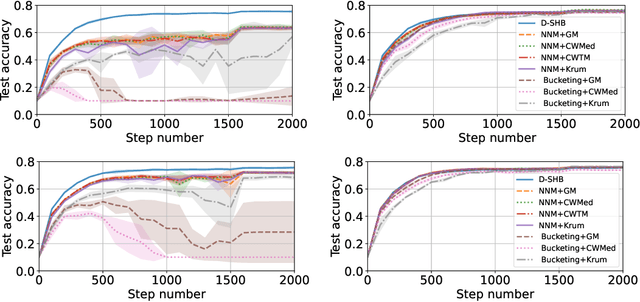

Fixing by Mixing: A Recipe for Optimal Byzantine ML under Heterogeneity

Feb 03, 2023

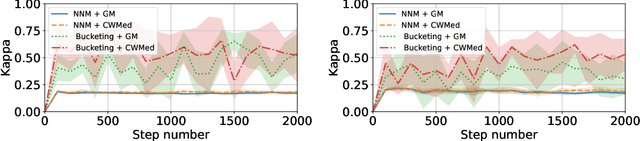

Abstract:Byzantine machine learning (ML) aims to ensure the resilience of distributed learning algorithms to misbehaving (or Byzantine) machines. Although this problem has received significant attention, prior works often assume the data held by the machines to be homogeneous, which is seldom true in practical settings. Data heterogeneity makes Byzantine ML considerably more challenging, since a Byzantine machine can hardly be distinguished from a non-Byzantine outlier. A few solutions have been proposed to tackle this issue, but these provide suboptimal probabilistic guarantees and fare poorly in practice. This paper closes the theoretical gap, achieving optimality and inducing good empirical results. In fact, we show how to automatically adapt existing solutions for (homogeneous) Byzantine ML to the heterogeneous setting through a powerful mechanism we call nearest neighbor mixing (NNM), which boosts any standard robust distributed gradient descent variant to yield optimal Byzantine resilience under heterogeneity. We obtain similar guarantees (in expectation) by plugging NNM into the distributed stochastic heavy ball method, a practical substitute for distributed gradient descent. We obtain empirical results that significantly outperform state-of-the-art Byzantine ML solutions.
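
The abstract names nearest neighbor mixing but does not spell out its steps. As a rough illustration only, the sketch below assumes the common reading of NNM as a pre-aggregation step: each of the n input vectors is replaced by the average of its n - f nearest neighbors (itself included), after which a standard robust rule is applied. The function names, the choice of coordinate-wise median as the downstream rule, and the toy inputs are all assumptions made for this example, not the authors' implementation.

```python
import math
import statistics


def nearest_neighbor_mixing(vectors, f):
    """Replace each vector by the mean of its n - f nearest neighbors.

    Assumed reading of NNM; `f` is the number of tolerated Byzantine inputs.
    Each vector counts as its own nearest neighbor (distance 0).
    """
    n = len(vectors)
    if not 0 <= f < n:
        raise ValueError("f must satisfy 0 <= f < n")
    k = n - f
    mixed = []
    for x in vectors:
        # Sort all inputs by Euclidean distance to x and keep the k closest.
        nearest = sorted(vectors, key=lambda y: math.dist(x, y))[:k]
        # Average the kept vectors coordinate by coordinate.
        mixed.append([sum(coord) / k for coord in zip(*nearest)])
    return mixed


def coordinate_wise_median(vectors):
    """A simple robust aggregation rule, used here only as an example."""
    return [statistics.median(coord) for coord in zip(*vectors)]


# Toy inputs: three nearby "honest" vectors and one far-away outlier.
inputs = [[0.0, 0.0], [1.0, 0.0], [0.0, 1.0], [100.0, 100.0]]
mixed = nearest_neighbor_mixing(inputs, f=1)
aggregate = coordinate_wise_median(mixed)
```

In this toy run the three nearby vectors are each mixed toward their common mean, while the outlier is pulled toward the nearby vectors it is averaged with, so the downstream median lands close to the nearby cluster.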
