Nikoletta Basiou

Multi-User MultiWOZ: Task-Oriented Dialogues among Multiple Users

Oct 31, 2023

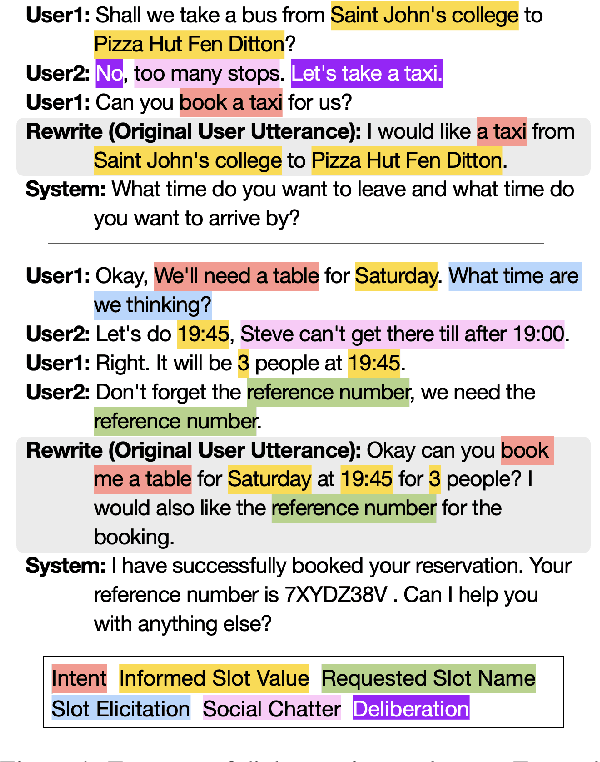

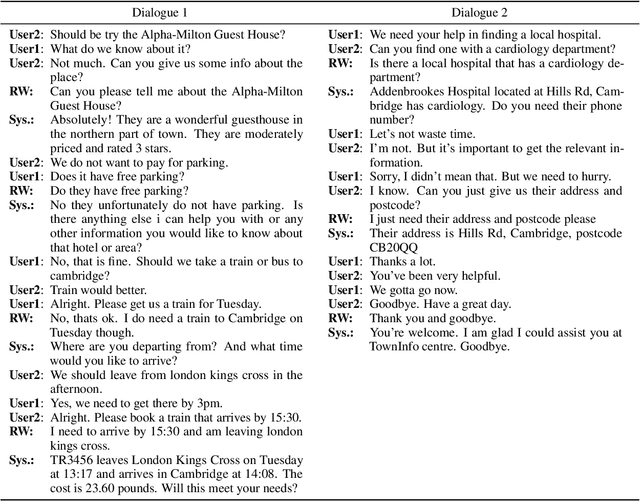

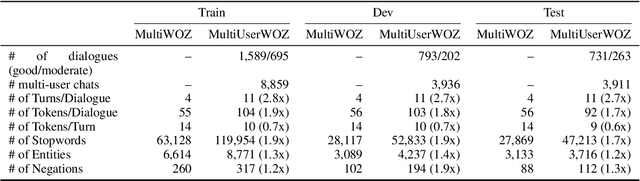

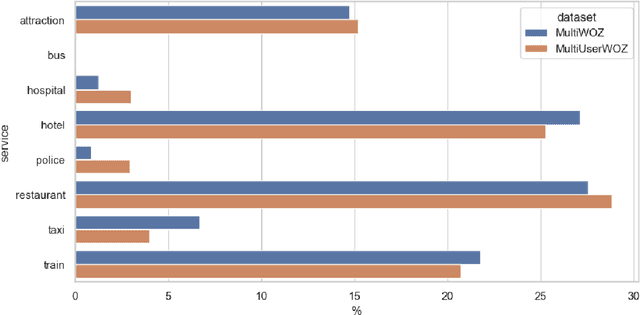

Abstract: While most task-oriented dialogues assume conversations between the agent and one user at a time, dialogue systems are increasingly expected to communicate with multiple users simultaneously who make decisions collaboratively. To facilitate development of such systems, we release the Multi-User MultiWOZ dataset: task-oriented dialogues among two users and one agent. To collect this dataset, each user utterance from MultiWOZ 2.2 was replaced with a small chat between two users that is semantically and pragmatically consistent with the original user utterance, thus resulting in the same dialogue state and system response. These dialogues reflect interesting dynamics of collaborative decision-making in task-oriented scenarios, e.g., social chatter and deliberation. Supported by this data, we propose the novel task of multi-user contextual query rewriting: to rewrite a task-oriented chat between two users as a concise task-oriented query that retains only task-relevant information and that is directly consumable by the dialogue system. We demonstrate that in multi-user dialogues, using predicted rewrites substantially improves dialogue state tracking without modifying existing dialogue systems that are trained for single-user dialogues. Further, this method surpasses training a medium-sized model directly on multi-user dialogues and generalizes to unseen domains.

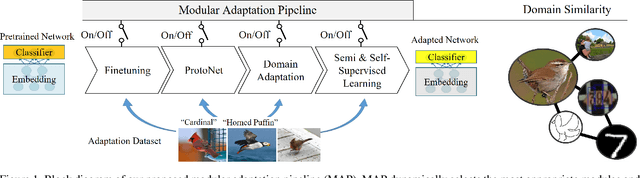

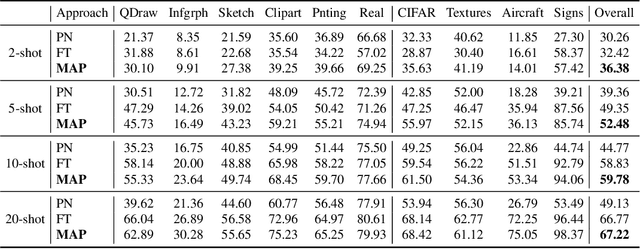

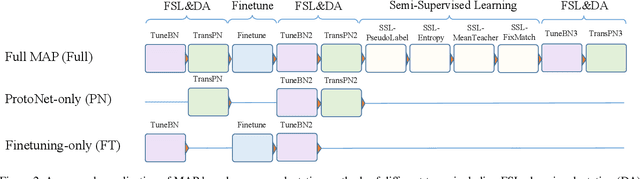

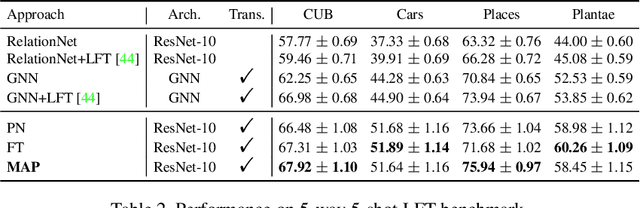

Modular Adaptation for Cross-Domain Few-Shot Learning

Apr 01, 2021

Abstract: Adapting pre-trained representations has become the go-to recipe for learning new downstream tasks with limited examples. While the literature has demonstrated great successes via representation learning, in this work we show that substantial performance improvements on downstream tasks can also be achieved by appropriate design of the adaptation process. Specifically, we propose a modular adaptation method that selectively performs multiple state-of-the-art (SOTA) adaptation methods in sequence. As different downstream tasks may require different types of adaptation, our modular adaptation enables the dynamic configuration of the most suitable modules based on the downstream task. Moreover, as an extension to existing cross-domain 5-way k-shot benchmarks (e.g., miniImageNet -> CUB), we create a new high-way (~100) k-shot benchmark with data from 10 different datasets. This benchmark provides a diverse set of domains and allows the use of stronger representations learned from ImageNet. Experimental results show that by customizing the adaptation process to the downstream task, our modular adaptation pipeline (MAP) improves 5-shot classification accuracy by 3.1% over finetuning and Prototypical Networks baselines.

Zero-shot Multi-Domain Dialog State Tracking Using Descriptive Rules

Sep 17, 2020

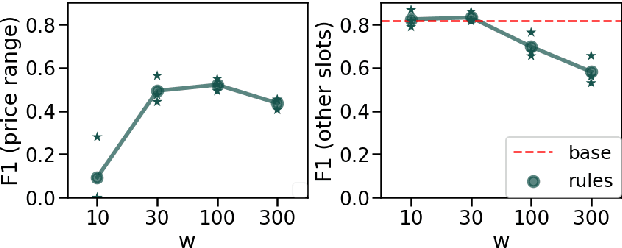

Abstract: In this work, we present a framework for incorporating descriptive logical rules in state-of-the-art neural networks, enabling them to learn how to handle unseen labels without the introduction of any new training data. The rules are integrated into existing networks without modifying their architecture, through an additional term in the network's loss function that penalizes states of the network that do not obey the designed rules. As a case study, the framework is applied to an existing neural-based Dialog State Tracker. Our experiments demonstrate that the inclusion of logical rules allows the prediction of unseen labels, without deteriorating the predictive capacity of the original system.
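The idea of adding a rule-violation term to an existing loss can be illustrated with a minimal sketch. This is not the formulation from the paper: the soft implication penalty `max(0, p(premise) - p(conclusion))`, the label names, and the helpers `rule_penalty` and `total_loss` are all assumptions chosen for illustration. The point is only that the network architecture stays untouched and the rules enter through one extra additive term.

```python
def rule_penalty(probs, rules):
    """Sum of soft violations for rules of the form premise -> conclusion.

    probs maps a label name to a predicted probability in [0, 1].
    A rule is violated to the extent that the premise is believed more
    strongly than the conclusion (an illustrative choice, not the paper's).
    """
    return sum(max(0.0, probs[premise] - probs[conclusion])
               for premise, conclusion in rules)


def total_loss(task_loss, probs, rules, rule_weight=1.0):
    """Original task loss plus a weighted penalty for violated rules."""
    return task_loss + rule_weight * rule_penalty(probs, rules)


# Hypothetical labels: if the user names a cuisine, the restaurant
# domain should also be active.
probs = {"food=italian": 0.9, "domain=restaurant": 0.25}
rules = [("food=italian", "domain=restaurant")]
loss = total_loss(task_loss=1.0, probs=probs, rules=rules, rule_weight=2.0)
```

Because the penalty is zero whenever a rule is satisfied, predictions that already obey the rules are trained exactly as before, which matches the abstract's claim that the rules need not hurt the original system.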
