John Byrnes

Zero-Shot Learning with Knowledge Enhanced Visual Semantic Embeddings

Nov 21, 2020

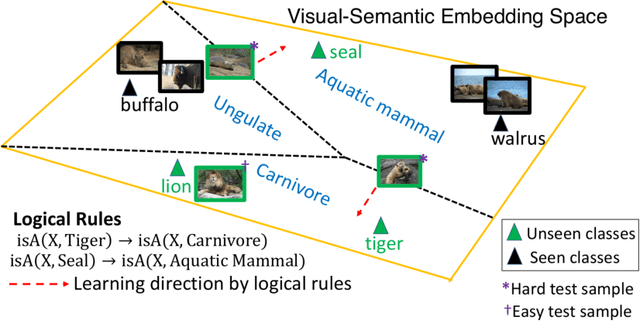

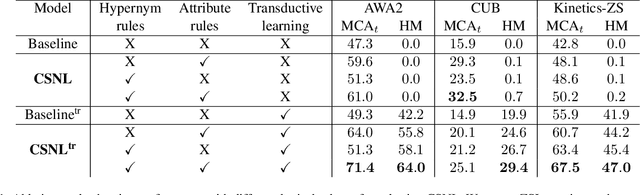

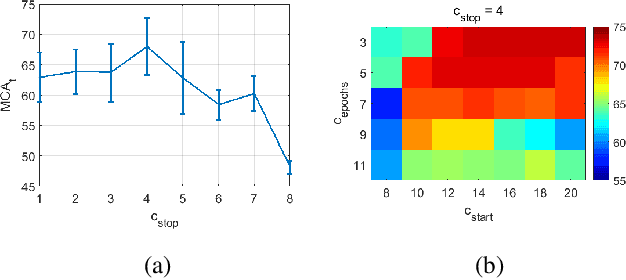

Abstract: We improve zero-shot learning (ZSL) by incorporating common-sense knowledge in DNNs. We propose Common-Sense based Neuro-Symbolic Loss (CSNL), which formulates prior knowledge as novel neuro-symbolic loss functions that regularize the visual-semantic embedding (VSE). CSNL forces visual features in the VSE to obey common-sense rules relating to hypernyms and attributes. We introduce two key novelties for improved learning: (1) enforcement of rules for a group instead of a single concept to take into account class-wise relationships, and (2) confidence margins inside logical operators that enable implicit curriculum learning and prevent premature overfitting. We evaluate the advantages of incorporating each knowledge source and show consistent gains over prior state-of-the-art methods in both conventional and generalized ZSL, e.g., 11.5%, 5.5%, and 11.6% improvements on AWA2, CUB, and Kinetics, respectively.

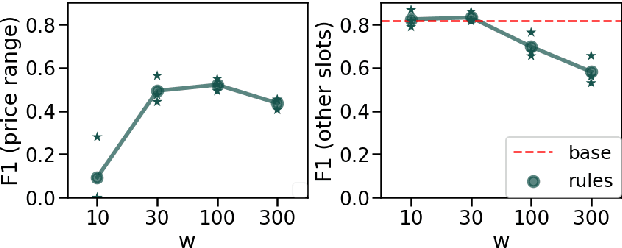

Zero-shot Multi-Domain Dialog State Tracking Using Descriptive Rules

Sep 17, 2020

Abstract: In this work, we present a framework for incorporating descriptive logical rules in state-of-the-art neural networks, enabling them to learn how to handle unseen labels without the introduction of any new training data. The rules are integrated into existing networks without modifying their architecture, through an additional term in the network's loss function that penalizes states of the network that do not obey the designed rules. As a case study, the framework is applied to an existing neural-based Dialog State Tracker. Our experiments demonstrate that the inclusion of logical rules allows the prediction of unseen labels without deteriorating the predictive capacity of the original system.
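The idea of adding a rule-penalty term to an existing loss can be illustrated with a minimal sketch. This is not the paper's implementation: the Łukasiewicz-style implication, the predicate names (`mentions_cheap`, `price_range_cheap`), and the `rule_weight` parameter are all assumptions chosen for illustration.

```python
def implication_violation(p_antecedent: float, p_consequent: float) -> float:
    """Degree to which the soft rule `antecedent -> consequent` is violated.

    Assumes a Lukasiewicz-style implication, whose truth value is
    min(1, 1 - a + b); the violation is 1 minus that, i.e. max(0, a - b).
    """
    return max(0.0, p_antecedent - p_consequent)


def rule_regularized_loss(data_loss, truth, rules, rule_weight=1.0):
    """Add a penalty for every rule the network's outputs fail to obey.

    data_loss   -- the network's original loss value (left unchanged)
    truth       -- dict mapping predicate name to a truth value in [0, 1]
    rules       -- list of (antecedent, consequent) predicate-name pairs
    rule_weight -- hypothetical hyperparameter balancing the two terms
    """
    rule_loss = sum(implication_violation(truth[a], truth[b]) for a, b in rules)
    return data_loss + rule_weight * rule_loss


# Hypothetical dialog-state example: if the user utterance mentions "cheap",
# the tracker should assign the slot value price_range=cheap.
truth = {"mentions_cheap": 0.9, "price_range_cheap": 0.2}
rules = [("mentions_cheap", "price_range_cheap")]
total = rule_regularized_loss(0.5, truth, rules, rule_weight=2.0)
print(total)
```

Because the penalty depends only on the network's outputs, a term of this kind can be attached to a trained architecture without changing its layers, which matches the integration strategy the abstract describes.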

Deep Adaptive Semantic Logic (DASL): Compiling Declarative Knowledge into Deep Neural Networks

Mar 16, 2020

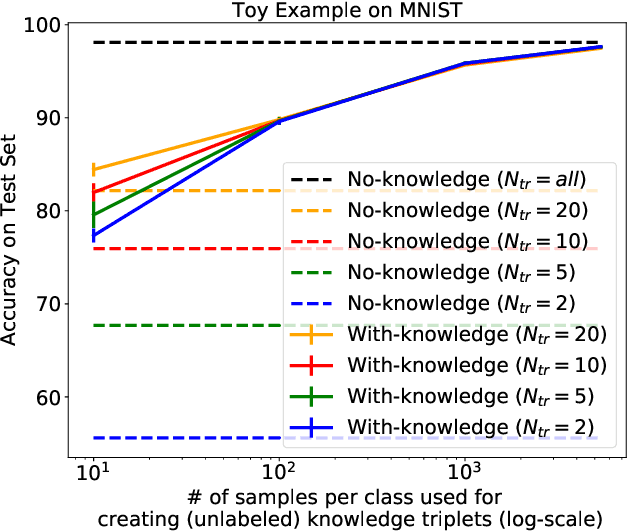

Abstract: We introduce Deep Adaptive Semantic Logic (DASL), a novel framework for automating the generation of deep neural networks that incorporates user-provided formal knowledge to improve learning from data. We provide formal semantics that demonstrate that our knowledge representation captures all of first-order logic and that finite sampling from infinite domains converges to correct truth values. DASL's representation improves on prior neural-symbolic work by avoiding vanishing gradients, allowing deeper logical structure, and enabling richer interactions between the knowledge and learning components. We illustrate DASL through a toy problem in which we add structure to an image classification problem and demonstrate that knowledge of that structure reduces data requirements by a factor of $1000$. We then evaluate DASL on a visual relationship detection task and demonstrate that the addition of commonsense knowledge improves performance by $10.7\%$ in a data-scarce setting.
