Nikolaos Evangeliou

SLAM-based Safe Indoor Exploration Strategy

Aug 19, 2025

Abstract: This paper suggests a 2D exploration strategy for a planar space cluttered with obstacles. Rather than using point robots capable of adjusting their position and altitude instantly, this research is tailored to classical agents with circular footprints that cannot change their pose instantaneously. Herein, a self-balanced dual-wheeled differential drive system is used to explore the space. The system is equipped with linear accelerometers and angular gyroscopes, a 3D-LiDAR, and a forward-facing RGB-D camera. The system performs RTAB-SLAM using the IMU and the LiDAR, while the camera is used for loop closures. The mobile agent explores the planar space using a safe skeleton approach that places the agent as far as possible from the static obstacles. During exploration, the agent heads towards any available openings in the space. The strategy gives highest priority to the agent's safety in avoiding obstacles, followed by the exploration of undetected space. Experimental studies with a ROS-enabled mobile agent are presented, illustrating the path planning strategy while exploring the space.
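The idea of keeping the agent as far as possible from static obstacles can be illustrated with a minimal sketch. This is not the paper's skeleton algorithm: it assumes a small occupancy grid (1 = obstacle, 0 = free), computes a brushfire clearance map with a multi-source breadth-first search, and greedily picks the neighbouring cell with the largest clearance. The names `clearance_map`, `safest_neighbor`, and `GRID` are hypothetical.

```python
from collections import deque

# 4-connected moves on the grid (down, up, right, left).
MOVES = ((1, 0), (-1, 0), (0, 1), (0, -1))


def clearance_map(grid):
    """Brushfire: 4-connected distance (in cells) to the nearest obstacle.

    Assumes the grid contains at least one obstacle cell.
    """
    rows, cols = len(grid), len(grid[0])
    dist = [[None] * cols for _ in range(rows)]
    queue = deque()
    # Seed the search with every obstacle cell at distance 0.
    for r in range(rows):
        for c in range(cols):
            if grid[r][c] == 1:
                dist[r][c] = 0
                queue.append((r, c))
    # Expand outwards one ring at a time.
    while queue:
        r, c = queue.popleft()
        for dr, dc in MOVES:
            nr, nc = r + dr, c + dc
            if 0 <= nr < rows and 0 <= nc < cols and dist[nr][nc] is None:
                dist[nr][nc] = dist[r][c] + 1
                queue.append((nr, nc))
    return dist


def safest_neighbor(dist, cell):
    """Return the free neighbour of `cell` with the largest clearance, or None."""
    r, c = cell
    rows, cols = len(dist), len(dist[0])
    candidates = [
        (r + dr, c + dc)
        for dr, dc in MOVES
        if 0 <= r + dr < rows and 0 <= c + dc < cols and dist[r + dr][c + dc] > 0
    ]
    return max(candidates, key=lambda rc: dist[rc[0]][rc[1]], default=None)


# Hypothetical 5x5 room enclosed by walls.
GRID = [
    [1, 1, 1, 1, 1],
    [1, 0, 0, 0, 1],
    [1, 0, 0, 0, 1],
    [1, 0, 0, 0, 1],
    [1, 1, 1, 1, 1],
]
DIST = clearance_map(GRID)
NEXT_CELL = safest_neighbor(DIST, (1, 2))
```

In this toy room the centre cell has the largest clearance, so an agent standing next to the top wall is steered towards it. A real system would work on the SLAM occupancy map and respect the robot's circular footprint and motion constraints.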

* 5 pages, 8 figures. Published in the 2025 11th International Conference on Automation, Robotics, and Applications (ICARA)

RCM-Constrained Manipulator Trajectory Tracking Using Differential Kinematics Control

Sep 09, 2024

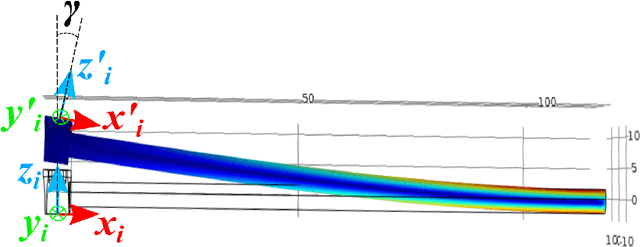

Abstract: This paper proposes an approach for controlling surgical robotic systems, while complying with the Remote Center of Motion (RCM) constraint in Robot-Assisted Minimally Invasive Surgery (RA-MIS). In this approach, the RCM-constraint is upheld algorithmically, providing flexibility in the positioning of the insertion point and enabling compatibility with a wide range of general-purpose robots. The paper further investigates the impact of the tool's insertion ratio on the RCM-error, and introduces a manipulability index of the robot that accounts for the RCM-error and is used to find a starting configuration. To accurately evaluate the proposed method's trajectory tracking within an RCM-constrained environment, an electromagnetic tracking system is employed. The results demonstrate the effectiveness of the proposed method in addressing the RCM constraint problem in RA-MIS.
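As a rough geometric illustration of the quantities mentioned above, the sketch below treats the tool as a straight segment. It assumes, since the abstract does not define them, that the RCM-error is the distance from the insertion point to the closest point on the tool, and that the insertion ratio is the normalized position of that closest point along the tool. The function name `rcm_error` and the sample coordinates are hypothetical.

```python
import math


def rcm_error(p_base, p_tip, p_rcm):
    """Return (error, ratio) for a straight tool from p_base to p_tip.

    error: distance from p_rcm to the closest point on the tool segment.
    ratio: position of that closest point along the tool, clamped to [0, 1]
           (an assumed definition of the insertion ratio).
    """
    direction = [t - b for b, t in zip(p_base, p_tip)]
    offset = [r - b for b, r in zip(p_base, p_rcm)]
    length_sq = sum(x * x for x in direction)
    if length_sq == 0.0:
        raise ValueError("tool base and tip must be distinct points")
    # Project the RCM point onto the tool axis and clamp to the segment.
    ratio = sum(d * o for d, o in zip(direction, offset)) / length_sq
    ratio = min(1.0, max(0.0, ratio))
    closest = [b + ratio * d for b, d in zip(p_base, direction)]
    return math.dist(closest, p_rcm), ratio


# Hypothetical numbers in metres: a 0.4 m tool along z, with the
# insertion point 5 mm off-axis at a quarter of the tool length.
ERROR, RATIO = rcm_error((0.0, 0.0, 0.0), (0.0, 0.0, 0.4), (0.003, 0.004, 0.1))
```

With these inputs the error is about 5 mm and the ratio about 0.25. A controller that upholds the constraint algorithmically would drive such an error towards zero while tracking the desired tip trajectory.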

* 6 pages, 7 figures. Published in the 21st International Conference on Advanced Robotics (ICAR 2023)

1st Workshop on Maritime Computer Vision 2023: Challenge Results

Nov 28, 2022

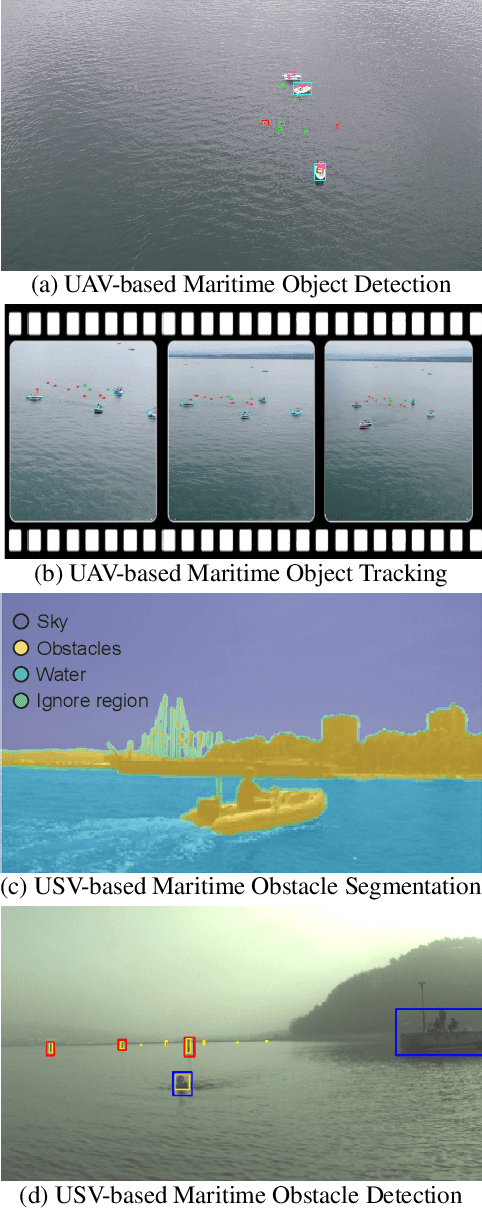

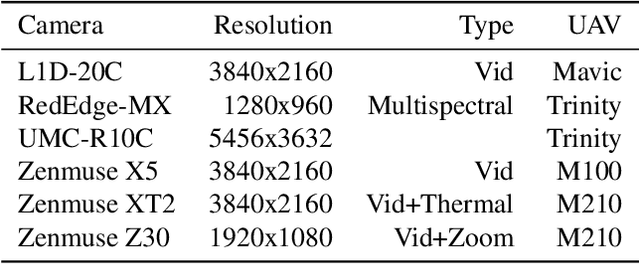

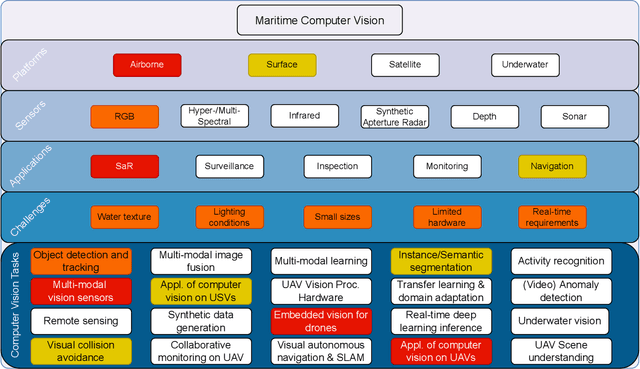

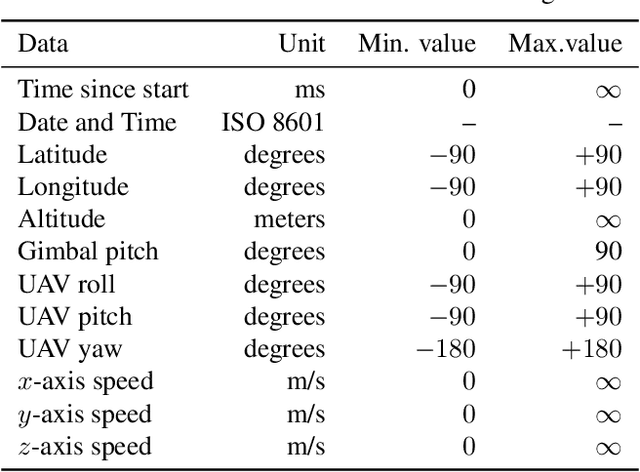

Abstract: The 1$^{\text{st}}$ Workshop on Maritime Computer Vision (MaCVi) 2023 focused on maritime computer vision for Unmanned Aerial Vehicles (UAV) and Unmanned Surface Vehicles (USV), and organized several subchallenges in this domain: (i) UAV-based Maritime Object Detection, (ii) UAV-based Maritime Object Tracking, (iii) USV-based Maritime Obstacle Segmentation and (iv) USV-based Maritime Obstacle Detection. The subchallenges were based on the SeaDronesSee and MODS benchmarks. This report summarizes the main findings of the individual subchallenges and introduces a new benchmark, called SeaDronesSee Object Detection v2, which extends the previous benchmark by including more classes and footage. We provide statistical and qualitative analyses, and assess trends in the best-performing methodologies of over 130 submissions. The methods are summarized in the appendix. The datasets, evaluation code and the leaderboard are publicly available at https://seadronessee.cs.uni-tuebingen.de/macvi.

Modular Multi-Copter Structure Control for Cooperative Aerial Cargo Transportation

Oct 10, 2022

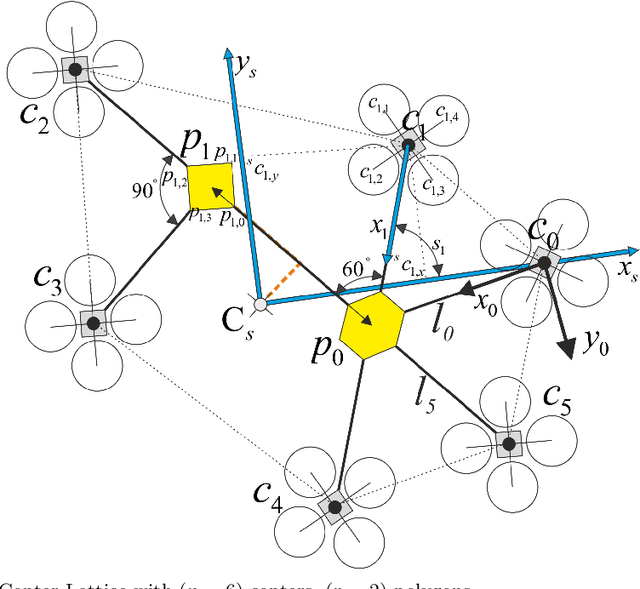

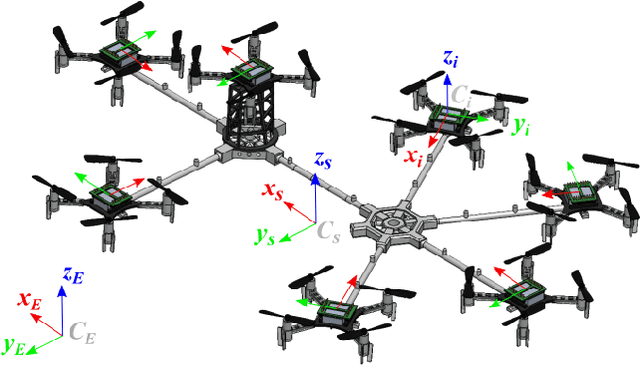

Abstract: The control problem of a multi-copter swarm, mechanically coupled through a modular lattice structure of connecting rods, is considered in this article. The system's structural elasticity is considered in deriving the system's dynamics. The devised controller is robust against the induced flexibilities, while an inherent adaptation scheme allows for the control of asymmetrical configurations and the transportation of unknown payloads. Certain optimization metrics are introduced for solving the individual agent thrust allocation problem while achieving maximum system flight time, resulting in a platform-independent control implementation. Experimental studies are offered to illustrate the efficiency of the suggested controller under typical flight conditions, increased rod elasticities and payload transportation.

Siamese Transformer Pyramid Networks for Real-Time UAV Tracking

Oct 17, 2021

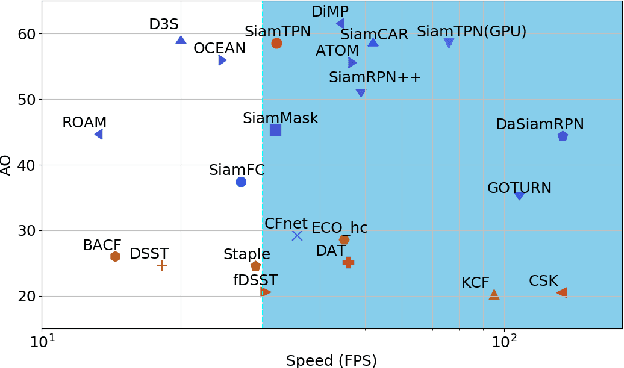

Abstract: Recent object tracking methods depend upon deep networks or convoluted architectures. Most of those trackers can hardly meet real-time processing requirements on mobile platforms with limited computing resources. In this work, we introduce the Siamese Transformer Pyramid Network (SiamTPN), which inherits the advantages from both CNN and Transformer architectures. Specifically, we exploit the inherent feature pyramid of a lightweight network (ShuffleNetV2) and reinforce it with a Transformer to construct a robust target-specific appearance model. A centralized architecture with lateral cross attention is developed for building augmented high-level feature maps. To avoid the computation and memory cost of fusing pyramid representations with the Transformer, we further introduce the pooling attention module, which significantly reduces memory and time complexity while improving the robustness. Comprehensive experiments on both aerial and prevalent tracking benchmarks achieve competitive results while operating at high speed, demonstrating the effectiveness of SiamTPN. Moreover, our fastest variant tracker operates at over 30 Hz on a single CPU-core and obtains an AUC score of 58.1% on the LaSOT dataset. Source code is available at https://github.com/RISCNYUAD/SiamTPNTracker
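The saving that pooling brings to attention can be sketched in a few lines. This is an illustration under simplifying assumptions, not the SiamTPN module: it uses a single head, no learned projections, and 1D average pooling over a token sequence rather than pooling over 2D feature maps. The helper names `avg_pool`, `softmax`, and `pooled_attention` are hypothetical.

```python
import math


def avg_pool(tokens, window):
    """Average consecutive groups of `window` tokens (last group may be shorter)."""
    chunks = (tokens[i:i + window] for i in range(0, len(tokens), window))
    return [[sum(col) / len(chunk) for col in zip(*chunk)] for chunk in chunks]


def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def pooled_attention(queries, keys, values, window):
    """Scaled dot-product attention with keys and values pooled beforehand.

    For N queries and M keys, the score matrix shrinks from N x M
    to N x ceil(M / window).
    """
    pooled_keys = avg_pool(keys, window)
    pooled_values = avg_pool(values, window)
    scale = 1.0 / math.sqrt(len(queries[0]))
    outputs = []
    for q in queries:
        scores = [scale * sum(a * b for a, b in zip(q, k)) for k in pooled_keys]
        weights = softmax(scores)
        outputs.append([
            sum(w * v[j] for w, v in zip(weights, pooled_values))
            for j in range(len(pooled_values[0]))
        ])
    return outputs


# Hypothetical input: 8 tokens of dimension 2, pooled in groups of 4.
TOKENS = [[float(i), 1.0] for i in range(8)]
POOLED = avg_pool(TOKENS, 4)
OUT = pooled_attention(TOKENS, TOKENS, TOKENS, window=4)
```

Here each of the 8 queries attends to only 2 pooled key/value tokens instead of 8, which is the kind of reduction in memory and time the abstract refers to; the output keeps one vector per query.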

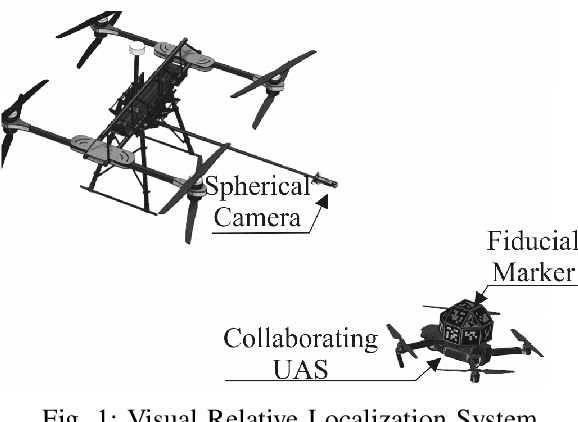

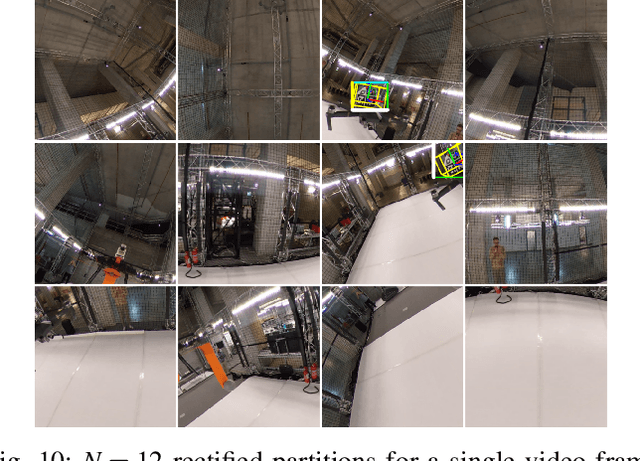

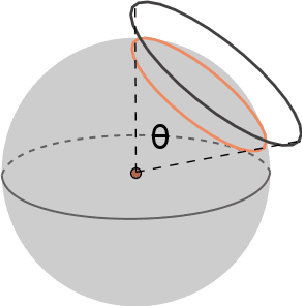

Relative Visual Localization for Unmanned Aerial Systems

Mar 04, 2020

Abstract: Cooperative Unmanned Aerial Systems (UASs) in GPS-denied environments demand an accurate pose-localization system to ensure efficient operation. In this paper, we present a novel visual relative localization system capable of monitoring a 360$^\circ$ Field-of-View (FoV) in the immediate surroundings of the UAS using a spherical camera. Collaborating UASs carry a set of fiducial markers which are detected by the camera-system. The spherical image is partitioned and rectified into a set of square images. An algorithm is proposed to select the number of images that balances the computational load while maintaining a minimum tracking-accuracy level. The developed system tracks UASs in the vicinity of the spherical camera, and experimental studies using two UASs are offered to validate the performance of the relative visual localization against that of a motion capture system.
