Mohammed Korayem

Forecasting Application Counts in Talent Acquisition Platforms: Harnessing Multimodal Signals using LMs

Nov 19, 2024

Abstract:As recruitment and talent acquisition have become more and more competitive, recruitment firms have become more sophisticated in using machine learning (ML) methodologies to optimize their day-to-day activities. However, most published ML-based methodologies in this area have been limited to tasks such as candidate matching, job-to-skill matching, job classification, and normalization. In this work, we discuss a novel task in the recruitment domain, namely application count forecasting, which is motivated by the need to design effective outreach activities that attract qualified applicants. We show that existing auto-regressive time series forecasting methods perform poorly on this task. Hence, we propose a multimodal LM-based model which fuses job-posting metadata of various modalities through a simple encoder. Experiments on large real-life datasets from CareerBuilder LLC show the effectiveness of the proposed method over existing state-of-the-art methods.

Embedding-based Recommender System for Job to Candidate Matching on Scale

Jul 01, 2021

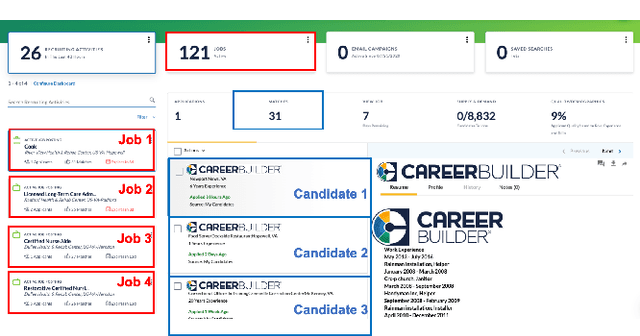

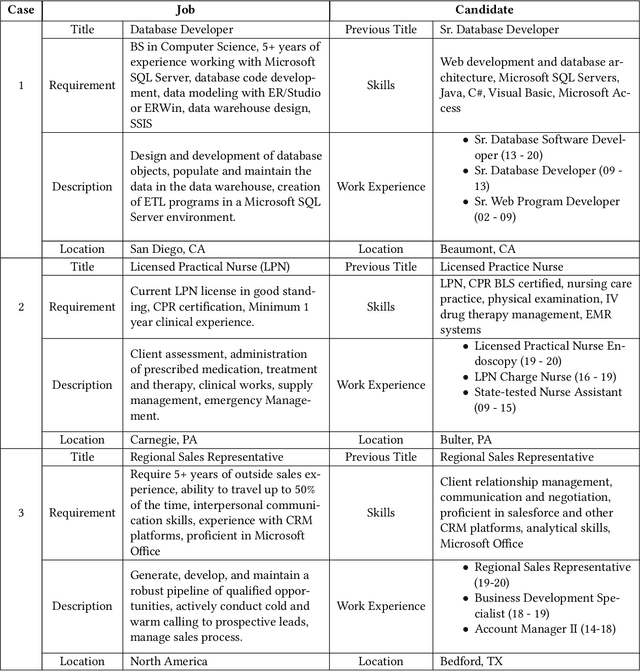

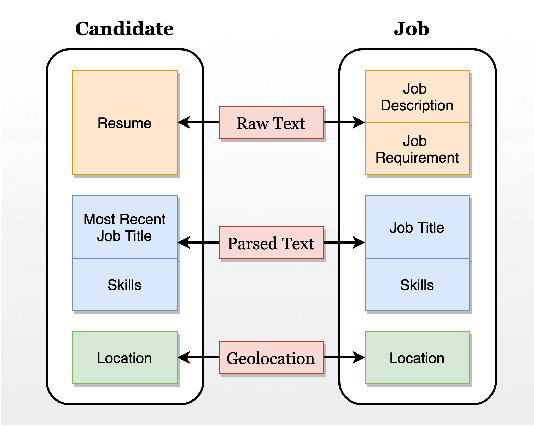

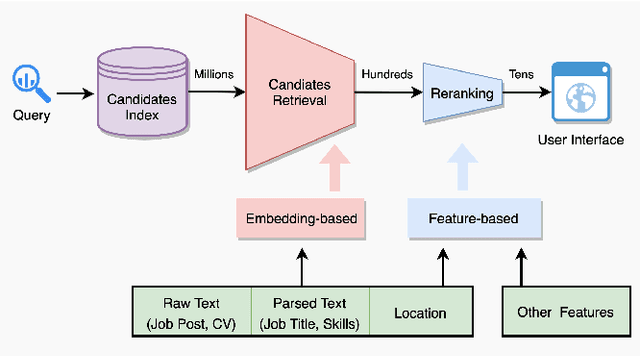

Abstract:The online recruitment matching system is the core technology and service platform at CareerBuilder. One of the major challenges in online recruitment is providing good matches between job posts and candidates using a recommender system at scale. In this paper, we discuss techniques for applying an embedding-based recommender system to large-scale job-to-candidate matching. To learn comprehensive and effective embeddings for job posts and candidates, we construct a fused embedding via different levels of representation learning from raw text, semantic entities, and location information. The clusters of fused embeddings of jobs and candidates are then used to build and train the Faiss index that supports runtime approximate nearest neighbor search for candidate retrieval. After the first stage of candidate retrieval, a second-stage reranking model that utilizes other contextual information generates the final matching result. Both offline and online evaluation results indicate a significant improvement of our proposed two-stage embedding-based system in terms of click-through rate (CTR), quality, and normalized discounted cumulative gain (nDCG), compared to our baseline system. We further describe the deployment of the system, which supports the million-scale job and candidate matching process at CareerBuilder. The overall improvement of our job-to-candidate matching system demonstrates its feasibility and scalability at a major online recruitment site.
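
The two-stage flow described in this abstract (retrieve by embedding similarity, then rerank with contextual signals) can be illustrated with a minimal sketch. Everything here is an assumption made for illustration: fusion is done by plain concatenation, an exact cosine search stands in for the Faiss approximate nearest neighbor index, and `context_score` is a hypothetical lookup table in place of the paper's reranking model.

```python
import math

def cosine(a, b):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0

def fuse(text_vec, entity_vec, location_vec):
    # Assumption: the fused embedding is a plain concatenation of the
    # text, entity, and location vectors; the paper may fuse differently.
    return list(text_vec) + list(entity_vec) + list(location_vec)

def retrieve(job_vec, candidates, k):
    # Stage 1: exact cosine search, used here only as a stand-in for an
    # approximate nearest neighbor index.
    ranked = sorted(candidates.items(),
                    key=lambda item: cosine(job_vec, item[1]),
                    reverse=True)
    return [cand_id for cand_id, _ in ranked[:k]]

def rerank(shortlist, context_score):
    # Stage 2: reorder the shortlist by a hypothetical contextual score.
    return sorted(shortlist,
                  key=lambda cand_id: context_score.get(cand_id, 0.0),
                  reverse=True)

job = fuse([1.0, 0.0], [0.5], [0.2])
candidates = {
    "c1": fuse([0.9, 0.1], [0.5], [0.2]),
    "c2": fuse([0.0, 1.0], [0.1], [0.9]),
    "c3": fuse([1.0, 0.0], [0.4], [0.1]),
}
shortlist = retrieve(job, candidates, k=2)
final = rerank(shortlist, {"c1": 0.3, "c3": 0.8})
```

With this toy data, the first stage drops the dissimilar candidate `c2`, and the second stage is free to reorder the remaining two using information the embeddings do not carry.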

Tripartite Vector Representations for Better Job Recommendation

Jul 23, 2019

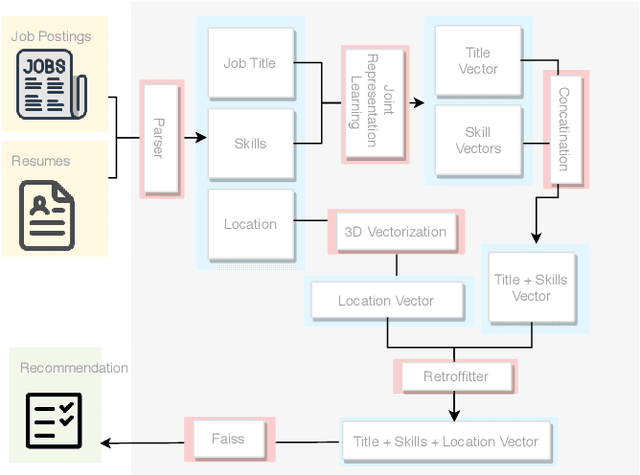

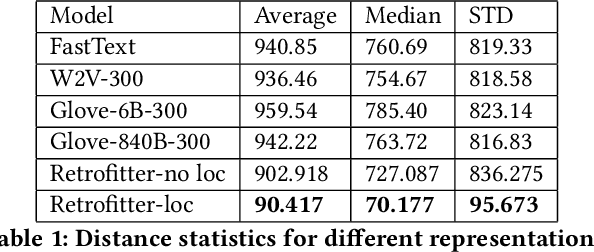

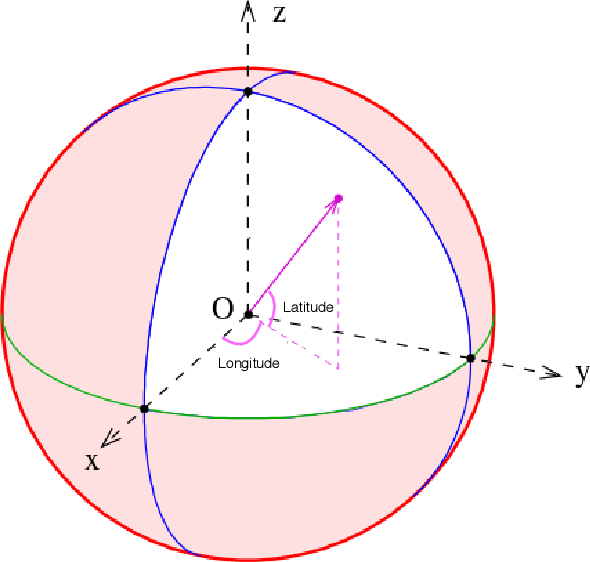

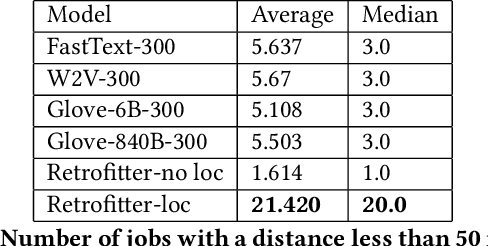

Abstract:Job recommendation is a crucial part of the online job recruitment business. To match the right person with the right job, a good representation of job postings is required. Such representations should ideally recommend jobs with fitting titles, aligned skill sets, and a reasonable commute. To address these aspects, we utilize three information graphs (job-job, skill-skill, job-skill) from historical job data to learn a joint representation for both job titles and skills in a shared latent space. This allows us to obtain a representation of job postings/resumes using both elements, which can subsequently be combined with location. In this paper, we first present how the representation of each component is obtained, and then we discuss how these different representations are combined into one single space to obtain the final representation. A comparison of the proposed methodology against different baseline methods shows significant improvement in terms of relevancy.

Automated Discovery and Classification of Training Videos for Career Progression

Jul 23, 2019

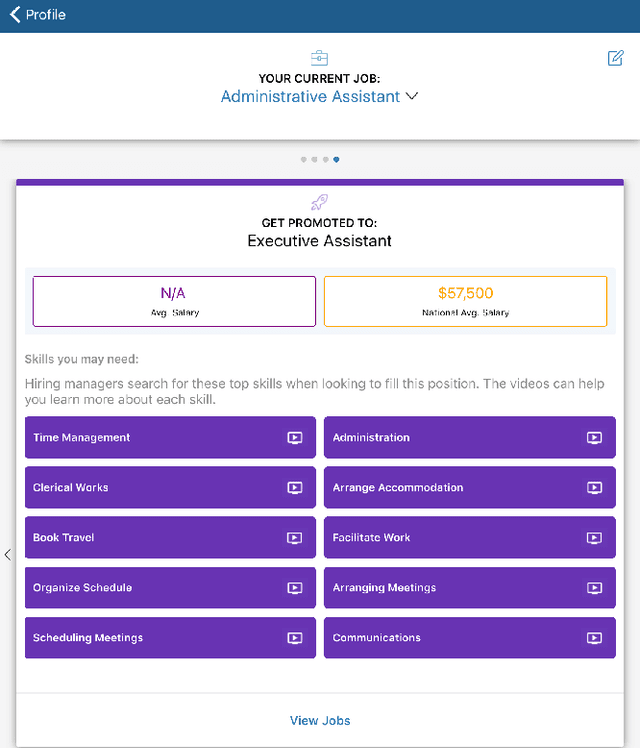

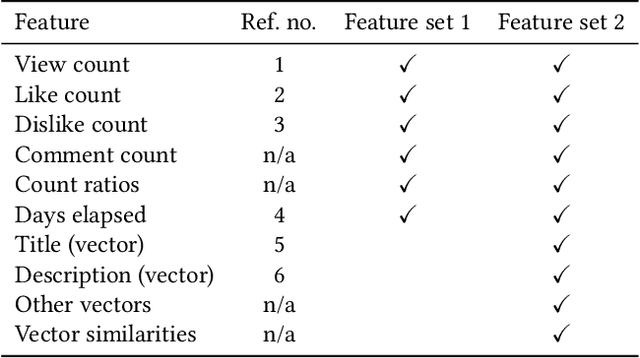

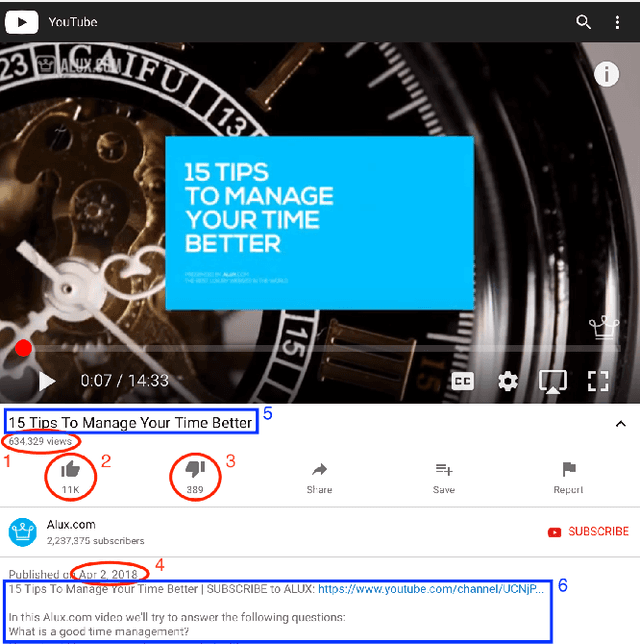

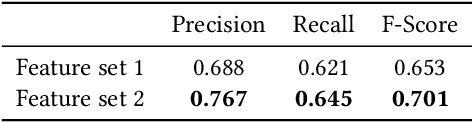

Abstract:Job transitions and upskilling are common actions taken by many working professionals throughout their careers. With the current rapidly changing job landscape, where requirements are constantly changing and industry sectors are emerging, it is especially difficult to plan and navigate a predetermined career path. In this work, we implemented a system to automate the collection and classification of training videos to help job seekers identify and acquire the skills necessary to transition to the next step in their career. We extracted educational videos and built a machine learning classifier to predict video relevancy. This system allows us to discover relevant videos at a large scale for job title-skill pairs. Our experiments show significant improvements in model performance when embedding vectors associated with the video attributes are incorporated. Additionally, we evaluated the optimal probability threshold to extract as many videos as possible with a minimal false positive rate.
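
The threshold trade-off mentioned at the end of this abstract can be sketched as follows. This is an illustrative reading, not the authors' procedure: it assumes a labeled validation set, a hypothetical false positive budget `max_fpr`, and it picks the lowest threshold that stays within that budget so that as many videos as possible are kept.

```python
def false_positive_rate(probs, labels, threshold):
    """Share of irrelevant videos (label 0) that would still be extracted."""
    false_positives = sum(1 for p, y in zip(probs, labels)
                          if p >= threshold and y == 0)
    negatives = sum(1 for y in labels if y == 0)
    return false_positives / negatives if negatives else 0.0

def pick_threshold(probs, labels, max_fpr):
    # Candidate thresholds are the observed scores, tried in ascending order.
    # The false positive rate can only fall as the threshold rises, so the
    # first threshold within budget keeps the largest number of videos.
    for threshold in sorted(set(probs)):
        if false_positive_rate(probs, labels, threshold) <= max_fpr:
            return threshold
    return None

# Hypothetical classifier scores and relevance labels for six videos.
probs = [0.95, 0.9, 0.8, 0.6, 0.4, 0.2]
labels = [1, 1, 0, 1, 0, 0]
chosen = pick_threshold(probs, labels, max_fpr=0.34)
kept = [p for p in probs if p >= chosen]
```

In this toy example a budget of 0.34 admits one false positive out of three irrelevant videos, which lets four videos through; a budget of zero would push the threshold up and keep only two.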

Solving Cold-Start Problem in Large-scale Recommendation Engines: A Deep Learning Approach

Nov 16, 2016

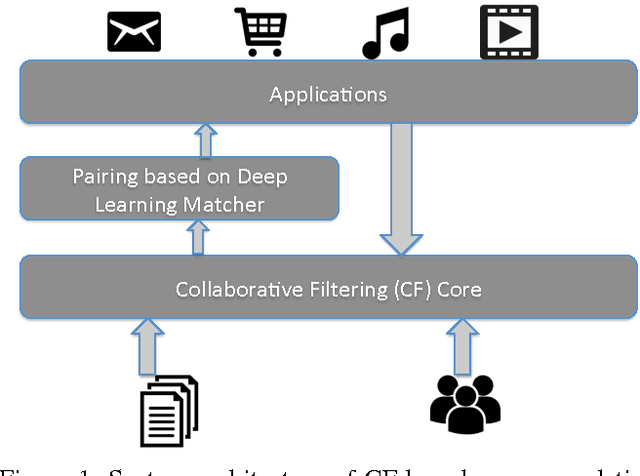

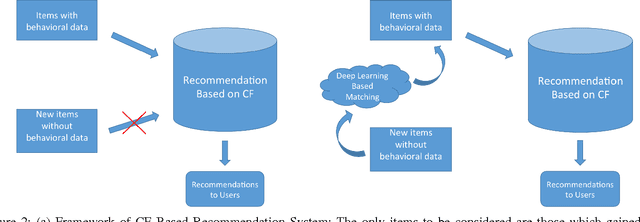

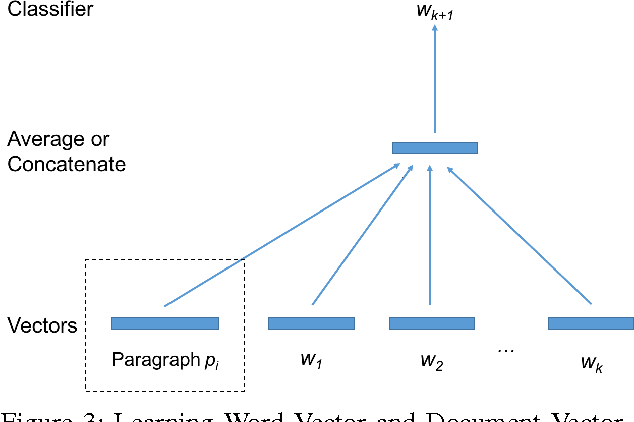

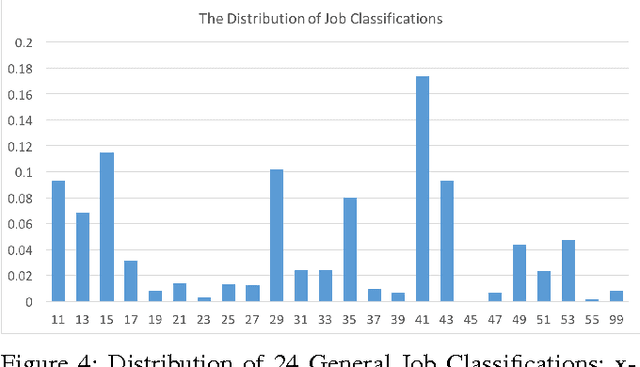

Abstract:Collaborative Filtering (CF) is widely used in large-scale recommendation engines because of its efficiency, accuracy, and scalability. However, in practice, recommendation engines based on CF require interactions between users and items before making recommendations, which makes CF inappropriate for new items that have not yet been exposed to end users. This is known as the cold-start problem. In this paper, we introduce a novel approach which employs deep learning to tackle this problem in any CF-based recommendation engine. One of the most important features of the proposed technique is that it can be applied on top of any existing CF-based recommendation engine without changing the CF core. We successfully applied this technique to overcome the item cold-start problem in CareerBuilder's CF-based recommendation engine. Our experiments show that the proposed technique resolves the cold-start problem very efficiently while maintaining the high accuracy of the CF recommendations.

Macro-optimization of email recommendation response rates harnessing individual activity levels and group affinity trends

Sep 20, 2016

Abstract:Recommendation emails are among the best ways to re-engage with customers after they have left a website. While on-site recommendation systems focus on finding the most relevant items for a user at the moment (right item), email recommendations add two critical additional dimensions: who to send recommendations to (right person) and when to send them (right time). It is critical that a recommendation email system not send too many emails to too many users in too short of a time-window, as users may unsubscribe from future emails or become desensitized and ignore future emails if they receive too many. Also, email service providers may mark such emails as spam if too many of their users are contacted in a short time-window. Optimizing email recommendation systems such that they can yield a maximum response rate for a minimum number of email sends is thus critical for the long-term performance of such a system. In this paper, we present a novel recommendation email system that not only generates recommendations, but which also leverages a combination of individual user activity data, as well as the behavior of the group to which they belong, in order to determine each user's likelihood to respond to any given set of recommendations within a given time period. In doing this, we have effectively created a meta-recommendation system which recommends sets of recommendations in order to optimize the aggregate response rate of the entire system. The proposed technique has been applied successfully within CareerBuilder's job recommendation email system to generate a 50% increase in total conversions while also decreasing sent emails by 72%.

* This version was accepted as a regular paper at the 15th IEEE International Conference on Machine Learning and Applications (IEEE ICMLA'16). Pre-camera-ready version.

The Semantic Knowledge Graph: A compact, auto-generated model for real-time traversal and ranking of any relationship within a domain

Sep 05, 2016

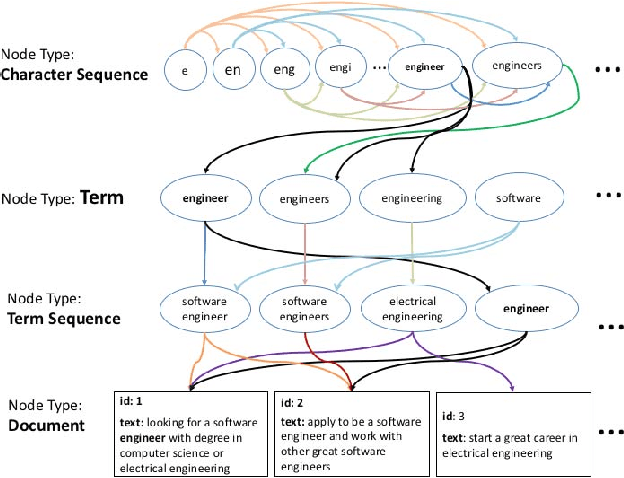

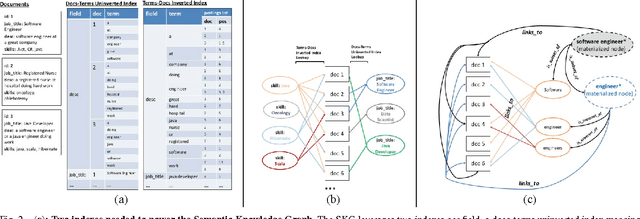

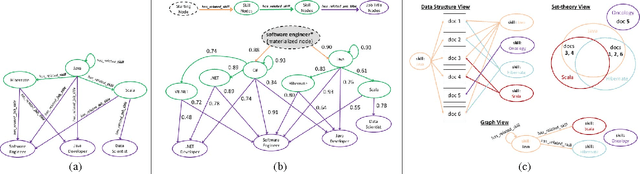

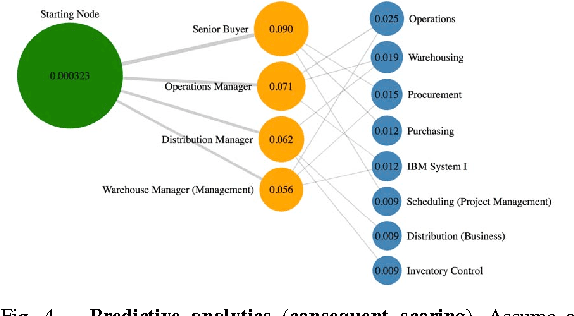

Abstract:This paper describes a new kind of knowledge representation and mining system which we are calling the Semantic Knowledge Graph. At its heart, the Semantic Knowledge Graph leverages an inverted index, along with a complementary uninverted index, to represent nodes (terms) and edges (the documents within intersecting postings lists for multiple terms/nodes). This provides a layer of indirection between each pair of nodes and their corresponding edge, enabling edges to materialize dynamically from underlying corpus statistics. As a result, any combination of nodes can have edges to any other nodes materialize and be scored to reveal latent relationships between the nodes. This provides numerous benefits: the knowledge graph can be built automatically from a real-world corpus of data, new nodes - along with their combined edges - can be instantly materialized from any arbitrary combination of preexisting nodes (using set operations), and a full model of the semantic relationships between all entities within a domain can be represented and dynamically traversed using a highly compact representation of the graph. Such a system has widespread applications in areas as diverse as knowledge modeling and reasoning, natural language processing, anomaly detection, data cleansing, semantic search, analytics, data classification, root cause analysis, and recommendation systems. The main contribution of this paper is the introduction of a novel system - the Semantic Knowledge Graph - which is able to dynamically discover and score interesting relationships between any arbitrary combination of entities (words, phrases, or extracted concepts) through dynamically materializing nodes and edges from a compact graphical representation built automatically from a corpus of data representative of a knowledge domain.
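
A minimal sketch of the core idea, in which terms act as nodes and an edge between two terms is the set of documents shared by their postings lists, might look like the following. The toy corpus is invented, and the `relatedness` score is a simple co-occurrence lift chosen for illustration; it is an assumption and not the scoring function used in the paper.

```python
from collections import defaultdict

def build_inverted_index(docs):
    """Map each term to its postings list (the set of documents containing it)."""
    index = defaultdict(set)
    for doc_id, terms in docs.items():
        for term in terms:
            index[term].add(doc_id)
    return index

def edge(index, term_a, term_b):
    # An edge is materialized on demand as the intersection of two postings lists.
    return index.get(term_a, set()) & index.get(term_b, set())

def relatedness(index, term_a, term_b, n_docs):
    # Assumption: lift = P(a, b) / (P(a) * P(b)); values above 1 mean the terms
    # co-occur more often than independence would predict.
    count_a = len(index.get(term_a, set()))
    count_b = len(index.get(term_b, set()))
    if not count_a or not count_b:
        return 0.0
    return len(edge(index, term_a, term_b)) * n_docs / (count_a * count_b)

# The docs mapping itself plays the role of the uninverted (document -> terms) view.
docs = {
    1: {"java", "developer", "spring"},
    2: {"java", "developer"},
    3: {"nurse", "hospital"},
    4: {"developer", "python"},
}
index = build_inverted_index(docs)

shared = edge(index, "java", "developer")
# A combined node ("java" AND "developer") is just another document set, so it
# can be intersected with further postings lists without any precomputation.
combined_to_spring = shared & index["spring"]
```

Because nothing about an edge is stored ahead of time, any pair or combination of terms can be related at query time from the same compact index.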

Sentiment/Subjectivity Analysis Survey for Languages other than English

Aug 25, 2016

Abstract:Subjectivity and sentiment analysis have gained considerable attention recently. Most of the resources and systems built so far have been developed for English, and the need for systems in other languages is increasing. This paper surveys the different approaches used to build subjectivity and sentiment analysis systems for languages other than English. Three types of approaches are used to build these systems. The first (and the best) is language-specific systems. The second involves reusing or transferring sentiment resources from English to the target language. The third is based on language-independent methods. The paper presents a separate section devoted to Arabic sentiment analysis.

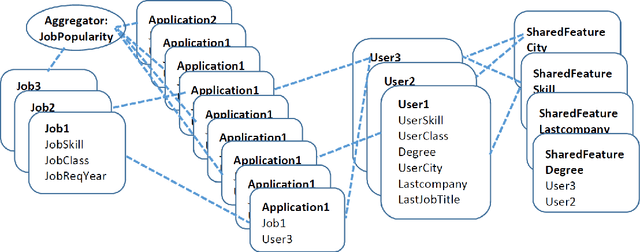

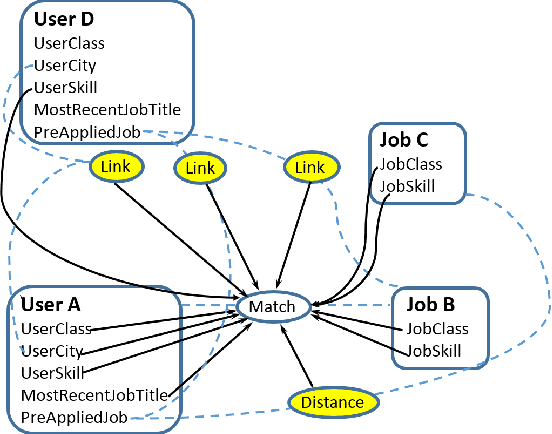

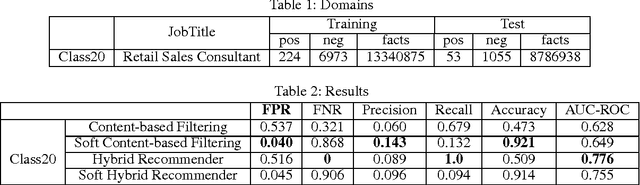

Application of Statistical Relational Learning to Hybrid Recommendation Systems

Jul 04, 2016

Abstract:Recommendation systems usually involve exploiting the relations among known features and content that describe items (content-based filtering) or the overlap of similar users who interacted with or rated the target item (collaborative filtering). To combine these two filtering approaches, current model-based hybrid recommendation systems typically require extensive feature engineering to construct a user profile. Statistical Relational Learning (SRL) provides a straightforward way to combine the two approaches. However, due to the large scale of the data used in real-world recommendation systems, little research exists on applying SRL models to hybrid recommendation systems, and essentially none of that research has been applied to real big-data-scale systems. In this paper, we propose a way to adapt state-of-the-art SRL learning approaches to construct a real hybrid recommendation system. Furthermore, in order to satisfy a common requirement in recommendation systems (i.e., that false positives are more undesirable and therefore penalized more harshly than false negatives), our approach also allows tuning the trade-off between the precision and recall of the system in a principled way. Our experimental results demonstrate the efficiency of our proposed approach as well as its improved performance on recommendation precision.

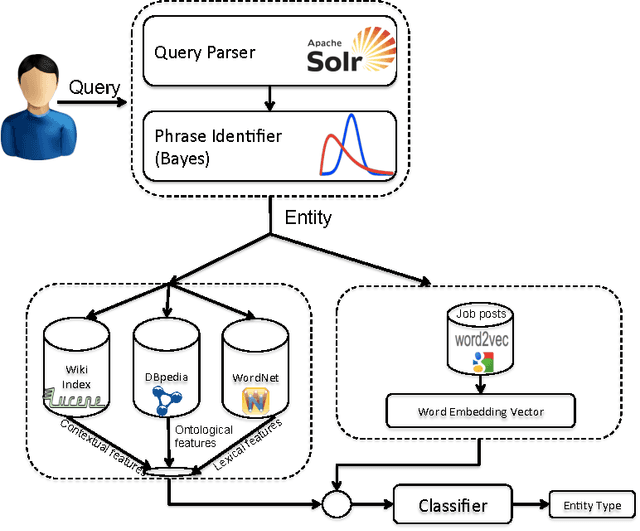

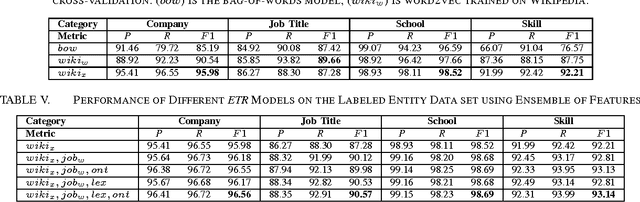

Entity Type Recognition using an Ensemble of Distributional Semantic Models to Enhance Query Understanding

Apr 04, 2016

Abstract:We present an ensemble approach for categorizing search query entities in the recruitment domain. Understanding the types of entities expressed in a search query (Company, Skill, Job Title, etc.) enables more intelligent information retrieval based upon those entities compared to a traditional keyword-based search. Because search queries are typically very short, leveraging a traditional bag-of-words model to identify entity types would be inappropriate due to the lack of contextual information. Our approach instead combines clues from different sources of varying complexity in order to collect real-world knowledge about query entities. We employ distributional semantic representations of query entities through two models: 1) contextual vectors generated from encyclopedic corpora like Wikipedia, and 2) high-dimensional word embedding vectors generated from millions of job postings using word2vec. Additionally, our approach utilizes both entity linguistic properties obtained from WordNet and ontological properties extracted from DBpedia. We evaluate our approach on a data set created at CareerBuilder, the largest job board in the US. The data set contains entities extracted from millions of job seeker and recruiter search queries, job postings, and resume documents. After constructing the distributional vectors of search entities, we use supervised machine learning to infer search entity types. Empirical results show that our approach outperforms the state-of-the-art word2vec distributional semantics model trained on Wikipedia. Moreover, we achieve a micro-averaged F1 score of 97% using the proposed distributional representations ensemble.
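
For readers unfamiliar with the reported metric, the sketch below shows how a micro-averaged F1 score is computed: true positives, false positives, and false negatives are pooled across all entity types before precision and recall are taken. The gold and predicted labels are invented toy data, not results from the paper.

```python
def micro_f1(gold, pred, labels):
    """Micro-averaged F1: pool per-label counts, then compute a single F1."""
    tp = fp = fn = 0
    for label in labels:
        for g, p in zip(gold, pred):
            if p == label and g == label:
                tp += 1          # predicted this type and it was correct
            elif p == label:
                fp += 1          # predicted this type but the gold type differs
            elif g == label:
                fn += 1          # gold is this type but something else was predicted
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)

# Hypothetical entity types for four query entities.
labels = ["Company", "Skill", "Job Title"]
gold = ["Skill", "Company", "Job Title", "Skill"]
pred = ["Skill", "Skill", "Job Title", "Skill"]
score = micro_f1(gold, pred, labels)
```

When every entity receives exactly one predicted type, as in this toy example, the micro-averaged F1 coincides with plain accuracy (three of four correct here).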
