Mihajlo Velimirović

The CARFAC v2 Cochlear Model in Matlab, NumPy, and JAX

Apr 26, 2024

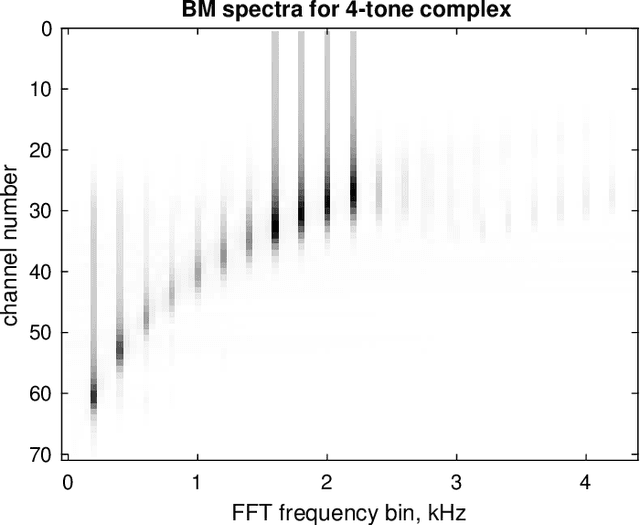

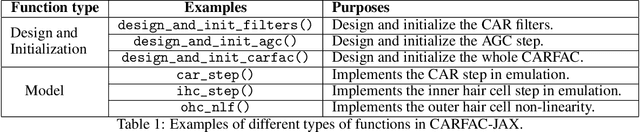

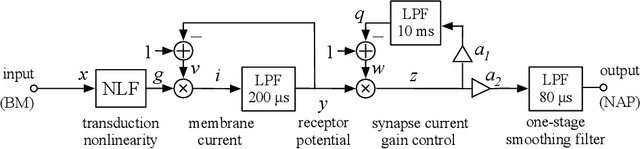

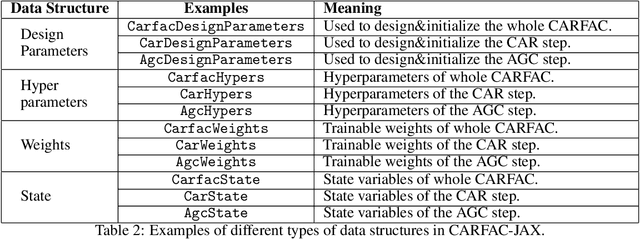

Abstract: The open-source CARFAC (Cascade of Asymmetric Resonators with Fast-Acting Compression) cochlear model is upgraded to version 2, with improvements to the Matlab implementation and with new Python/NumPy and JAX implementations; the corresponding C++ changes are still pending. One change addresses the previously reported DC (direct current, or zero frequency) quadratic distortion anomaly; another reduces the neural synchrony at high frequencies; the others have little or no noticeable effect in the default configuration. A new feature allows modeling a reduction of cochlear amplifier function, as a step toward a differentiable parameterized model of hearing impairment. In addition, the integration into the Auditory Model Toolbox (AMT) has been extensively improved, as the prior integration had bugs that made it unsuitable for including CARFAC in multi-model comparisons.

AudioPaLM: A Large Language Model That Can Speak and Listen

Jun 22, 2023

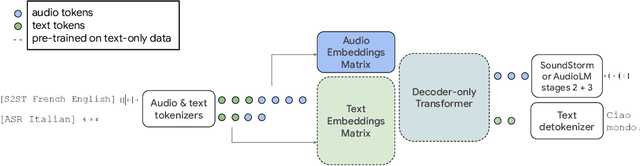

Abstract: We introduce AudioPaLM, a large language model for speech understanding and generation. AudioPaLM fuses text-based and speech-based language models, PaLM-2 [Anil et al., 2023] and AudioLM [Borsos et al., 2022], into a unified multimodal architecture that can process and generate text and speech with applications including speech recognition and speech-to-speech translation. AudioPaLM inherits the capability to preserve paralinguistic information such as speaker identity and intonation from AudioLM and the linguistic knowledge present only in text large language models such as PaLM-2. We demonstrate that initializing AudioPaLM with the weights of a text-only large language model improves speech processing, successfully leveraging the larger quantity of text training data used in pretraining to assist with the speech tasks. The resulting model significantly outperforms existing systems for speech translation tasks and has the ability to perform zero-shot speech-to-text translation for many languages for which input/target language combinations were not seen in training. AudioPaLM also demonstrates features of audio language models, such as transferring a voice across languages based on a short spoken prompt. We release examples of our method at https://google-research.github.io/seanet/audiopalm/examples

SPICE: Self-supervised Pitch Estimation

Oct 25, 2019

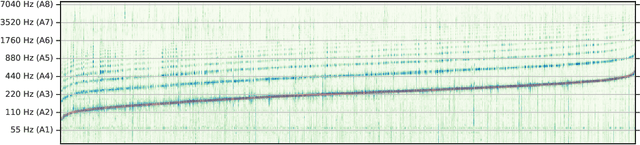

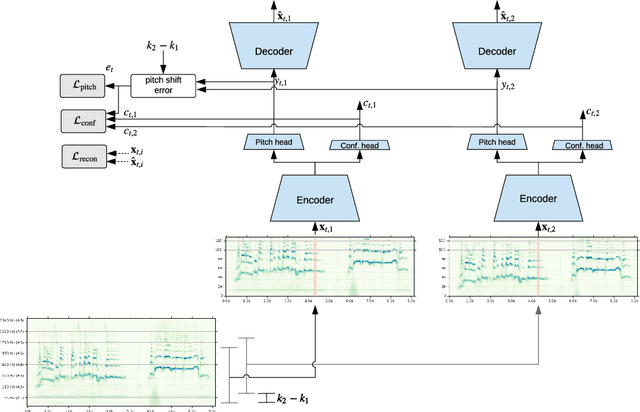

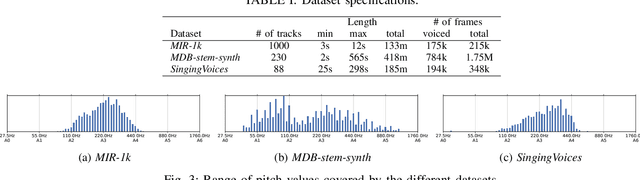

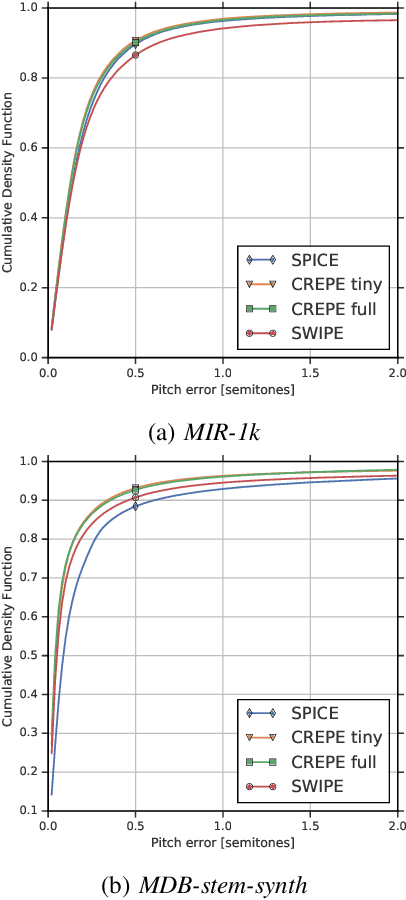

Abstract: We propose a model to estimate the fundamental frequency in monophonic audio, often referred to as pitch estimation. We acknowledge the fact that obtaining ground truth annotations at the required temporal and frequency resolution is a particularly daunting task. Therefore, we propose to adopt a self-supervised learning technique, which is able to estimate (relative) pitch without any form of supervision. The key observation is that pitch shift maps to a simple translation when the audio signal is analysed through the lens of the constant-Q transform (CQT). We design a self-supervised task by feeding two shifted slices of the CQT to the same convolutional encoder, and require that the difference in the outputs is proportional to the corresponding difference in pitch. In addition, we introduce a small model head on top of the encoder, which is able to determine the confidence of the pitch estimate, so as to distinguish between voiced and unvoiced audio. Our results show that the proposed method is able to estimate pitch at a level of accuracy comparable to fully supervised models, both on clean and noisy audio samples, yet it does not require access to large labeled datasets.
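The two ideas in this abstract can be illustrated with a minimal NumPy sketch. It is not the authors' implementation: the helper names `cqt_bin` and `relative_pitch_loss`, the values of `f_min` and `bins_per_octave`, the scale factor `sigma`, and the choice of a Huber penalty are all assumptions made for illustration. The first function shows why a pitch shift becomes a constant translation on a log-frequency (CQT-style) axis; the second penalizes any mismatch between the encoder output difference and the known bin shift between two slices.

```python
import numpy as np


def cqt_bin(freq_hz, f_min=32.7, bins_per_octave=24):
    """Fractional bin index of a frequency on a log-frequency (CQT-style) axis.

    f_min and bins_per_octave are illustrative values, not taken from the paper.
    """
    return bins_per_octave * np.log2(np.asarray(freq_hz, dtype=float) / f_min)


def relative_pitch_loss(y1, y2, k1, k2, sigma, delta=1.0):
    """Huber penalty on (y1 - y2) - sigma * (k1 - k2).

    y1, y2: encoder outputs for two slices of the same frame (hypothetical).
    k1, k2: known bin offsets at which the slices were taken.
    sigma:  assumed proportionality constant between output and bin shift.
    """
    err = (np.asarray(y1, dtype=float) - np.asarray(y2, dtype=float)) - sigma * (
        np.asarray(k1, dtype=float) - np.asarray(k2, dtype=float)
    )
    abs_err = np.abs(err)
    quadratic = 0.5 * err**2                  # used where |err| <= delta
    linear = delta * (abs_err - 0.5 * delta)  # used where |err| > delta
    return float(np.mean(np.where(abs_err <= delta, quadratic, linear)))


# Harmonics of a 220 Hz tone, then the same tone shifted up by 3 semitones.
harmonics = 220.0 * np.arange(1, 5)
shifted = harmonics * 2.0 ** (3 / 12)

# Every harmonic moves by the same number of bins: a pure translation.
bin_shift = cqt_bin(shifted) - cqt_bin(harmonics)
```

With 24 bins per octave, a shift of 3 semitones moves every harmonic by the same 6 bins, which is what lets the known slice offset act as a training signal without pitch labels.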

Now Playing: Continuous low-power music recognition

Nov 29, 2017

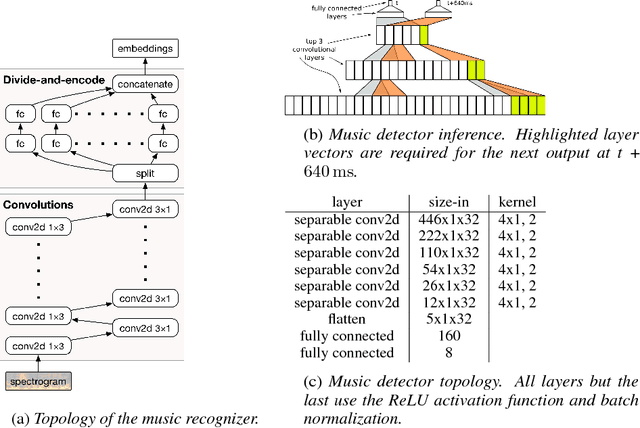

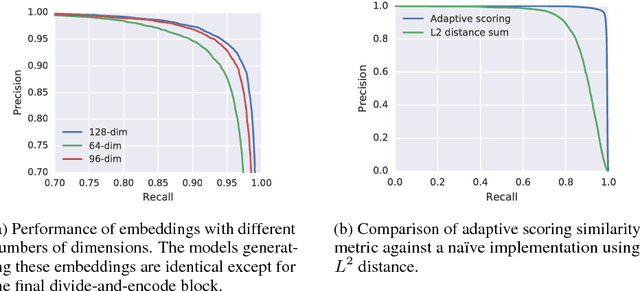

Abstract: Existing music recognition applications require a connection to a server that performs the actual recognition. In this paper we present a low-power music recognizer that runs entirely on a mobile device and automatically recognizes music without user interaction. To reduce battery consumption, a small music detector runs continuously on the mobile device's DSP chip and wakes up the main application processor only when it is confident that music is present. Once woken, the recognizer on the application processor is provided with a few seconds of audio, which is fingerprinted and compared to the stored fingerprints in the on-device fingerprint database of tens of thousands of songs. Our presented system, Now Playing, has a daily battery usage of less than 1% on average, respects user privacy by running entirely on-device, and can passively recognize a wide range of music.
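The two-stage flow described in this abstract (a cheap detector gating a more expensive fingerprint lookup) might be sketched as follows. Everything here is hypothetical: the function names, the thresholds, the use of fixed-length vectors as fingerprints, and the Euclidean nearest-neighbour search are assumptions for illustration, not the system's actual design.

```python
import numpy as np


def should_wake(music_score, threshold=0.9):
    """Stage 1 (hypothetical): wake the recognizer only on a confident detection."""
    return music_score >= threshold


def best_match(query, database, max_distance=0.5):
    """Stage 2 (hypothetical): nearest stored fingerprint by Euclidean distance.

    query:    1-D fingerprint vector for the captured audio.
    database: 2-D array with one stored fingerprint per row.
    Returns (row_index, distance), or None if nothing is close enough.
    """
    dists = np.linalg.norm(database - query, axis=1)
    idx = int(np.argmin(dists))
    if dists[idx] > max_distance:
        return None
    return idx, float(dists[idx])


# Toy database of four "songs" with 4-dimensional fingerprints.
database = np.eye(4)
noisy_query = database[2] + 0.01       # slightly perturbed copy of song 2
unknown_query = np.full(4, 10.0)       # far from every stored fingerprint

match = best_match(noisy_query, database) if should_wake(0.95) else None
no_match = best_match(unknown_query, database)
```

The distance cutoff is what lets the lookup report "no match" for audio that is not in the database, rather than always returning the closest song.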
