Mengyao Zheng

Enhancing Exchange Rate Forecasting with Explainable Deep Learning Models

Oct 25, 2024

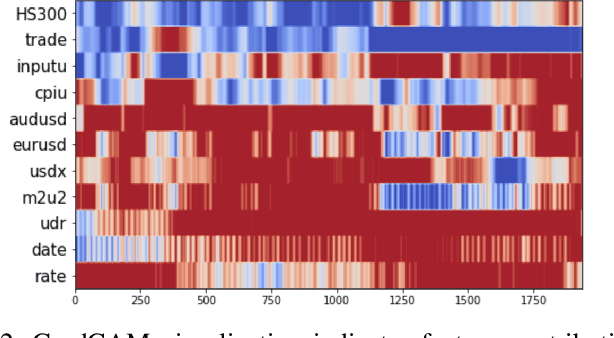

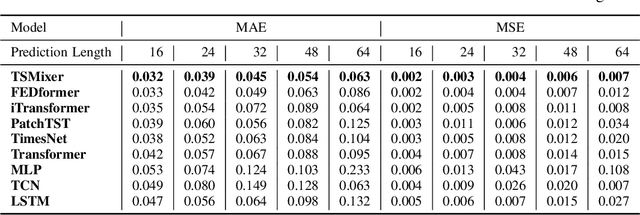

Abstract:Accurate exchange rate prediction is fundamental to financial stability and international trade, positioning it as a critical focus in economic and financial research. Traditional forecasting models often falter when addressing the inherent complexities and non-linearities of exchange rate data. This study explores the application of advanced deep learning models, including LSTM, CNN, and transformer-based architectures, to enhance the predictive accuracy of the RMB/USD exchange rate. Utilizing 40 features across 6 categories, the analysis identifies TSMixer as the most effective model for this task. A rigorous feature selection process emphasizes the inclusion of key economic indicators, such as China-U.S. trade volumes and exchange rates of other major currencies like the euro-RMB and yen-dollar pairs. The integration of grad-CAM visualization techniques further enhances model interpretability, allowing for clearer identification of the most influential features and bolstering the credibility of the predictions. These findings underscore the pivotal role of fundamental economic data in exchange rate forecasting and highlight the substantial potential of machine learning models to deliver more accurate and reliable predictions, thereby serving as a valuable tool for financial analysis and decision-making.

Evaluating Modern Approaches in 3D Scene Reconstruction: NeRF vs Gaussian-Based Methods

Aug 08, 2024

Abstract:Exploring the capabilities of Neural Radiance Fields (NeRF) and Gaussian-based methods in the context of 3D scene reconstruction, this study contrasts these modern approaches with traditional Simultaneous Localization and Mapping (SLAM) systems. Utilizing datasets such as Replica and ScanNet, we assess performance based on tracking accuracy, mapping fidelity, and view synthesis. Findings reveal that NeRF excels in view synthesis, offering unique capabilities in generating new perspectives from existing data, albeit at slower processing speeds. Conversely, Gaussian-based methods provide rapid processing and significant expressiveness but lack comprehensive scene completion. Enhanced by global optimization and loop closure techniques, newer methods like NICE-SLAM and SplaTAM not only surpass older frameworks such as ORB-SLAM2 in terms of robustness but also demonstrate superior performance in dynamic and complex environments. This comparative analysis bridges theoretical research with practical implications, shedding light on future developments in robust 3D scene reconstruction across various real-world applications.

Lightweight and Unobtrusive Privacy Preservation for Remote Inference via Edge Data Obfuscation

Dec 20, 2019

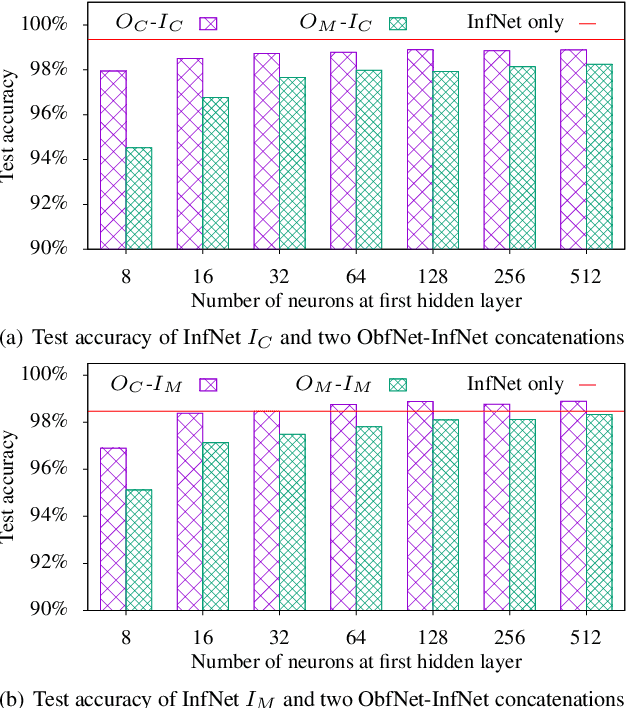

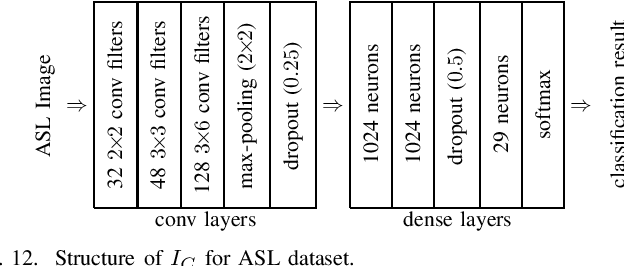

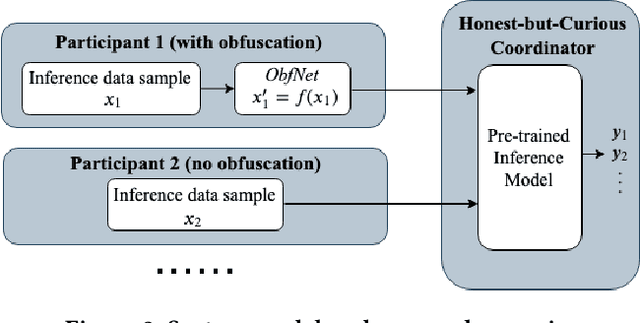

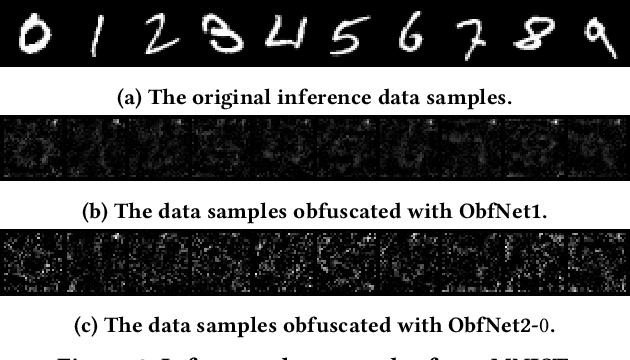

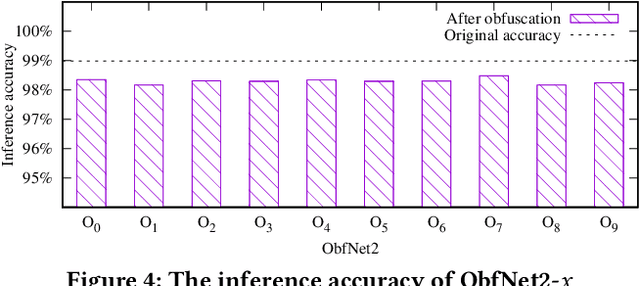

Abstract:The growing momentum of instrumenting the Internet of Things (IoT) with advanced machine learning techniques such as deep neural networks (DNNs) faces two practical challenges: the limited compute power of edge devices and the need to protect the confidentiality of the DNNs. A remote inference scheme that executes the DNNs on a server-class or cloud backend can address both challenges. However, it raises the concern of leaking the privacy of the IoT devices' users to a curious backend, since user-generated or user-related data must be transmitted to the backend. This work develops a lightweight and unobtrusive approach to obfuscate the data before it is transmitted to the backend for remote inference. In this approach, the edge device only needs to execute a small-scale neural network, incurring light compute overhead. Moreover, the edge device does not need to inform the backend whether the data is obfuscated, making the protection unobtrusive. We apply the approach to three case studies: free spoken digit recognition, handwritten digit recognition, and American sign language recognition. The evaluation results obtained from the case studies show that our approach prevents the backend from obtaining the raw forms of the inference data while maintaining the DNN's inference accuracy at the backend.

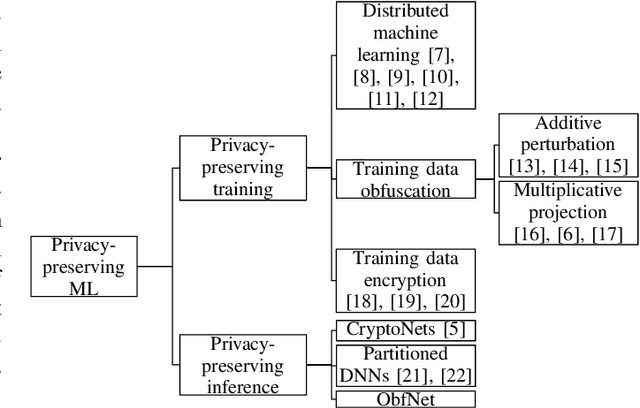

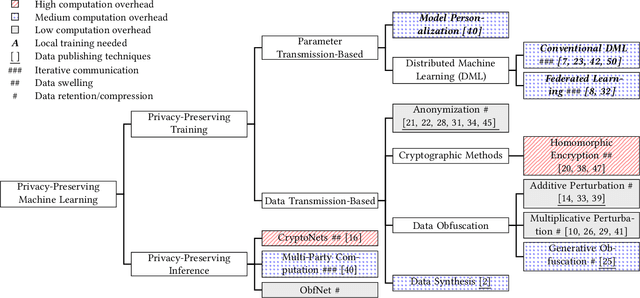

Challenges of Privacy-Preserving Machine Learning in IoT

Sep 21, 2019

Abstract:The Internet of Things (IoT) will be a main data generation infrastructure for achieving better system intelligence. However, the extensive data collection and processing in IoT also engender various privacy concerns. This paper provides a taxonomy of the existing privacy-preserving machine learning approaches developed in the context of cloud computing and discusses the challenges of applying them in the context of IoT. Moreover, we present a privacy-preserving inference approach that runs a lightweight neural network at IoT objects to obfuscate the data before transmission and a deep neural network in the cloud to classify the obfuscated data. Evaluation based on the MNIST dataset shows satisfactory performance.
