Lightweight and Unobtrusive Privacy Preservation for Remote Inference via Edge Data Obfuscation


Dec 20, 2019

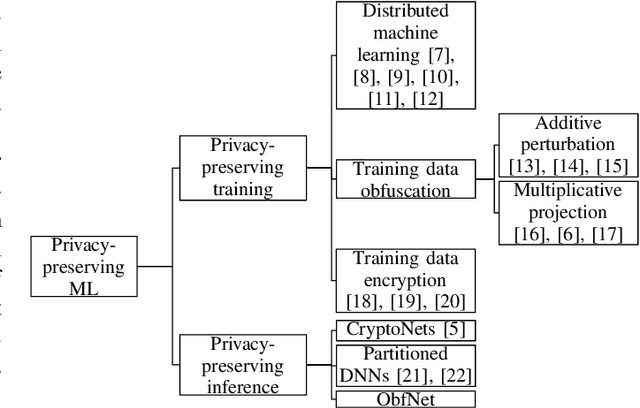

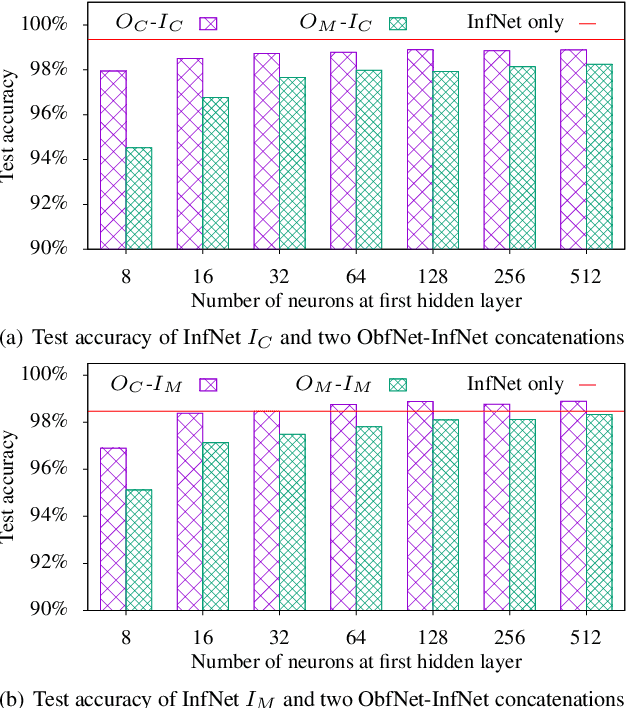

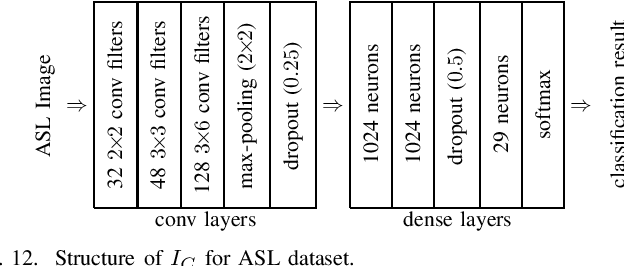

The growing momentum of instrumenting the Internet of Things (IoT) with advanced machine learning techniques such as deep neural networks (DNNs) faces two practical challenges: the limited compute power of edge devices and the need to protect the confidentiality of the DNNs. A remote inference scheme that executes the DNNs on a server-class or cloud backend can address both challenges. However, it raises the concern of exposing the private information of IoT device users to a curious backend, since user-generated or user-related data must be transmitted to the backend. This work develops a lightweight and unobtrusive approach that obfuscates the data before it is transmitted to the backend for remote inference. In this approach, the edge device only needs to execute a small-scale neural network, which incurs light compute overhead. Moreover, the edge device does not need to inform the backend whether the data is obfuscated, which makes the protection unobtrusive. We apply the approach to three case studies: free spoken digit recognition, handwritten digit recognition, and American sign language recognition. The evaluation results from the case studies show that our approach prevents the backend from obtaining the raw forms of the inference data while maintaining the DNN's inference accuracy at the backend.
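The data flow described above can be illustrated with a minimal sketch. It is not the paper's implementation: the class name `EdgeObfuscator`, the layer sizes, the `tanh` activation, and the randomly initialized weights are all assumptions made for illustration. In particular, the abstract does not say how the obfuscation network is designed or trained so that backend accuracy is preserved, and random weights as used here would not achieve that. The sketch only shows the shape of the idea: a small network runs on the edge device, and its output has the same shape as the raw input, so the backend interface does not need to change.

```python
import numpy as np


class EdgeObfuscator:
    """Hypothetical small obfuscation network run on the edge device."""

    def __init__(self, input_dim, hidden_dim=32, seed=0):
        rng = np.random.default_rng(seed)
        # Assumption: the weights stay private to the edge device.
        # They are scaled by 1/sqrt(fan_in) to keep activations in a stable range.
        self.w1 = rng.normal(0.0, 1.0 / np.sqrt(input_dim), (input_dim, hidden_dim))
        self.b1 = np.zeros(hidden_dim)
        self.w2 = rng.normal(0.0, 1.0 / np.sqrt(hidden_dim), (hidden_dim, input_dim))
        self.b2 = np.zeros(input_dim)

    def obfuscate(self, x):
        """Map a batch of samples to obfuscated samples of the same shape."""
        x = np.asarray(x, dtype=np.float64)
        flat = x.reshape(x.shape[0], -1)            # (batch, input_dim)
        hidden = np.tanh(flat @ self.w1 + self.b1)  # one small hidden layer
        out = hidden @ self.w2 + self.b2            # back to input_dim
        return out.reshape(x.shape)                 # same shape as the raw data


# Usage: obfuscate a batch of four 28x28 inputs before transmission.
raw_batch = np.random.default_rng(1).random((4, 28, 28))
obfuscator = EdgeObfuscator(input_dim=28 * 28)
payload = obfuscator.obfuscate(raw_batch)  # this, not raw_batch, leaves the device
```

Because the payload has the same shape as the raw data, the backend can feed it to its DNN without being told whether obfuscation was applied, which is the sense in which the scheme is described as unobtrusive.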
