Matteo De Carlo

Emergence of specialized Collective Behaviors in Evolving Heterogeneous Swarms

Feb 07, 2024

Abstract:Natural groups of animals, such as swarms of social insects, exhibit astonishing degrees of task specialization, which helps them address complex tasks and survive. This is supported by phenotypic plasticity: individuals share the same genotype, but it is expressed differently in different classes of individuals, each specializing in one task. In this work, we evolve a swarm of simulated robots with phenotypic plasticity to study the emergence of specialized collective behavior during an emergent perception task. Phenotypic plasticity is realized as behavioral heterogeneity by dividing the genotype into two components, each associated with a different neural network controller. The whole genotype, which expresses the behavior of the whole group through its two components, is evolved with a single fitness function. We analyse the obtained behaviors and use the resulting insights to design an online regulatory mechanism. Our experiments show three main findings: 1) the sub-groups evolve distinct emergent behaviors; 2) the effectiveness of the whole swarm depends on the interaction between the two sub-groups, leading to more robust performance than either sub-group's behavior alone; 3) the online regulatory mechanism enhances overall performance and scalability.
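The idea of one genotype with two components, each decoded into its own controller, can be sketched as follows. This is a minimal illustration under stated assumptions, not the authors' implementation: the flat real-valued genotype, the one-hidden-layer tanh network, the layer sizes and the 50/50 split of the swarm into two sub-groups are all hypothetical choices. Because both controllers come from the same genotype, a single fitness value computed for the whole swarm would drive the evolution of both components.

```python
import math
import random

# Hypothetical network sizes: the abstract does not specify the controller topology.
N_IN, N_HID, N_OUT = 4, 6, 2
# Parameters of one fixed-topology network: input->hidden weights and biases,
# then hidden->output weights and biases.
PARAMS_PER_NET = N_IN * N_HID + N_HID + N_HID * N_OUT + N_OUT


def split_genotype(genotype):
    """Split one flat genotype into two components, one per sub-group controller."""
    assert len(genotype) == 2 * PARAMS_PER_NET
    return genotype[:PARAMS_PER_NET], genotype[PARAMS_PER_NET:]


def make_controller(params):
    """Decode a parameter vector into a one-hidden-layer tanh network (assumed topology)."""
    it = iter(params)
    w1 = [[next(it) for _ in range(N_IN)] for _ in range(N_HID)]
    b1 = [next(it) for _ in range(N_HID)]
    w2 = [[next(it) for _ in range(N_HID)] for _ in range(N_OUT)]
    b2 = [next(it) for _ in range(N_OUT)]

    def act(obs):
        hidden = [math.tanh(sum(w * x for w, x in zip(row, obs)) + b) for row, b in zip(w1, b1)]
        return [math.tanh(sum(w * h for w, h in zip(row, hidden)) + b) for row, b in zip(w2, b2)]

    return act


def assign_controllers(genotype, swarm_size, fraction_a=0.5):
    """Give the first sub-group controller A and the rest controller B (hypothetical split)."""
    params_a, params_b = split_genotype(genotype)
    ctrl_a, ctrl_b = make_controller(params_a), make_controller(params_b)
    n_a = round(swarm_size * fraction_a)
    return [ctrl_a] * n_a + [ctrl_b] * (swarm_size - n_a)


rng = random.Random(0)
genotype = [rng.gauss(0.0, 1.0) for _ in range(2 * PARAMS_PER_NET)]
swarm = assign_controllers(genotype, swarm_size=10)
print(len(swarm), swarm[0]([0.1, 0.2, 0.3, 0.4]))
```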

Heritability in Morphological Robot Evolution

Oct 21, 2021

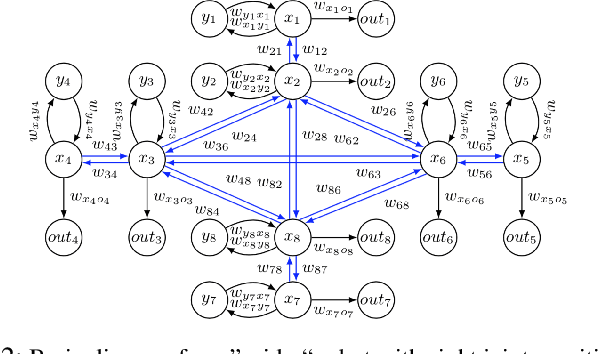

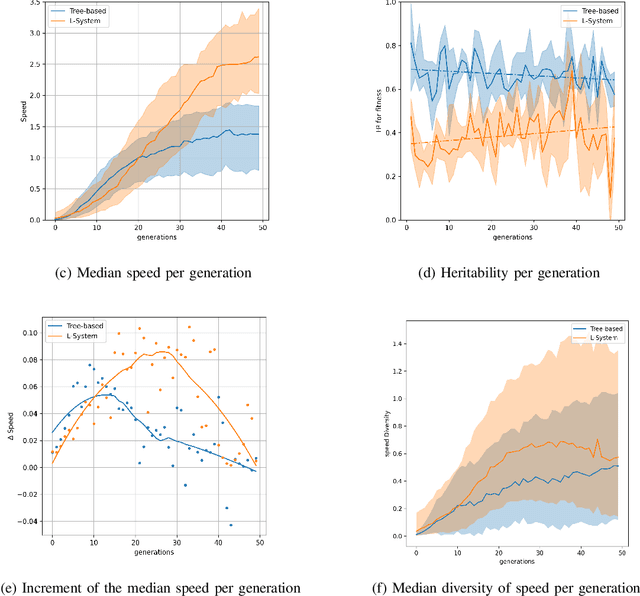

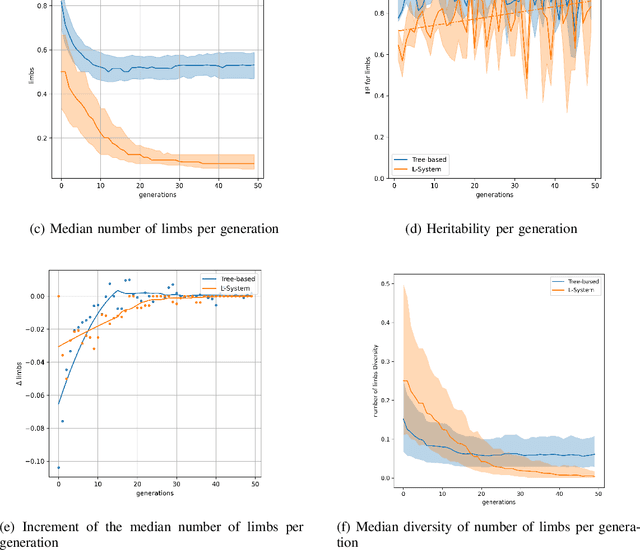

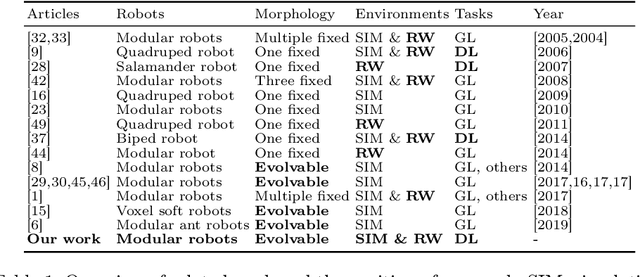

Abstract:In the field of evolutionary robotics, choosing an appropriate encoding is difficult, especially when robots evolve both behaviours and morphologies at the same time. With the objective of improving our understanding of the mapping from encodings to functional robots, we introduce the biological notion of heritability, which captures the amount of phenotypic variation caused by genotypic variation. In our analysis we measure heritability in the first generation of robots evolved from two different encodings, a direct encoding and an indirect encoding. In addition, we investigate the interplay between heritability and phenotypic diversity over the course of an entire evolutionary process. In particular, we investigate how direct and indirect genotypes can exhibit preferences for exploration or exploitation throughout evolution. We observe that the exploration-exploitation trade-off can be more easily understood by examining patterns in heritability and phenotypic diversity. In conclusion, we show how heritability can be a useful tool for better understanding the relationship between genotypes and phenotypes, which is especially helpful when designing more complicated systems where complex individuals and environments can adapt to and influence each other.
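In quantitative genetics, narrow-sense heritability is the fraction of phenotypic variance due to additive genetic variance, h^2 = V_A / V_P, and one standard way to estimate it is the slope of a least-squares regression of offspring trait values on mid-parent values. The sketch below shows that estimator; it is an assumption that this is how the measurement would be done here, and the trait values are hypothetical (for example, a normalised morphological descriptor of each robot).

```python
from statistics import mean


def midparent_offspring_heritability(parents_a, parents_b, offspring):
    """Estimate narrow-sense heritability h^2 as the least-squares slope of
    offspring trait values regressed on mid-parent trait values."""
    midparent = [(a + b) / 2 for a, b in zip(parents_a, parents_b)]
    mx, my = mean(midparent), mean(offspring)
    cov = sum((x - mx) * (y - my) for x, y in zip(midparent, offspring))
    var = sum((x - mx) ** 2 for x in midparent)
    return cov / var


# Hypothetical trait values for four parent pairs and their offspring.
parents_a = [0.0, 2.0, 2.0, 4.0]
parents_b = [2.0, 2.0, 4.0, 4.0]  # mid-parent values: 1, 2, 3, 4
offspring = [1.5, 2.0, 2.5, 3.0]
h2 = midparent_offspring_heritability(parents_a, parents_b, offspring)
print(h2)  # 0.5
```

A slope near 1 means offspring closely resemble their parents (favouring exploitation), while a slope near 0 means offspring traits are largely unrelated to their parents' (favouring exploration).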

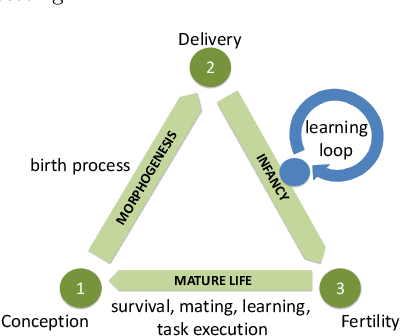

Morpho-evolution with learning using a controller archive as an inheritance mechanism

Apr 09, 2021

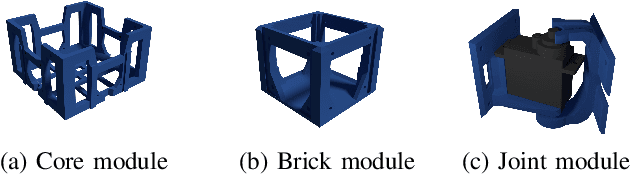

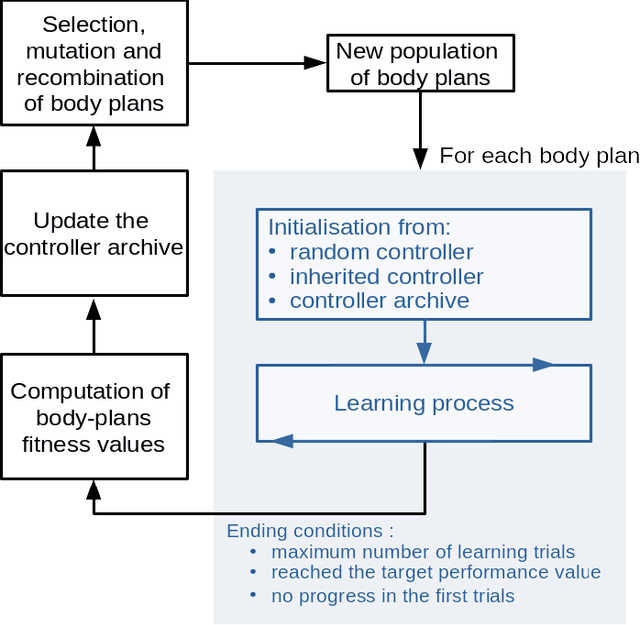

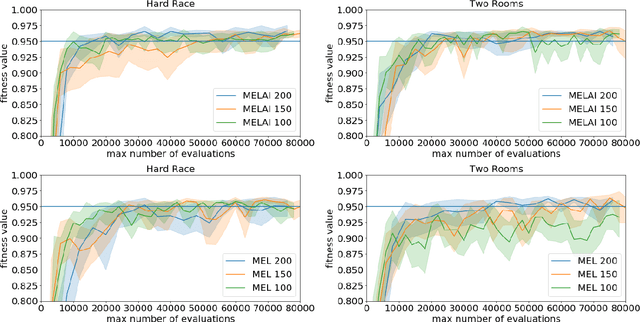

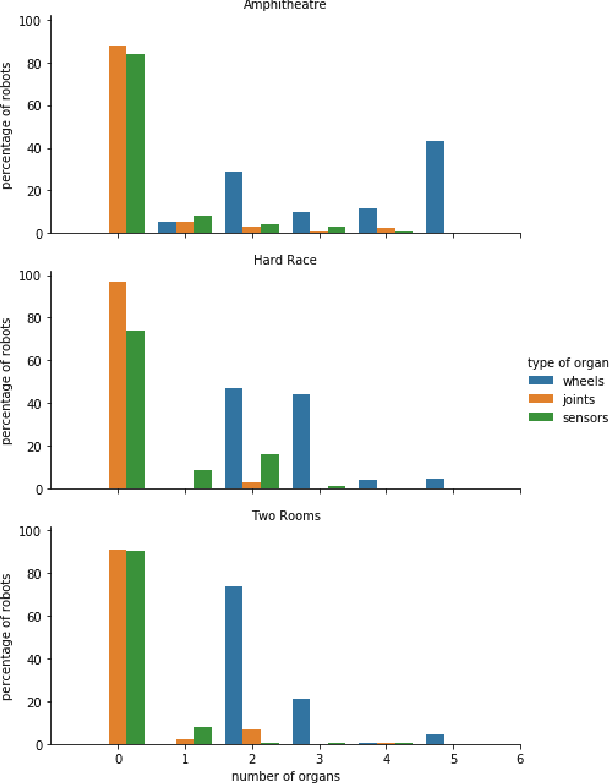

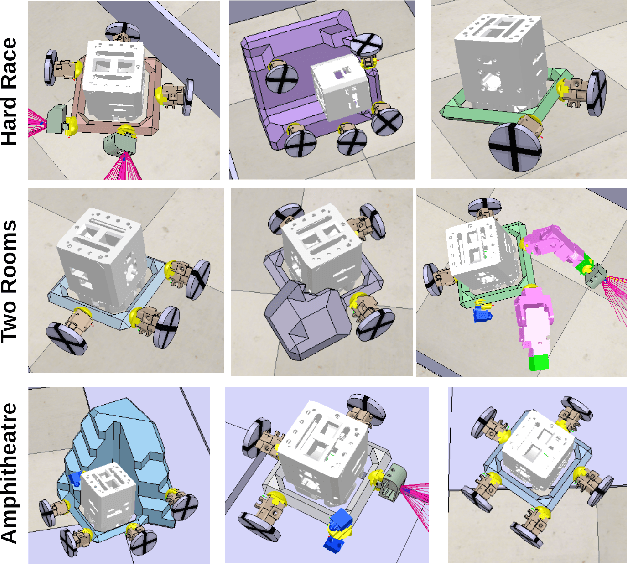

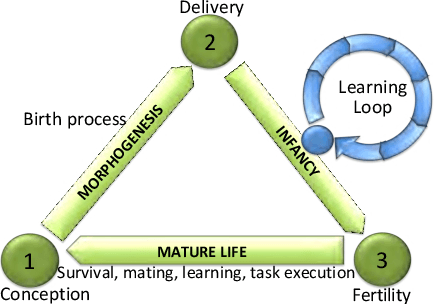

Abstract:In evolutionary robotics, several approaches have been shown to be capable of jointly optimising body-plans and controllers, either by using evolution alone or by combining evolution and learning. When working in rich morphological spaces, it is common for offspring to have body-plans that are very different from those of either parent, which makes it difficult to inherit a suitable controller. To address this, we propose a framework that combines an evolutionary algorithm to generate body-plans and a learning algorithm to optimise the parameters of a neural controller, where the topology of this controller is created once the body-plan of each offspring is generated. The key novelty of the approach is an external archive for storing learned controllers that map to explicit 'types' of robots (where a type is defined with respect to the features of the body-plan). Using three different test-beds, we show that by inheriting an appropriate controller from the archive rather than learning from a randomly initialised one, both the speed and magnitude of learning increase over time compared to an approach that starts from scratch. The framework also provides new insights into the complex interactions between evolution and learning, and the role of morphological intelligence in robot design.
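A controller archive keyed by robot type might be sketched as below. This is a minimal illustration, not the framework's actual definition: the class name, the assumption that body-plan features are normalised to [0, 1], the 'type' defined by binning each feature, and the fallback to random initialisation when the stored parameter count does not match the offspring's controller topology are all hypothetical.

```python
import random
from dataclasses import dataclass, field


@dataclass
class ControllerArchive:
    """Hypothetical archive mapping a discretised body-plan 'type' to the best
    learned controller parameters seen so far for that type."""
    bins_per_feature: int = 5
    entries: dict = field(default_factory=dict)  # type key -> (fitness, params)

    def robot_type(self, features):
        # Each feature (assumed normalised to [0, 1]) is mapped to a bin index.
        top = self.bins_per_feature - 1
        return tuple(min(int(f * self.bins_per_feature), top) for f in features)

    def initial_controller(self, features, n_params, rng):
        """Inherit the archived controller for this type, else start from random."""
        entry = self.entries.get(self.robot_type(features))
        if entry is not None and len(entry[1]) == n_params:
            return list(entry[1])
        return [rng.uniform(-1.0, 1.0) for _ in range(n_params)]

    def update(self, features, params, fitness):
        """Store a learned controller if it beats the current entry for its type."""
        key = self.robot_type(features)
        if key not in self.entries or fitness > self.entries[key][0]:
            self.entries[key] = (fitness, list(params))


rng = random.Random(1)
archive = ControllerArchive()
body = [0.42, 0.10, 0.77]  # hypothetical normalised body-plan features
start = archive.initial_controller(body, n_params=8, rng=rng)  # archive empty: random
archive.update(body, [0.5] * 8, fitness=1.2)                    # after learning
# A different offspring with similar features falls in the same type and inherits.
inherited = archive.initial_controller([0.45, 0.12, 0.71], n_params=8, rng=rng)
print(inherited)
```

Binning makes a 'type' a coarse equivalence class, so morphologically similar offspring share a starting controller; finer bins would give closer matches but fewer opportunities to inherit.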

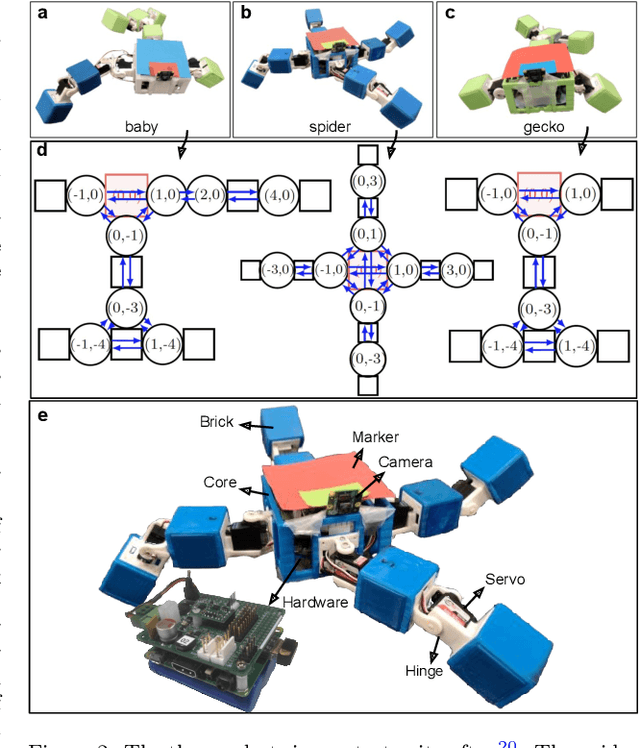

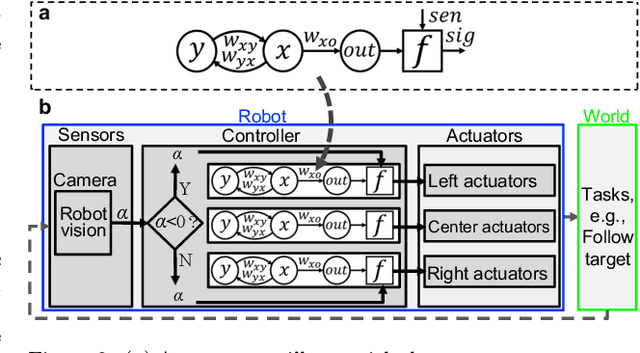

Learning Locomotion Skills in Evolvable Robots

Oct 19, 2020

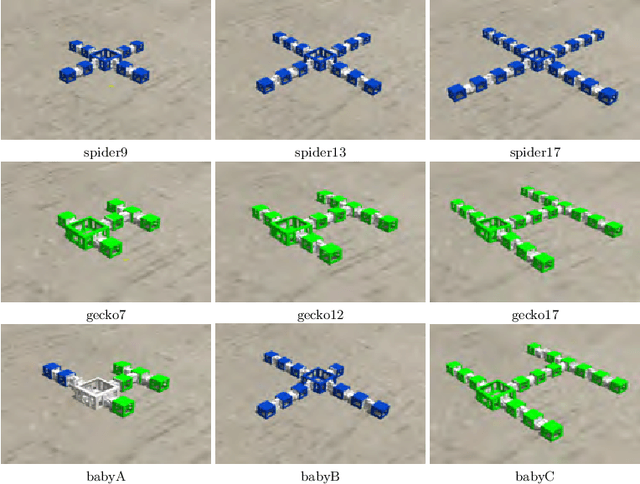

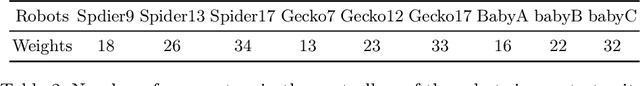

Abstract:The challenge of robotic reproduction (making new robots by recombining two existing ones) has recently been cracked, and physically evolving robot systems have come within reach. Here we address the next big hurdle: producing an adequate brain for a newborn robot. In particular, we address the task of targeted locomotion, which is arguably a fundamental skill in any practical implementation. We introduce a controller architecture and a generic learning method that allow a modular robot with an arbitrary shape to learn to walk towards a target and to follow this target if it moves. Our approach is validated on three robots, a spider, a gecko, and their offspring, in three real-world scenarios.

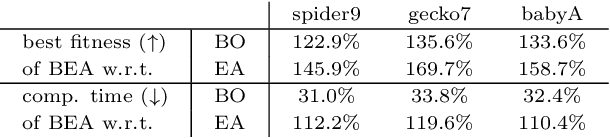

Learning Directed Locomotion in Modular Robots with Evolvable Morphologies

Jan 21, 2020

Abstract:We generalize the well-studied problem of gait learning in modular robots in two ways. Firstly, we address locomotion in a given target direction, which goes beyond learning a typical undirected gait. Secondly, rather than studying one fixed robot morphology, we consider a test suite of different modular robots. This study is motivated by our interest in evolutionary robot systems where both morphologies and controllers evolve. In such a system, newborn robots have to learn to control their own bodies, which are a random combination of the bodies of their parents. We apply and compare two learning algorithms, Bayesian optimization and HyperNEAT. The results of the experiments in simulation show that both methods successfully learn good controllers, but Bayesian optimization is more effective and efficient. We validate the best learned controllers by constructing three robots from the test suite in the real world and observing their fitness and actual trajectories. The results indicate a reality gap that depends on the controllers and the shape of the robots, but overall the trajectories are adequate and successfully follow the target directions.
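Directed locomotion needs a fitness that rewards movement in the target direction rather than distance travelled in any direction. The function below is a hypothetical illustration, not the fitness used in this work: it projects the robot's displacement onto a unit vector at the target angle and subtracts a penalty, scaled by an assumed `deviation_weight`, for the perpendicular distance from the target line. A learner such as Bayesian optimization would then maximise this value over controller parameters.

```python
import math


def directed_locomotion_fitness(start, end, target_angle, deviation_weight=1.0):
    """Hypothetical fitness: progress along the target direction minus a penalty
    on sideways deviation from the line through the start position."""
    dx, dy = end[0] - start[0], end[1] - start[1]
    ux, uy = math.cos(target_angle), math.sin(target_angle)  # unit target direction
    along = dx * ux + dy * uy          # signed progress along the target direction
    across = abs(-dx * uy + dy * ux)   # perpendicular distance from the target line
    return along - deviation_weight * across


# A robot asked to move along +x (angle 0) that ends up at (3, 1):
print(directed_locomotion_fitness((0.0, 0.0), (3.0, 1.0), target_angle=0.0))  # 2.0
```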