Fuda van Diggelen

Emergence of specialized Collective Behaviors in Evolving Heterogeneous Swarms

Feb 07, 2024

Abstract:Natural groups of animals, such as swarms of social insects, exhibit astonishing degrees of task specialization, useful to address complex tasks and to survive. This is supported by phenotypic plasticity: individuals sharing the same genotype that is expressed differently for different classes of individuals, each specializing in one task. In this work, we evolve a swarm of simulated robots with phenotypic plasticity to study the emergence of specialized collective behavior during an emergent perception task. Phenotypic plasticity is realized in the form of heterogeneity of behavior by dividing the genotype into two components, with one different neural network controller associated to each component. The whole genotype, expressing the behavior of the whole group through the two components, is subject to evolution with a single fitness function. We analyse the obtained behaviors and use the insights provided by these results to design an online regulatory mechanism. Our experiments show three main findings: 1) The sub-groups evolve distinct emergent behaviors. 2) The effectiveness of the whole swarm depends on the interaction between the two sub-groups, leading to a more robust performance than with singular sub-group behavior. 3) The online regulatory mechanism enhances overall performance and scalability.
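The genotype split described in this abstract can be illustrated with a minimal sketch. Everything concrete here is an assumption made for illustration and is not taken from the paper: the layer sizes, the bias-free `tanh` network, the helper names (`decode`, `split_genotype`, `assign_controllers`), and the fixed `ratio` that decides how many robots receive each controller.

```python
import numpy as np

# Hypothetical layer sizes; the paper's actual network layout is not given here.
N_IN, N_HID, N_OUT = 4, 3, 2
N_WEIGHTS = N_IN * N_HID + N_HID * N_OUT  # weights per controller (no biases)


def decode(weights):
    """Turn a flat weight vector into a small feed-forward controller."""
    w1 = weights[:N_IN * N_HID].reshape(N_IN, N_HID)
    w2 = weights[N_IN * N_HID:].reshape(N_HID, N_OUT)
    return lambda obs: np.tanh(np.tanh(obs @ w1) @ w2)


def split_genotype(genotype):
    """Split one genotype into two components, one controller per sub-group."""
    assert genotype.shape == (2 * N_WEIGHTS,)
    return decode(genotype[:N_WEIGHTS]), decode(genotype[N_WEIGHTS:])


def assign_controllers(genotype, n_robots, ratio=0.5):
    """Give the first sub-group controller A and the remaining robots controller B."""
    ctrl_a, ctrl_b = split_genotype(genotype)
    n_a = int(round(ratio * n_robots))
    return [ctrl_a] * n_a + [ctrl_b] * (n_robots - n_a)


rng = np.random.default_rng(0)
genotype = rng.normal(size=2 * N_WEIGHTS)  # one genotype for the whole swarm
swarm = assign_controllers(genotype, n_robots=10)
actions = [ctrl(np.ones(N_IN)) for ctrl in swarm]  # one action vector per robot
```

Because both controllers are decoded from the same vector, a single fitness value computed for the whole swarm can drive evolution of both sub-group behaviors at once.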

Environment induced emergence of collective behaviour in evolving swarms with limited sensing

Apr 11, 2022

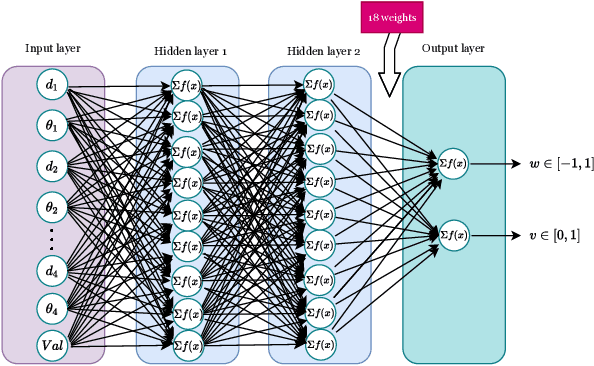

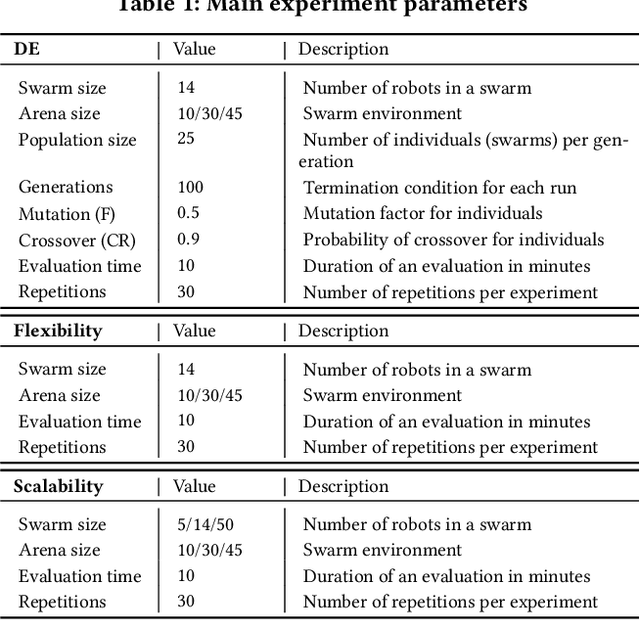

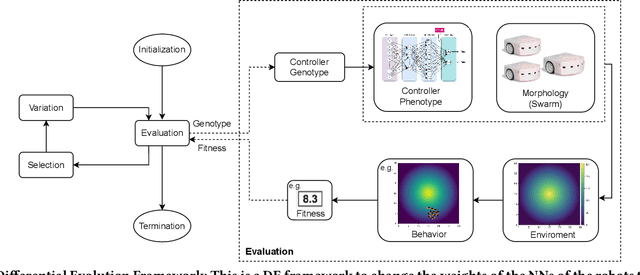

Abstract:Designing controllers for robot swarms is challenging, because human developers typically have no good understanding of the link between the details of a controller that governs individual robots and the swarm behavior that is an indirect result of the interactions between swarm members and the environment. In this paper we investigate whether an evolutionary approach can mitigate this problem. We consider a very challenging task where robots with limited sensing and communication abilities must follow the gradient of an environmental feature, and we use Differential Evolution to evolve a neural network controller for simulated robots. We conduct a systematic study to measure the flexibility and scalability of the method by varying the size of the arena and the number of robots in the swarm. The experiments confirm the feasibility of our approach: the evolved robot controllers induced swarm behavior that solved the task. We found that solutions evolved under the harshest conditions (where the environmental clues were the weakest) were the most flexible and that there is a sweet spot regarding the swarm size. Furthermore, we observed collective motion of the swarm, showcasing truly emergent behavior that was neither represented in nor selected for during evolution.
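For readers unfamiliar with Differential Evolution, the following is a minimal sketch of the common DE/rand/1/bin variant applied to a flat weight vector. It is not the authors' implementation: the hyperparameters (`pop_size`, `f`, `cr`, `generations`) are assumed values, and `toy_fitness` is a hypothetical stand-in for the swarm simulation that would score a controller in the paper's setting.

```python
import numpy as np


def differential_evolution(fitness, dim, pop_size=20, f=0.5, cr=0.9,
                           generations=100, seed=0):
    """Maximize `fitness` over real vectors of length `dim` (DE/rand/1/bin)."""
    rng = np.random.default_rng(seed)
    pop = rng.uniform(-1.0, 1.0, size=(pop_size, dim))
    scores = np.array([fitness(x) for x in pop])
    for _ in range(generations):
        for i in range(pop_size):
            # Pick three distinct individuals, all different from the target i.
            candidates = [j for j in range(pop_size) if j != i]
            a, b, c = pop[rng.choice(candidates, size=3, replace=False)]
            mutant = a + f * (b - c)
            # Binomial crossover; force at least one gene from the mutant.
            cross = rng.random(dim) < cr
            cross[rng.integers(dim)] = True
            trial = np.where(cross, mutant, pop[i])
            trial_score = fitness(trial)
            # Greedy selection: the trial replaces the target if it is no worse.
            if trial_score >= scores[i]:
                pop[i], scores[i] = trial, trial_score
    best = int(np.argmax(scores))
    return pop[best], scores[best]


def toy_fitness(weights):
    """Hypothetical stand-in for a simulation-based swarm fitness."""
    return -float(np.sum((weights - 0.3) ** 2))


best_weights, best_score = differential_evolution(toy_fitness, dim=5)
```

In an evolutionary robotics setting, `fitness` would decode the vector into controller weights, run the simulated swarm, and return the task score.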

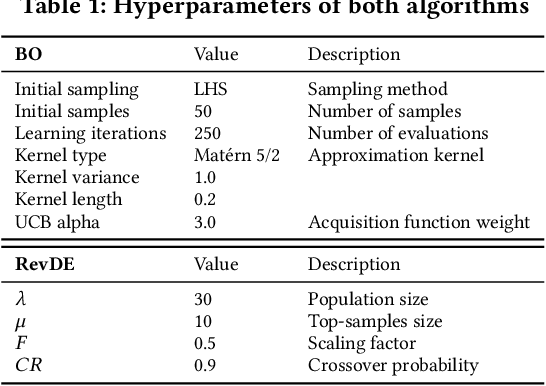

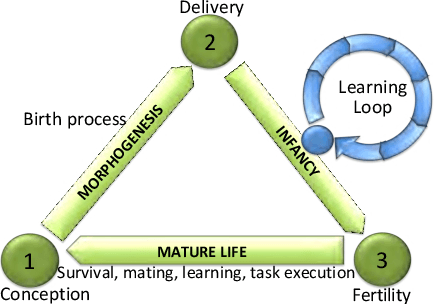

Comparing lifetime learning methods for morphologically evolving robots

Mar 08, 2022

Abstract:Evolving morphologies and controllers of robots simultaneously leads to a problem: Even if the parents have well-matching bodies and brains, the stochastic recombination can break this match and cause a body-brain mismatch in their offspring. We argue that this can be mitigated by having newborn robots perform a learning process that optimizes their inherited brain quickly after birth. We compare three different algorithms for doing this. To this end, we consider three algorithmic properties: efficiency, efficacy, and sensitivity to differences in the morphologies of the robots that run the learning process.

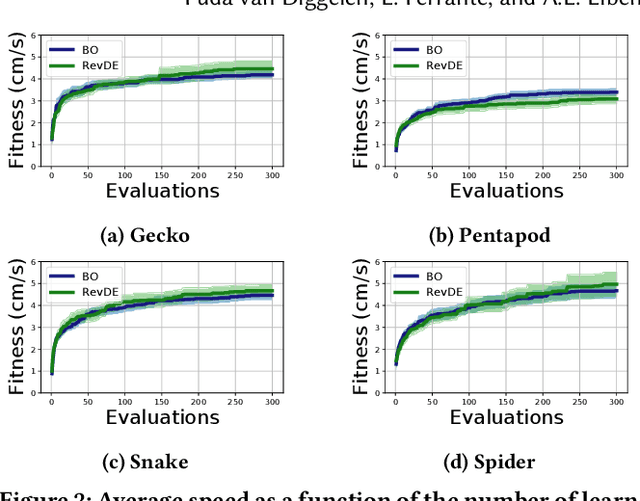

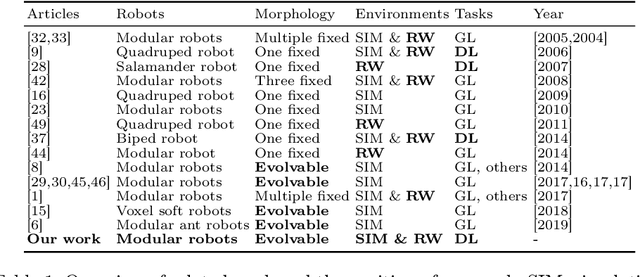

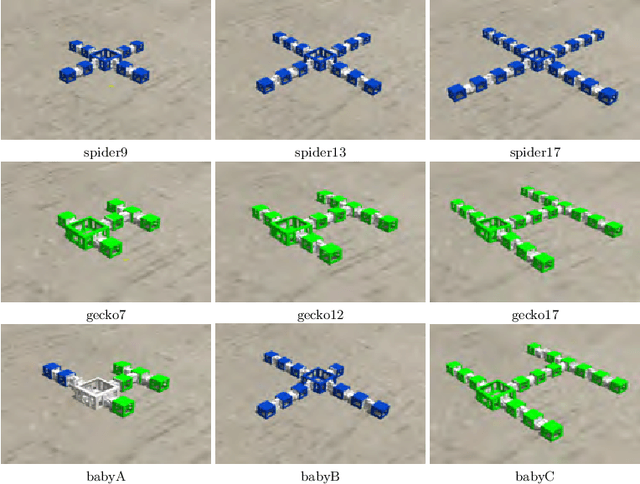

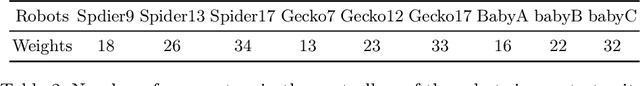

Learning Directed Locomotion in Modular Robots with Evolvable Morphologies

Jan 21, 2020

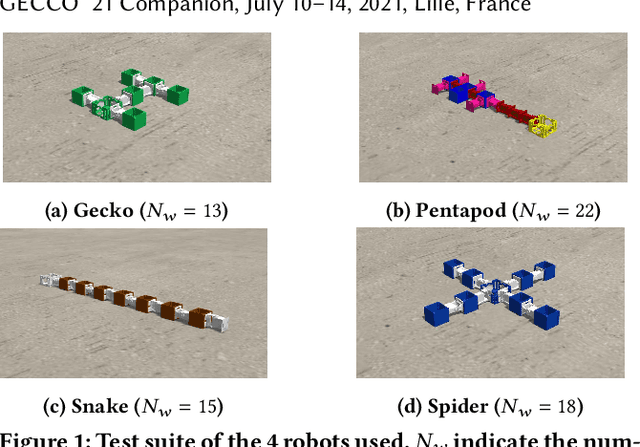

Abstract:We generalize the well-studied problem of gait learning in modular robots in two dimensions. Firstly, we address locomotion in a given target direction that goes beyond learning a typical undirected gait. Secondly, rather than studying one fixed robot morphology we consider a test suite of different modular robots. This study is based on our interest in evolutionary robot systems where both morphologies and controllers evolve. In such a system, newborn robots have to learn to control their own body that is a random combination of the bodies of the parents. We apply and compare two learning algorithms, Bayesian optimization and HyperNEAT. The results of the experiments in simulation show that both methods successfully learn good controllers, but Bayesian optimization is more effective and efficient. We validate the best learned controllers by constructing three robots from the test suite in the real world and observe their fitness and actual trajectories. The obtained results indicate a reality gap that depends on the controllers and the shape of the robots, but overall the trajectories are adequate and follow the target directions successfully.
