Learning Directed Locomotion in Modular Robots with Evolvable Morphologies


Jan 21, 2020

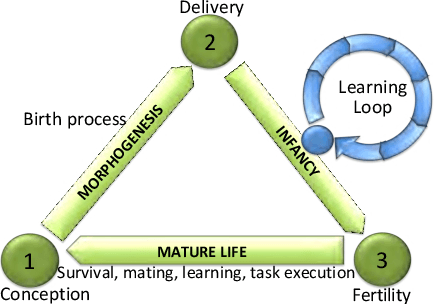

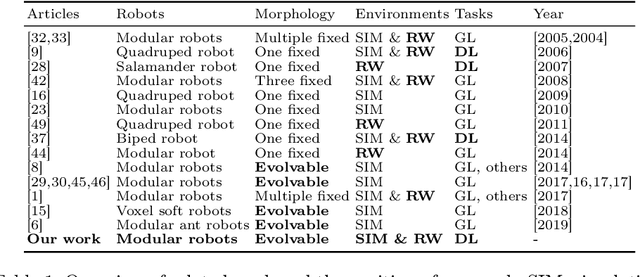

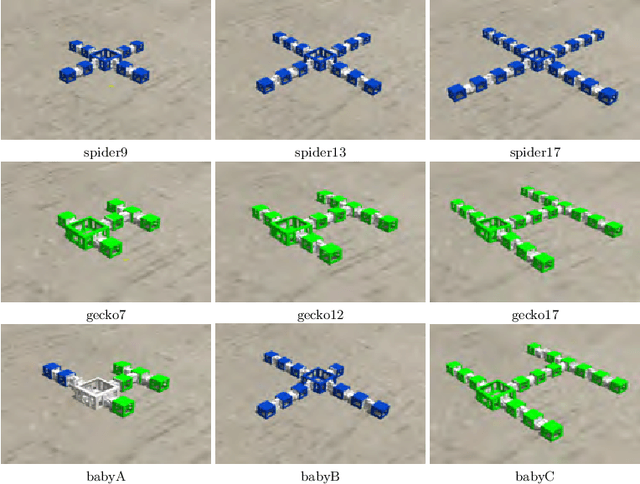

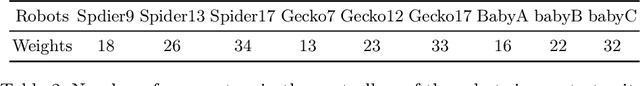

We generalize the well-studied problem of gait learning in modular robots in two respects. First, we address locomotion in a given target direction, which goes beyond learning a typical undirected gait. Second, rather than studying one fixed robot morphology, we consider a test suite of different modular robots. This study is motivated by our interest in evolutionary robot systems where both morphologies and controllers evolve. In such a system, newborn robots have to learn to control their own body, which is a random combination of the bodies of the parents. We apply and compare two learning algorithms, Bayesian optimization and HyperNEAT. The experiments in simulation show that both methods successfully learn good controllers, but Bayesian optimization is more effective and efficient. We validate the best learned controllers by constructing three robots from the test suite in the real world and observing their fitness and actual trajectories. The results indicate a reality gap that depends on the controllers and the shape of the robots, but overall the trajectories are adequate and follow the target directions successfully.
