Léni K. Le Goff

An Investigation of the Factors Influencing Evolutionary Dynamics in the Joint Evolution of Robot Body and Control

Mar 15, 2024

Abstract: In evolutionary robotics, jointly optimising the design and the controller of robots is a challenging task due to the huge complexity of the solution space formed by the possible combinations of body and controller. We focus on the evolution of robots that can be physically created rather than just simulated, in a rich morphological space that includes a voxel-based chassis, wheels, legs and sensors. On the one hand, this space offers a high degree of freedom in the range of robots that can be produced; on the other hand, it introduces a complexity rarely dealt with in previous work, both in matching controllers to designs and in evolving closed-loop control. This is usually addressed by augmenting evolution with a learning algorithm to refine controllers. Although several frameworks exist, few have studied the role of the *evolutionary dynamics* of the intertwined 'evolution+learning' processes in realising high-performing robots. We conduct an in-depth study of the factors that influence these dynamics, specifically: synchronous vs asynchronous evolution; the mechanism for replacing parents with offspring; and rewarding goal-based fitness vs novelty via selection. Results show that asynchronicity combined with goal-based selection and a 'replace worst' strategy results in the highest performance.
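To make the best-performing combination concrete, the following is a minimal sketch of an asynchronous (steady-state) loop with goal-based tournament selection and a 'replace worst' rule. It is an illustration under stated assumptions, not the paper's implementation: the function name, the binary tournament, and the choice to replace the worst member only when the offspring is strictly better are all hypothetical, and the genome is left abstract.

```python
import random


def evolve_replace_worst(fitness, init_population, mutate, evaluations, rng):
    """Steady-state loop: one offspring per step, no generational barrier.

    Assumptions (not from the paper): binary tournament selection on
    fitness, and the worst member is replaced only by a better offspring.
    """
    population = [(fitness(g), g) for g in init_population]
    for _ in range(evaluations):
        # Goal-based selection: the fitter of two random members is the parent.
        a, b = rng.sample(population, 2)
        parent = a if a[0] >= b[0] else b
        child = mutate(parent[1], rng)
        child_fit = fitness(child)
        # 'Replace worst': the offspring competes with the weakest member.
        worst = min(range(len(population)), key=lambda i: population[i][0])
        if child_fit > population[worst][0]:
            population[worst] = (child_fit, child)
    return population
```

In a synchronous variant, a whole batch of offspring would be evaluated before any replacement happens; here each offspring can enter the population as soon as its evaluation finishes.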

Understanding fitness landscapes in morpho-evolution via local optima networks

Feb 12, 2024

Abstract: Morpho-evolution (ME) refers to the simultaneous optimisation of a robot's design and controller to maximise performance given a task and environment. Many genetic encodings have been proposed which are capable of representing design and control. Previous research has provided empirical comparisons between encodings in terms of their performance with respect to an objective function and the diversity of designs that are evaluated; however, there has been no attempt to explain the observed findings. We address this by applying Local Optima Network (LON) analysis to investigate the structure of the fitness landscapes induced by three different encodings when evolving a robot for a locomotion task, shedding new light on the ease with which different fitness landscapes can be traversed by a search process. This is the first time LON analysis has been applied in the field of ME despite its popularity in combinatorial optimisation domains; the findings will facilitate the design of new algorithms or operators that are customised to ME landscapes in the future.
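As a rough illustration of what a LON contains, the sketch below samples one on a toy bit-string landscape: nodes are local optima found by hill climbing, and a weighted edge records that perturbing one optimum and climbing again led to another. The bit-string genome, the single-bit-flip neighbourhood, the two-bit perturbation and all names are assumptions made for the example; the abstract does not specify the encodings or sampling procedure used in the paper.

```python
import random
from collections import Counter


def hill_climb(x, fitness):
    """Best-improvement hill climbing over single bit flips."""
    x = tuple(x)
    while True:
        neighbours = [x[:i] + (1 - x[i],) + x[i + 1:] for i in range(len(x))]
        best = max(neighbours, key=fitness)
        if fitness(best) <= fitness(x):
            return x  # no strictly better neighbour: x is a local optimum
        x = best


def sample_lon(fitness, n_bits, n_starts, n_kicks, rng):
    """Return (nodes, edges): local optima and counted transitions."""
    nodes, edges = set(), Counter()
    for _ in range(n_starts):
        start = tuple(rng.randint(0, 1) for _ in range(n_bits))
        current = hill_climb(start, fitness)
        nodes.add(current)
        for _ in range(n_kicks):
            kicked = list(current)
            for i in rng.sample(range(n_bits), 2):  # perturbation: flip 2 bits
                kicked[i] = 1 - kicked[i]
            target = hill_climb(kicked, fitness)
            nodes.add(target)
            edges[(current, target)] += 1
            if fitness(target) >= fitness(current):
                current = target
    return nodes, edges
```

A landscape with few nodes and edges that mostly lead towards fitter optima is easy to traverse; many optima with edges that loop back on themselves suggest a search process is likely to get trapped.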

Morpho-evolution with learning using a controller archive as an inheritance mechanism

Apr 09, 2021

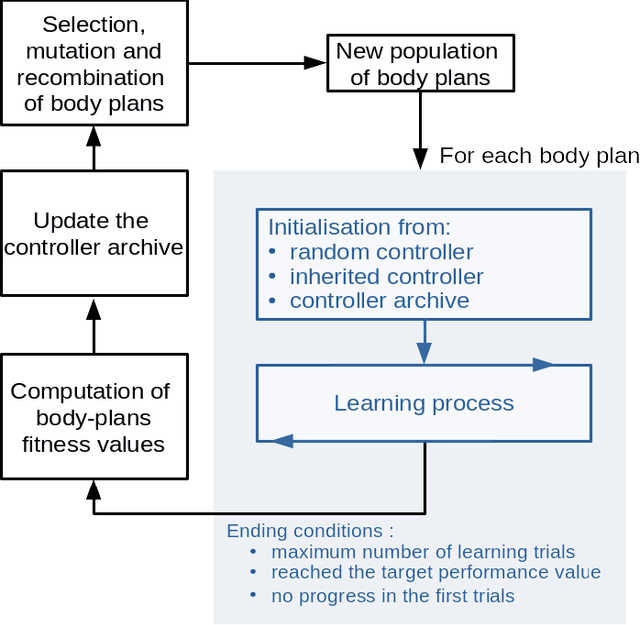

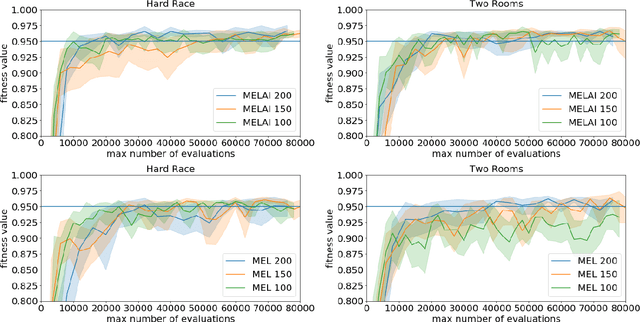

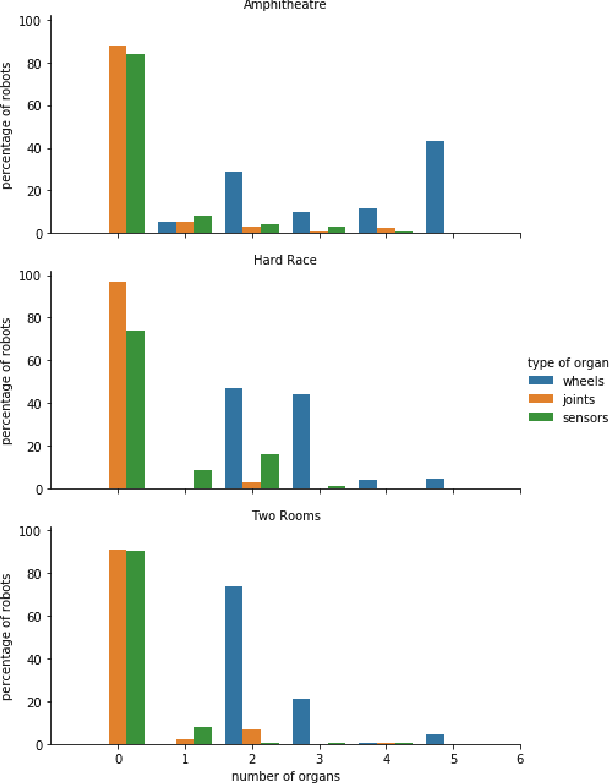

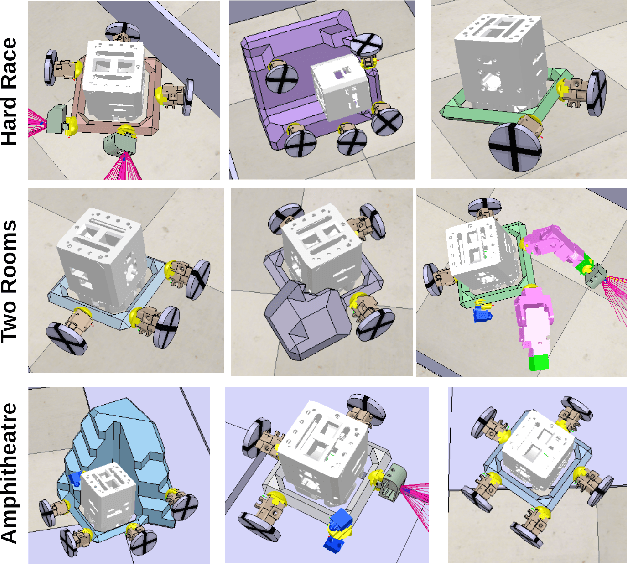

Abstract: In evolutionary robotics, several approaches have been shown to be capable of the joint optimisation of body-plans and controllers by either using only evolution or combining evolution and learning. When working in rich morphological spaces, it is common for offspring to have body-plans that are very different from either of their parents, which can cause difficulties with respect to inheriting a suitable controller. To address this, we propose a framework that combines an evolutionary algorithm to generate body-plans and a learning algorithm to optimise the parameters of a neural controller, where the topology of this controller is created once the body-plan of each offspring is generated. The key novelty of the approach is to add an external archive for storing learned controllers that map to explicit 'types' of robots (where a type is defined with respect to the features of the body-plan). By inheriting an appropriate controller from the archive rather than learning from a randomly initialised one, we show that both the speed and magnitude of learning increase over time when compared to an approach that starts from scratch, using three different test-beds. The framework also provides new insights into the complex interactions between evolution and learning, and the role of morphological intelligence in robot design.
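The archive idea can be sketched as a lookup table keyed by robot 'type'. The version below is only an illustration: the type descriptor (counts of wheels, legs and sensors), the flat weight list, and the rule of keeping the best controller seen per type are assumptions, since the abstract does not give the exact type definition or update rule.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Optional, Tuple

# Hypothetical type descriptor: (number of wheels, legs, sensors).
BodyType = Tuple[int, int, int]


@dataclass
class ControllerArchive:
    """Maps each robot type to the best learned controller seen so far."""

    entries: Dict[BodyType, Tuple[float, List[float]]] = field(default_factory=dict)

    def inherit(self, body_type: BodyType) -> Optional[List[float]]:
        """Return a copy of the stored weights, or None if the type is new."""
        entry = self.entries.get(body_type)
        return list(entry[1]) if entry is not None else None

    def update(self, body_type: BodyType, fitness: float, weights: List[float]) -> bool:
        """Store the learned weights if they beat the current entry."""
        current = self.entries.get(body_type)
        if current is None or fitness > current[0]:
            self.entries[body_type] = (fitness, list(weights))
            return True
        return False
```

A new offspring would call `inherit` with its own type to seed the learner, fall back to random initialisation when the result is `None`, and call `update` once learning finishes.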

Bootstrapping Robotic Ecological Perception from a Limited Set of Hypotheses Through Interactive Perception

Jan 30, 2019

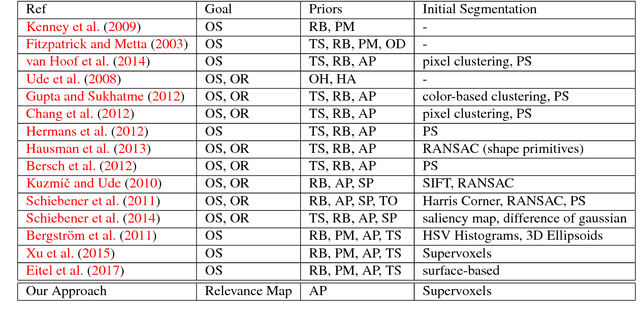

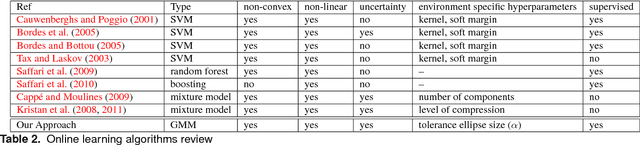

Abstract: To solve its task, a robot needs to have the ability to interpret its perceptions. In vision, this interpretation is particularly difficult and relies on the understanding of the structure of the scene, at least to the extent of its task and sensorimotor abilities. A robot with the ability to build and adapt this interpretation process according to its own tasks and capabilities would push back the limits of what robots can achieve in an uncontrolled environment. A solution is to provide the robot with processes to build such representations that are not specific to an environment or a situation. Much existing work focuses on object segmentation, recognition and manipulation. Defining an object solely on the basis of its visual appearance is challenging given the wide range of possible objects and environments. Therefore, current approaches make simplifying assumptions about the structure of a scene. Such assumptions restrict the adaptivity of the object extraction process to the environments in which the assumptions hold. To limit such assumptions, we introduce an exploration method aimed at identifying moveable elements in a scene without considering the concept of object. By using the interactive perception framework, we aim at bootstrapping the acquisition process of a representation of the environment with a minimum of context-specific assumptions. The robotic system builds a perceptual map, called a relevance map, which indicates the moveable parts of the current scene. A classifier is trained online to predict the category of each region (moveable or non-moveable). It is also used to select a region with which to interact, with the goal of minimizing the uncertainty of the classification. A specific classifier is introduced to fit these needs: the collaborative mixture models classifier. The method is tested on a set of scenarios of increasing complexity, using both simulations and a PR2 robot.
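The region-selection step can be illustrated with a simple uncertainty criterion. The sketch below picks the region whose predicted probability of being moveable has the highest binary entropy; this criterion, the function names and the dictionary of per-region probabilities are assumptions for illustration, and the collaborative mixture models classifier itself is not reproduced here.

```python
import math


def binary_entropy(p):
    """Entropy in bits of a two-class prediction with P(moveable) = p."""
    if p <= 0.0 or p >= 1.0:
        return 0.0
    return -(p * math.log2(p) + (1 - p) * math.log2(1 - p))


def select_region(moveable_probs):
    """Return the id of the region with the most uncertain prediction.

    moveable_probs maps a (hypothetical) region id to the classifier's
    current estimate of P(moveable) for that region.
    """
    return max(moveable_probs, key=lambda r: binary_entropy(moveable_probs[r]))
```

Interacting with the selected region yields a new labelled sample (it moved or it did not), which is then used to update the classifier online before the next selection.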
